Deployment, Stats, & License

PyThresh is a comprehensive and scalable Python toolkit for thresholding outlier detection likelihood scores in univariate/multivariate data. It has been written to work in tandem with PyOD and has similar syntax and data structures. However, it is not limited to this single library. PyThresh is meant to threshold likelihood scores generated by an outlier detector. It thresholds these likelihood scores and replaces the need to set a contamination level or have the user guess the amount of outliers that may exist in the dataset beforehand. These non-parametric methods were written to reduce the user's input/guess work and rather rely on statistics instead to threshold outlier likelihood scores. For thresholding to be applied correctly, the outlier detection likelihood scores must follow this rule: the higher the score, the higher the probability that it is an outlier in the dataset. All threshold functions return a binary array where inliers and outliers are represented by a 0 and 1 respectively.

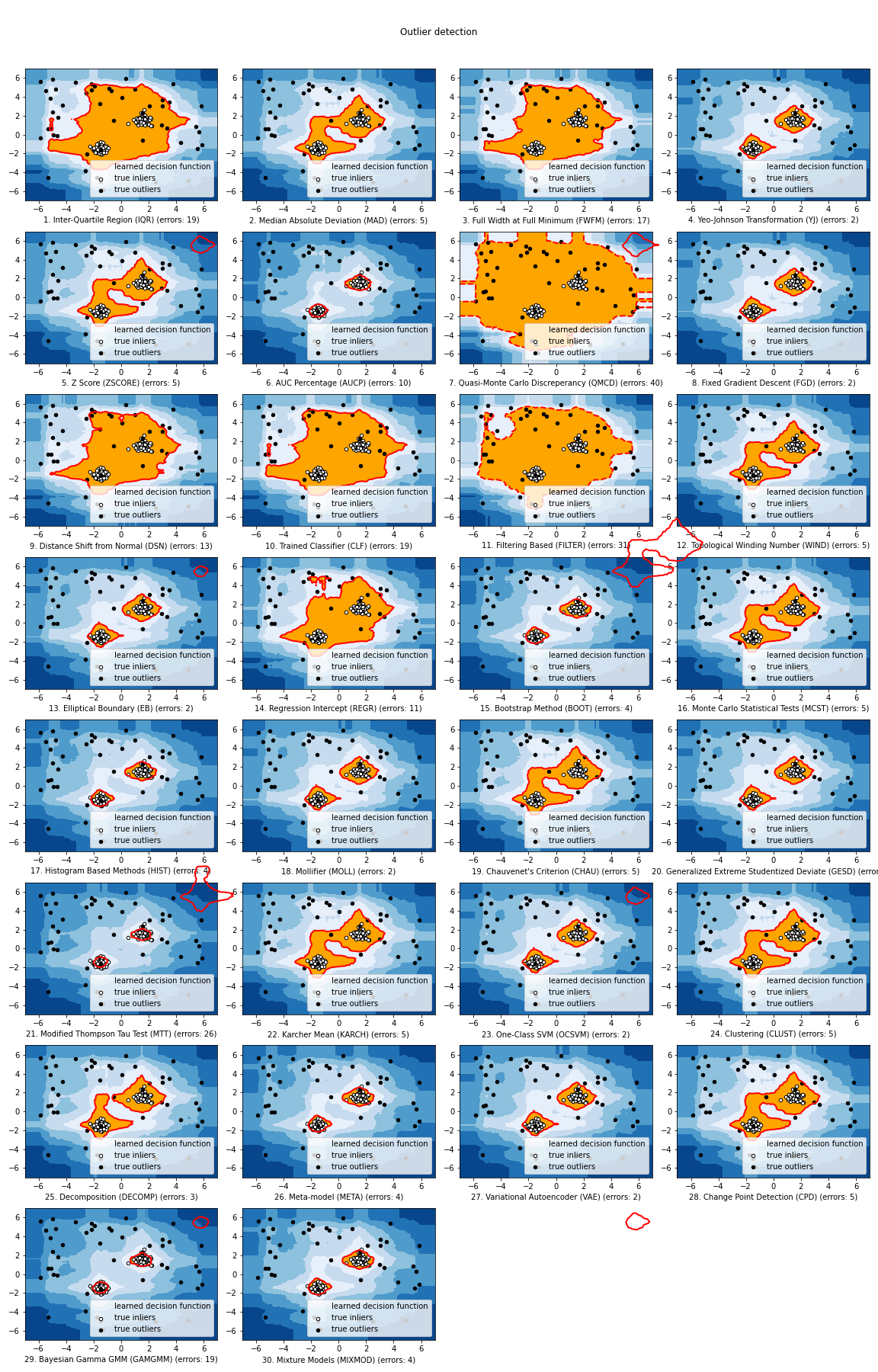

PyThresh includes more than 30 thresholding algorithms. These algorithms range from using simple statistical analysis like the Z-score to more complex mathematical methods that involve graph theory and topology.

Visit PyThresh Docs for full documentation or see below for a quickstart installation and usage example.

To cite this work you can visit PyThresh Citation

Outlier Detection Thresholding with 7 Lines of Code:

# train the KNN detector

from pyod.models.knn import KNN

from pythresh.thresholds.filter import FILTER

clf = KNN()

clf.fit(X_train)

# get outlier scores

decision_scores = clf.decision_scores_ # raw outlier scores on the train data

# get outlier labels

thres = FILTER()

labels = thres.eval(decision_scores)or using multiple outlier detection score sets

# train multiple detectors

from pyod.models.knn import KNN

from pyod.models.pca import PCA

from pyod.models.iforest import IForest

from pythresh.thresholds.filter import FILTER

clfs = [KNN(), IForest(), PCA()]

# get outlier scores for each detector

scores = [clf.fit(X_train).decision_scores_ for clf in clfs]

scores = np.vstack(scores).T

# get outlier labels

thres = FILTER()

labels = thres.eval(scores)It is recommended to use pip or conda for installation:

pip install pythresh # normal install

pip install --upgrade pythresh # or update if neededconda install -c conda-forge pythreshAlternatively, you can get the version with the latest updates by cloning the repo and run setup.py file:

git clone https://github.com/KulikDM/pythresh.git

cd pythresh

pip install .Or with pip:

pip install https://github.com/KulikDM/pythresh/archive/main.zipRequired Dependencies:

- matplotlib

- numpy>=1.13

- pyod

- scipy>=1.3.1

- scikit_learn>=0.20.0

Optional Dependencies:

- pyclustering (used in the CLUST thresholder)

- ruptures (used in the CPD thresholder)

- scikit-lego (used in the META thresholder)

- joblib>=0.14.1 (used in the META thresholder and RANK)

- pandas (used in the META thresholder)

- torch (used in the VAE thresholder)

- tqdm (used in the VAE thresholder)

- xgboost>=2.0.0 (used in the RANK)

- eval(score): evaluate a single outlier or multiple outlier detection likelihood score sets.

Key Attributes of threshold:

- thresh_: Return the threshold value that separates inliers from outliers. Outliers are considered all values above this threshold value. Note the threshold value has been derived from likelihood scores normalized between 0 and 1.

- confidence_interval_: Return the lower and upper confidence interval of the contamination level. Only applies to the COMB thresholder

- dscores_: 1D array of the TruncatedSVD decomposed decision scores if multiple outlier detector score sets are passed

- mixture_: fitted mixture model class of the selected model used for thresholding. Only applies to MIXMOD. Attributes include: components, weights, params. Functions include: fit, loglikelihood, pdf, and posterior.

Towards Data Science: Thresholding Outlier Detection Scores with PyThresh

Towards Data Science: When Outliers are Significant: Weighted Linear Regression

ArXiv: Estimating the Contamination Factor's Distribution in Unsupervised Anomaly Detection.

The comparison among implemented models and general implementation is made available below

Additional benchmarking has been

done on all the thresholders and it was found that the MIXMOD

thresholder performed best while the CLF thresholder provided the

smallest uncertainty about its mean and is the most robust (best least

accurate prediction). However, for interpretability and general

performance the MIXMOD, FILTER, and META thresholders are good

fits.

Further utilities are available for assisting in the selection of the most optimal outlier detection and thresholding methods ranking as well as determining the confidence with regards to the selected thresholding method thresholding confidence

For Jupyter Notebooks, please navigate to notebooks.

A quick look at all the thresholders performance can be found at "/notebooks/Compare All Models.ipynb"

Anyone is welcome to contribute to PyThresh:

- Please share your ideas and ask questions by opening an issue.

- To contribute, first check the Issue list for the "help wanted" tag and comment on the one that you are interested in. The issue will then be assigned to you.

- If the bug, feature, or documentation change is novel (not in the Issue list), you can either log a new issue or create a pull request for the new changes.

- To start, fork the main branch and add your improvement/modification/fix.

- To make sure the code has the same style and standard, please refer to qmcd.py for example.

- Create a pull request to the main branch and follow the pull request template PR template

- Please make sure that all code changes are accompanied with proper new/updated test functions. Automatic tests will be triggered. Before the pull request can be merged, make sure that all the tests pass.

Please Note not all references' exact methods have been employed in PyThresh. Rather, the references serve to demonstrate the validity of the threshold types available in PyThresh.

| [1] | A Robust AUC Maximization Framework With Simultaneous Outlier Detection and Feature Selection for Positive-Unlabeled Classification |

| [2] | An evaluation of bootstrap methods for outlier detection in least squares regression |

| [3] | Chauvenet's Test in the Classical Theory of Errors |

| [4] | Linear Models for Outlier Detection |

| [5] | Cluster Analysis for Outlier Detection |

| [6] | Changepoint Detection in the Presence of Outliers |

| [7] | Influence functions and outlier detection under the common principal components model: A robust approach |

| [8] | Fast and Exact Outlier Detection in Metric Spaces: A Proximity Graph-based Approach |

| [9] | Elliptical Insights: Understanding Statistical Methods through Elliptical Geometry |

| [10] | Iterative gradient descent for outlier detection |

| [11] | Filtering Approaches for Dealing with Noise in Anomaly Detection |

| [12] | Sparse Auto-Regressive: Robust Estimation of AR Parameters |

| [13] | Estimating the Contamination Factor's Distribution in Unsupervised Anomaly Detection |

| [14] | An adjusted Grubbs' and generalized extreme studentized deviation |

| [15] | Effective Histogram Thresholding Techniques for Natural Images Using Segmentation |

| [16] | A new non-parametric detector of univariate outliers for distributions with unbounded support |

| [17] | Riemannian center of mass and mollifier smoothing |

| [18] | Periodicity Detection of Outlier Sequences Using Constraint Based Pattern Tree with MAD |

| [19] | Testing normality in the presence of outliers |

| [20] | Automating Outlier Detection via Meta-Learning |

| [21] | Application of Mixture Models to Threshold Anomaly Scores |

| [22] | Riemannian center of mass and mollifier smoothing |

| [23] | Using the mollifier method to characterize datasets and models: The case of the Universal Soil Loss Equation |

| [24] | Towards a More Reliable Interpretation of Machine Learning Outputs for Safety-Critical Systems using Feature Importance Fusion |

| [25] | Rule extraction in unsupervised anomaly detection for model explainability: Application to OneClass SVM |

| [26] | Deterministic and quasi-random sampling of optimized Gaussian mixture distributions for vibronic Monte Carlo |

| [27] | Linear Models for Outlier Detection |

| [28] | Likelihood Regret: An Out-of-Distribution Detection Score For Variational Auto-encoder |

| [29] | Robust Inside-Outside Segmentation Using Generalized Winding Numbers |

| [30] | Transforming variables to central normality |

| [31] | Multiple outlier detection tests for parametric models |

%20%3D%20%27text%27%5D%5Blast()%5D&logo=data%3Aimage%2Fsvg%2Bxml%3Bbase64%2CPHN2ZyBzdHlsZT0iZW5hYmxlLWJhY2tncm91bmQ6bmV3IDAgMCAyNCAyNDsiIHZlcnNpb249IjEuMSIgdmlld0JveD0iMCAwIDI0IDI0IiB4bWw6c3BhY2U9InByZXNlcnZlIiB4bWxucz0iaHR0cDovL3d3dy53My5vcmcvMjAwMC9zdmciIHhtbG5zOnhsaW5rPSJodHRwOi8vd3d3LnczLm9yZy8xOTk5L3hsaW5rIj48ZyBpZD0iaW5mbyIvPjxnIGlkPSJpY29ucyI%2BPGcgaWQ9InNhdmUiPjxwYXRoIGQ9Ik0xMS4yLDE2LjZjMC40LDAuNSwxLjIsMC41LDEuNiwwbDYtNi4zQzE5LjMsOS44LDE4LjgsOSwxOCw5aC00YzAsMCwwLjItNC42LDAtN2MtMC4xLTEuMS0wLjktMi0yLTJjLTEuMSwwLTEuOSwwLjktMiwyICAgIGMtMC4yLDIuMywwLDcsMCw3SDZjLTAuOCwwLTEuMywwLjgtMC44LDEuNEwxMS4yLDE2LjZ6IiBmaWxsPSIjZWJlYmViIi8%2BPHBhdGggZD0iTTE5LDE5SDVjLTEuMSwwLTIsMC45LTIsMnYwYzAsMC42LDAuNCwxLDEsMWgxNmMwLjYsMCwxLTAuNCwxLTF2MEMyMSwxOS45LDIwLjEsMTksMTksMTl6IiBmaWxsPSIjZWJlYmViIi8%2BPC9nPjwvZz48L3N2Zz4%3D&label=downloads)