Course Materials for Data Science at Scale

Sign up for a new GitHub account.


Fork the dsc402 repository into your new account. This creates a copy of the course repo in your own account that you can add to and work on.

Go to https://github.com/lpalum/dscc202-402-spring2023 and click the Fork button while you are logged in to your GitHub account.

Then clone your fork to your machine:

```shell
git clone https://github.com/[your account name]/dscc202-402-spring2023.git
```
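If you prefer not to edit the URL by hand, the clone step can be wrapped in a small shell function. This is a minimal sketch: `fork_url` and `clone_fork` are hypothetical helper names, and it assumes your fork kept the default name dscc202-402-spring2023.

```shell
# Hypothetical helper: print the clone URL of *your fork* of the course repo.
# $1 is your GitHub account name; assumes the fork kept its default name.
fork_url() {
  printf 'https://github.com/%s/dscc202-402-spring2023.git\n' "$1"
}

# Hypothetical helper: clone your fork into the current directory.
clone_fork() {
  if [ -z "$1" ]; then
    echo "usage: clone_fork <your-github-account-name>" >&2
    return 1
  fi
  git clone "$(fork_url "$1")"
}
```

Running `clone_fork your-account-name` creates a dscc202-402-spring2023 directory in the directory you run it from.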

Note: you may want to clone this repo into a directory on your machine that you set aside for code, e.g. /home//code/github

Note: on macOS, use /Users/[your account name] in place of /home/[your account name], because macOS keeps user home directories under /Users.
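Because the shell sets `$HOME` to /home/<user> on Linux and /Users/<user> on macOS, you can sidestep the path difference by building the code directory from `$HOME`. A minimal sketch, assuming you want the code/github layout suggested above (`CODE_DIR` is just an illustrative variable name):

```shell
# $HOME is /home/<user> on Linux and /Users/<user> on macOS, so this
# one line picks the right base directory on either system.
CODE_DIR="$HOME/code/github"

# Create the directory and any missing parents; no error if it already exists.
mkdir -p "$CODE_DIR"

# Work from there so the cloned repo lands inside it.
cd "$CODE_DIR" || exit 1
echo "Cloning into: $CODE_DIR"
```

From this directory, run the git clone command for your fork.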


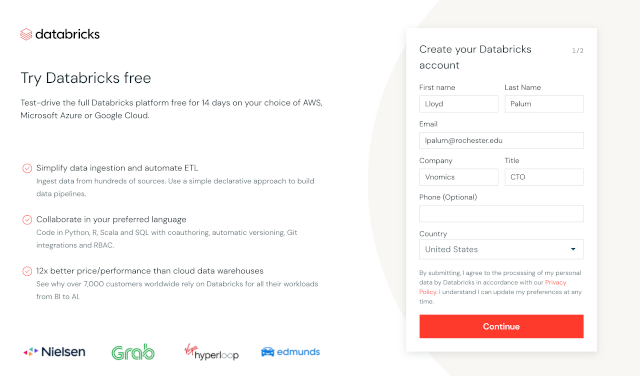

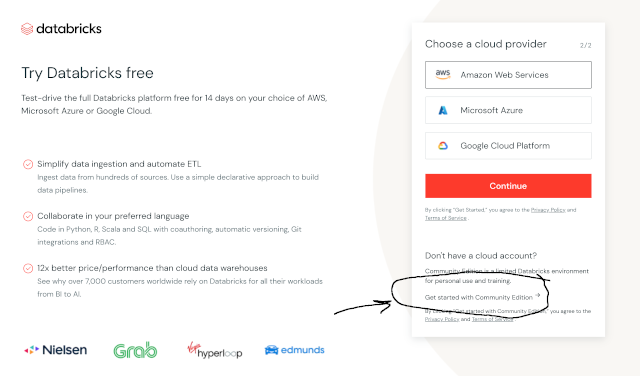

- Select the Databricks Community Edition link at the bottom of the sign-up form. DO NOT SIGN UP FOR THE FULL VERSION!

- Later in the course you will also receive an invitation to the shared class Databricks workspace, which is where you will do your final project.

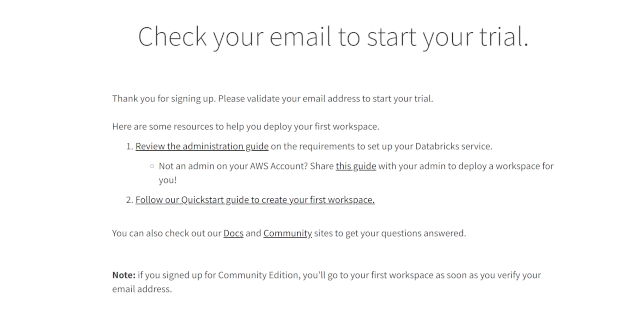

For help importing archives into the Databricks environment, see: https://docs.databricks.com/notebooks/notebooks-manage.html#import-a-notebook

Import the DBC archive from the Learning Spark v2 GitHub repository into your account (this is the code that accompanies the textbook): DBC Archive

Unzip dscc202-402-dbc.zip on your machine, then import the class DBC archives:

- asp.dbc: Apache Spark Programming

- de.dbc: Data Engineering with Delta Lake and Spark

- ml.dbc: Machine Learning on Spark
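Before importing, it can help to confirm that the archives were actually extracted. A minimal sketch, assuming the zip sits in the current directory and contains asp.dbc, de.dbc and ml.dbc somewhere inside it (the zip's internal folder layout is an assumption, so the check also searches subdirectories). `extract_dbc_zip` and `check_dbc_archives` are hypothetical names, and the `unzip` tool must be installed:

```shell
# Hypothetical helper: extract the class zip (default name from the step above).
# -o overwrites existing files without prompting.
extract_dbc_zip() {
  unzip -o "${1:-dscc202-402-dbc.zip}"
}

# Hypothetical helper: report each expected archive and fail if any is missing.
# Searches the current directory and its subdirectories.
check_dbc_archives() {
  status=0
  for f in asp.dbc de.dbc ml.dbc; do
    path=$(find . -name "$f" -type f | head -n 1)
    if [ -n "$path" ]; then
      echo "found:   $path"
    else
      echo "missing: $f" >&2
      status=1
    fi
  done
  return "$status"
}
```

Running `extract_dbc_zip && check_dbc_archives` prints the location of each .dbc file, which you can then import through the Databricks UI.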

Install Docker on your computer.
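Once Docker is installed, you can check that the `docker` command is available before moving on. A minimal sketch; `require_cmd` is a hypothetical helper name, while `docker --version` and `docker run hello-world` (Docker's own small test image) are standard checks:

```shell
# Hypothetical helper: succeed if a command is on PATH, otherwise explain and fail.
require_cmd() {
  if command -v "$1" >/dev/null 2>&1; then
    echo "$1 is installed"
  else
    echo "$1 was not found on your PATH; install it first" >&2
    return 1
  fi
}
```

For example, `require_cmd docker && docker --version` confirms the CLI is installed, and `docker run hello-world` confirms that Docker can pull and start a container.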

Pull the course's all-spark-notebook image from Docker Hub:

https://hub.docker.com/r/lpalum/dsc402
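The pull can be done from a terminal with the standard `docker pull` command. A minimal sketch, assuming the default `latest` tag of lpalum/dsc402 is the one the course uses; `COURSE_IMAGE` and `pull_course_image` are hypothetical names:

```shell
# Image name from the Docker Hub link above; with no tag, Docker uses "latest".
COURSE_IMAGE="lpalum/dsc402"

# Hypothetical helper: download the image, then list it to confirm it is local.
pull_course_image() {
  docker pull "$COURSE_IMAGE" && docker image ls "$COURSE_IMAGE"
}
```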

Launch the Docker image to start a JupyterLab instance that you can open in your local browser:

```shell
docker run -it --rm -p 8888:8888 --name all-spark --volume /home/[your account name]/code/github:/home/jovyan/work lpalum/dsc402 start.sh jupyter lab
```
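The same command can be written so that it works unchanged on Linux and macOS by mounting `$HOME/code/github` instead of a hard-coded home path. This sketch uses the same flags as the command above; `start_course_lab` and the variable names are hypothetical, and it assumes you cloned the repo under ~/code/github:

```shell
# Values taken from the docker run command above.
COURSE_IMAGE="lpalum/dsc402"
HOST_DIR="$HOME/code/github"        # /home/<user>/... on Linux, /Users/<user>/... on macOS
CONTAINER_DIR="/home/jovyan/work"   # working directory inside the container

# Hypothetical helper: start JupyterLab in the course container.
#   -it            attach an interactive terminal
#   --rm           delete the container when it stops
#   -p 8888:8888   publish JupyterLab's port 8888 on the host
#   --volume       mount HOST_DIR into the container at CONTAINER_DIR
start_course_lab() {
  docker run -it --rm -p 8888:8888 --name all-spark \
    --volume "$HOST_DIR:$CONTAINER_DIR" \
    "$COURSE_IMAGE" start.sh jupyter lab
}
```

To shut down, stop JupyterLab in that terminal or run `docker stop all-spark` from another one. Because of `--rm` the container is then removed, but your files live in the mounted host directory, so they stay on your machine.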

This starts a JupyterLab instance on your machine that you can reach on port 8888 in your browser (e.g. http://localhost:8888). It also mounts your code directory, which contains the GitHub repo you cloned earlier, into the container's working directory, /home/jovyan/work.