
Functional localizer experiment developed by the Stanford Vision & Perception Neuroscience Lab to define category-selective cortical regions.

This package contains stimuli and presentation code for a functional localizer experiment that can be used to define category-selective cortical regions that respond preferentially to faces (e.g., fusiform face area), places (e.g., parahippocampal place area), bodies (e.g., extrastriate body area), and characters (e.g., visual word form area). We recommend collecting at least 2-3 runs of localizer data per subject to have sufficient power to define these and other regions of interest.

The localizer uses a miniblock design in which eight stimuli of the same category are presented in each 4-second trial (500 ms per image). For each 5-minute run, a novel stimulus sequence is generated that counterbalances the order of five stimulus domains (characters, bodies, faces, places, and objects) and a blank baseline condition. Each stimulus domain has two associated image categories that alternate over the course of a run but are never intermixed within a trial:

-

Characters

- Word - pronounceable English pseudowords

- Number - uncommon whole numbers

-

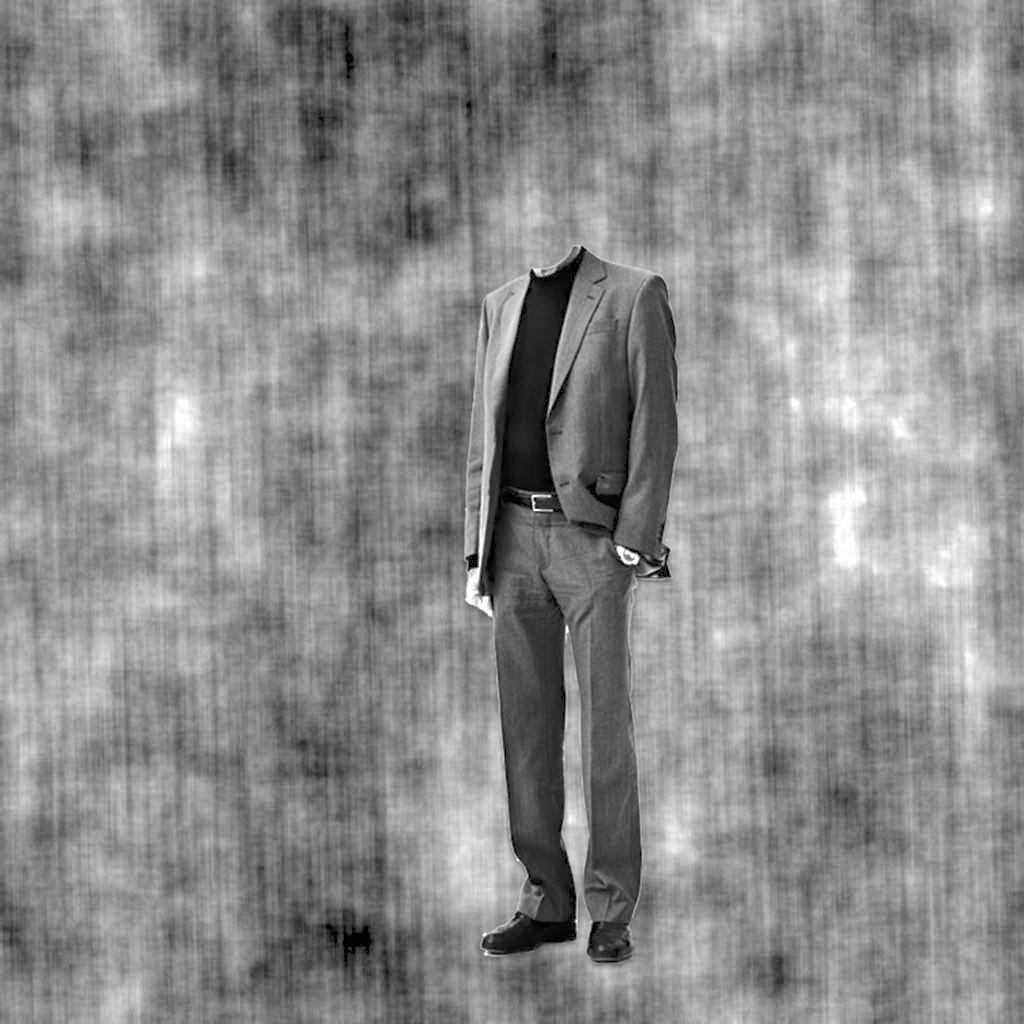

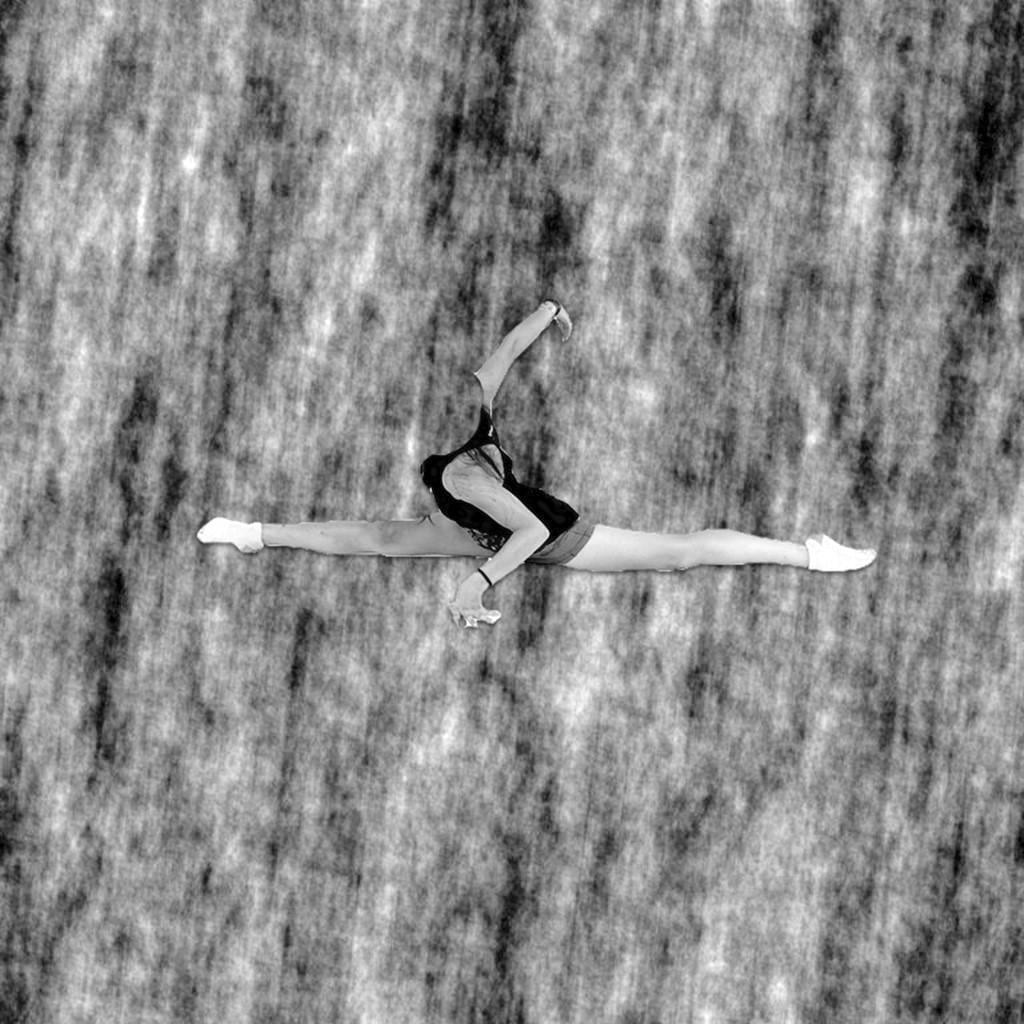

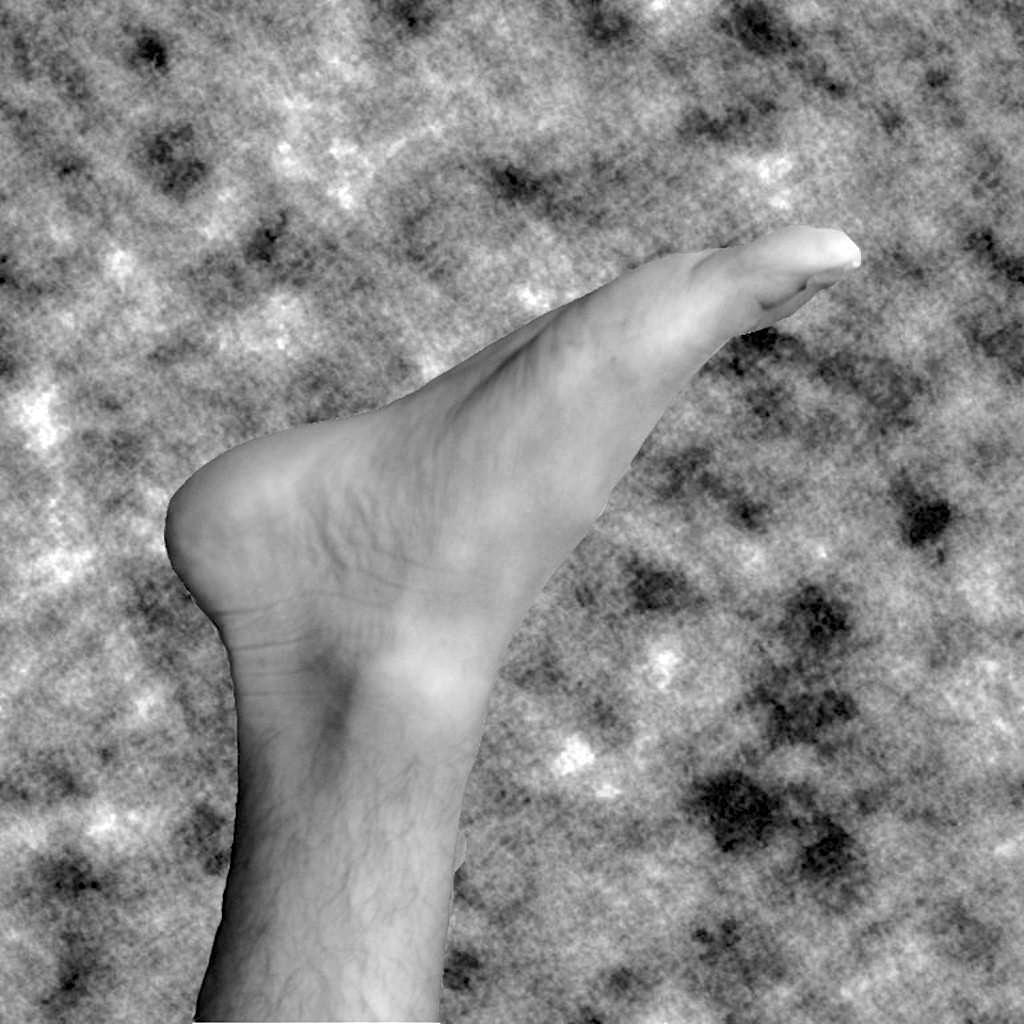

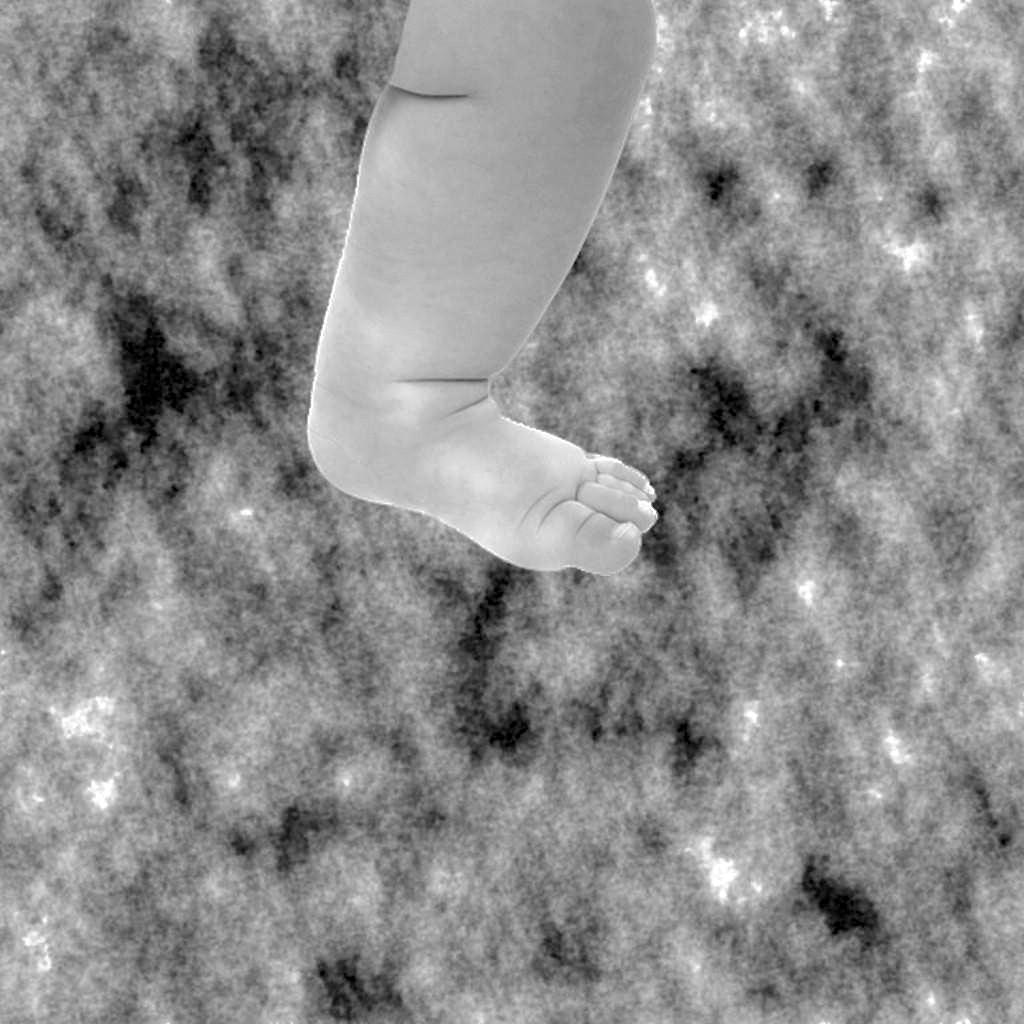

Bodies

- Body - whole bodies with cropped heads

- Limb - isolated arms, legs, hands, and feet

-

Faces

- Adult - portraits of adults

- Child - portraits of children

-

Places

- Corridor - indoor views of hallways

- House - outdoor views of buildings

-

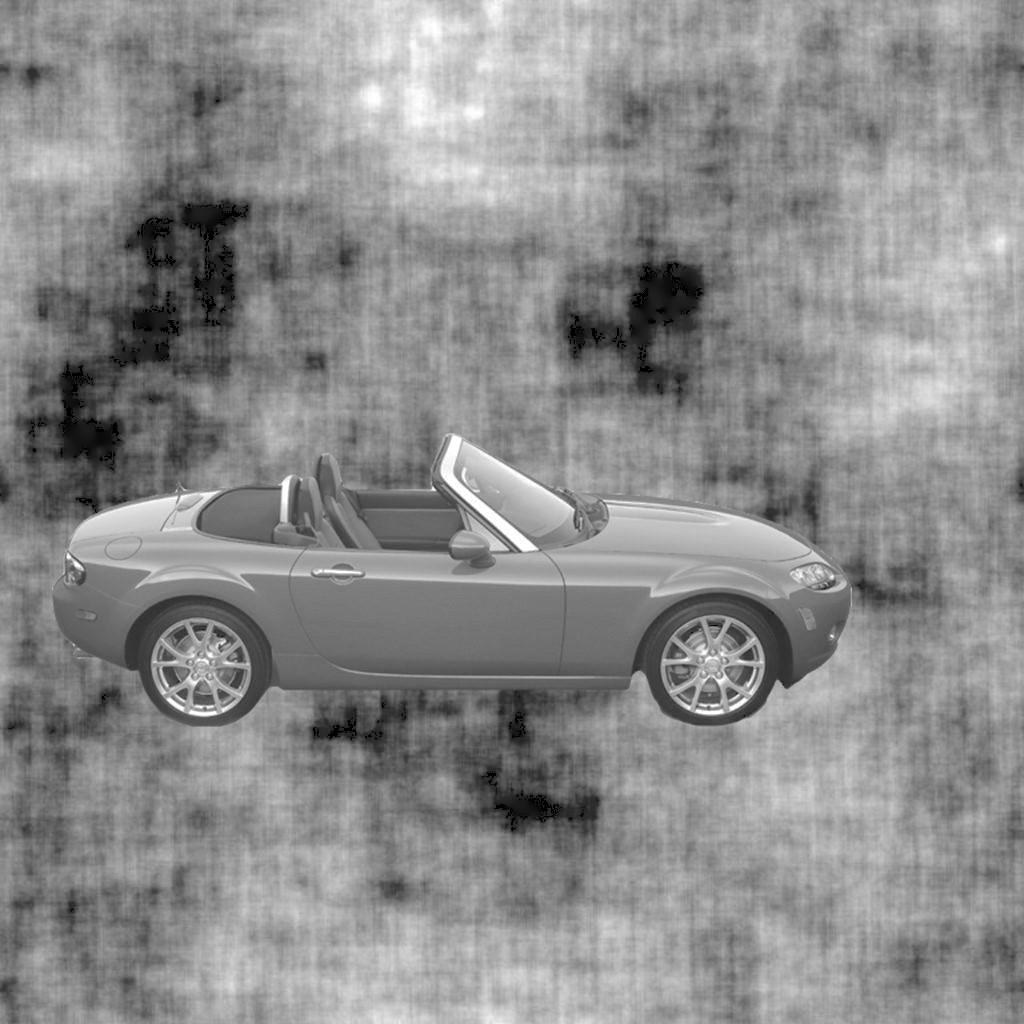

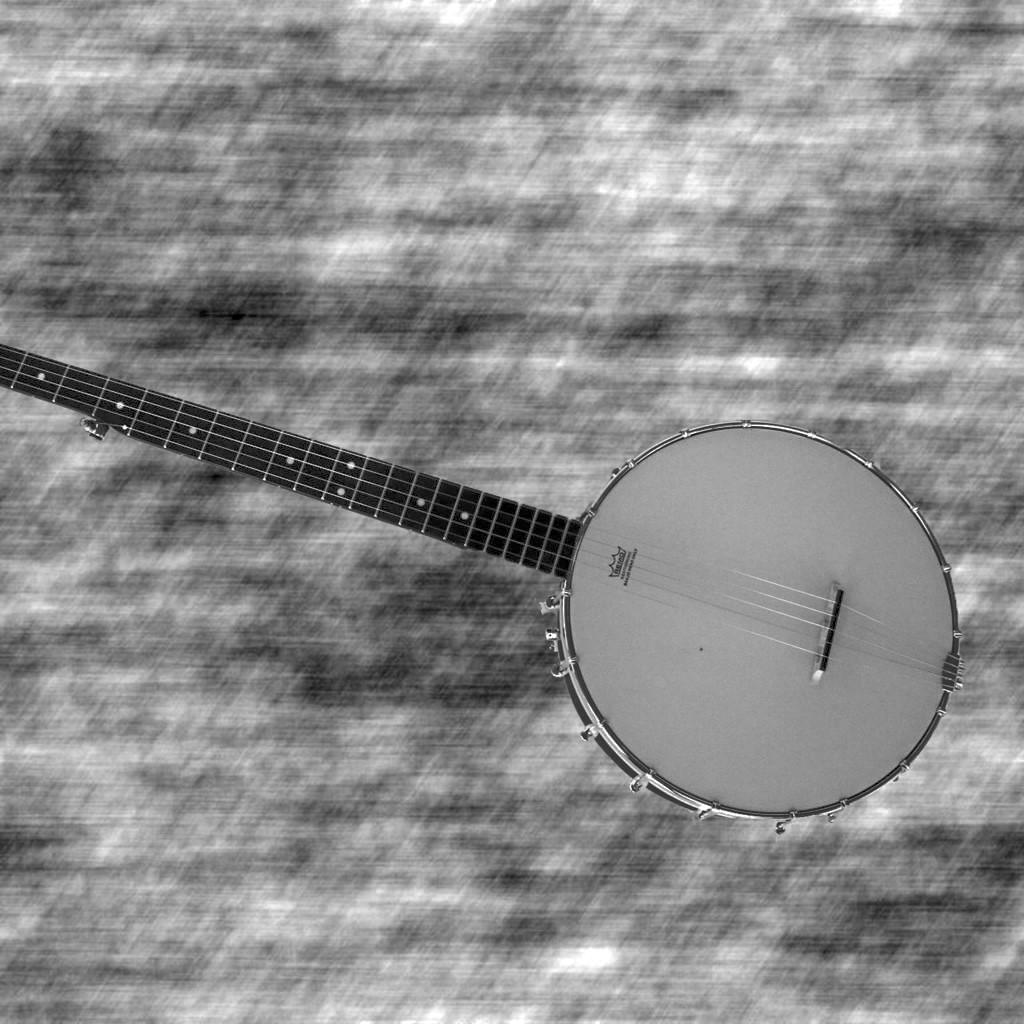

Objects

- Car - motor vehicles with four wheels

- Instrument - musical string instruments
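The timing and alternation rules above can be illustrated with a short Python sketch. It is not the package's MATLAB code and does not reproduce fLoc's counterbalancing: the domain order is simply passed in, and the helper `build_schedule` and the `DOMAINS` table are hypothetical names. It shows how a 4-second trial holds eight 500 ms images from a single category, and how each domain switches to its other category every time it recurs.

```python
# Hypothetical sketch of miniblock timing (not the fLoc implementation).
# Assumption: the per-trial domain order is given; counterbalancing is not modeled.

TRIAL_DUR = 4.0        # seconds per trial (from the text)
IMAGE_DUR = 0.5        # seconds per image (from the text)
IMAGES_PER_TRIAL = 8   # 8 x 0.5 s = 4 s

DOMAINS = {
    "characters": ("word", "number"),
    "bodies": ("body", "limb"),
    "faces": ("adult", "child"),
    "places": ("corridor", "house"),
    "objects": ("car", "instrument"),
}


def build_schedule(domain_order):
    """Return (trial_onset, category, image_onsets) for each trial.

    domain_order lists a domain name or "baseline" for every trial.
    """
    next_idx = {d: 0 for d in DOMAINS}  # which category of each domain comes next
    schedule = []
    for t, domain in enumerate(domain_order):
        onset = t * TRIAL_DUR
        if domain == "baseline":
            schedule.append((onset, "baseline", []))  # blank trial, no images
            continue
        category = DOMAINS[domain][next_idx[domain]]
        next_idx[domain] = 1 - next_idx[domain]  # alternate across trials
        # all images in one trial come from the same category
        image_onsets = [onset + i * IMAGE_DUR for i in range(IMAGES_PER_TRIAL)]
        schedule.append((onset, category, image_onsets))
    return schedule


if __name__ == "__main__":
    order = ["faces", "baseline", "places", "faces", "characters"]
    for onset, cat, imgs in build_schedule(order):
        print(f"{onset:5.1f} s  {cat:10s}  {len(imgs)} images")
```

Here the second faces trial shows children because the first showed adults, mirroring the rule that categories alternate within a domain but never mix inside a trial.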

The experimenter selects which behavioral task the subject performs during the experiment. Three options are available:

- 1-back - detect back-to-back image repetition

- 2-back - detect image repetition with an intervening stimulus

- Oddball - detect replacement of a stimulus with a scrambled image
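To make the three probe types concrete, the Python sketch below turns an eight-image trial into a probe trial. The helper `make_probe` and the placeholder name `"scrambled"` are hypothetical; fLoc's own MATLAB code may choose probe positions and images differently. Each call inserts exactly one event, consistent with the limit of one probe per trial.

```python
import random


def make_probe(images, task, rng, scrambled="scrambled"):
    """Return (trial, position) for a copy of `images` containing one task probe.

    task is "1back", "2back", or "oddball". Hypothetical helper, not fLoc code.
    Assumes the input images are all distinct.
    """
    trial = list(images)  # leave the caller's list untouched
    if task == "1back":
        pos = rng.randrange(1, len(trial))
        trial[pos] = trial[pos - 1]  # back-to-back repetition
    elif task == "2back":
        pos = rng.randrange(2, len(trial))
        trial[pos] = trial[pos - 2]  # repetition with one intervening image
    elif task == "oddball":
        pos = rng.randrange(len(trial))
        trial[pos] = scrambled  # stimulus replaced by a scrambled image
    else:
        raise ValueError(f"unknown task: {task}")
    return trial, pos


if __name__ == "__main__":
    rng = random.Random(0)
    images = [f"img{i}" for i in range(8)]
    for task in ("1back", "2back", "oddball"):
        print(task, make_probe(images, task, rng))
```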

The frequency of task probes (i.e., trials containing an image repetition or oddball) is equated across stimulus categories, with no more than one such event per trial. By default, subjects are allowed 1 second to respond to a task probe, and responses outside of this time window are counted as false alarms. The behavioral data displayed at the end of each run therefore summarize the hit rate (percentage of task probes detected within the time limit) and the false alarm count (number of responses outside of task-relevant time windows) for the preceding run.
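The scoring rule can be written down as a small Python function. This is a sketch of the rule as described, not fLoc's MATLAB scoring code. It assumes the response window opens at probe onset and lasts `window` seconds (default 1 s); a probe counts as a hit if any response lands in its window, and a response counts as a false alarm if it falls in no probe's window.

```python
def score_run(probe_times, response_times, window=1.0):
    """Return (hit_rate_percent, false_alarm_count) for one run.

    probe_times: onset (s) of each task probe.
    response_times: time (s) of each button press.
    Sketch of the rule described in the text, not the package's code.
    """
    def in_window(r, p):
        return p <= r <= p + window

    hits = sum(any(in_window(r, p) for r in response_times) for p in probe_times)
    false_alarms = sum(
        not any(in_window(r, p) for p in probe_times) for r in response_times
    )
    hit_rate = 100.0 * hits / len(probe_times) if probe_times else float("nan")
    return hit_rate, false_alarms


if __name__ == "__main__":
    probes = [10.0, 30.0, 50.0]
    responses = [10.4, 31.5, 50.9, 70.0]
    print(score_run(probes, responses))
```

In the example, the response at 31.5 s arrives 1.5 s after the second probe, so that probe is missed and the response is a false alarm, as is the unrelated press at 70 s.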

The entire stimulus set included in the localizer package is available for download in JPG and PowerPoint formats for each stimulus domain:

- Characters

- Bodies

- Faces

- Places

- Objects

The code included in this package is written in MATLAB and calls Psychtoolbox-3 functions.

- Navigate to the functions directory (~/fLoc/functions/)

- Modify the response collection functions for the button box and keyboard:
  - getBoxNumber.m - Change the value of buttonBoxID to the "Product ID number" of the local scanner button box (line 9)
  - getKeyboardNumber.m - Change the value of keyboardID to the "Product ID number" of the native laptop keyboard (line 9)

- Add Psychtoolbox to the MATLAB path

- Navigate to the base experiment directory in MATLAB (~/fLoc/)

- Execute the runme.m wrapper function:
  - Enter runme to execute 3 runs sequentially (default; the entire stimulus set is used without recycling images)
  - Enter runme(N) to execute N runs sequentially (stimuli are recycled after 3 runs)
  - If the experiment is interrupted, enter runme(N,startRun) to continue executing the pre-generated sequence of runs from startRun to N

- Enter subject initials when prompted

- Select the behavioral task:
  - Enter 1 for the 1-back image repetition detection task
  - Enter 2 for the 2-back image repetition detection task
  - Enter 3 for the oddball task (detect scrambled image)

- Select the triggering method:
  - Enter 0 if not attempting to trigger the scanner (e.g., while debugging)
  - Enter 1 to automatically trigger the scanner at the onset of the experiment

- Wait for the task instructions screen to display

- Press g to start the experiment (and trigger the scanner if that option is selected)

- Wait for behavioral performance to display after each run

- Press g to continue the experiment and start execution of the next run

- Press [Command + period] to halt the experiment

- Enter sca to return to the MATLAB command line

- Please report bugs on GitHub
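The note that the full stimulus set lasts three runs before images are recycled can be pictured with the Python sketch below. It is a hypothetical illustration only: the function `images_for_run` and the "split into three disjoint chunks" scheme are assumptions, not the way fLoc's MATLAB code actually allocates images.

```python
def images_for_run(run, pool, n_unique_runs=3):
    """Hypothetical allocation of one category's images to a run.

    The pool is split into n_unique_runs disjoint chunks; run k (1-based)
    uses chunk (k - 1) mod n_unique_runs, so runs 1-3 see no repeats and
    run 4 reuses the images from run 1. Leftover images (when len(pool) is
    not divisible by n_unique_runs) are simply unused in this sketch.
    """
    chunk = len(pool) // n_unique_runs
    k = (run - 1) % n_unique_runs
    return pool[k * chunk:(k + 1) * chunk]


if __name__ == "__main__":
    pool = [f"face{i:03d}" for i in range(12)]
    for run in range(1, 5):
        print(run, images_for_run(run, pool))
```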

Session information, behavioral data, and presentation parameters are saved as MAT files:

- Session information and behavioral data files are written for each run and saved in the data directory (~/fLoc/data/)

- Script and parameter files are written for each stimulus sequence and stored in the scripts directory (~/fLoc/scripts/)

Parameter files (.par) contain information used to generate the design matrix for a General Linear Model (GLM):

- Trial onset time in seconds (relative to the end of the countdown)

- Condition number (baseline = 0)

- Condition name

- Condition plotting color (RGB values from 0 to 1)
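A Python sketch of turning a parameter file into GLM regressors follows. It assumes each .par line holds whitespace-separated columns in the order listed above (onset, condition number, condition name, then three RGB values); check this against files written by your copy of fLoc. The 4-second trial duration comes from the design description, and the double-gamma HRF (peak shape 6, undershoot shape 16, ratio 1/6) is a common choice swapped in here, not something the package specifies. `parse_par`, `double_gamma_hrf`, and `design_matrix` are hypothetical helpers.

```python
import math

import numpy as np


def parse_par(lines):
    """Parse .par lines into (onset, cond_num, cond_name, rgb) tuples.

    Assumes whitespace-separated columns: onset, number, name, R, G, B.
    """
    trials = []
    for line in lines:
        fields = line.split()
        if not fields:
            continue  # skip blank lines
        onset, num, name = float(fields[0]), int(fields[1]), fields[2]
        rgb = tuple(float(x) for x in fields[3:6])
        trials.append((onset, num, name, rgb))
    return trials


def double_gamma_hrf(tr, length=32.0):
    """Double-gamma HRF sampled every `tr` seconds, normalized to unit sum."""
    t = np.arange(0.0, length, tr)

    def gamma_pdf(x, shape):  # unit-scale gamma density
        return x ** (shape - 1) * np.exp(-x) / math.gamma(shape)

    h = gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0
    return h / h.sum()


def design_matrix(trials, n_vols, tr, trial_dur=4.0):
    """One HRF-convolved boxcar column per non-baseline condition."""
    conds = sorted({num for _, num, _, _ in trials if num != 0})  # baseline = 0
    frame_times = np.arange(n_vols) * tr
    hrf = double_gamma_hrf(tr)
    X = np.zeros((n_vols, len(conds)))
    for j, c in enumerate(conds):
        box = np.zeros(n_vols)
        for onset, num, _, _ in trials:
            if num == c:
                box[(frame_times >= onset) & (frame_times < onset + trial_dur)] = 1.0
        X[:, j] = np.convolve(box, hrf)[:n_vols]
    return X, conds


if __name__ == "__main__":
    par = ["0 1 word 0 0 0", "4 0 baseline 1 1 1", "8 3 body 0 0 1"]
    X, conds = design_matrix(parse_par(par), n_vols=20, tr=2.0)
    print(conds, X.shape)
```

The baseline condition gets no column; its activity is absorbed by the constant term when the model is fit. The color column is only for plotting and is ignored here.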

After acquiring and preprocessing the functional data, a GLM is fit to the time course of each voxel to estimate the response amplitude (beta value) for each stimulus category (e.g., Worsley et al., 2002). For preprocessing, we recommend performing motion correction, detrending, and transforming the time series data to percent signal change, without spatial smoothing.
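The detrending, percent-signal-change, and GLM steps for a single voxel can be sketched in Python with NumPy. Motion correction is omitted because it operates on whole volumes. The quadratic drift model, the choice to keep the mean during detrending (so that percent signal change is still defined), and the helper names are all assumptions for illustration; the fit itself is ordinary least squares with a constant term, which is one standard way to estimate GLM betas.

```python
import numpy as np


def detrend(ts, order=2):
    """Remove polynomial drift of the given order but keep the series mean."""
    t = np.linspace(-1.0, 1.0, ts.size)
    trend = np.polyval(np.polyfit(t, ts, order), t)
    return ts - trend + ts.mean()


def percent_signal_change(ts):
    """Express a time series as percent change around its mean."""
    m = ts.mean()
    return 100.0 * (ts - m) / m


def fit_glm(X, y):
    """OLS betas for the columns of X; a constant column is added and dropped."""
    Xc = np.column_stack([X, np.ones(len(y))])
    beta, *_ = np.linalg.lstsq(Xc, y, rcond=None)
    return beta[:-1]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n = 150
    X = rng.random((n, 2))  # stand-in regressors for two categories
    drift = np.linspace(0.0, 20.0, n)  # slow scanner drift
    raw = 1000.0 + drift + X @ np.array([10.0, 2.0]) + rng.normal(0.0, 1.0, n)
    y = percent_signal_change(detrend(raw))
    print(fit_glm(X, y))
```

Because detrending also removes whatever part of the task signal resembles the drift, estimated betas can be slightly biased; including drift terms in the design matrix instead is a common alternative.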

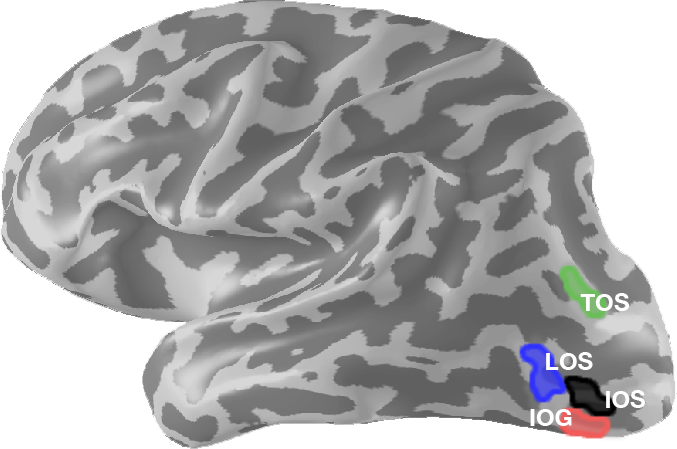

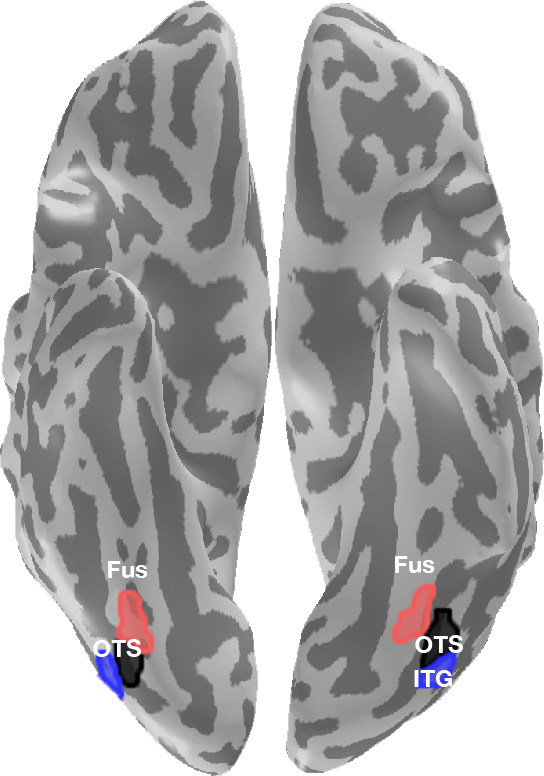

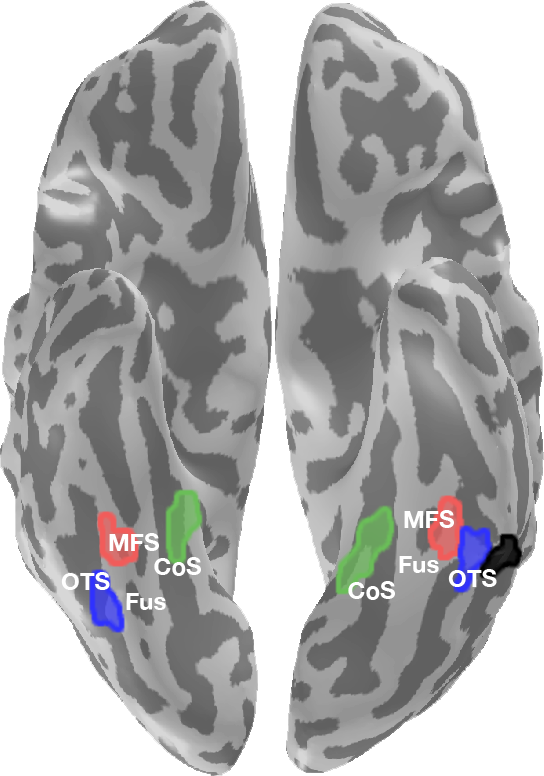

Category-selective regions are defined by statistically contrasting, in each voxel, the beta values of the categories belonging to a given stimulus domain against those of all other categories, and then thresholding the resulting maps (e.g., t-value > 4):

- Character-selective regions (blacks)
  - [word number] > [body limb child adult corridor house car instrument]
  - selective voxels typically clustered around the inferior occipital sulcus (IOS) and along the occipitotemporal sulcus (OTS)

- Body part-selective regions (blues)
  - [body limb] > [word number child adult corridor house car instrument]
  - selective voxels typically clustered around the lateral occipital sulcus (LOS), inferior temporal gyrus (ITG), and occipitotemporal sulcus (OTS)

- Face-selective regions (reds)
  - [child adult] > [word number body limb corridor house car instrument]
  - selective voxels typically clustered around the inferior occipital gyrus (IOG), posterior fusiform gyrus (Fus), and mid-fusiform sulcus (MFS)

- Place-selective regions (greens)
  - [corridor house] > [word number body limb child adult car instrument]
  - selective voxels typically clustered around the transverse occipital sulcus (TOS) and collateral sulcus (CoS)

- Object-selective regions
  - [car instrument] > [word number body limb child adult corridor house]
  - selective voxels are not typically clustered in occipitotemporal cortex when contrasted against characters, bodies, faces, and places
  - object-selective regions in lateral occipital cortex can be defined in a separate experiment (contrasting objects > scrambled images)
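Each bracketed contrast above can be expressed as a weight vector over the ten category betas and turned into a t-value per voxel. The Python sketch below uses balanced weights (+1/2 on each preferred category, -1/8 on each of the other eight) so the vector sums to zero; that weighting, the category order in `CATEGORIES`, and the helper names are assumptions, and your analysis package may weight contrasts differently. The t-value is the standard OLS contrast statistic, the estimated contrast divided by its standard error.

```python
import numpy as np

CATEGORIES = ["word", "number", "body", "limb", "adult", "child",
              "corridor", "house", "car", "instrument"]


def contrast_vector(preferred, categories=CATEGORIES):
    """+1/len(preferred) on preferred categories, -1/len(others) elsewhere."""
    n_pref = len(preferred)
    n_other = len(categories) - n_pref
    return np.array([1.0 / n_pref if c in preferred else -1.0 / n_other
                     for c in categories])


def contrast_t(X, y, c):
    """t-value of contrast c for one voxel; X has one column per category."""
    Xc = np.column_stack([X, np.ones(len(y))])  # add constant term
    cc = np.append(c, 0.0)  # no weight on the constant
    beta, *_ = np.linalg.lstsq(Xc, y, rcond=None)
    resid = y - Xc @ beta
    dof = len(y) - np.linalg.matrix_rank(Xc)
    sigma2 = resid @ resid / dof  # residual variance estimate
    var_c = sigma2 * (cc @ np.linalg.pinv(Xc.T @ Xc) @ cc)
    return float(cc @ beta / np.sqrt(var_c))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X = rng.random((200, len(CATEGORIES)))  # stand-in regressors
    true_beta = np.zeros(len(CATEGORIES))
    true_beta[4:6] = 2.0  # a voxel that responds to adult and child faces
    y = X @ true_beta + 0.1 * rng.standard_normal(200)
    t_face = contrast_t(X, y, contrast_vector({"adult", "child"}))
    print(t_face, t_face > 4)  # voxel passes the example threshold
```

Applying `contrast_t` to every voxel and keeping those with t > 4 yields a thresholded map like the ones described above.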

Category-selective regions defined in three anatomical sections of occipitotemporal cortex (see Stigliani et al., 2015; Fig. 3A) are shown below on the inflated cortical surface of a representative subject, with anatomical labels overlaid on the major sulci and gyri (abbreviations as defined above):

- Lateral Occipitotemporal Cortex (left hemisphere)

- Posterior Ventral Temporal Cortex

- Mid Ventral Temporal Cortex

Stigliani, A., Weiner, K. S., & Grill-Spector, K. (2015). Temporal processing capacity in high-level visual cortex is domain specific. Journal of Neuroscience, in press.

Anthony Stigliani: astiglia [at] stanford [dot] edu

Kalanit Grill-Spector: kalanit [at] stanford [dot] edu

If you would like to acknowledge using our localizer or stimulus set, you might include a sentence like one of the following:

"We defined regions of interest using the fLoc functional localizer (Stigliani et al., 2015)..."

"We used stimuli included in the fLoc functional localizer package (Stigliani et al., 2015)..."