Ruoyu Wang, Shiheng Wang, Songyu Du, Erdong Xiao, Wenzhen Yuan, Chen Feng

This repository contains the PyTorch implementation of the paper "Real-time Soft Body 3D Proprioception via Deep Vision-based Sensing" (RA-L/ICRA 2020).

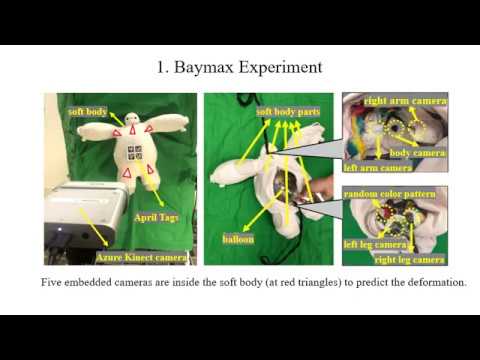

Soft bodies made from flexible and deformable materials are popular in many robotics applications, but their proprioceptive sensing has been a long-standing challenge. In other words, there has hardly been a method to measure and model the high-dimensional 3D shapes of soft bodies with internal sensors. We propose a framework to measure the high-resolution 3D shapes of soft bodies in real time with embedded cameras. The cameras capture visual patterns inside a soft body, and a convolutional neural network (CNN) produces a latent code representing the deformation state, which a second neural network then uses to reconstruct the body’s 3D shape. We test the framework on various soft bodies, such as a Baymax-shaped toy, a latex balloon, and some soft robot fingers, and achieve real-time computation (≤2.5 ms/frame) for robust shape estimation with high precision (≤1% relative error) and high resolution. We believe the method could be applied to soft robotics and human-robot interaction for proprioceptive shape sensing.
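The image-to-shape pipeline described above can be illustrated with a minimal PyTorch sketch. This is not the architecture used in the paper or in this repository: the class names `ImageEncoder` and `ShapeDecoder`, the layer sizes, the latent dimension (128), and the point count (1024) are all assumptions chosen only to show the data flow from camera image to latent code to 3D points.

```python
import torch
import torch.nn as nn


class ImageEncoder(nn.Module):
    """Hypothetical CNN that maps an internal camera image to a latent code."""

    def __init__(self, latent_dim=128):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(3, 16, kernel_size=5, stride=2, padding=2),
            nn.ReLU(),
            nn.Conv2d(16, 32, kernel_size=5, stride=2, padding=2),
            nn.ReLU(),
            nn.AdaptiveAvgPool2d(1),  # collapse spatial dims to 1x1
        )
        self.fc = nn.Linear(32, latent_dim)

    def forward(self, images):
        # images: (batch, 3, H, W) -> latent code: (batch, latent_dim)
        return self.fc(self.features(images).flatten(1))


class ShapeDecoder(nn.Module):
    """Hypothetical MLP that maps a latent code to a 3D point set."""

    def __init__(self, latent_dim=128, num_points=1024):
        super().__init__()
        self.num_points = num_points
        self.mlp = nn.Sequential(
            nn.Linear(latent_dim, 256),
            nn.ReLU(),
            nn.Linear(256, num_points * 3),
        )

    def forward(self, code):
        # code: (batch, latent_dim) -> points: (batch, num_points, 3)
        return self.mlp(code).view(-1, self.num_points, 3)


encoder = ImageEncoder()
decoder = ShapeDecoder()
frames = torch.zeros(2, 3, 64, 64)  # placeholder batch of two camera frames
points = decoder(encoder(frames))   # predicted shapes as point sets
```

Splitting the model into an encoder and a decoder mirrors the description above: the latent code is the compact deformation state, and the decoder is the only part that needs to know the output resolution.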

Top row: predicted 3D shape. Bottom row: ground-truth 3D shape.

A video demo is provided through this link.

The code of this project is released on our GitHub repository.

We recommend using conda to install the required packages.

- python>=3.6
- pytorch>=1.4
- pytorch3d>=0.2.0
- open3d>=0.10.0

Please check https://github.com/facebookresearch/pytorch3d/blob/master/INSTALL.md for pytorch3d installation.
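As a quick sanity check after installation, the version requirements above could be verified with a short script like the one below. It is only a sketch and not part of this repository: the helper names `version_tuple`, `meets_minimum`, and `check_environment` are hypothetical, and it assumes each package is importable as `torch`, `pytorch3d`, and `open3d` and exposes a `__version__` attribute.

```python
import importlib
import re
import sys

# Minimum versions taken from the requirements list; keys are import names.
MINIMUMS = {"torch": "1.4", "pytorch3d": "0.2.0", "open3d": "0.10.0"}


def version_tuple(text):
    """Return the leading numeric components, e.g. '1.4.0+cu101' -> (1, 4, 0)."""
    match = re.match(r"\d+(\.\d+)*", text)
    return tuple(int(part) for part in match.group(0).split(".")) if match else ()


def meets_minimum(installed, minimum):
    """Compare two version strings numerically, padding the shorter with zeros."""
    a, b = version_tuple(installed), version_tuple(minimum)
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b


def check_environment():
    """Map each requirement to True if it is installed and recent enough."""
    report = {"python": sys.version_info >= (3, 6)}
    for name, minimum in MINIMUMS.items():
        try:
            module = importlib.import_module(name)
        except ImportError:
            report[name] = False
            continue
        report[name] = meets_minimum(getattr(module, "__version__", "0"), minimum)
    return report
```

Calling `check_environment()` returns a dictionary such as `{"python": True, "torch": True, ...}`; any `False` entry points at a package that is missing or older than required.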

- Download the dataset: https://drive.google.com/file/d/1mrsSqivo2GCJ_frP_ehMg5cE5K0Yj48y/view?usp=sharing

- Unzip BaymaxData.zip.

python train_baymax.py -d ${PATH_TO_DATASET}/BaymaxData/train -o ${PATH_TO_OUTPUTS}

python test_baymax.py -d ${PATH_TO_DATASET}/BaymaxData/test -m ${PATH_TO_OUTPUTS}/params/ep_${EPOCH_INDEX}.pth
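The test command expects a checkpoint path of the form `params/ep_${EPOCH_INDEX}.pth`. If you want to pick the most recent checkpoint automatically, a small helper along these lines may be convenient. It is a sketch under the assumption that training writes files matching `ep_<integer>.pth` into `${PATH_TO_OUTPUTS}/params`, as the command above suggests; `latest_checkpoint` is a hypothetical name and not part of this repository.

```python
import re
from pathlib import Path


def latest_checkpoint(output_dir):
    """Return the ep_<N>.pth file with the largest N, or None if there is none."""
    params_dir = Path(output_dir) / "params"
    if not params_dir.is_dir():
        return None
    pattern = re.compile(r"ep_(\d+)\.pth$")
    best = None
    for path in params_dir.glob("ep_*.pth"):
        match = pattern.match(path.name)
        if match is None:
            continue  # skip files such as ep_best.pth
        epoch = int(match.group(1))
        # Compare epochs as integers so that ep_10 ranks above ep_2.
        if best is None or epoch > best[0]:
            best = (epoch, path)
    return None if best is None else best[1]
```

The returned path can then be passed to `test_baymax.py` through the `-m` option.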

If you find DeepSoRo useful in your research, please cite:

@article{wang2019real,
  title={Real-time Soft Robot 3D Proprioception via Deep Vision-based Sensing},
  author={Wang, Ruoyu and Wang, Shiheng and Du, Songyu and Xiao, Erdong and Yuan, Wenzhen and Feng, Chen},
  journal={arXiv preprint arXiv:1904.03820},
  year={2019}
}