Networking

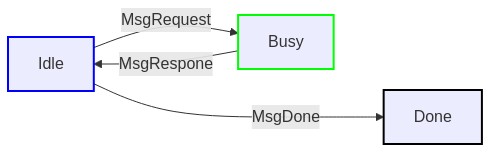

blue = client can send (client has agency), green = server can send (server has agency)

- RequestResponse protocol

- FireForget protocol
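
As a rough illustration of agency, both protocols can be modelled as small state machines in which each state names the only party allowed to send. The state and message names used here (`Idle`, `Busy`, `Done`, `Request`, `Response`, `Send`) are assumptions made for this sketch; the diagram above remains the reference.

```python
from enum import Enum


class Agency(Enum):
    CLIENT = "client"
    SERVER = "server"
    NOBODY = "nobody"  # terminal state: no one may send


# Hypothetical encoding: state -> (who has agency, {message: next state})
REQUEST_RESPONSE = {
    "Idle": (Agency.CLIENT, {"Request": "Busy", "Done": "Done"}),
    "Busy": (Agency.SERVER, {"Response": "Idle"}),
    "Done": (Agency.NOBODY, {}),
}

FIRE_FORGET = {
    "Idle": (Agency.CLIENT, {"Send": "Idle", "Done": "Done"}),
    "Done": (Agency.NOBODY, {}),
}


def run(protocol, trace, start="Idle"):
    """Replay (sender, message) pairs, rejecting any send made without agency."""
    state = start
    for sender, message in trace:
        agency, transitions = protocol[state]
        if sender is not agency:
            raise ValueError(f"{sender.value} has no agency in state {state}")
        if message not in transitions:
            raise ValueError(f"{message} is not valid in state {state}")
        state = transitions[message]
    return state
```

In this model the server never has agency in FireForget, so any message it tries to send is rejected, whereas in RequestResponse agency alternates between the two parties.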

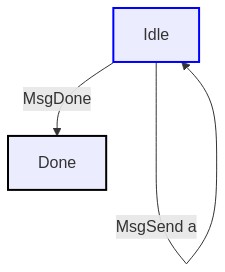

- Happy case, single tx between 3 nodes

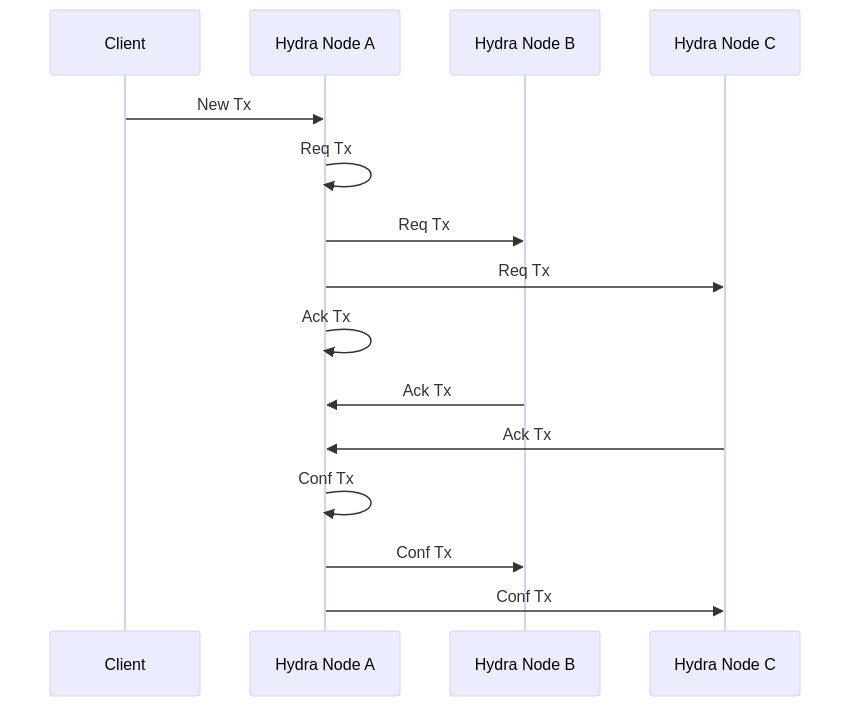

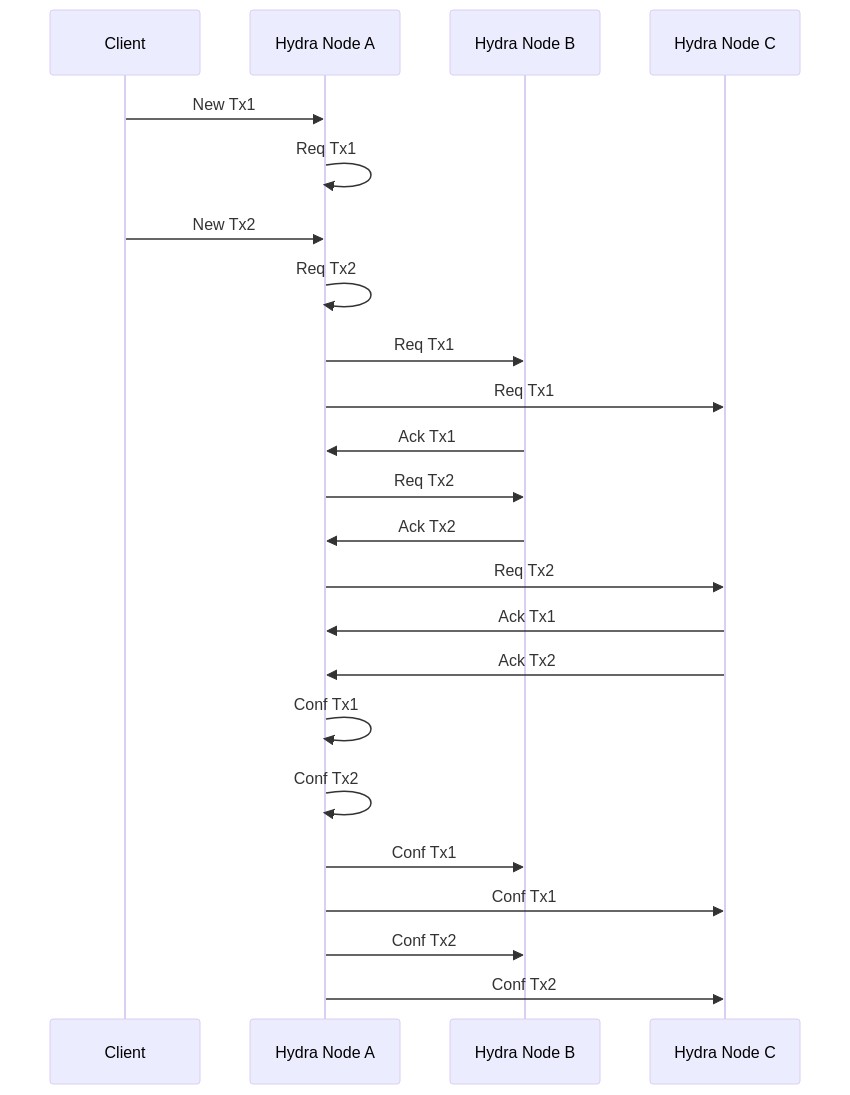

- Two concurrent tx (disjoint) by different parties

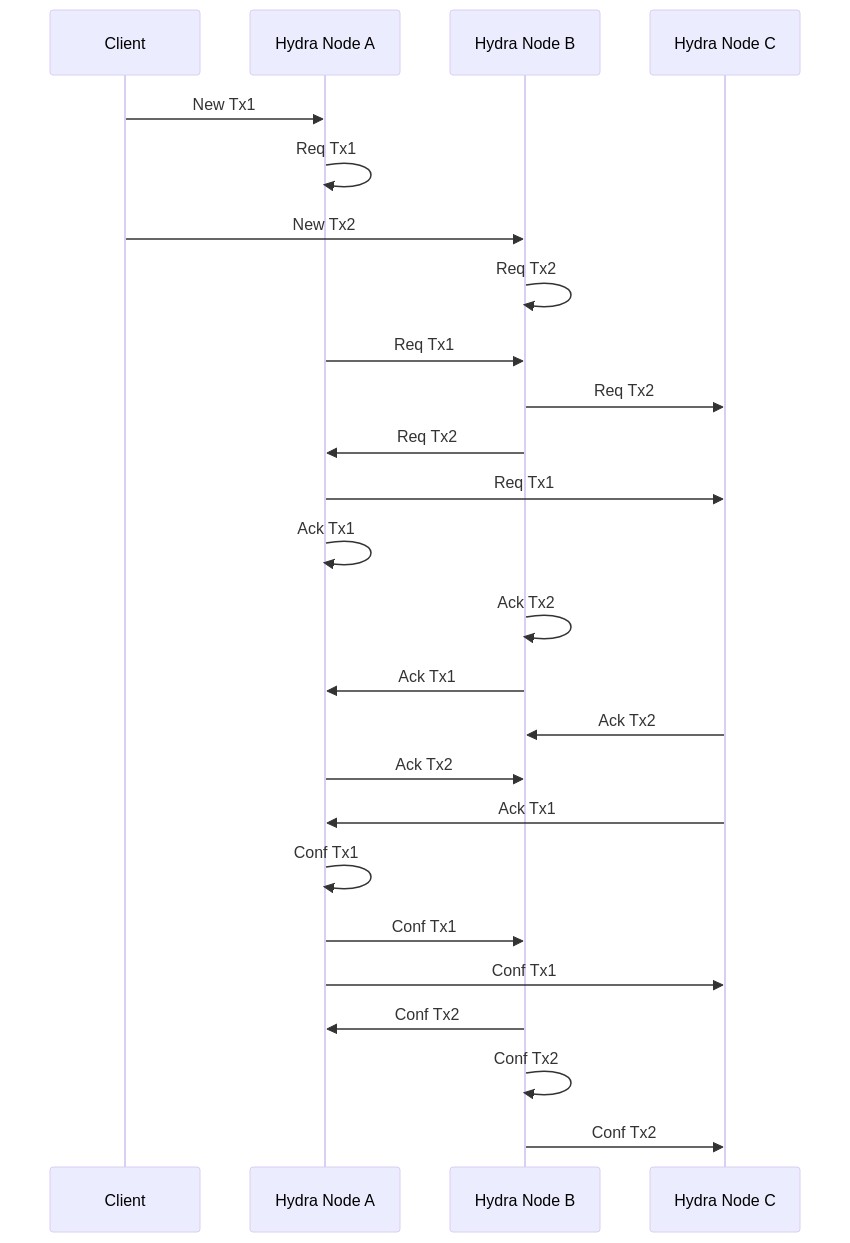

- Two concurrent tx (disjoint) sent by the same party / interleaved req

- NOTE: If everything is broadcast, we might not need the confTx round (each party does it on their own)

This is mostly paraphrasing/summarizing the document the way I (AB) understood it.

- The document goes into great detail, sometimes repeating itself in different places, on the rationale behind the decisions made while building the network/consensus layer of Shelley

- The key constraints arise from the PoS protocol itself:

- Nodes participating in the consensus protocol forge blocks according to their stake, eg. the amount of ADA they own, taking turns in a way that's deterministically computed from the current state of the chain

- This means that nodes need to have an up-to-date chain, hence block dissemination must happen fast enough to reach all nodes participating in the consensus before the next block is forged, in order to prevent unwanted forks

- Current requirements are that blocks be created every 20s (this is a function of slot length and percentage of productive slots)
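
The 20s figure can be reproduced with a one-line calculation: the expected time between blocks is the slot length divided by the fraction of productive slots. The concrete values used below (1s slots, 5% of slots productive) are assumptions not stated in these notes, and the function name is made up for the example.

```python
def expected_block_interval(slot_length_s: float, productive_fraction: float) -> float:
    """Average time between blocks, in seconds.

    If only a fraction of slots produce a block, then on average
    1 / productive_fraction slots elapse between two blocks.
    """
    if not 0 < productive_fraction <= 1:
        raise ValueError("productive_fraction must be in (0, 1]")
    return slot_length_s / productive_fraction


# Assumed parameters: 1s slots, 5% of slots productive -> about 20s per block
interval = expected_block_interval(1.0, 0.05)
```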

- And on top of that are strong security constraints to prevent things like:

- Eclipse attack: A node is cut off from the mainchain by a network of attackers which feed it malicious information

- Sybil attack: similar to the above?

- DOS attack: adversarial nodes overloading a node's resources to prevent it from participating in the consensus

- Split-brain

- ...

- The connections need to be stateful in order for the node to adapt its behaviour to the state of its connections with its peers

- It's also pull-based: A node connects to a peer and requests information from it depending on decisions it takes locally. This also allows bypassing firewall restrictions

- Peers are untrusted, so everything they send should be verified

- It's infeasible to implement what's required by the theoretical consensus protocol, namely multicast/broadcast diffusion. In practice, information is sent to a limited set of peers which themselves forward it to their own peers. The set of active connections is expected to make the network fully connected with a limited diameter (5-6 ?)

- There might be interesting links to be made with network science as the topology of the network is unlikely to be a random graph, but more probably a graph following a power law distribution (eg. a small number of highly connected nodes with a long tail of nodes with few connections) see https://barabasi.com/book/network-science
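
To make the hop-by-hop diffusion and the notion of diameter concrete, here is a minimal sketch that counts how many relay hops a block needs to reach every node. The topology is entirely made up, and `downstream[n]` is assumed to list the peers that fetch from node `n`.

```python
from collections import deque


def diffusion_hops(downstream, source):
    """Breadth-first search: number of relay hops from source to each reachable node."""
    hops = {source: 0}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for peer in downstream.get(node, ()):
            if peer not in hops:
                hops[peer] = hops[node] + 1
                queue.append(peer)
    return hops


# Hypothetical 6-node topology; each node only serves a limited set of peers
TOPOLOGY = {
    "A": ["B", "C"],
    "B": ["D"],
    "C": ["D", "E"],
    "D": ["F"],
    "E": ["F"],
    "F": [],
}

hops = diffusion_hops(TOPOLOGY, "A")
worst_case = max(hops.values())  # 3 hops to reach the farthest node from A
```

Taking the maximum of `worst_case` over every possible source gives the diameter of the graph, which is the quantity expected to stay small.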

- Nodes can act merely as relays, eg. to protect sensitive PoS participating nodes from being exposed to the internet: The PoS nodes connect to the relay node which is visible

- The network stack is implemented on top of TCP to provide ordering and (more) secure delivery of messages. Roughly, it works like this:

- node gets an initial list of peers to connect to

- it connects to those peers and maintains state about those peers locally (that's the stateful part of the Cardano networking)

- on top of each connection, it runs a multiplexer that itself handles several typed protocols instances

- peers are classified into different sets: cold, warm and hot, depending on various QoS and observations made by the node while communicating with them. This allows the node to adapt its connections and resource consumption level, for example dropping unresponsive or faulty nodes
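
The cold/warm/hot classification can be pictured as a small promotion/demotion ladder. The rules below are invented purely for illustration (one step up when a peer behaves, one step down when it is unresponsive, straight to cold when it is faulty) and are not the node's actual policy; `PeerSet` and `reclassify` are hypothetical names.

```python
from enum import IntEnum


class PeerSet(IntEnum):
    COLD = 0
    WARM = 1
    HOT = 2


def reclassify(current: PeerSet, responsive: bool, faulty: bool) -> PeerSet:
    """Return the set a peer should move to after one round of observations."""
    if faulty:
        # Assumed rule: a misbehaving peer is dropped immediately
        return PeerSet.COLD
    if not responsive:
        # Assumed rule: an unresponsive peer is demoted by one level
        return PeerSet(max(current - 1, PeerSet.COLD))
    # Assumed rule: a well-behaved peer is promoted by one level
    return PeerSet(min(current + 1, PeerSet.HOT))
```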

- Network code can "transparently" provide pipelining on top of an existing protocol type thus speeding up delivery of messages and increasing throughput even with high latency.

- note that HTTP/2 provides multiplexing which is similar
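
A simple timing model shows why pipelining helps on high-latency links. It assumes every request costs one round trip plus a fixed server-side processing time, and that a pipelined client may have an unlimited number of requests in flight; both are simplifications, and the function names are hypothetical.

```python
def sequential_time(n: int, rtt: float, service: float) -> float:
    """Each request waits for the previous response: n full round trips."""
    return n * (rtt + service)


def pipelined_time(n: int, rtt: float, service: float) -> float:
    """Requests are sent back to back: one round trip plus the server's total work."""
    return rtt + n * service


# 100 requests over a 200ms link with 1ms of processing each:
# roughly 20.1s sequentially versus roughly 0.3s pipelined
slow = sequential_time(100, 0.2, 0.001)
fast = pipelined_time(100, 0.2, 0.001)
```

In this model the latency is paid once instead of once per request, so throughput becomes bounded by the server's processing time rather than by the round-trip time.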

- We could reuse the same networking layer for Hydra when interconnecting hydra nodes

- OTOH, given Hydra would be closer to applications than the mainchain and requires secured communication channels, it might make sense to reuse a more standard protocol, eg. HTTP with TLS

- The typed protocols seem very useful as a way to embed the details of the protocol in the type system without dealing with nitty-gritty details of actual communications

Looking at ouroboros-network protocol tests examples to understand how they are structured and how we could get inspiration to structure our own testing

- There's a `Direct` implementation in the tests which runs clients and servers in a pure setting: What for? Is this the actual "model" of the protocol? For example: https://github.com/input-output-hk/ouroboros-network/blob/master/ouroboros-network/protocol-tests/Ouroboros/Network/Protocol/BlockFetch/Direct.hs

- Prop tests are run through the `IOSim` monad but also using the IO monad, connecting peers through local pipes: https://github.com/input-output-hk/ouroboros-network/blob/master/ouroboros-network/protocol-tests/Ouroboros/Network/Protocol/BlockFetch/Test.hs#L304

- The `Network.TypedProtocols` stuff is very clever, indeed. Lots of interesting type-level hackery to mimic dependently-typed programming techniques, or so it seems? Question is: Why not encode it in a total language (eg. Coq?) and just generate the Haskell code if we want to state proofs about protocols?

  - prove things in the formal language

  - generate tests for any other languages, including Haskell

- `Snocket` type which represents different types of "sockets"

- `IOManager` is needed for Win32 to do async I/O operations but is a no-op on Unix systems

  - this runs an OS-level thread that's connected to a completion port and reads completion packets from a queue

  - lots of `#ifdef`/`#else`/`#endif` in the code to cope with Win32 stuff, wondering if having them in different modules with only CPP macros for imports would not make things clearer?

- There's a `demo` directory in `ouroboros-network-framework` that demonstrates how to tie the whole stuff together

- Looking at the `network-mux` package which is low-level stuff about multiplexing protocols over a single connection

  - each protocol is associated with 2 threads and channels for egress and ingress traffic

  - there's a pool of jobs created when starting a mux to handle all this stuff

  - this whole stuff is not trivial... I got the high-level idea but the details are rather complicated esp. as it seems to optimise starting threads on demand
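
The core idea of multiplexing several protocols over one connection can be sketched without any threads: tag each chunk with a protocol id and a length, then split the incoming byte stream back into one queue per protocol. The 4-byte header used here (16-bit protocol id, 16-bit payload length) is a simplification invented for this example, not the actual `network-mux` wire format, and the real package works with per-protocol threads and channels rather than a single loop.

```python
import struct
from collections import defaultdict

# Hypothetical header: protocol id and payload length, both unsigned 16-bit, big-endian
HEADER = struct.Struct("!HH")


def encode_frame(protocol_id: int, payload: bytes) -> bytes:
    """Prefix a payload with its protocol id and length."""
    return HEADER.pack(protocol_id, len(payload)) + payload


def demux(stream: bytes) -> dict:
    """Split a byte stream of concatenated frames into per-protocol ingress queues."""
    queues = defaultdict(list)
    offset = 0
    while offset < len(stream):
        protocol_id, length = HEADER.unpack_from(stream, offset)
        offset += HEADER.size
        queues[protocol_id].append(stream[offset:offset + length])
        offset += length
    return dict(queues)


# Frames of two protocols interleaved on the same connection
wire = encode_frame(2, b"a") + encode_frame(3, b"bb") + encode_frame(2, b"c")
ingress = demux(wire)  # {2: [b"a", b"c"], 3: [b"bb"]}
```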

The name of the Wireguard tool came up several times while discussing options for Hydra networking. While it does not seem to be the right fit for our case given it's a pretty low-level tool, the ideas it implements might be interesting. Here is a quick summary of what it is and how it works.

- Wireguard is a kernel-level VPN solution that aims to replace IPSec or OpenVPN: It provides a network interface abstraction (eg. `wgX` device), interconnecting peers through encrypted channels, which allows applications to securely connect to other parties

- Each wireguard interface is identified by a public key and communicates with peers through a defined UDP port. "Routes" to peers are established via a handshake protocol using Noise and are managed dynamically, associating their public keys with allowed (inner) source IPs

- Handshake serves to establish a symmetric key between 2 peers that is regularly rotated and is used to encrypt all encapsulated packets: When the `wgX` interface needs to send an IP packet, it encrypts and wraps it in a UDP packet before sending it to the peer.

  - Being UDP based means messages can be lost or reordered; wireguard maintains window-based message tracking logic for each peer so that reordered packets are still accepted while duplicated or replayed ones are rejected.

- Setting up and configuring wireguard is relatively straightforward and can all be done through config files

- It took me 10-15 minutes to configure a tunnel between my development VM and my laptop

- Wireguard can handle "roaming" usage transparently by allowing peers to change IP over time

- What's interesting in Wireguard's design is that:

- it's UDP based, hence does not require potentially expensive and cumbersome ptp connections

- it is simple

- it's flexible, allowing peers to be added/removed and topology of network to change

- it provides strong and efficient end-to-end encryption with state-of-the-art protocols

- I wonder if it could not form the basis of a flexible broadcasting infrastructure for Hydra networks
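
The window-based message tracking mentioned above can be sketched as a sliding window over packet counters: a packet is accepted if its counter is new and not too far behind the highest one seen so far. This is a generic anti-replay window written for illustration, not WireGuard's actual code; the class name and the window size of 64 are assumptions.

```python
class ReplayWindow:
    """Track which packet counters have been seen within a sliding window."""

    def __init__(self, size: int = 64):
        self.size = size
        self.highest = -1  # highest counter accepted so far
        self.bitmap = 0    # bit i set => counter (highest - i) was seen

    def accept(self, counter: int) -> bool:
        if counter > self.highest:
            # New highest counter: slide the window forward and mark it as seen
            shift = counter - self.highest
            self.bitmap = ((self.bitmap << shift) | 1) & ((1 << self.size) - 1)
            self.highest = counter
            return True
        offset = self.highest - counter
        if offset >= self.size:
            return False  # too old: fell out of the window
        if self.bitmap & (1 << offset):
            return False  # duplicate or replayed packet
        self.bitmap |= 1 << offset  # late but new: accept a reordered packet
        return True


window = ReplayWindow(size=4)
results = [window.accept(c) for c in (0, 2, 1, 1, 10, 6, 7)]
# counters 0, 2, 1 are accepted, the second 1 is a replay,
# 10 slides the window, 6 is now too old, 7 is still inside it
```

Note that such a window tolerates loss and reordering but does not retransmit anything: reliable delivery, if needed, is left to the protocols running inside the tunnel.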