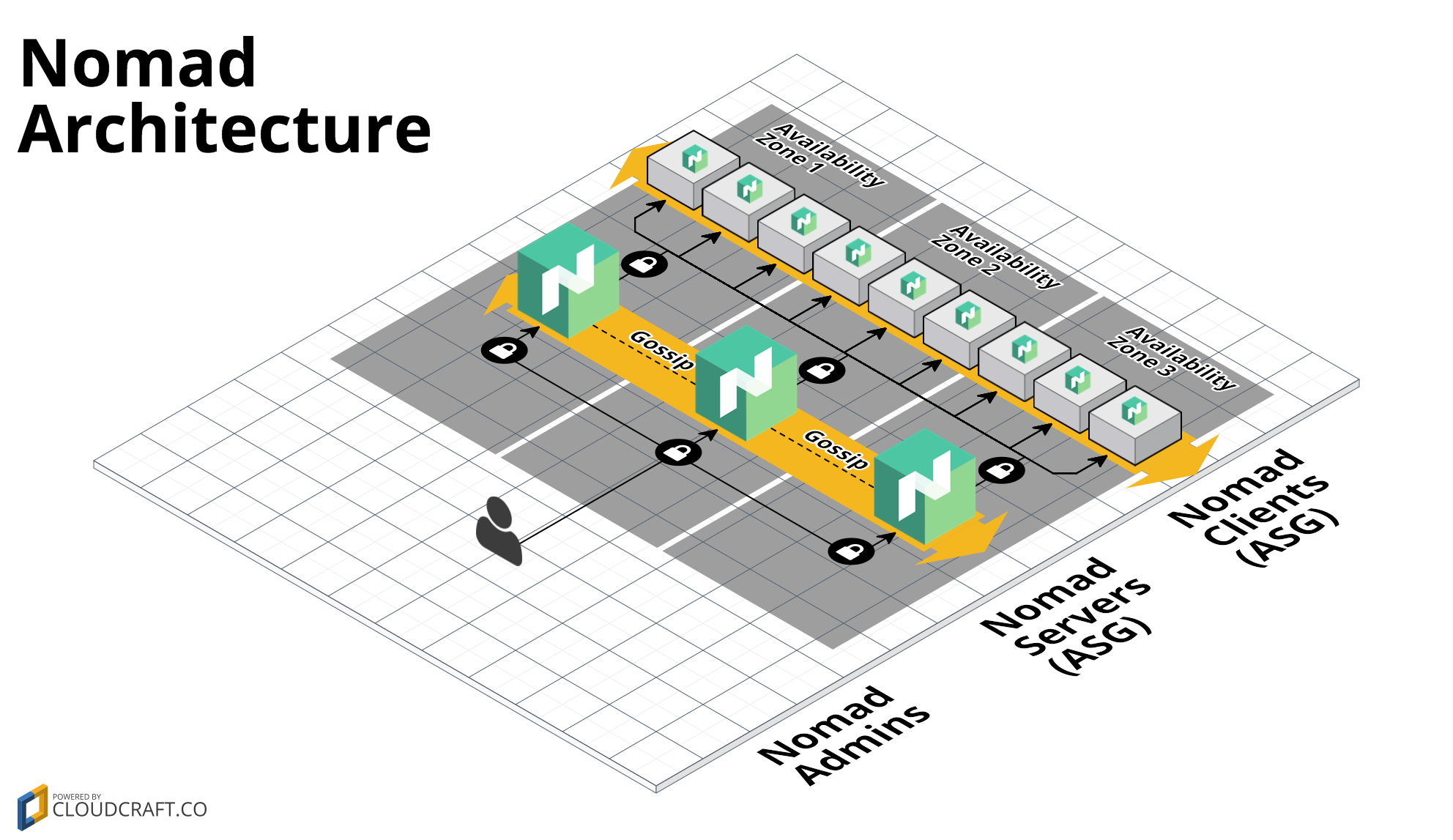

This repo contains a set of modules in the modules folder for deploying a Nomad cluster on AWS using Terraform. Nomad is a distributed, highly available, datacenter-aware scheduler. A Nomad cluster typically includes a small number of server nodes, which are responsible for being part of the consensus protocol, and a larger number of client nodes, which are used for running jobs.
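Nomad servers replicate cluster state using the Raft consensus protocol, which needs a strict majority (a quorum) of servers to keep working. The short Python sketch below is illustrative arithmetic, not code from this repo. It shows why small odd server counts such as 3 are the usual choice: adding a 4th server raises the quorum without letting the cluster survive any additional failures.

```python
from __future__ import annotations


def raft_fault_tolerance(num_servers: int) -> tuple[int, int]:
    """Return (quorum size, number of server failures tolerated) for a Raft cluster."""
    if num_servers < 1:
        raise ValueError("a cluster needs at least one server")
    quorum = num_servers // 2 + 1          # strict majority of the servers
    return quorum, num_servers - quorum    # servers that can fail while a quorum remains


for n in (1, 3, 4, 5):
    quorum, tolerated = raft_fault_tolerance(n)
    print(f"{n} servers: quorum={quorum}, tolerates {tolerated} failure(s)")
```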

This repo includes:

- install-nomad: This module can be used to install Nomad. It can be used in a Packer template to create a Nomad Amazon Machine Image (AMI).

- run-nomad: This module can be used to configure and run Nomad. It can be used in a User Data script to fire up Nomad while the server is booting.

- nomad-cluster: Terraform code to deploy a cluster of Nomad nodes (servers or clients) using an Auto Scaling Group.
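The install-nomad step can be pictured with a Python sketch that builds a Packer template in Packer's JSON template format. Beyond the install-nomad module name, everything here is an assumption for illustration: the GitHub URL of this repo, the script's path and its --version flag, and the builder settings (region, base AMI, instance type, and an Ubuntu SSH user). Check the examples folder for a maintained template.

```python
import json


def nomad_packer_template(nomad_version: str, module_ref: str, region: str, source_ami: str) -> dict:
    """Return a Packer JSON template (as a dict) that bakes Nomad into an AMI."""
    repo_url = "https://github.com/hashicorp/terraform-aws-nomad.git"  # assumed repo location
    return {
        "builders": [{
            "type": "amazon-ebs",
            "region": region,
            "source_ami": source_ami,      # assumed: an Ubuntu base AMI with git installed
            "instance_type": "t2.micro",
            "ssh_username": "ubuntu",
            # Packer expands {{timestamp}} so each build gets a unique AMI name.
            "ami_name": f"nomad-{nomad_version}-{{{{timestamp}}}}",
        }],
        "provisioners": [{
            "type": "shell",
            "inline": [
                # Fetch a pinned release of this repo...
                f"git clone --branch {module_ref} {repo_url} /tmp/terraform-aws-nomad",
                # ...then run the install-nomad script on the build instance (assumed path and flag).
                f"/tmp/terraform-aws-nomad/modules/install-nomad/install-nomad --version {nomad_version}",
            ],
        }],
    }


# Placeholder inputs: substitute a real Nomad version, repo tag, region, and base AMI ID.
template = nomad_packer_template("1.2.3", "v0.0.0", "us-east-1", "ami-00000000000000000")
print(json.dumps(template, indent=2))
```

Saving the printed JSON to a file gives a template you could pass to packer build once the placeholders are replaced.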

This repo has the following folder structure:

- modules: This folder contains several standalone, reusable, production-grade modules that you can use to deploy Nomad.

- examples: This folder shows examples of different ways to combine the modules in the modules folder to deploy Nomad.

- test: Automated tests for the modules and examples.

- root: The root folder is an example of how to use the nomad-cluster module to deploy a Nomad cluster in AWS. The Terraform Registry requires the root of every repo to contain Terraform code, so we've put one of the examples there. This example is great for learning and experimenting, but for production use, please use the underlying modules in the modules folder directly.

To run a production Nomad cluster, you need to deploy a small number of server nodes (typically 3), which are responsible for being part of the consensus protocol, and a larger number of client nodes, which are used for running jobs. You must also have a Consul cluster deployed (see the Consul AWS Module) in one of two configurations: Nomad and Consul colocated on the same nodes, or Nomad and Consul in separate clusters.

To deploy Nomad and Consul in the same cluster:

- Use the install-consul module from the Consul AWS Module and the install-nomad module from this Module in a Packer template to create an AMI with Consul and Nomad.

  If you are just experimenting with this Module, you may find it more convenient to use one of our official public AMIs.

  WARNING! Do NOT use these AMIs in your production setup. In production, you should build your own AMIs in your own AWS account.

- Deploy a small number of server nodes (typically 3) using the consul-cluster module. Execute the run-consul script and the run-nomad script on each node during boot, setting the --server flag in both scripts.

- Deploy as many client nodes as you need using the nomad-cluster module. Execute the run-consul script and the run-nomad script on each node during boot, setting the --client flag in both scripts.

Check out the nomad-consul-colocated-cluster example for working sample code.
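In the colocated setup, server and client nodes differ only in the role flag passed to both scripts. The Python sketch below renders such a User Data script for either role. It is illustrative only: the install paths under /opt, the --num-servers option on run-nomad, and the --cluster-tag-key/--cluster-tag-value discovery options on run-consul are assumptions, so confirm them against each script's documentation. In Terraform you would normally render this script with a template; Python is used here only to show the logic.

```python
from __future__ import annotations

import shlex

# Assumed install locations of the two run scripts on the AMI; adjust to match your build.
RUN_CONSUL = "/opt/consul/bin/run-consul"
RUN_NOMAD = "/opt/nomad/bin/run-nomad"


def colocated_user_data(role: str, consul_args: list[str], num_servers: int = 3) -> str:
    """Render a User Data script that starts Consul and Nomad in the same role."""
    if role not in ("server", "client"):
        raise ValueError(f"role must be 'server' or 'client', got {role!r}")
    flag = f"--{role}"
    consul_cmd = [RUN_CONSUL, flag, *consul_args]
    nomad_cmd = [RUN_NOMAD, flag]
    if role == "server":
        # Assumed option telling the Nomad servers how many peers to expect.
        nomad_cmd += ["--num-servers", str(num_servers)]
    return "\n".join([
        "#!/bin/bash",
        "set -e",                # stop the boot script at the first failing command
        shlex.join(consul_cmd),  # start the local Consul agent first...
        shlex.join(nomad_cmd),   # ...then Nomad, which can find its peers through Consul
        "",
    ])


# Hypothetical tag key and value used for Consul cluster discovery.
print(colocated_user_data("server", ["--cluster-tag-key", "nomad-servers", "--cluster-tag-value", "demo"]))
```

Client nodes would call colocated_user_data("client", ...) with the same Consul discovery arguments, so that their Consul agents join the same cluster as the servers.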

A Module is a canonical, reusable, best-practices definition for how to run a single piece of infrastructure, such as a database or server cluster. Each Module is created primarily using Terraform, includes automated tests, examples, and documentation, and is maintained both by the open source community and companies that provide commercial support.

Instead of having to figure out the details of how to run a piece of infrastructure from scratch, you can reuse existing code that has been proven in production. And instead of maintaining all that infrastructure code yourself, you can leverage the work of the Module community and maintainers, and pick up infrastructure improvements through a version number bump.

This Module is maintained by Gruntwork. If you're looking for help or commercial support, send an email to modules@gruntwork.io. Gruntwork can help with:

- Setup, customization, and support for this Module.

- Modules for other types of infrastructure, such as VPCs, Docker clusters, databases, and continuous integration.

- Modules that meet compliance requirements, such as HIPAA.

- Consulting & Training on AWS, Terraform, and DevOps.

To deploy Nomad and Consul in separate clusters:

- Deploy a standalone Consul cluster by following the instructions in the Consul AWS Module.

- Use the scripts from the install-nomad module in a Packer template to create a Nomad AMI.

- Deploy a small number of server nodes (typically 3) using the nomad-cluster module. Execute the run-nomad script on each node during boot, setting the --server flag. You will need to configure each node with the connection details for your standalone Consul cluster.

- Deploy as many client nodes as you need using the nomad-cluster module. Execute the run-nomad script on each node during boot, setting the --client flag.

Check out the nomad-consul-separate-cluster example for working sample code.
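With separate clusters, each Nomad node needs to know where Consul is. The Python sketch below renders a User Data script that writes a Nomad consul stanza and then runs run-nomad. The consul stanza and its address setting are real Nomad agent configuration, but Nomad's documentation describes address as the location of a local Consul agent, so many setups instead run a Consul client agent on every Nomad node and keep the default. The /opt paths, the assumption that Nomad loads every file in /opt/nomad/config, and the --num-servers option are hypothetical details to verify against the run-nomad documentation.

```python
from __future__ import annotations

import shlex

RUN_NOMAD = "/opt/nomad/bin/run-nomad"  # assumed install path of the run-nomad script
# Hypothetical extra config file; assumes Nomad loads every config file in /opt/nomad/config.
CONSUL_CONFIG_PATH = "/opt/nomad/config/consul.hcl"


def separate_user_data(role: str, consul_address: str, num_servers: int = 3) -> str:
    """Render a User Data script for a Nomad-only node that uses an external Consul cluster."""
    if role not in ("server", "client"):
        raise ValueError(f"role must be 'server' or 'client', got {role!r}")
    nomad_cmd = [RUN_NOMAD, f"--{role}"]
    if role == "server":
        nomad_cmd += ["--num-servers", str(num_servers)]  # assumed run-nomad option
    return "\n".join([
        "#!/bin/bash",
        "set -e",
        # Write Nomad's consul stanza before starting the agent so it is read at boot.
        f"cat > {CONSUL_CONFIG_PATH} <<'EOF'",
        "consul {",
        f'  address = "{consul_address}"',
        "}",
        "EOF",
        shlex.join(nomad_cmd),
        "",
    ])


print(separate_user_data("client", "10.0.1.10:8500"))  # hypothetical Consul HTTP address
```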

Contributions are very welcome! Check out the Contribution Guidelines for instructions.

This Module follows the principles of Semantic Versioning. You can find each new release, along with the changelog, on the Releases Page.

During initial development, the major version will be 0 (e.g., 0.x.y), which indicates the code does not yet have a stable API. Once we hit 1.0.0, we will make every effort to maintain a backwards-compatible API and use the MAJOR, MINOR, and PATCH versions on each release to indicate any incompatibilities.
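When you bump the version of this Module that you depend on, the version numbers tell you how careful to be. The small Python sketch below is not part of the Module. It assumes plain MAJOR.MINOR.PATCH tags (optionally prefixed with v). For 0.x.y releases it follows the common convention of treating a MINOR bump as potentially breaking, although strictly speaking Semantic Versioning allows anything to change before 1.0.0.

```python
from __future__ import annotations


def parse_version(version: str) -> tuple[int, int, int]:
    """Split a plain MAJOR.MINOR.PATCH string (no pre-release or build suffix)."""
    parts = version.lstrip("v").split(".")
    if len(parts) != 3:
        raise ValueError(f"expected MAJOR.MINOR.PATCH, got {version!r}")
    major, minor, patch = (int(p) for p in parts)
    return major, minor, patch


def may_break(current: str, target: str) -> bool:
    """Return True if moving from `current` to `target` may be backwards incompatible."""
    cur, tgt = parse_version(current), parse_version(target)
    if cur[0] == 0 or tgt[0] == 0:
        # 0.x.y: no stable API yet, so treat any MINOR change as potentially breaking.
        return cur[:2] != tgt[:2]
    # From 1.0.0 on, only a MAJOR change signals an incompatible API.
    return cur[0] != tgt[0]


print(may_break("0.1.2", "0.2.0"))  # True
```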

This code is released under the Apache 2.0 License. Please see LICENSE and NOTICE for more details.

Copyright © 2017 Gruntwork, Inc.