diff --git a/README.md b/README.md

index 6923d511..d8db745b 100644

--- a/README.md

+++ b/README.md

@@ -101,14 +101,14 @@ See a few examples of the README-AI customization options below:

-

+

--emojis --image custom --badge-color DE3163 --header-style compact --toc-style links

|

-

+

--image cloud --header-style compact --toc-style fold

|

@@ -148,21 +148,21 @@ See a few examples of the README-AI customization options below:

-

+

--image custom --badge-color 00ffe9 --badge-style flat-square --header-style classic

|

-

+

--image llm --badge-style plastic --header-style classic

|

-

+

--image custom --badge-color BA0098 --badge-style flat-square --header-style modern --toc-style fold

|

@@ -279,7 +279,7 @@ See the  +

+  |

Additional Sections

@@ -291,7 +291,7 @@ See the  |

+  |

diff --git a/docs/docs/cli_commands.md b/docs/docs/cli_commands.md

deleted file mode 100644

index 4fe507ba..00000000

--- a/docs/docs/cli_commands.md

+++ /dev/null

@@ -1,155 +0,0 @@

-## Command Line Interface

-

-## 🔧 Configuration

-

-Customize your README generation using these CLI options:

-

-| Option | Description | Default |

-|--------|-------------|---------|

-| `--align` | Text align in header | `center` |

-| `--api` | LLM API service (openai, ollama, offline) | `offline` |

-| `--badge-color` | Badge color name or hex code | `0080ff` |

-| `--badge-style` | Badge icon style type | `flat` |

-| `--base-url` | Base URL for the repository | `v1/chat/completions` |

-| `--context-window` | Maximum context window of the LLM API | `3999` |

-| `--emojis` | Adds emojis to the README header sections | `False` |

-| `--header-style` | Header style for the README file | `default` |

-| `--image` | Project logo image | `blue` |

-| `--model` | Specific LLM model to use | `gpt-3.5-turbo` |

-| `--output` | Output filename | `readme-ai.md` |

-| `--rate-limit` | Maximum API requests per minute | `5` |

-| `--repository` | Repository URL or local directory path | `None` |

-| `--temperature` | Creativity level for content generation | `0.9` |

-| `--top-p` | Probability of the top-p sampling method | `0.9` |

-| `--tree-depth` | Maximum depth of the directory tree structure | `2` |

-

-> [!TIP]

-> For a full list of options, run `readmeai --help` in your terminal.

-> See the official documentation for more details on [CLI options](https://eli64s.github.io/readme-ai/cli-options).

-

----

-

-### Badge Customization

-

-The `--badge-style` option lets you select the style of the default badge set.

-

-

-

- | Style |

- Preview |

-

-

- | default |

-     |

-

-

- | flat |

-  |

-

-

- | flat-square |

-  |

-

-

- | for-the-badge |

-  |

-

-

- | plastic |

-  |

-

-

- | skills |

-  |

-

-

- | skills-light |

-  |

-

-

- | social |

-  |

-

-

-

-When providing the `--badge-style` option, readme-ai does two things:

-

-1. Formats the default badge set to match the selection (i.e. flat, flat-square, etc.).

-2. Generates an additional badge set representing your projects dependencies and tech stack (i.e. Python, Docker, etc.)

-

-#### Example

->

-> ```sh

-> ❯ readmeai --badge-style flat-square --repository https://github.com/eli64s/readme-ai

-> ```

->

-

-#### Output

->

-> {... project logo ...}

->

-> {... project name ...}

->

-> {...project slogan...}

->

->  ->

->  ->

->  ->

->  ->

->

->

->

->

-> *Developed with the software and tools below.*

->

->  ->

->  ->

->  ->

->  ->

->  ->

->  ->

->

->  ->

->  ->

->  ->

->  ->

->  ->

->

->

->

->

-> {... end of header ...}

->

-

----

-

-### Project Logo

-

-Select a project logo using the `--image` option.

-

-

-

- | blue |

- gradient |

- black |

-

-

-  |

-  |

-  |

-

-

- | cloud |

- purple |

- grey |

-

-

-  |

-  |

-  |

-

-

-

-For custom images, see the following options:

-* Use `--image custom` to invoke a prompt to upload a local image file path or URL.

-* Use `--image llm` to generate a project logo using a LLM API (OpenAI only).

-

----

diff --git a/docs/docs/concepts.md b/docs/docs/concepts.md

deleted file mode 100644

index a524e070..00000000

--- a/docs/docs/concepts.md

+++ /dev/null

@@ -1,188 +0,0 @@

-

-

-# Concepts

-

-The readme-ai CLI tool provides several configurations that control how it operates and generates README.md files. We will explore these configurations and settings in detail in this document.

-

-## Core Components

-

-At the heart of the readme-ai CLI tool lies the main `AppConfig` class, responsible for storing all configuration settings related to the CLI. Additionally, there is a nested `AppConfigModel` class that extends Pydantic's `BaseModel` to add validation capabilities.

-

-### AppConfig

-

-The `AppConfig` class stores primary configuration categories, namely `cli`, `files`, `git`, `llm`, `md`, and `prompts`. Each category contains specific settings controlling various parts of the tool. Let's discuss each category individually.

-

-#### Cli

-

-The `cli` category holds boolean settings related to the CLI itself, primarily `emojis` and `offline`. The `emojis` flag determines whether emojis appear alongside the section titles within the README.md file. Meanwhile, activating the `offline` flag enables the generation of a README.md file without requiring API calls, providing placeholders where LLM-generated content would normally go.

-

-#### Files

-

-The `files` category manages various file paths used in the application. Specific properties include `dependency_files`, `identifiers`, `ignore_files`, `language_names`, `language_setup`, `output`, `shieldsio_icons`, and `skill_icons`. All these fields hold either absolute file paths or relative references to files managed by the application.

-

-#### Git

-

-The `git` category captures the necessary repository settings, ensuring correct interaction between the readme-ai CLI tool and the target repository. Properties consist of `repository`, `full_name`, `host`, `name`, and `source`. The latter three optional fields get populated during validation performed by the `RepositoryValidator` class.

-

-#### Llm

-

-The `llm` category houses settings pertinent to the LLM API employed by the tool. Fields like `content`, `endpoint`, `encoding`, `model`, `temperature`, `tokens`, `tokens_max`, and `rate_limit` define the behavior of the LLM API during execution.

-

-#### Md

-

-The `md` category groups together Markdown template blocks utilized in constructing the final README.md file. Examples include `align`, `default`, `badge_color`, `badge_style`, `badges_software`, `badges_shields`, `badges_skills`, `contribute`, `features`, `header`, `image`, `modules`, `modules_widget`, `overview`, `quickstart`, `slogan`, `tables`, `toc`, `tree`, among others.

-

-#### Prompts

-

-Finally, the `prompts` category maintains the prompt templates critical in generating appropriate text for the README.md file. Included fields cover `features`, `overview`, `slogan`, and `summaries`.

-

-### Config Helper

-

-The `ConfigHelper` class plays a crucial role as a helper module for managing extra configuration files beyond the primary `AppConfigModel`. Using a secondary `FileHandler` object, the `ConfigHelper` loads multiple configuration files simultaneously, merging them seamlessly into the existing configuration hierarchy.

-

-#### Dependency Files

-

-Dependency files referenced within the `dependency_files` property indicate external configuration files holding important values affecting the overall behavior of the readme-ai CLI tool. When present, the `ConfigHelper` consolidates these dependencies within a dedicated field called `dependency_files`.

-

-#### Ignore Files

-

-Similarly, ignore files listed in the `ignore_files` property specify particular patterns matching files to exclude during certain operations carried out by the readme-ai CLI tool. Analogous to the `dependency_files` treatment, `ignore_files` gets combined into a corresponding field within the `ConfigHelper` class.

-

-#### Language Names

-

-`language_names` consists of mappings associating specific programming languages with human-friendly labels presented in the README.md file. For instance, a label such as "Python" could correspond to the programming language identifier "py".

-

-#### Language Setup

-

-Lastly, `language_setup` comprises instructions describing how to initialize diverse environments supporting various programming languages. By combining these directives, developers can effortlessly prepare suitable platforms for executing code samples featured in the README.md file.

-

-## Validators

-

-Validators serve as specialized utility functions tasked with verifying consistency across distinct elements comprising the larger configuration schema. They perform checks ranging from presence confirmation to compatibility assessment, guaranteeing reliable interactions amongst interconnected entities.

-

-### RepositoryValidator

-

-One prominent validator is the `RepositoryValidator` class, responsible for examining fundamental attributes linked to a given repository. Notably, it ensures correct population of fields like `full_name`, `host`, `name`, and `source` depending on the supplied `repository` value. Furthermore, the `RepositoryValidator` performs mandatory sanity checks concerning the availability and accessibility of the designated repository prior to proceeding with subsequent stages of the configuration process.

-

-## Enums

-

-Enums, short for enumerations, constitute a collection of symbolic names representing distinct constant values. Within the readme-ai CLI tool, enums play an integral part in standardizing choices offered to end-users interacting with the CLI. Three notable enums stand out:

-

-### GitService

-

-`GitService` offers a concise means of specifying popular Git hosting services utilizing descriptive terms familiar to most developers. Supported options include Local, GitHub, GitLab, and Bitbucket.

-

-### BadgeOptions

-

-`BadgeOptions` presents a range of possible styles applicable to README.md file badges. Choices vary from plain representations to visually rich alternatives adorned with gradients and borders.

-

-### ImageOptions

-

-Lastly, `ImageOptions` delineates an array of plausible project logo candidates, facilitating easy selection of appealing visual assets accompanying the README.md file header. Users enjoy the convenience of choosing between preset imagery or supplying custom URLs pointing towards alternative logos.

-

----

diff --git a/docs/docs/configuration.md b/docs/docs/configuration.md

new file mode 100644

index 00000000..389b6693

--- /dev/null

+++ b/docs/docs/configuration.md

@@ -0,0 +1,65 @@

+# Configuration

+

+Readme-ai offers a wide range of configuration options to customize your README generation. This page provides a comprehensive list of all available options with detailed explanations.

+

+## CLI Options

+

+| Option | Description | Default | Impact |

+|--------|-------------|---------|--------|

+| `--align` | Text alignment in header | `center` | Affects the visual layout of the README header |

+| `--api` | LLM API service | `offline` | Determines which AI service is used for content generation |

+| `--badge-color` | Badge color (name or hex) | `0080ff` | Customizes the color of status badges in the README |

+| `--badge-style` | Badge icon style type | `flat` | Changes the visual style of status badges |

+| `--base-url` | Base URL for the LLM API | `v1/chat/completions` | Used for API requests to the chosen LLM service |

+| `--context-window` | Max context window of LLM API | `3999` | Limits the amount of context provided to the LLM |

+| `--emojis` | Add emojis to README sections | `False` | Adds visual flair to section headers |

+| `--header-style` | Header template style | `default` | Changes the overall look of the README header |

+| `--image` | Project logo image | `blue` | Sets the main image displayed in the README |

+| `--model` | Specific LLM model to use | `gpt-3.5-turbo` | Chooses the AI model for content generation |

+| `--output` | Output filename | `readme-ai.md` | Specifies the name of the generated README file |

+| `--rate-limit` | Max API requests per minute | `5` | Prevents exceeding API rate limits |

+| `--repository` | Repository URL or local path | `None` | Specifies the project to analyze |

+| `--temperature` | Creativity level for generation | `0.9` | Controls the randomness of the AI's output |

+| `--toc-style` | Table of contents style | `bullets` | Changes the format of the table of contents |

+| `--top-p` | Top-p sampling probability | `0.9` | Fine-tunes the AI's output diversity |

+| `--tree-depth` | Max depth of directory tree | `2` | Controls the detail level of the project structure |

+

+## Detailed Option Explanations

+

+### --api

+- Options: `openai`, `ollama`, `gemini`, `offline`

+- Impact: Determines the AI service used for generating README content. Each service has its own strengths and may produce slightly different results.

+

+### --badge-style

+- Options: `default`, `flat`, `flat-square`, `for-the-badge`, `plastic`, `skills`, `skills-light`, `social`

+- Impact: Changes the visual appearance of status badges in the README. Different styles can better match your project's aesthetic.

+

+### --header-style

+- Options: `default`, `classic`, `modern`, `compact`

+- Impact: Alters the layout and design of the README header, including how the project title, description, and badges are displayed.

+

+### --image

+- Options: `blue`, `gradient`, `black`, `cloud`, `purple`, `grey`, `custom`, `llm`

+- Impact: Sets the main visual element of your README. Using `custom` allows you to specify your own image, while `llm` generates an image using AI.

+

+### --model

+- Options vary by API service

+- Impact: Different models have varying capabilities and may produce different quality or style of content. Higher-tier models (e.g., GPT-4) generally produce better results but may be slower or more expensive.

+

+### --temperature

+- Range: 0.0 to 1.0

+- Impact: Lower values produce more focused and deterministic output, while higher values increase creativity and randomness.

+

+### --toc-style

+- Options: `bullets`, `numbers`, `fold`

+- Impact: Changes how the table of contents is formatted. The `fold` option creates a collapsible ToC, which can be useful for longer READMEs.

+

+## Best Practices

+

+1. **Start with defaults**: Begin with the default options and gradually customize to find the best fit for your project.

+2. **Match your project's style**: Use badge and header styles that complement your project's branding or purpose.

+3. **Balance detail and brevity**: Adjust `tree-depth` based on your project's complexity. Deeper trees provide more detail but can make the README lengthy.

+4. **Experiment with LLM settings**: Try different combinations of `temperature` and `top-p` to find the right balance of creativity and coherence.

+5. **Consider API usage**: If using paid API services, be mindful of `rate-limit` and choose models that balance quality and cost.

+

+By carefully configuring these options, you can generate READMEs that are not only informative but also visually appealing and perfectly tailored to your project's needs.
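
Note on `--temperature` and `--top-p` from the configuration page above: the following is a minimal sketch of what these two sampling settings conventionally mean, not readme-ai's or any provider's actual implementation. The function names and the example scores are hypothetical. Temperature rescales the model's raw scores before they become probabilities, and top-p keeps only the most likely tokens whose cumulative probability reaches the threshold. This sketch rejects a temperature of exactly 0, since it divides by that value.

```python
import math


def apply_temperature(logits, temperature):
    """Turn raw scores into probabilities; lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(s - peak) for s in scaled]
    total = sum(weights)
    return [w / total for w in weights]


def top_p_filter(probs, top_p):
    """Keep the smallest set of most likely tokens whose cumulative probability reaches top_p."""
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for index in ranked:
        kept.append(index)
        cumulative += probs[index]
        if cumulative >= top_p:
            break
    total = sum(probs[i] for i in kept)
    # Renormalize so the surviving probabilities sum to 1.
    return {i: probs[i] / total for i in kept}


logits = [2.0, 1.0, 0.5, 0.1]  # hypothetical scores for four candidate tokens
for temperature in (0.2, 0.9):
    probs = apply_temperature(logits, temperature)
    print(temperature, [round(p, 3) for p in probs])
print(top_p_filter(apply_temperature(logits, 0.9), top_p=0.9))
```

Running it shows the distribution concentrating on the top-scoring token as the temperature drops, which is why lower values read as more deterministic.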

diff --git a/docs/docs/examples.md b/docs/docs/examples.md

index 2d686197..6a67d9d9 100644

--- a/docs/docs/examples.md

+++ b/docs/docs/examples.md

@@ -1,22 +1,62 @@

+# Basic Usage Examples

-## 🎨 Examples

-

-| Language/Framework | Output File | Input Repository | Description |

-|--------------------|-------------|------------------|-------------|

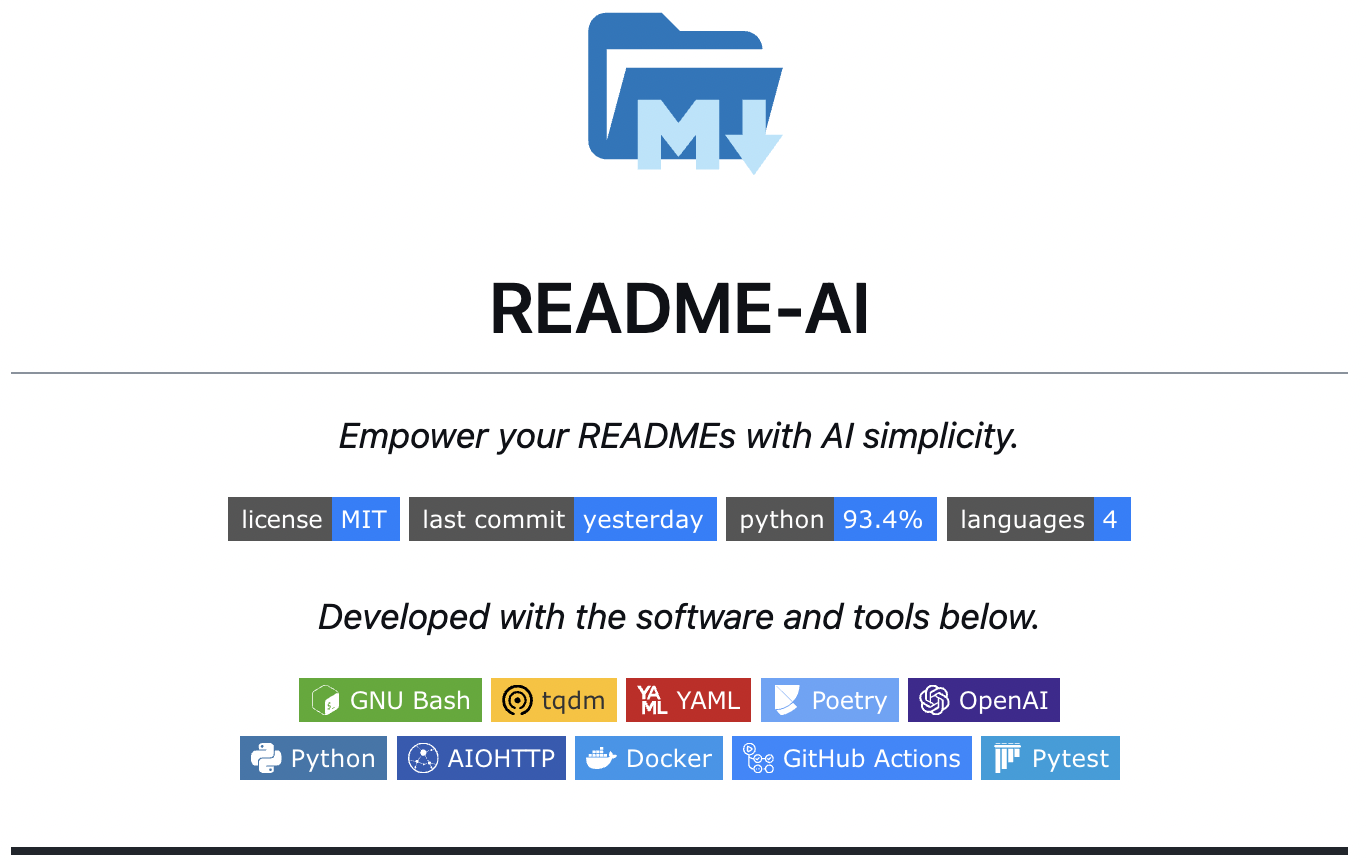

-| Python | [readme-python.md](https://github.com/eli64s/readme-ai/blob/main/examples/markdown/readme-python.md) | [readme-ai](https://github.com/eli64s/readme-ai) | Core readme-ai project |

-| Python (Gemini) | [readme-google-gemini.md](https://github.com/eli64s/readme-ai/blob/main/examples/markdown/readme-gemini.md) | [readme-ai](https://github.com/eli64s/readme-ai) | Using Google's Gemini model |

-| TypeScript & React | [readme-typescript.md](https://github.com/eli64s/readme-ai/blob/main/examples/markdown/readme-typescript.md) | [ChatGPT App](https://github.com/Yuberley/ChatGPT-App-React-Native-TypeScript) | React Native ChatGPT app |

-| PostgreSQL & DuckDB | [readme-postgres.md](https://github.com/eli64s/readme-ai/blob/main/examples/markdown/readme-postgres.md) | [Buenavista](https://github.com/jwills/buenavista) | Postgres proxy server |

-| Kotlin & Android | [readme-kotlin.md](https://github.com/eli64s/readme-ai/blob/main/examples/markdown/readme-kotlin.md) | [file.io Client](https://github.com/rumaan/file.io-Android-Client) | Android file sharing app |

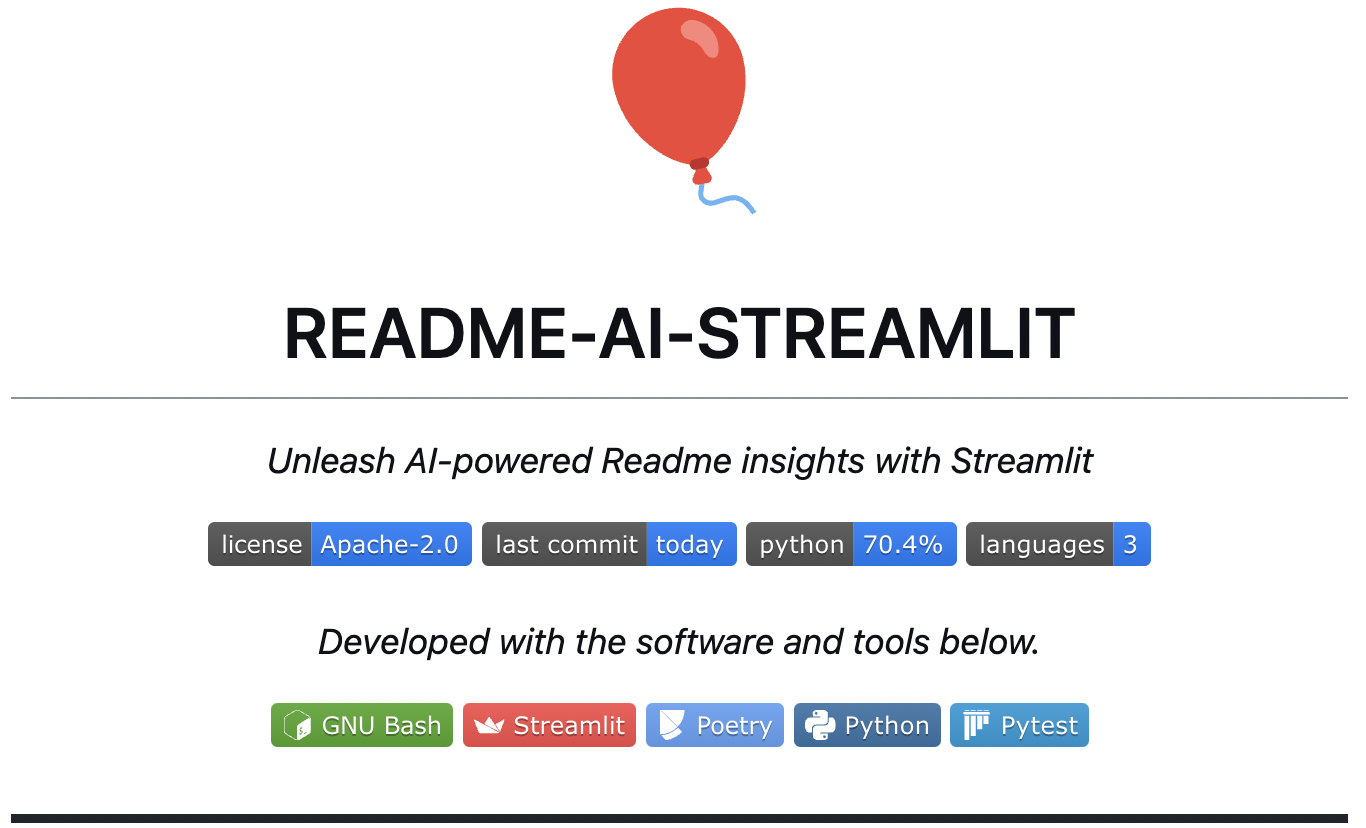

-| Python & Streamlit | [readme-streamlit.md](https://github.com/eli64s/readme-ai/blob/main/examples/markdown/readme-streamlit.md) | [readme-ai-streamlit](https://github.com/eli64s/readme-ai-streamlit) | Streamlit UI for readme-ai |

-| Rust & C | [readme-rust-c.md](https://github.com/eli64s/readme-ai/blob/main/examples/markdown/readme-rust-c.md) | [CallMon](https://github.com/DownWithUp/CallMon) | System call monitoring tool |

-| Go | [readme-go.md](https://github.com/eli64s/readme-ai/blob/main/examples/markdown/readme-go.md) | [docker-gs-ping](https://github.com/olliefr/docker-gs-ping) | Dockerized Go app |

-| Java | [readme-java.md](https://github.com/eli64s/readme-ai/blob/main/examples/markdown/readme-java.md) | [Minimal-Todo](https://github.com/avjinder/Minimal-Todo) | Minimalist todo app |

-| FastAPI & Redis | [readme-fastapi-redis.md](https://github.com/eli64s/readme-ai/blob/main/examples/markdown/readme-fastapi-redis.md) | [async-ml-inference](https://github.com/FerrariDG/async-ml-inference) | Async ML inference service |

-| Python & Jupyter | [readme-mlops.md](https://github.com/eli64s/readme-ai/blob/main/examples/markdown/readme-mlops.md) | [mlops-course](https://github.com/GokuMohandas/mlops-course) | MLOps course materials |

-| Flink & Python | [readme-local.md](https://github.com/eli64s/readme-ai/blob/main/examples/markdown/readme-local.md) | Local Directory | Example using local files |

-

-> [!NOTE]

-> See additional README file examples [here](https://github.com/eli64s/readme-ai/tree/main/examples/markdown).

-

----

+This page provides simple examples of using readme-ai with different LLM services and basic configurations.

+

+## Generate README with OpenAI

+

+```sh

+readmeai --repository https://github.com/username/project \

+ --api openai \

+ --model gpt-3.5-turbo

+```

+

+## Generate README with Ollama

+

+```sh

+readmeai --repository https://github.com/username/project \

+ --api ollama \

+ --model mistral

+```

+

+## Generate README with Google Gemini

+

+```sh

+readmeai --repository https://github.com/username/project \

+ --api gemini

+```

+

+## Generate README in Offline Mode

+

+```sh

+readmeai --repository https://github.com/username/project \

+ --api offline

+```

+

+## Customize Badge Style and Color

+

+```sh

+readmeai --repository https://github.com/username/project \

+ --api openai \

+ --badge-style flat-square \

+ --badge-color "#FF5733"

+```

+

+## Use Custom Project Logo

+

+```sh

+readmeai --repository https://github.com/username/project \

+ --api openai \

+ --image custom

+```

+

+When prompted, enter the path or URL to your custom logo image.

+

+## Generate README with Emojis

+

+```sh

+readmeai --repository https://github.com/username/project \

+ --api openai \

+ --emojis

+```

+

+These examples demonstrate basic usage of readme-ai. For more advanced configurations and options, see the [Advanced Configurations](advanced-configurations.md) page.
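
Note on the examples above: when the same invocations need to be scripted, the command can be assembled programmatically. The sketch below is an illustration only. `build_readmeai_command` is a hypothetical helper, not part of readme-ai, and it uses only flags that appear in the examples above (`--repository`, `--api`, `--badge-style`, `--badge-color`, `--emojis`).

```python
import shlex


def build_readmeai_command(repository, api="offline", **options):
    """Assemble an argument list for the readmeai CLI (hypothetical helper)."""
    command = ["readmeai", "--repository", repository, "--api", api]
    for name, value in options.items():
        flag = "--" + name.replace("_", "-")
        if value is True:
            command.append(flag)  # boolean switch such as --emojis
        elif value is not False and value is not None:
            command.extend([flag, str(value)])
    return command


command = build_readmeai_command(
    "https://github.com/username/project",
    api="openai",
    badge_style="flat-square",
    badge_color="#FF5733",
    emojis=True,
)
# shlex.join quotes arguments such as the hex color so the line is safe to paste into a shell.
print(shlex.join(command))
```

Assuming readmeai is installed, the resulting list can be passed to `subprocess.run(command, check=True)`; keeping it as a list avoids shell-quoting problems with values such as `#FF5733`.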

diff --git a/docs/docs/features.md b/docs/docs/features.md

deleted file mode 100644

index 9ea6e79d..00000000

--- a/docs/docs/features.md

+++ /dev/null

@@ -1,198 +0,0 @@

----

-title: Lorem ipsum dolor sit amet

----

-

-# Features

-

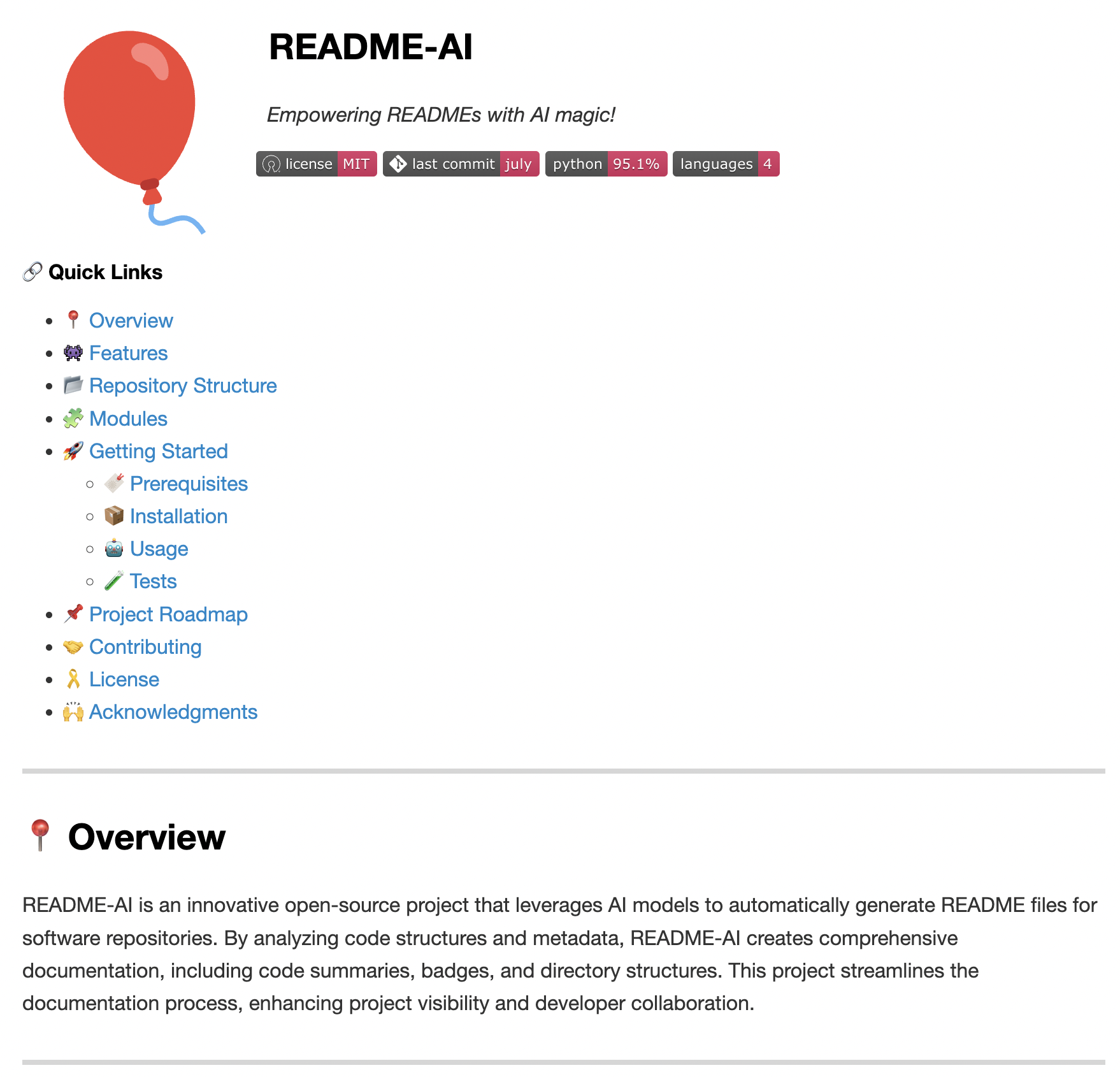

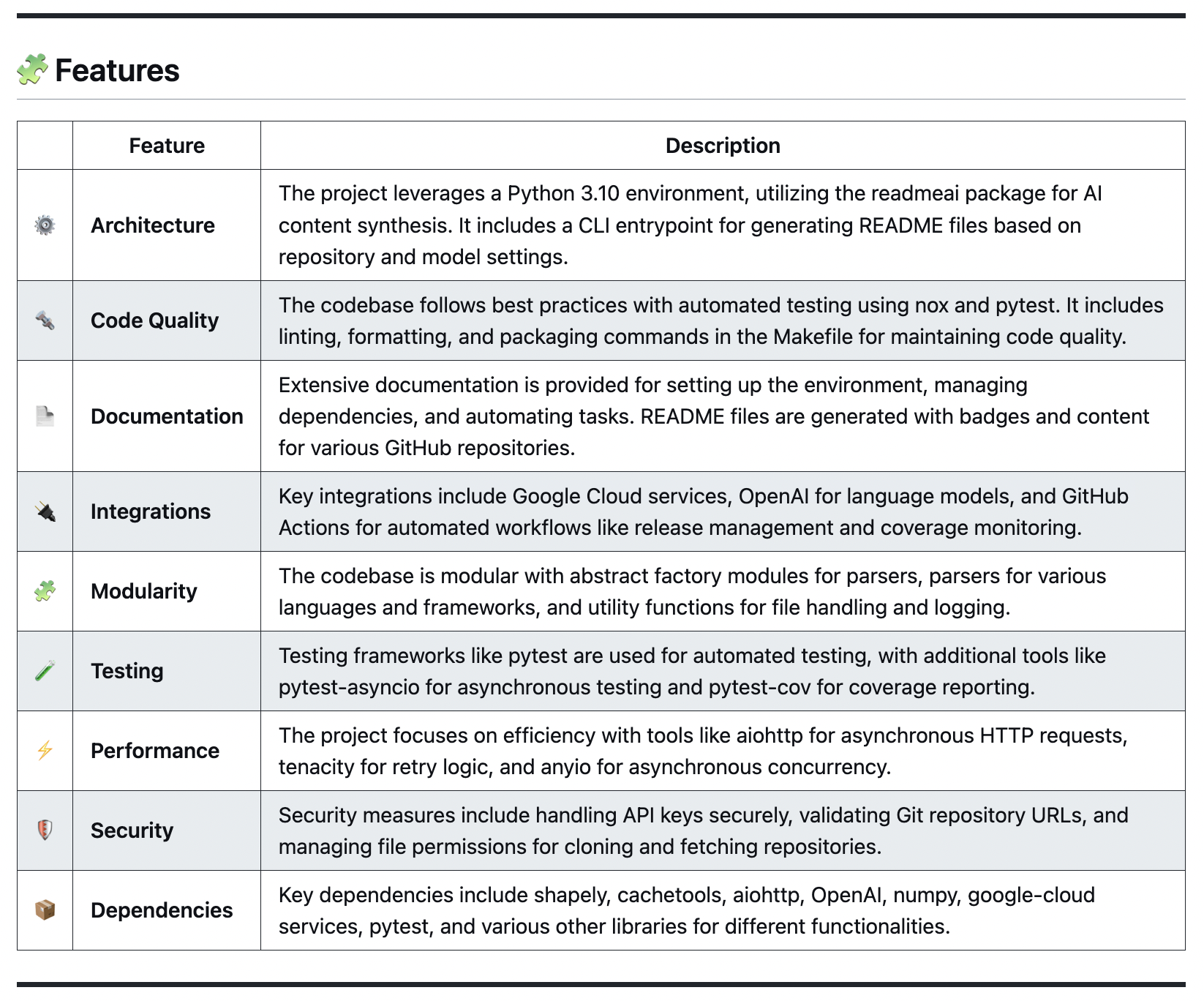

-Built with flexibility in mind, readme-ai allows users to customize various aspects of the README using CLI options and configuration settings. Content is generated using a combination of data extraction and making a few calls to LLM APIs.

-

-Currently, readme-ai uses generative ai to create four sections of the README file.

-

-* **Header**: Project slogan that describes the repository in an engaging way.

-* **Overview**: Provides an intro to the project's core use-case and value proposition.

-* **Features**: Markdown table containing details about the project's technical components.

-* **Modules**: Codebase file summaries are generated and formatted into markdown tables.

-

-All other content is extracted from processing and analyzing repository metadata and files.

-

----

-

-## Customizable Header

-

-The header section is built using repository metadata and CLI options. Key features include:

-- **Badges**: Svg icons that represent codebase metadata, provided by [shields.io](https://shields.io/) and [skill-icons](https://github.com/tandpfun/skill-icons).

-- **Project Logo**: Select a project logo image from the base set or provide your image.

-- **Project Slogan**: Catch phrase that describes the project, generated by generative ai.

-- **Table of Contents/Quick Links**: Links to the different sections of the README file.

-

-See a few examples headers generated by *readme-ai* below.

-

-

-

-

-

- default output (no options provided to cli)

- |

-

-

-

-

-

- --align left --badges flat-square --image cloud

- |

-

-

- --align left --badges flat --image gradient

- |

-

-

-

-

-

- --badges flat --image custom

- |

-

-

- --badges skills-light --image grey

- |

-

-

-

-

-

- --badges flat-square

- |

-

-

- --badges flat --image black

- |

-

-

-

-See the Configuration section below for the complete list of CLI options and settings.

-

-## Codebase Summaries

-

----

-

-

-

-

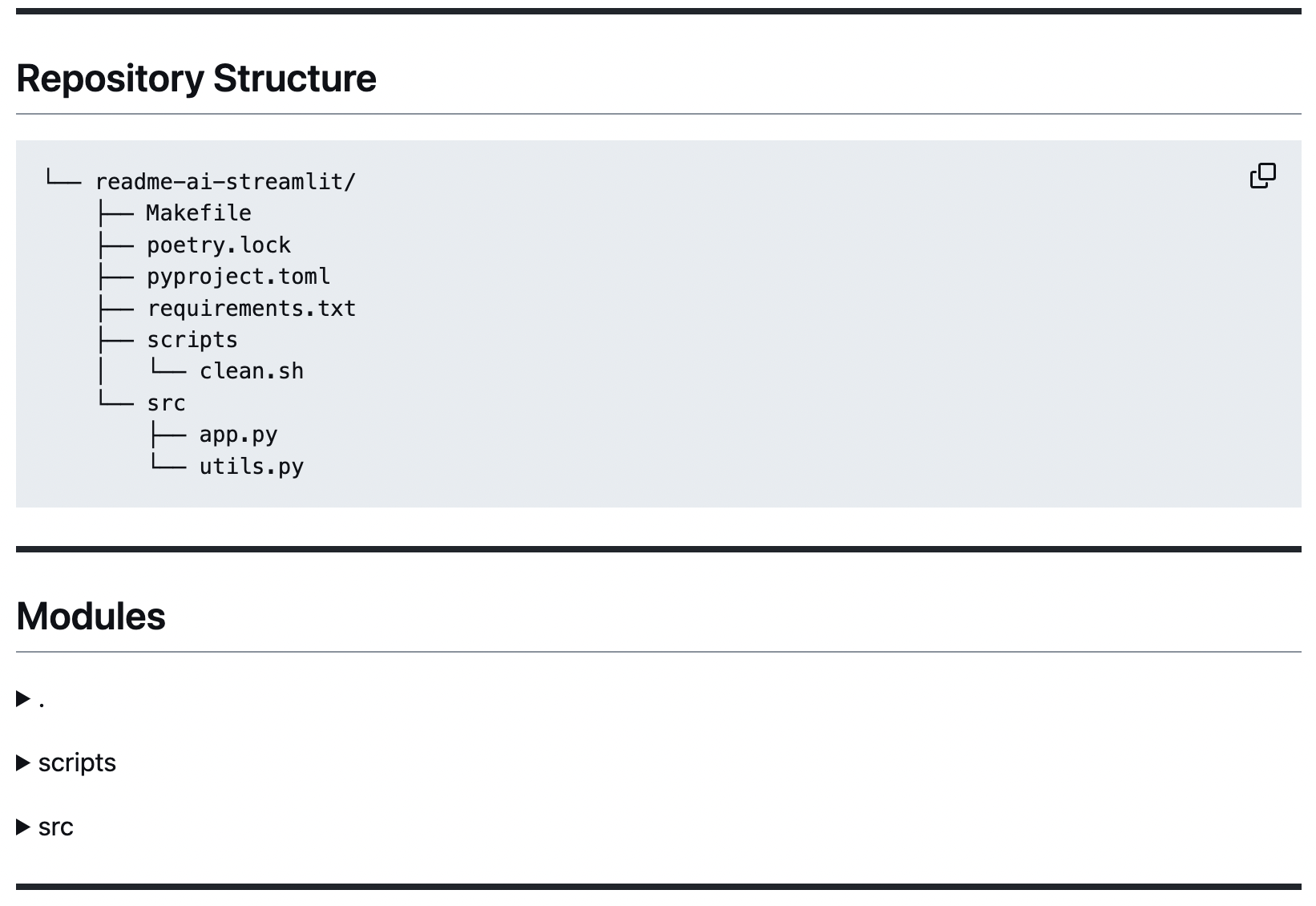

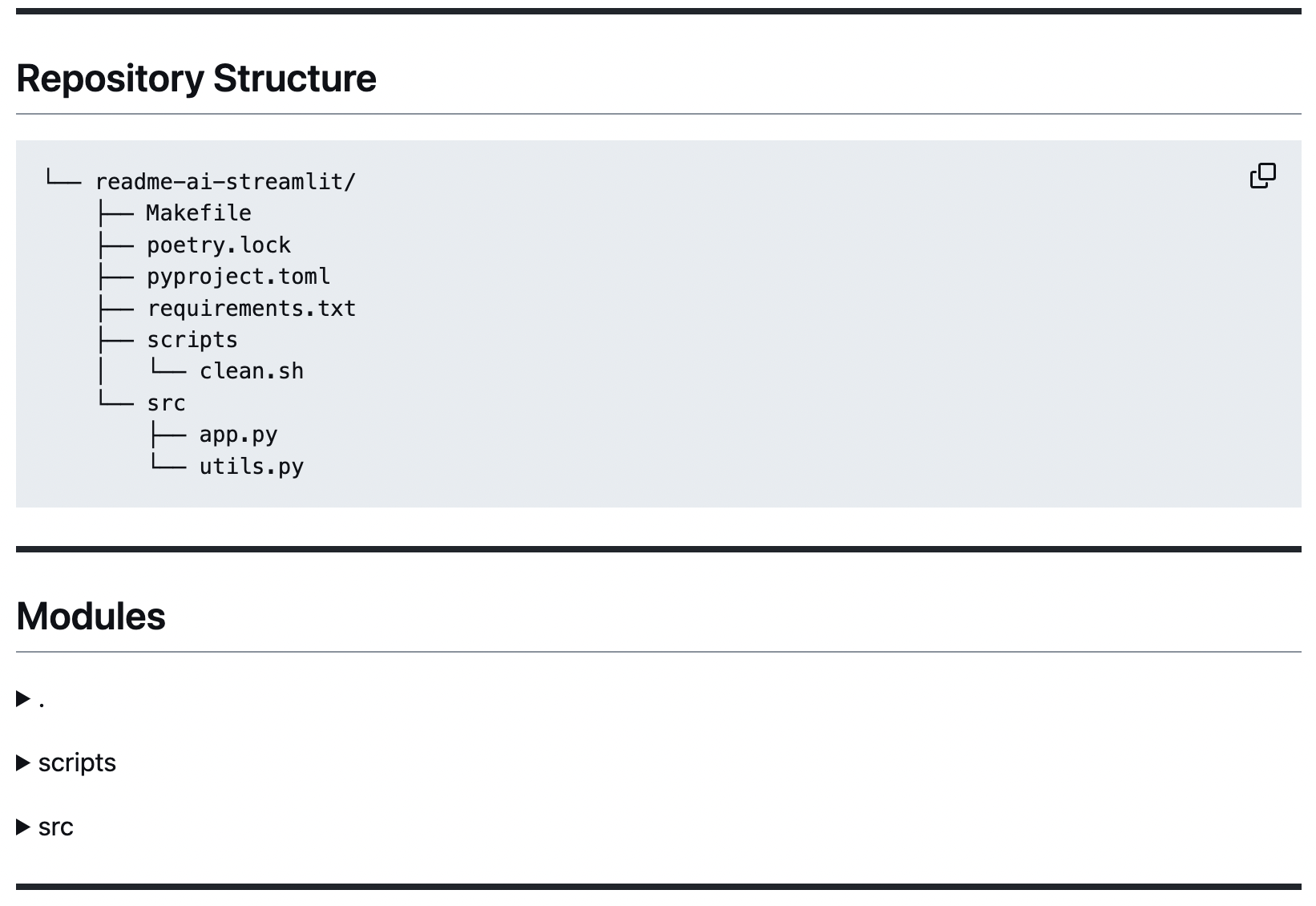

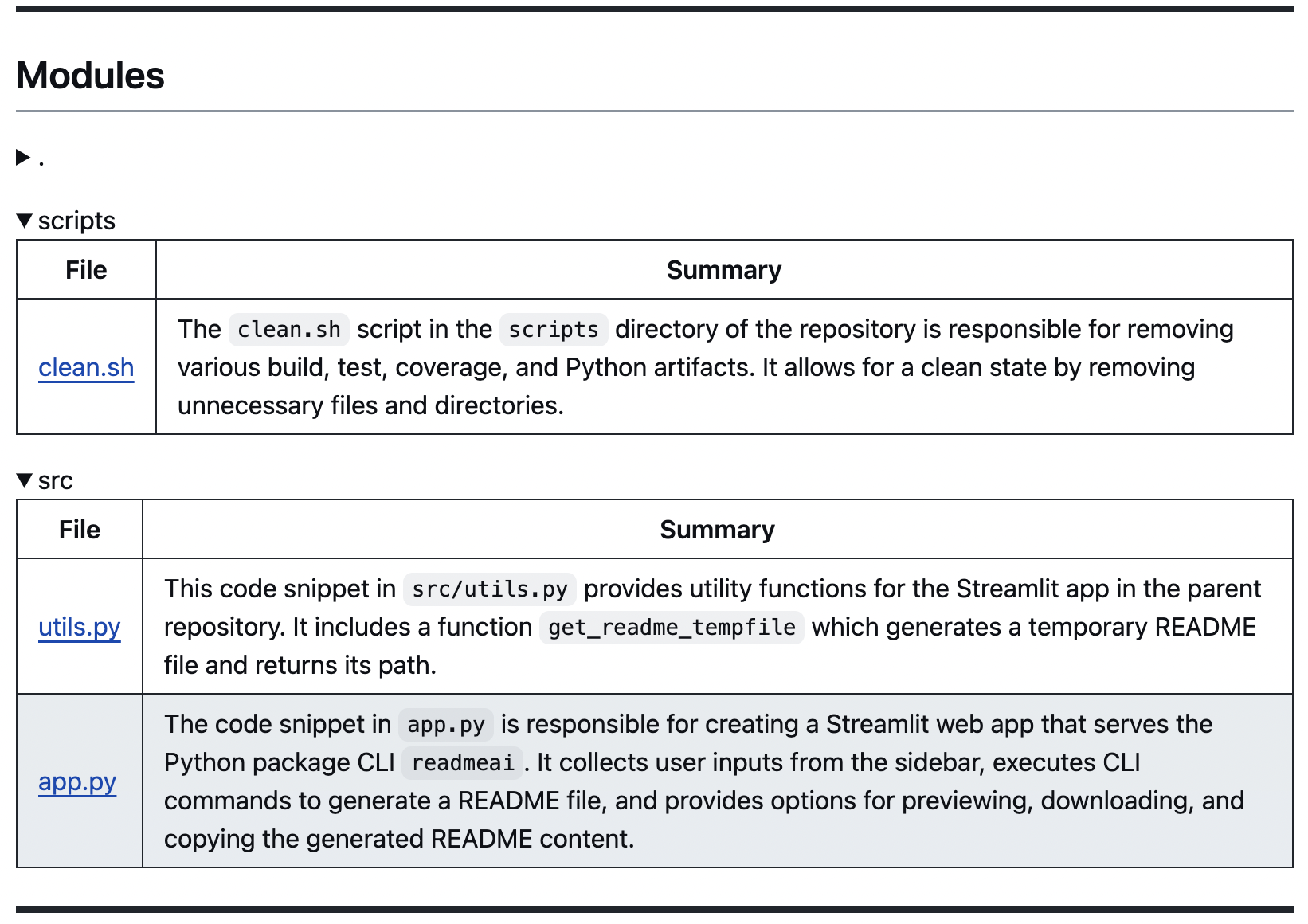

- Repository Structure

- A directory tree structure is created and displayed in the README. Implemented using pure Python (tree.py).

- |

-

-

-

-  -

- |

-

-

-

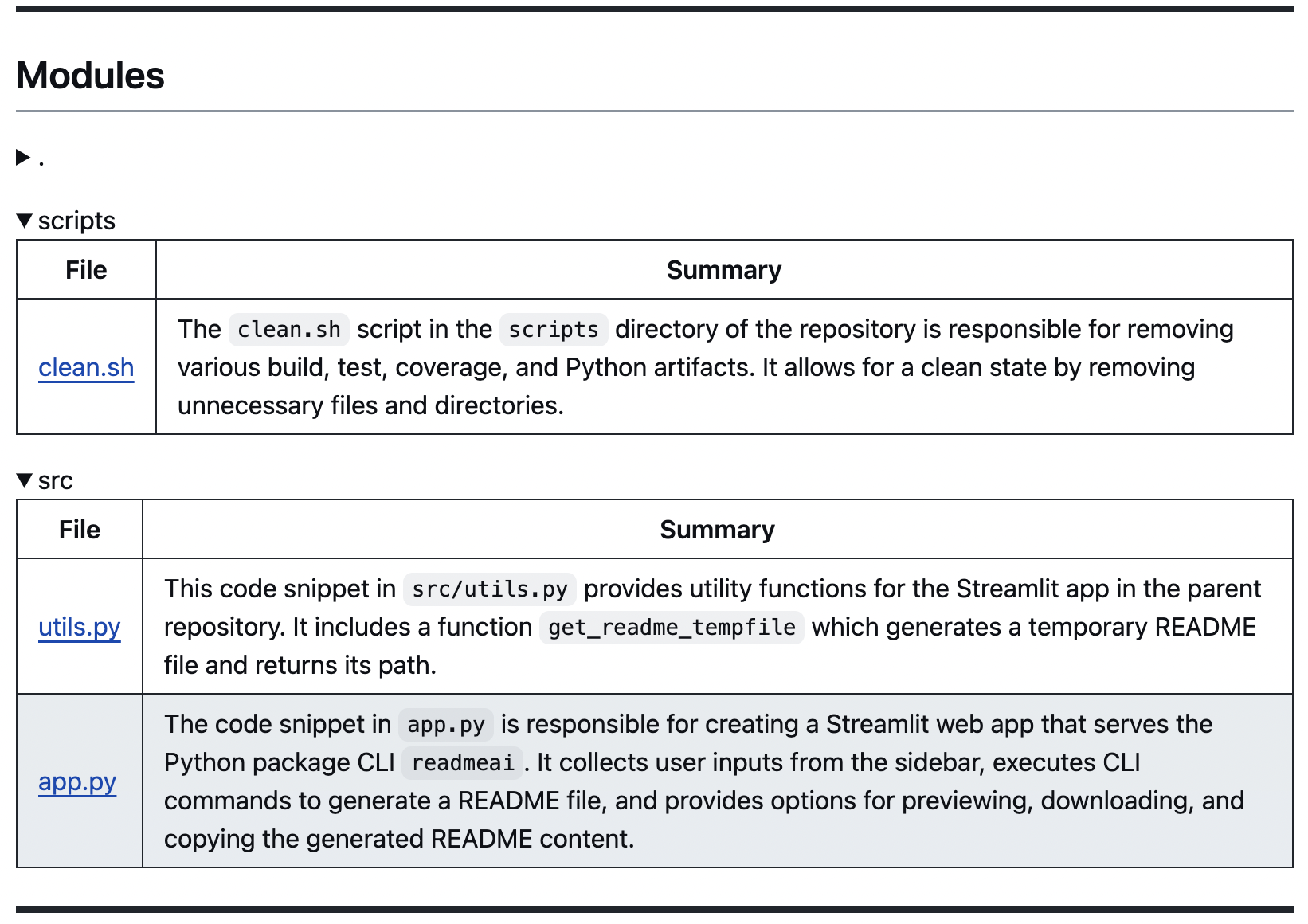

- Codebase Summaries

-

- File summaries generated using LLM APIs, and are formatted and grouped by directory in markdown tables.

- |

-

-

-

-  -

- |

-

-

-

----

-

-## Overview and Features

-

-The overview and features sections are generated using OpenAI's API. Structured prompt templates are injected with repository metadata to help produce more accurate and relevant content.

-

-

-

- Overview

- High-level introduction of the project, focused on the value proposition and use-cases, rather than technical aspects.

- |

-

-

-  |

-

-

- Features Table

- Describes technical components of the codebase, including architecture, dependencies, testing, integrations, and more.

- |

-

-

-  |

-

-

-

----

-

-## Quick Start

-

-

-

- Getting Started or Quick Start

- Generates structured guides for installing, running, and testing your project. These steps are created by identifying dependencies and languages used in the codebase, and mapping this data to configuration files such as the language_setup.toml file.

- |

-

-

-  -

- |

-

-

-

----

-

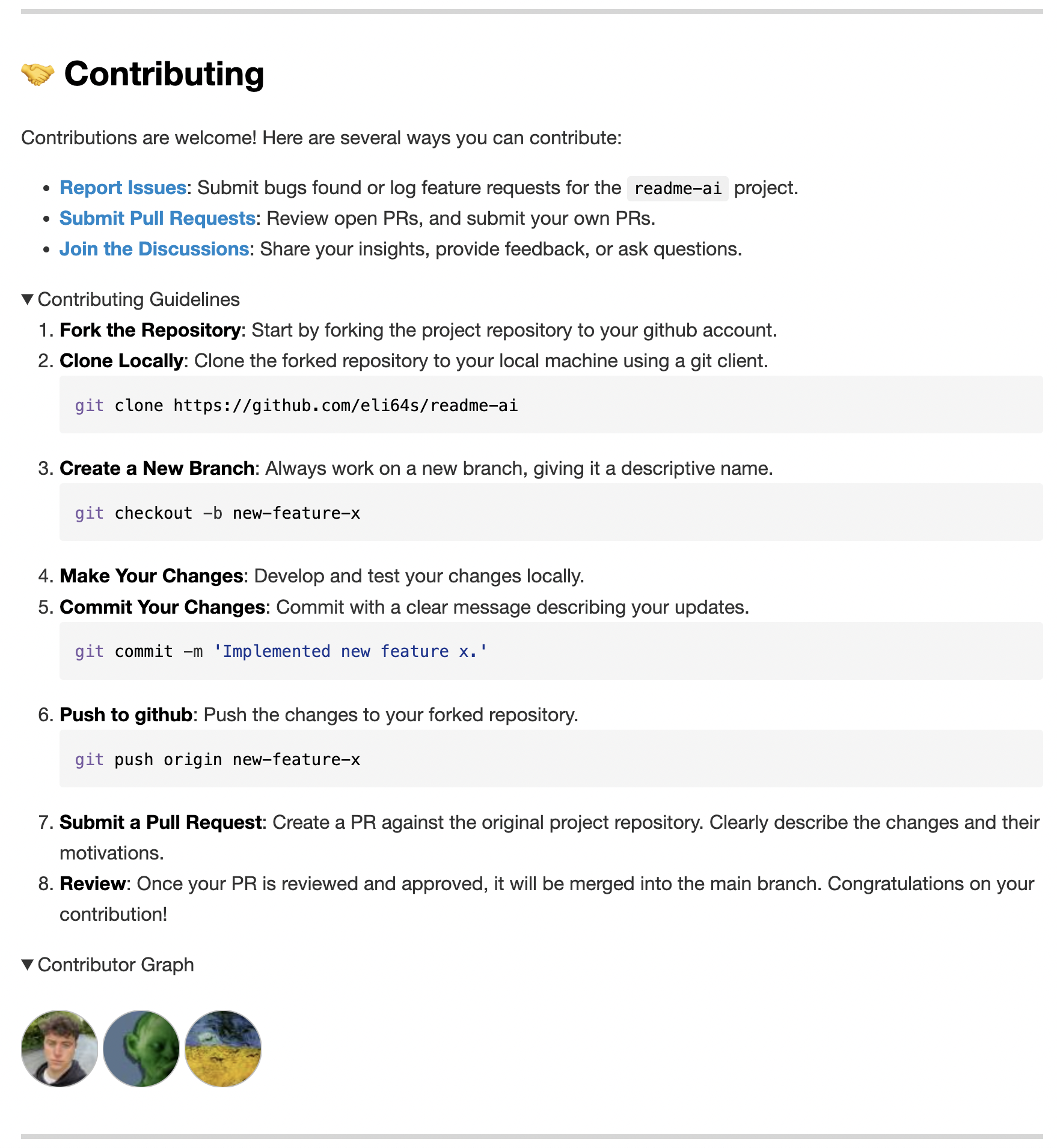

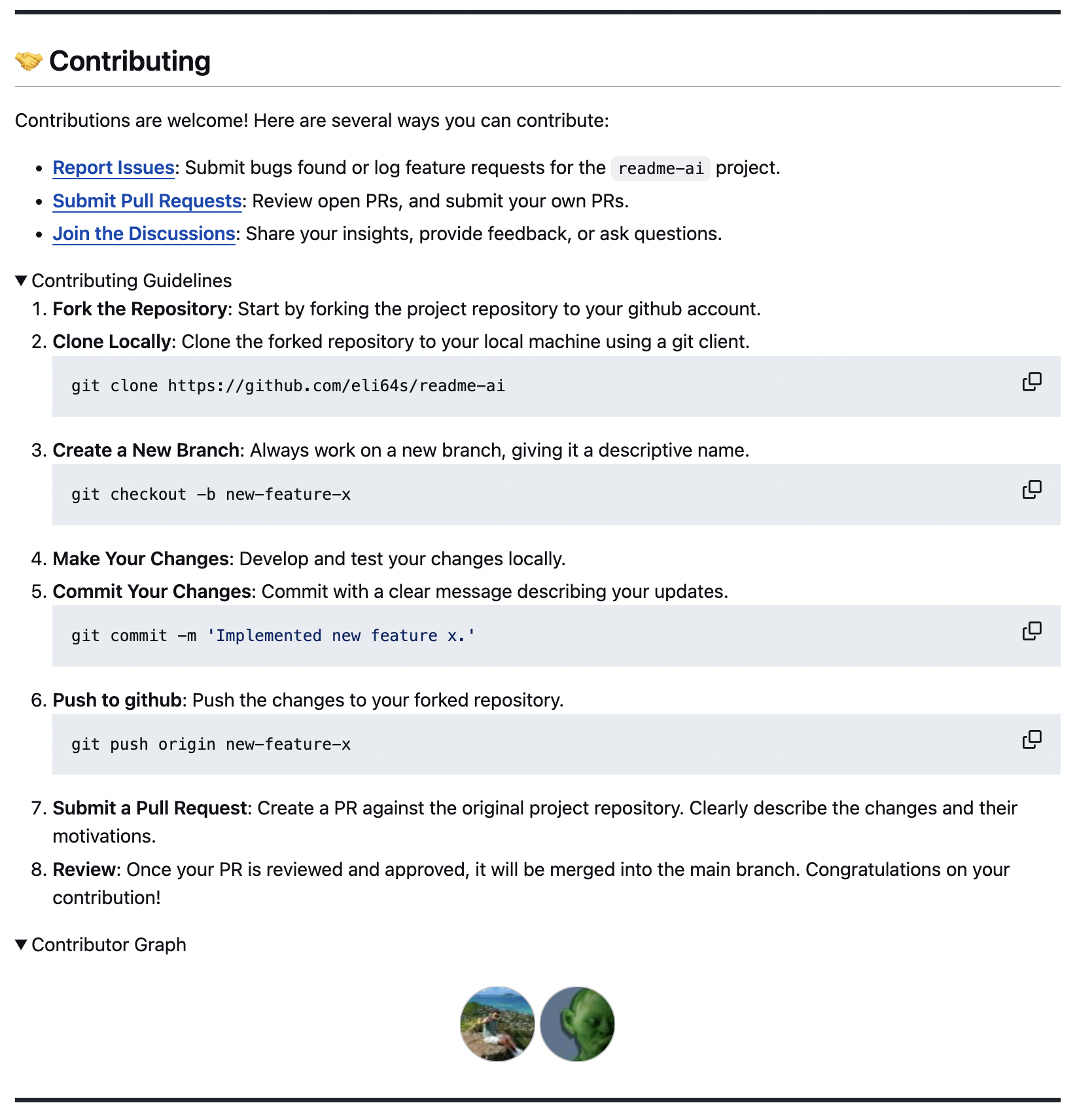

-## Contributing Guidelines & More

-

-

-

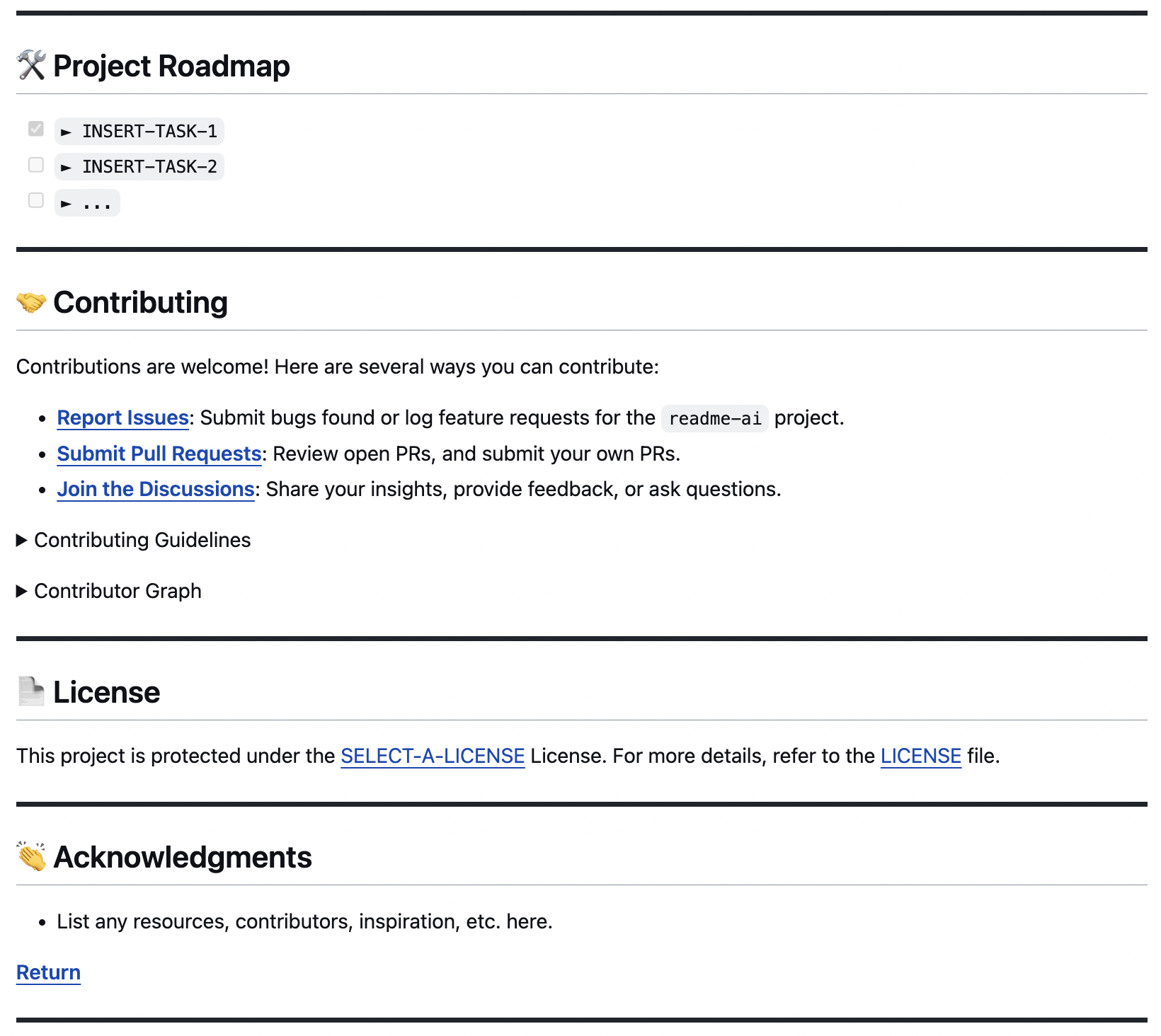

- Additional Sections

- The remaining README sections are built from a baseline template that includes common sections such as Project Roadmap, Contributing Guidelines, License, and Acknowledgements.

- |

-

-

-  |

-

-

- Contributing Guidelines

- The contributing guidelines has a dropdown that outlines a general process for contributing to your project.

- |

-

-

-  |

-

-

-

----

-

-## Template READMEs

-

-This feature is currently under development. The template system will allow users to generate README files in different flavors, such as ai, data, web development, etc.

-

-

-

-

- README Template for ML & Data

-

- - Overview: Project objectives, scope, outcomes.

- - Project Structure: Organization and components.

- - Data Preprocessing: Data sources and methods.

- - Feature Engineering: Impact on model performance.

- - Model Architecture: Selection and development strategies.

- - Training: Procedures, tuning, strategies.

- - Testing and Evaluation: Results, analysis, benchmarks.

- - Deployment: System integration, APIs.

- - Usage and Maintenance: User guide, model upkeep.

- - Results and Discussion: Implications, future work.

- - Ethical Considerations: Ethics, privacy, fairness.

- - Contributing: Contribution guidelines.

- - Acknowledgements: Credits, resources used.

- - License: Usage rights, restrictions.

-

- |

-

-

-

----

diff --git a/docs/docs/how_it_works.md b/docs/docs/how_it_works.md

deleted file mode 100644

index 714cc053..00000000

--- a/docs/docs/how_it_works.md

+++ /dev/null

@@ -1,17 +0,0 @@

-The steps below outline the process of generating a README using the CLI.

-

-1. The user provides a GitHub repository URL to the CLI.

-2. The input is sanitized and validated.

-3. The codebase is cloned into the temporary directory.

-4. Each file in the codebase is parsed and metadata is extracted.

-5. The metadata is injected into prompt templates and sent to OpenAI's API.

-6. The generated content is injected into markdown code blocks and written to the README.

-

-

-The diagram below shows the high-level architecture of the project.

-

-

-

-

-  -

-  -

-

-README-AI

-

- Automated README file generator, powered by LLM APIs

-

-

-

-  -

-

-

-

-

-  -

-

-

-

-

-  -

-

-

-

-

-  -

-

-

-

-

-

- Objective

-

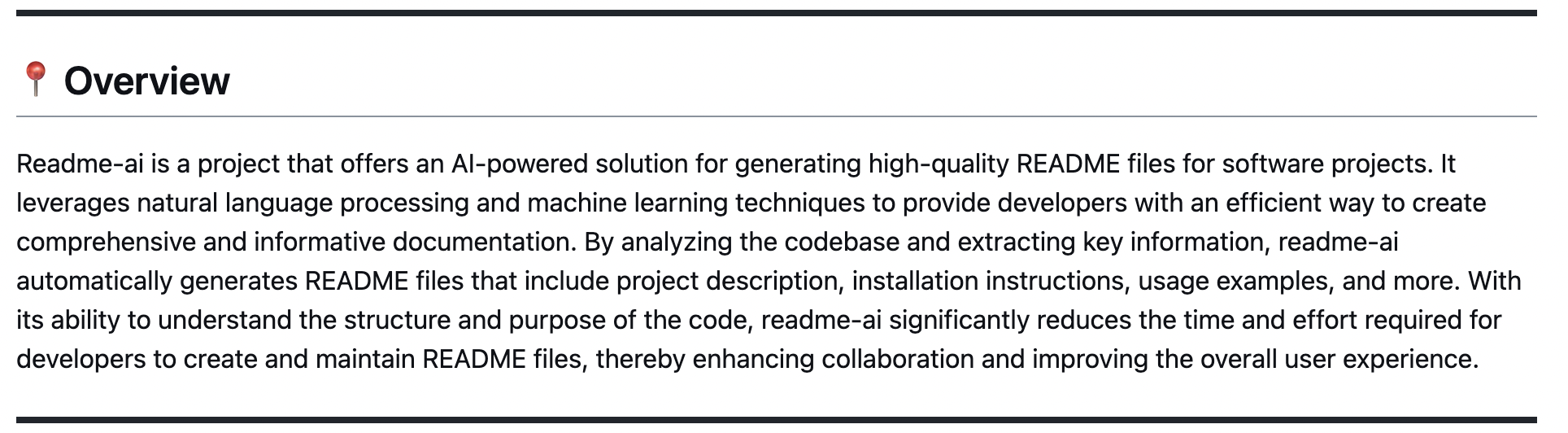

- Readme-ai is a developer tool that auto-generates README.md files using a combination of data extraction and generative ai. Simply provide a repository URL or local path to your codebase and a well-structured and detailed README file will be generated for you.

-

-

- Motivation

-

- Streamlines documentation creation and maintenance, enhancing developer productivity. This project aims to enable all skill levels, across all domains, to better understand, use, and contribute to open-source software.

-

+# Welcome to Readme-ai

+

+Readme-ai is an automated README file generator powered by large language model APIs. It streamlines documentation creation and maintenance, enhancing developer productivity across all skill levels and domains.

+

+## Quick Links

+

+- [Installation](installation.md)

+- [Usage](usage.md)

+- [Features](features/overview.md)

+- [Examples](examples/basic-usage.md)

+- [Contributing](contributing/guidelines.md)

+

+## Key Features

+

+- **Flexible README Generation**: Robust repository context extraction combined with generative AI.

+- **Multiple LLM Support**: Compatible with OpenAI, Ollama, Google Gemini, and Offline Mode.

+- **Customizable Output**: CLI options for styling, badges, header designs, and more.

+- **Language Agnostic**: Works with a wide range of programming languages and project types.

+- **Offline Mode**: Generate a boilerplate README without calling an external API.

---

+

+For more information on getting started, check out our [Installation](installation.md) and [Usage](usage.md) guides.

diff --git a/docs/docs/installation.md b/docs/docs/installation.md

index 059bacf1..1bb9eb98 100644

--- a/docs/docs/installation.md

+++ b/docs/docs/installation.md

@@ -1,63 +1,56 @@

-#### Using `pip`

-

-> [](https://pypi.org/project/readmeai/)

->

-> ```sh

-> pip install readmeai

-> ```

-

-#### Using `docker`

-

-> [](https://hub.docker.com/r/zeroxeli/readme-ai)

->

-> ```sh

-> docker pull zeroxeli/readme-ai:latest

-> ```

-

-#### Using `conda`

-

-> [](https://anaconda.org/zeroxeli/readmeai)

->

-> ```sh

-> conda install -c conda-forge readmeai

-> ```

-

-

-

- From source

-

-

-> Clone repository and change directory.

->

-> ```console

-> $ git clone https://github.com/eli64s/readme-ai

->

-> $ cd readme-ai

-> ```

-

-#### Using `bash`

->

-> [](https://www.gnu.org/software/bash/)

->

-> ```console

-> $ bash setup/setup.sh

-> ```

-

-#### Using `poetry`

-> [](https://python-poetry.org/)

->

-> ```console

-> $ poetry install

-> ```

-

-* Similiary you can use `pipenv` or `pip` to install the requirements.txt.

-

-

-

-> [!TIP]

->

-> [](https://pipxproject.github.io/pipx/installation/)

->

-> Use [pipx](https://pipx.pypa.io/stable/installation/) to install and run Python command-line applications without causing dependency conflicts with other packages installed on the system.

-

----

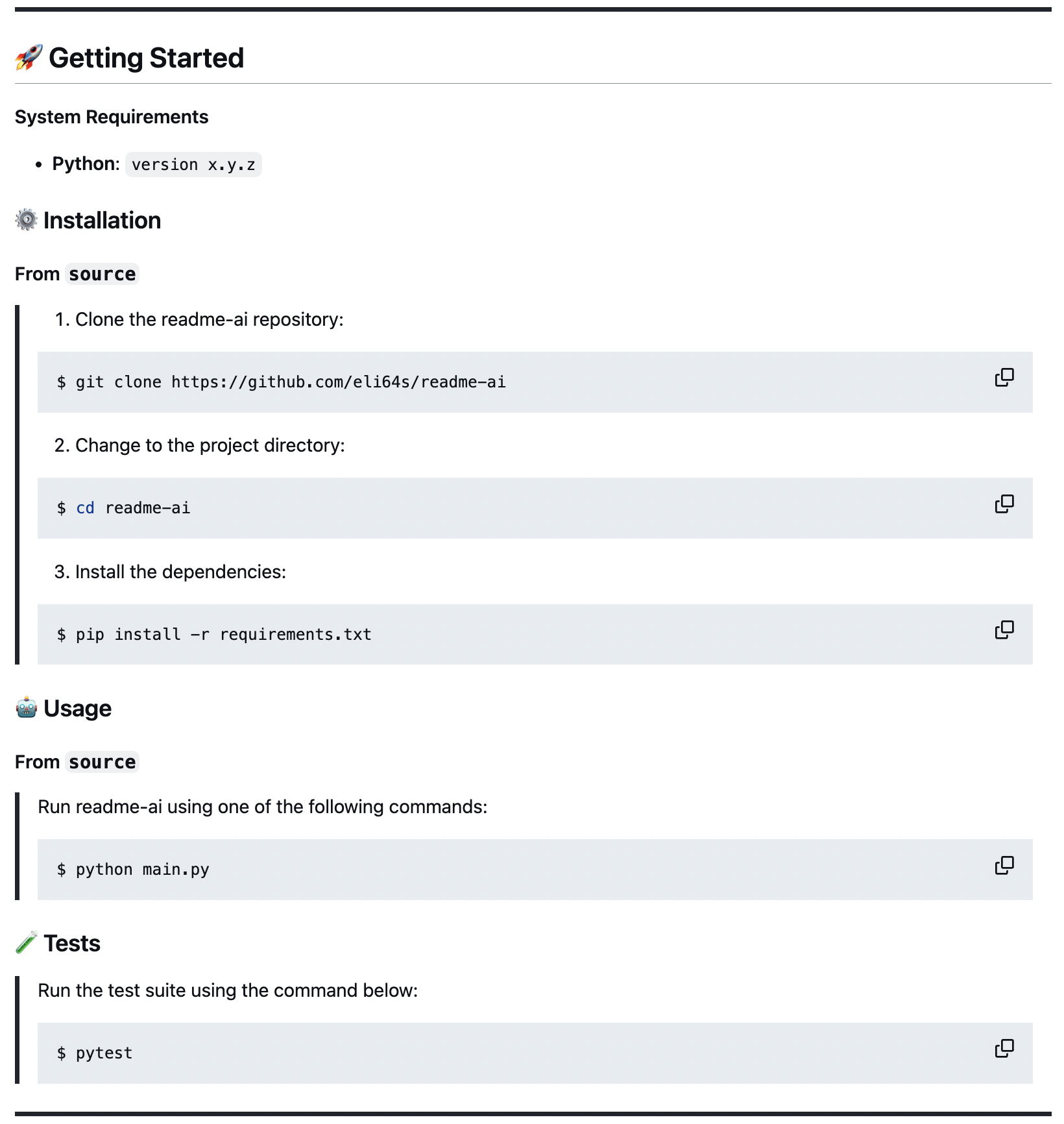

+# Installation

+

+Readme-ai can be installed using several methods. Choose the one that best fits your workflow.

+

+## Prerequisites

+

+- Python 3.9+

+- A package manager or container runtime: `pip`, `pipx`, or `docker`

+

+## Using pip

+

+```sh

+pip install readmeai

+```

+

+## Using pipx

+

+[pipx](https://pipx.pypa.io/stable/installation/) is recommended for installing Python CLI applications because it keeps each tool in its own isolated environment:

+

+```sh

+pipx install readmeai

+```

+

+## Using Docker

+

+```sh

+docker pull zeroxeli/readme-ai:latest

+```

+

+## From source

+

+1. Clone the repository:

+ ```sh

+ git clone https://github.com/eli64s/readme-ai

+ cd readme-ai

+ ```

+

+2. Install using one of the following methods:

+

+ Using bash:

+ ```sh

+ bash setup/setup.sh

+ ```

+

+ Using poetry:

+ ```sh

+ poetry install

+ ```

+

+After installation, verify that readme-ai is correctly installed by running:

+

+```sh

+readmeai --version

+```

+

+For usage instructions, see the [Usage](usage.md) guide.
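
Note on the verification step above: besides running `readmeai --version`, the installed package can be checked from Python. This is a small sketch using the standard library's `importlib.metadata`. It assumes the package was installed with `pip` into the interpreter running the script; a `pipx` or Docker installation lives in a separate environment and would not be visible here. `installed_version` is a hypothetical helper name.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(package):
    """Return the installed version of a distribution, or None if it is absent."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


found = installed_version("readmeai")
print(f"readmeai {found}" if found else "readmeai is not installed in this environment")
```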

diff --git a/docs/docs/llm-integrations.md b/docs/docs/llm-integrations.md

new file mode 100644

index 00000000..44e69163

--- /dev/null

+++ b/docs/docs/llm-integrations.md

@@ -0,0 +1,88 @@

+# LLM Integration

+

+Readme-ai integrates seamlessly with various Large Language Model (LLM) services to generate high-quality README content. This page details the supported LLM services and how to use them effectively.

+

+## Supported LLM Services

+

+### 1. OpenAI

+

+OpenAI's GPT models are known for their versatility and high-quality text generation.

+

+#### Configuration:

+```sh

+readmeai --api openai --model gpt-3.5-turbo

+```

+

+#### Available Models:

+- `gpt-3.5-turbo`

+- `gpt-4`

+- `gpt-4-turbo`

+

+#### Best Practices:

+- Use `gpt-3.5-turbo` for faster generation and lower costs.

+- Use `gpt-4` or `gpt-4-turbo` for more complex projects or when you need higher accuracy.

+

+### 2. Ollama

+

+Ollama provides locally-run, open-source language models.

+

+#### Configuration:

+```sh

+readmeai --api ollama --model llama2

+```

+

+#### Available Models:

+- `llama2`

+- `mistral`

+- `gemma`

+

+#### Best Practices:

+- Ensure Ollama is running locally before using this option.

+- Ollama models run locally, so repository contents are not sent to an external service; generation speed depends on your hardware.

+

+### 3. Google Gemini

+

+Google's Gemini models offer strong performance across a wide range of tasks.

+

+#### Configuration:

+```sh

+readmeai --api gemini --model gemini-pro

+```

+

+#### Available Models:

+- `gemini-pro`

+

+#### Best Practices:

+- Gemini models excel at understanding context and generating coherent text.

+- Ensure you have the necessary API credentials set up.

+

+### 4. Offline Mode

+

+Offline mode generates a basic README structure without using any LLM service.

+

+#### Configuration:

+```sh

+readmeai --api offline

+```

+

+#### Best Practices:

+- Use offline mode for quick boilerplate generation or when you don't have internet access.

+- Customize the generated README manually after generation.

+

+## Comparing LLM Services

+

+| Service | Pros | Cons |

+|---------|------|------|

+| OpenAI | High-quality output, Versatile | Requires API key, Costs associated |

+| Ollama | Free, Privacy-focused, Offline | May be slower, Requires local setup |

+| Gemini | Strong performance, Google integration | Requires API key |

+| Offline | No internet required, Fast | Basic output, Limited customization |

+

+## Tips for Optimal Results

+

+1. **Experiment with different models**: Try various LLM services and models to find the best fit for your project.

+2. **Provide clear context**: Ensure your repository has well-organized code and documentation to help the LLM generate more accurate content.

+3. **Fine-tune with CLI options**: Use readme-ai's CLI options to customize the output further after choosing your LLM service.

+4. **Review and edit**: Always review the generated README and make necessary edits to ensure accuracy and relevance to your project.

+

+By leveraging these LLM integrations effectively, you can generate comprehensive and accurate README files for your projects with minimal effort.
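The page above asks readers to have API credentials set up before choosing a hosted service. A minimal sketch of a pre-flight check follows, assuming `OPENAI_API_KEY` (the variable named in the earlier usage docs) for OpenAI and `GOOGLE_API_KEY` for Gemini. The Gemini variable name and both helper functions are illustrative assumptions, not part of readme-ai itself.

```sh
#!/bin/sh
# Hypothetical helpers: neither function ships with readme-ai.

# Map a readme-ai --api value to the environment variable holding its key.
api_key_var() {
    case "$1" in
        openai) echo "OPENAI_API_KEY" ;;   # named in the earlier usage docs
        gemini) echo "GOOGLE_API_KEY" ;;   # assumed variable name
        ollama|offline) echo "" ;;         # assumed to need no hosted API key
        *) return 1 ;;
    esac
}

# Return 0 if the service needs no key or its key variable is non-empty,
# 1 if the key is missing, 2 if the service name is not recognized.
check_api_key() {
    var=$(api_key_var "$1") || { echo "unknown service: $1" >&2; return 2; }
    if [ -z "$var" ]; then
        return 0
    fi
    eval "val=\${$var:-}"
    if [ -z "$val" ]; then
        echo "missing $var for --api $1" >&2
        return 1
    fi
    return 0
}
```

One possible use is to guard the real command, for example `check_api_key openai && readmeai --repository . --api openai`, so a missing key fails fast with a clear message instead of partway through generation.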

diff --git a/docs/docs/overview.md b/docs/docs/overview.md

new file mode 100644

index 00000000..53edf10e

--- /dev/null

+++ b/docs/docs/overview.md

@@ -0,0 +1,31 @@

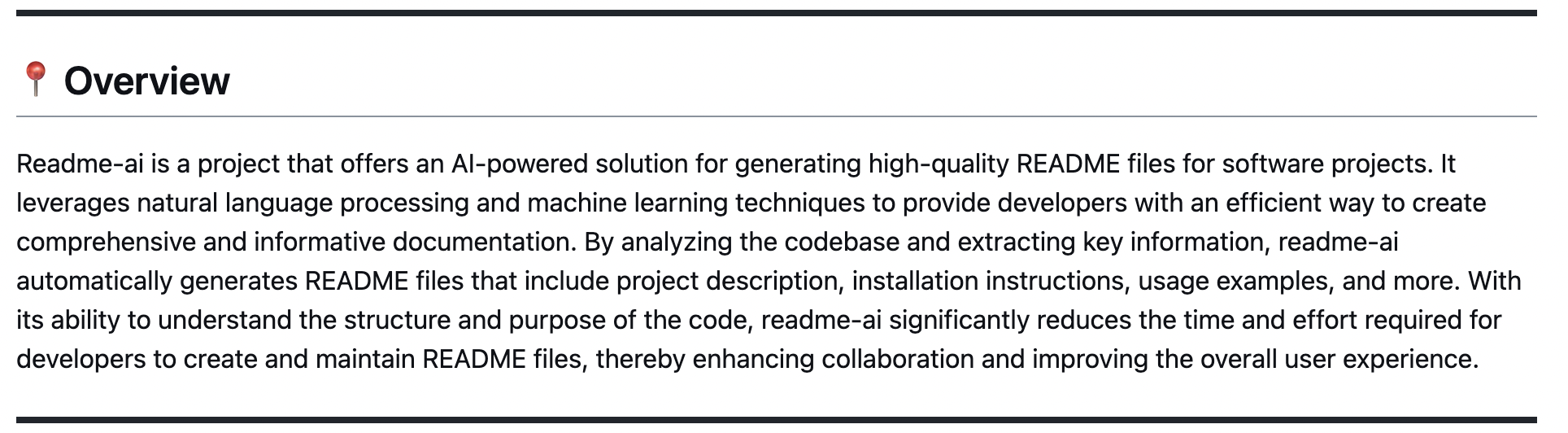

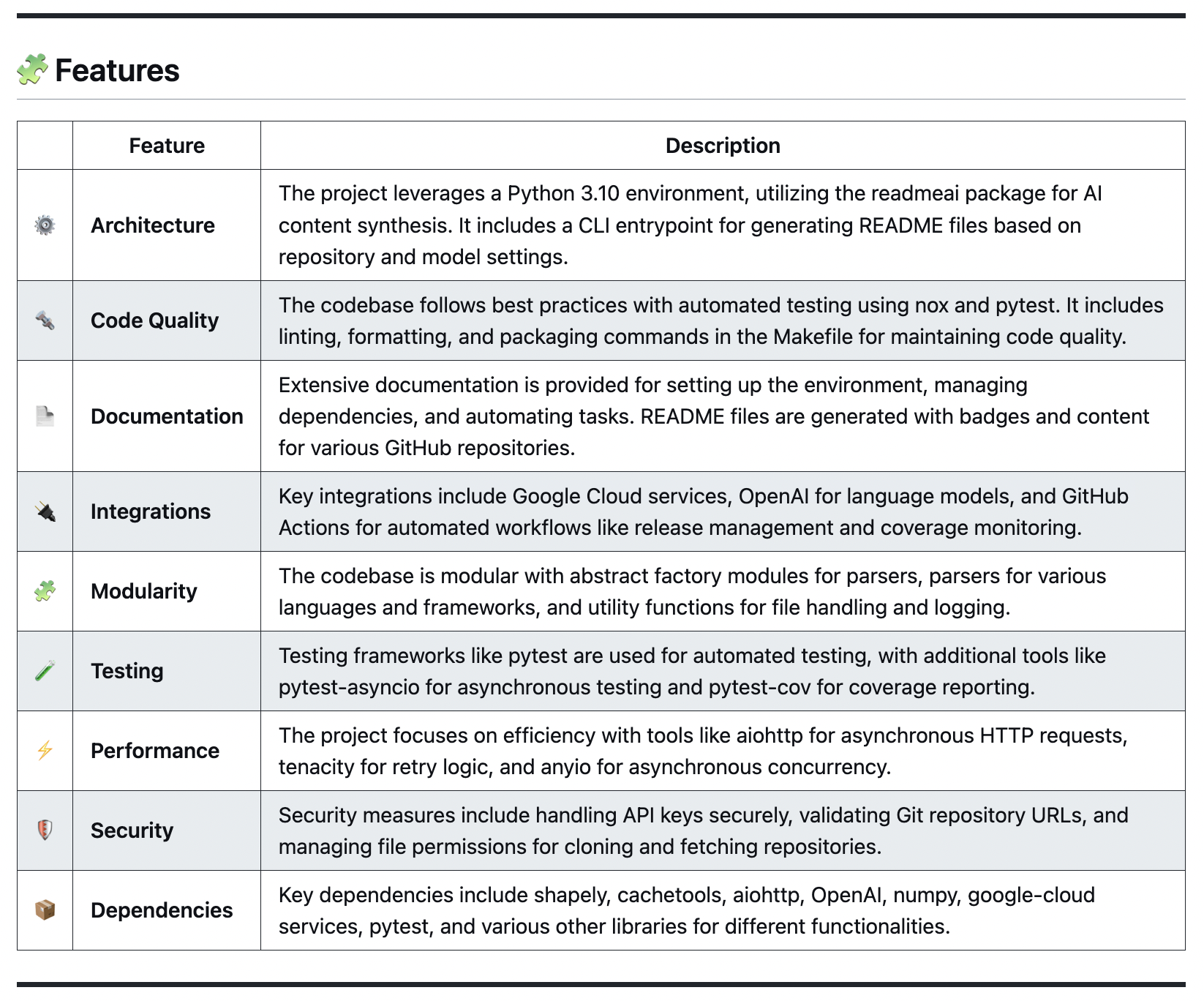

+# Features Overview

+

+Readme-ai offers a comprehensive set of features designed to streamline the creation of high-quality README files for your projects. Here's an overview of the key features:

+

+1. **Flexible README Generation**: Combines robust repository context extraction with generative AI to create detailed and accurate README files.

+

+2. **Multiple LLM Support**: Compatible with various language model APIs, including:

+ - OpenAI

+ - Ollama

+ - Google Gemini

+ - Offline Mode (for generating boilerplate READMEs without API calls)

+

+3. **Customizable Output**: Offers numerous CLI options for tailoring the README to your project's needs:

+ - Badge styles and colors

+ - Header designs

+ - Table of contents styles

+ - Project logos

+

+4. **Language Agnostic**: Works with a wide range of programming languages and project types, automatically detecting and summarizing key aspects of your codebase.

+

+5. **Project Analysis**: Automatically extracts and presents important information about your project:

+ - Directory structure

+ - File summaries

+ - Dependencies

+ - Setup instructions

+

+6. **Offline Mode**: Generate a basic README structure without requiring an internet connection or API calls.

+

+7. **Continuous Integration**: Can be integrated into CI/CD pipelines for automated README generation and updates.

+

+For more detailed information on each feature, please refer to the specific feature pages in the navigation menu.

diff --git a/docs/docs/pydantic_settings.md b/docs/docs/pydantic_settings.md

deleted file mode 100644

index 1ae1ab9c..00000000

--- a/docs/docs/pydantic_settings.md

+++ /dev/null

@@ -1,85 +0,0 @@

-# Configuration Models and Enums

-

-This page documents the data models and enums used for configuring the `readme-ai` CLI tool. These models are based on [Pydantic](https://pydantic-docs.helpmanual.io/), which allows for data parsing and validation through Python type annotations.

-

-## Git Settings

-

-`GitSettings` is used to represent and validate repository settings.

-

-### Fields

-

-- `repository`: A string or `Path` representing the location of the repository.

-- `full_name`: An optional string denoting the full name of the repository in 'username/repo' format.

-- `host`: An optional string representing the Git service host.

-- `name`: An optional string for the repository name.

-- `source`: An optional string representing the source Git service.

-

-### Validators

-

-Custom validators are used to ensure that the repository information is correct and that the `full_name`, `host`, `name`, and `source` fields are derived correctly from the `repository` field.

-

-## CLI Settings

-

-`CliSettings` manages the command-line interface options for the `readme-ai` application.

-

-### Fields

-

-- `emojis`: A boolean indicating whether to use emojis.

-- `offline`: A boolean specifying if the tool should run in offline mode.

-

-## File Settings

-

-`FileSettings` defines paths related to different configurations and output.

-

-### Fields

-

-- `dependency_files`: The path to the file containing dependency configurations.

-- `identifiers`: The path to the file with identifiers.

-- `ignore_files`: The path to the file listing files to ignore.

-- `language_names`: The path to the file with language names mapping.

-- `language_setup`: The path to the file with language setup instructions.

-- `output`: The path for the output file (e.g., `README.md`).

-- `shields_icons`: The path to the file with shield icon configurations.

-- `skill_icons`: The path to the file with skill icon configurations.

-

-## Enums

-

-The application uses several `Enum` classes to represent various configurations.

-

-### GitService

-

-`GitService` is an enum that includes:

-

-- `LOCAL`: Local repository.

-- `GITHUB`: GitHub repository.

-- `GITLAB`: GitLab repository.

-- `BITBUCKET`: Bitbucket repository.

-

-Each service has properties for `api_url` and `file_url_template` to facilitate integration with the respective Git service.

-

-### BadgeOptions

-

-`BadgeOptions` represents the available styles for badges in the README files, such as:

-

-- `DEFAULT`

-- `FLAT`

-- `FLAT_SQUARE`

-- `FOR_THE_BADGE`

-- `PLASTIC`

-- `SKILLS`

-- `SKILLS_LIGHT`

-- `SOCIAL`

-

-### ImageOptions

-

-`ImageOptions` enumerates the available choices for images used in the README header, including:

-

-- `CUSTOM`

-- `DEFAULT`

-- `BLACK`

-- `GREY`

-- `PURPLE`

-- `YELLOW`

-- `CLOUD`

-

----

diff --git a/docs/docs/usage.md b/docs/docs/usage.md

index 33bc1f6e..f3d4b21b 100644

--- a/docs/docs/usage.md

+++ b/docs/docs/usage.md

@@ -1,79 +1,72 @@

-### ► Running `readme-ai`

+# Usage

-Ensure your LLM API key is set as an environment variable.

+This guide covers the basic usage of readme-ai and provides examples for different LLM services.

-`Linux / macOS:`

-```console

-$ export OPENAI_API_KEY=

-```

-

-`Windows`

-```console

-$ set OPENAI_API_KEY=

-```

+## Basic Usage

-#### Using `pip`

-[](https://pypi.org/project/readmeai/)

+The general syntax for using readme-ai is:

```sh

-readmeai --repository https://github.com/eli64s/readme-ai

+readmeai --repository <REPO_URL_OR_PATH> --api <LLM_SERVICE> [OPTIONS]

```

-#### Using `docker`

-[](https://hub.docker.com/r/zeroxeli/readme-ai)

+Replace `<REPO_URL_OR_PATH>` with your repository URL or local path, and `<LLM_SERVICE>` with your chosen LLM service (openai, ollama, gemini, or offline).

-```sh

-docker run -it \

--e OPENAI_API_KEY=$OPENAI_API_KEY \

--v "$(pwd)":/app zeroxeli/readme-ai:latest \

--r https://github.com/eli64s/readme-ai

-```

+## Examples

-#### Using `streamlit`

+### Using OpenAI

-* [](https://readme-ai.streamlit.app/)

-

-Use the app directly in your browser on Streamlit, no installation required! More details about the web app can be found here.

+```sh

+readmeai --repository https://github.com/eli64s/readme-ai \

+ --api openai \

+ --model gpt-3.5-turbo

+```

-

-

- From source

-

+### Using Ollama

-#### Using `bash`

+```sh

+readmeai --repository https://github.com/eli64s/readme-ai \

+ --api ollama \

+ --model llama3

+```

-[](https://www.gnu.org/software/bash/)

+### Using Google Gemini

-```console

-$ conda activate readmeai

-$ python3 -m readmeai.cli.commands -r https://github.com/eli64s/readme-ai

+```sh

+readmeai --repository https://github.com/eli64s/readme-ai \

+ --api gemini \

+ --model gemini-1.5-flash

```

-#### Using `poetry`

-[](https://python-poetry.org/)

+### Offline Mode

-```console

-$ poetry shell

-$ python3 -m readmeai.cli.commands -r https://github.com/eli64s/readme-ai

+```sh

+readmeai --repository https://github.com/eli64s/readme-ai \

+ --api offline

```

-

-

----

+## Advanced Usage

-### 🧪 Tests

+You can customize the output using various options:

-#### Using `pytest`

-[](https://docs.pytest.org/en/7.1.x/contents.html)

-```console

-$ make pytest

+```sh

+readmeai --repository https://github.com/eli64s/readme-ai \

+ --api openai \

+ --model gpt-4-turbo \

+ --badge-color blueviolet \

+ --badge-style flat-square \

+ --header-style compact \

+ --toc-style fold \

+ --temperature 0.1 \

+ --tree-depth 2 \

+ --image LLM \

+ --emojis

```

-#### Using `nox`

-```console

-$ nox -f noxfile.py

-```

+For a full list of options, run:

-Use [nox](https://nox.thea.codes/en/stable/) to test application against multiple Python environments and dependencies!

+```sh

+readmeai --help

+```

----

+See the [Configuration](configuration.md) page for detailed information on all available options.
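To compare services side by side, as the usage examples suggest, one option is to print a command per service that writes to its own output file. The sketch below only prints commands (a dry run). The function name and the `readme-<api>.md` file naming are hypothetical, and it assumes the `--output` option listed in the earlier CLI reference is still available.

```sh
#!/bin/sh
# Hypothetical helper: prints one readmeai command per service/model pair.
# Model names are taken from the usage examples; --output is assumed to exist.
print_comparison_cmds() {
    repo=$1
    for pair in "openai:gpt-3.5-turbo" "ollama:llama3" "gemini:gemini-1.5-flash"; do
        api=${pair%%:*}     # text before the first colon
        model=${pair#*:}    # text after the first colon
        echo "readmeai --repository $repo --api $api --model $model --output readme-$api.md"
    done
}
```

Calling `print_comparison_cmds https://github.com/eli64s/readme-ai` lists the three commands for review; piping the output to `sh` would run them, provided the relevant services and credentials are available.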

diff --git a/mkdocs.yml b/mkdocs.yml

index da4a6a92..5c4490fb 100644

--- a/mkdocs.yml

+++ b/mkdocs.yml

@@ -1,7 +1,7 @@

# Readme-ai MkDocs Site Configuration

-site_name: Readme-ai Docs

-site_description: "🎈 Automated README file generator, powered by GPT language model APIs."

+site_name: README-AI Docs

+site_description: "README file generator, powered by large language model APIs 👾"

site_url: https://eli64s.github.io/readme-ai/

site_author: readme-ai

repo_url: https://github.com/eli64s/readme-ai

@@ -39,20 +39,17 @@ theme:

- navigation.indexes

- navigation.sections

-

nav:

- Home: index.md

- - Installation: installation.md

- Getting Started:

- - "Prerequisites": prerequisites.md

- - "Running the CLI": usage.md

- - "CLI Commands": cli_commands.md

- - Features: features.md

+ - "System Requirements": prerequisites.md

+ - "Installation": installation.md

+ - "Usage": usage.md

+ - "Configuration": configuration.md

+ - Features:

+ - "Overview": overview.md

+ - "LLM Integrations": llm_integrations.md

- Examples: examples.md

- - Concepts:

- - "Core Concepts": concepts.md

- - "How It Works": how_it_works.md

- - "Pydantic Models": pydantic_models.md

- Contributing: contributing.md

markdown_extensions:

diff --git a/pyproject.toml b/pyproject.toml

index 1179211a..e32300a0 100644

--- a/pyproject.toml

+++ b/pyproject.toml

@@ -1,6 +1,6 @@

[tool.poetry]

name = "readmeai"

-version = "0.5.082"

+version = "0.5.083"

description = "README file generator, powered by large language model APIs 👾"

authors = ["Eli "]

license = "MIT"

diff --git a/readmeai/templates/header.py b/readmeai/templates/header.py

index 8bcf36e6..70d8fa02 100644

--- a/readmeai/templates/header.py

+++ b/readmeai/templates/header.py

@@ -53,8 +53,8 @@ class HeaderTemplate(BaseTemplate):

#### {slogan}

-\n\t{shields_icons}

-\n\t{badge_icons}

+\n\t{shields_icons}

+\n\t{badge_icons}

""",

+

+
