diff --git a/.dockerignore b/.dockerignore

index 22ec965249..b9f228c009 100644

--- a/.dockerignore

+++ b/.dockerignore

@@ -11,3 +11,11 @@ python/flexflow/core/legion_cffi_header.py

*.pb.h

*.o

*.a

+

+# Ignore inference assets

+/inference/weights/*

+/inference/tokenizer/*

+/inference/prompt/*

+/inference/output/*

+

+/tests/inference/python_test_configs/*.json

diff --git a/.github/PULL_REQUEST_TEMPLATE.md b/.github/PULL_REQUEST_TEMPLATE.md

index 183028b022..e8177cd9b7 100644

--- a/.github/PULL_REQUEST_TEMPLATE.md

+++ b/.github/PULL_REQUEST_TEMPLATE.md

@@ -10,6 +10,3 @@ Linked Issues:

Issues closed by this PR:

- Closes #

-**Before merging:**

-

-- [ ] Did you update the [flexflow-third-party](https://github.com/flexflow/flexflow-third-party) repo, if modifying any of the Cmake files, the build configs, or the submodules?

diff --git a/.github/README.md b/.github/README.md

new file mode 100644

index 0000000000..5aba2295d5

--- /dev/null

+++ b/.github/README.md

@@ -0,0 +1,255 @@

+# FlexFlow Serve: Low-Latency, High-Performance LLM Serving

+[Documentation](https://flexflow.readthedocs.io/en/latest/?badge=latest)

+

+

+---

+

+## What is FlexFlow Serve

+

+The high computational and memory requirements of generative large language

+models (LLMs) make it challenging to serve them quickly and cheaply.

+FlexFlow Serve is an open-source compiler and distributed system for

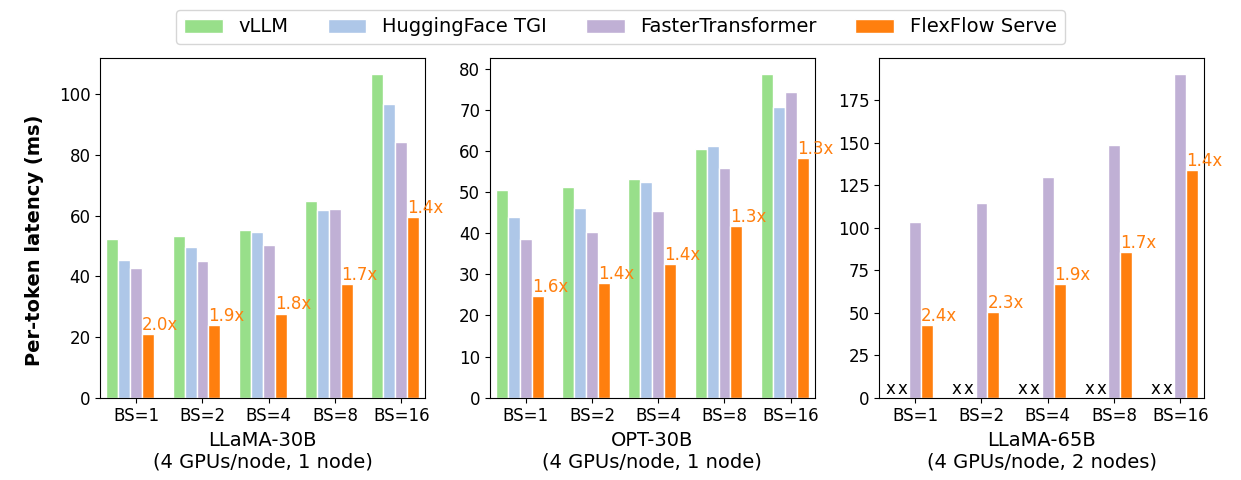

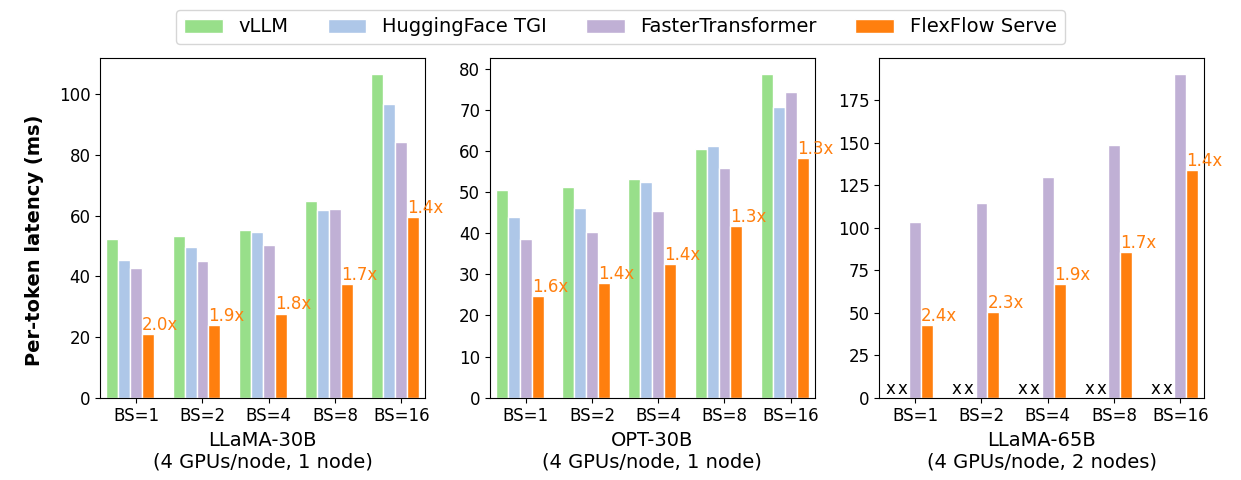

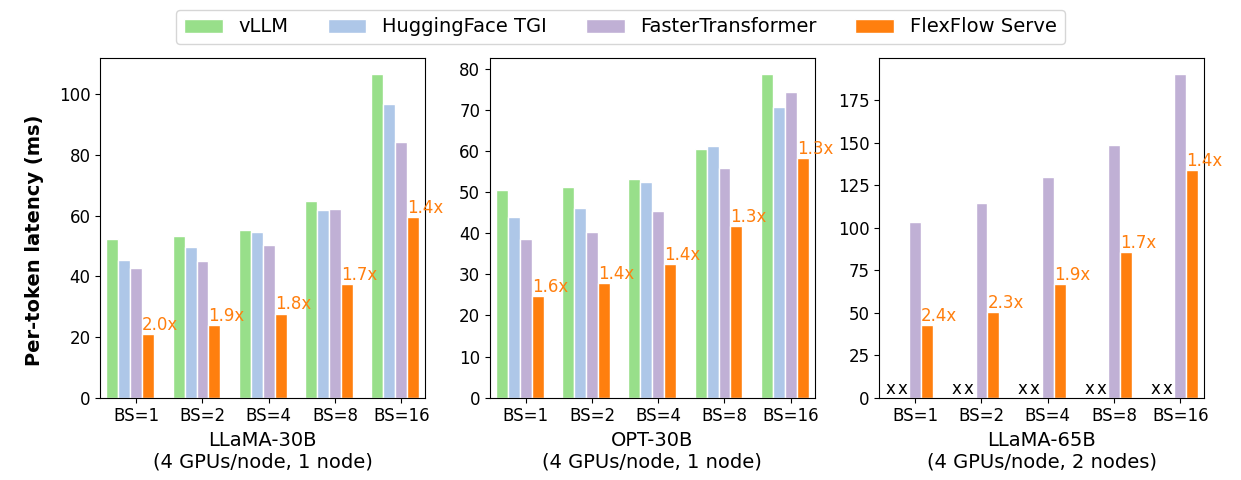

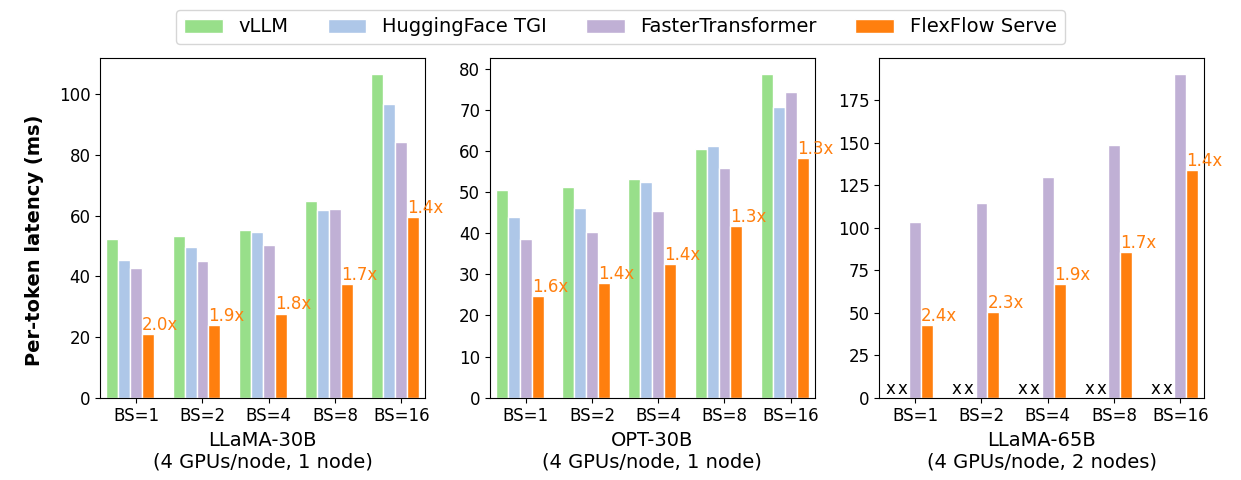

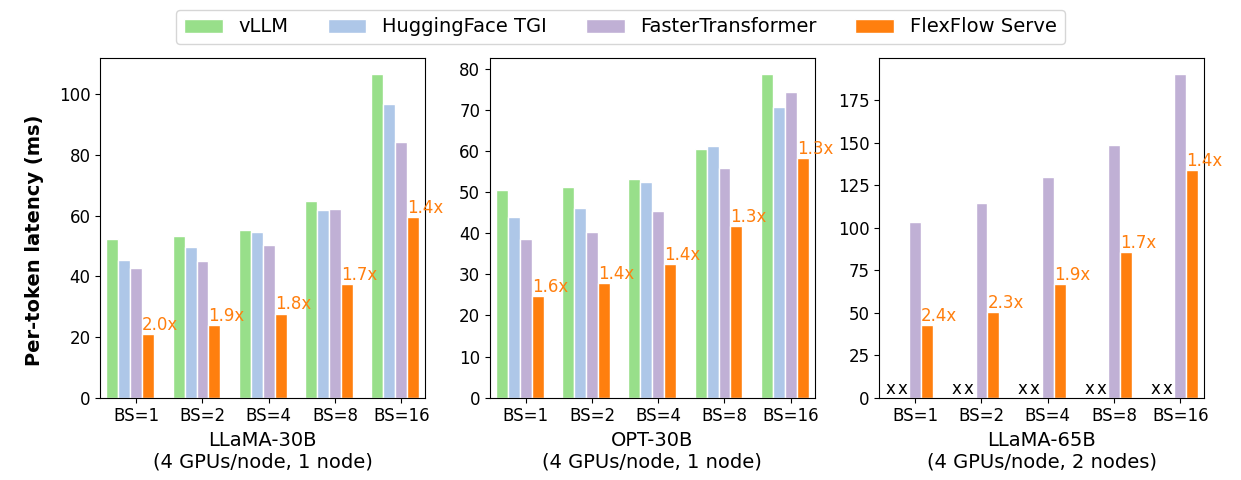

+__low latency__, __high performance__ LLM serving. FlexFlow Serve outperforms

+existing systems by 1.3-2.0x for single-node, multi-GPU inference and by

+1.4-2.4x for multi-node, multi-GPU inference.

+

+

+

+

+

+

+## Install FlexFlow Serve

+

+

+### Requirements

+* OS: Linux

+* GPU backend: Hip-ROCm or CUDA

+ * CUDA version: 10.2 – 12.0

+ * NVIDIA compute capability: 6.0 or higher

+* Python: 3.6 or higher

+* Package dependencies: [see here](https://github.com/flexflow/FlexFlow/blob/inference/requirements.txt)

+

+### Install with pip

+You can install FlexFlow Serve using pip:

+

+```bash

+pip install flexflow

+```

+

+### Try it in Docker

+If you run into any issues during installation, or if you would like to use the C++ API without installing from source, you can use our pre-built Docker packages, which are available for several CUDA versions (NVIDIA backend) and several ROCm versions (AMD backend). To download and run a pre-built Docker container:

+

+```bash

+docker run --gpus all -it --rm --shm-size=8g ghcr.io/flexflow/flexflow-cuda-12.0:latest

+```

+

+To download a Docker container for a backend other than CUDA v12.0, replace the `cuda-12.0` suffix with any of the following backends: `cuda-11.1`, `cuda-11.2`, `cuda-11.3`, `cuda-11.4`, `cuda-11.5`, `cuda-11.6`, `cuda-11.7`, `cuda-11.8`, `hip_rocm-5.3`, `hip_rocm-5.4`, `hip_rocm-5.5`, and `hip_rocm-5.6`. More information on the Docker images, including instructions to build a new image from source or to run with additional configurations, can be found [here](../docker/README.md).

+

+### Build from source

+

+You can install FlexFlow Serve from source code by building the inference branch of FlexFlow. Please follow these [instructions](https://flexflow.readthedocs.io/en/latest/installation.html).

+

+## Quickstart

+The following example shows how to deploy an LLM using FlexFlow Serve and accelerate its serving using [speculative inference](#speculative-inference). First, we import `flexflow.serve` and initialize the FlexFlow Serve runtime. Note that `memory_per_gpu` and `zero_copy_memory_per_node` specify the size of device memory on each GPU (in MB) and zero-copy memory on each node (in MB), respectively.

+We need to make sure the aggregated GPU memory and zero-copy memory are **both** sufficient to store LLM parameters in non-offloading serving. FlexFlow Serve combines tensor and pipeline model parallelism for LLM serving.

+```python

+import flexflow.serve as ff

+

+ff.init(

+ num_gpus=4,

+ memory_per_gpu=14000,

+ zero_copy_memory_per_node=30000,

+ tensor_parallelism_degree=4,

+ pipeline_parallelism_degree=1

+ )

+```
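
The memory requirement described above can be sanity-checked with a few lines of arithmetic. The sketch below is not part of the FlexFlow API: `weights_fit` is a hypothetical helper, and it assumes a single node, a 7-billion-parameter model stored in half precision (2 bytes per parameter), and it ignores the KV cache and activations, which need additional GPU memory.

```python
# Rough capacity check for non-offloading serving.
# Hypothetical helper for illustration only; not a FlexFlow function.
MIB = 1024 * 1024


def weights_fit(num_params, bytes_per_param, num_gpus,
                memory_per_gpu_mb, zero_copy_memory_per_node_mb):
    """Return (fits_on_gpus, fits_in_zero_copy) for the model weights alone.

    Assumes a single node, so the zero-copy budget is counted once.
    """
    weights_mb = num_params * bytes_per_param / MIB
    total_gpu_mb = num_gpus * memory_per_gpu_mb
    return (weights_mb <= total_gpu_mb,
            weights_mb <= zero_copy_memory_per_node_mb)


# Quickstart settings: 4 GPUs x 14000 MB, 30000 MB of zero-copy memory.
# The model size (7e9 parameters, 2 bytes each) is an assumption.
print(weights_fit(7_000_000_000, 2, num_gpus=4,
                  memory_per_gpu_mb=14000,
                  zero_copy_memory_per_node_mb=30000))
```

Both entries of the returned tuple must be true for the configuration to be plausible; a passing check is necessary but not sufficient, since runtime buffers are not counted.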

+Second, we specify the LLM to serve and the SSM(s) used to accelerate LLM serving. The list of supported LLMs and SSMs is available at [supported models](#supported-llms-and-ssms).

+```python

+# Specify the LLM

+llm = ff.LLM("meta-llama/Llama-2-7b-hf")

+

+# Specify a list of SSMs (just one in this case)

+ssms=[]

+ssm = ff.SSM("JackFram/llama-68m")

+ssms.append(ssm)

+```

+Next, we declare the generation configuration and compile both the LLM and SSMs. Note that all SSMs should run in the **beam search** mode, and the LLM should run in the **tree verification** mode to verify the speculated tokens from SSMs. You can also use the following arguments to specify serving configuration when compiling LLMs and SSMs:

+

+* max\_requests\_per\_batch: the maximum number of requests to serve in a batch (default: 16)

+* max\_seq\_length: the maximum number of tokens in a request (default: 256)

+* max\_tokens\_per\_batch: the maximum number of tokens to process in a batch (default: 128)

+

+```python

+# Create the sampling configs

+generation_config = ff.GenerationConfig(

+ do_sample=False, temperature=0.9, topp=0.8, topk=1

+)

+

+# Compile the SSMs for inference and load the weights into memory

+for ssm in ssms:

+ ssm.compile(generation_config)

+

+# Compile the LLM for inference and load the weights into memory

+llm.compile(generation_config,

+ max_requests_per_batch = 16,

+ max_seq_length = 256,

+ max_tokens_per_batch = 128,

+ ssms=ssms)

+```

+Next, we call `llm.start_server()` to start an LLM server running on a separate background thread, which allows users to perform computations in parallel with LLM serving. Finally, we call `llm.generate` to generate the output, which is organized as a list of `GenerationResult` objects that include the output tokens and text. After all serving requests are processed, you can either call `llm.stop_server()` to terminate the background thread or exit the Python program directly, which automatically terminates the background server thread.

+```python

+llm.start_server()

+result = llm.generate("Here are some travel tips for Tokyo:\n")

+llm.stop_server() # This invocation is optional

+```

+

+### Incremental decoding

+

+

+

+```python

+import flexflow.serve as ff

+

+# Initialize the FlexFlow runtime. ff.init() takes a dictionary or the path to a JSON file with the configs

+ff.init(

+ num_gpus=4,

+ memory_per_gpu=14000,

+ zero_copy_memory_per_node=30000,

+ tensor_parallelism_degree=4,

+ pipeline_parallelism_degree=1

+ )

+

+# Create the FlexFlow LLM

+llm = ff.LLM("meta-llama/Llama-2-7b-hf")

+

+# Create the sampling configs

+generation_config = ff.GenerationConfig(

+ do_sample=True, temperature=0.9, topp=0.8, topk=1

+)

+

+# Compile the LLM for inference and load the weights into memory

+llm.compile(generation_config,

+ max_requests_per_batch = 16,

+ max_seq_length = 256,

+ max_tokens_per_batch = 128)

+

+# Generation begins!

+llm.start_server()

+result = llm.generate("Here are some travel tips for Tokyo:\n")

+llm.stop_server() # This invocation is optional

+```

+

+

+

+### C++ interface

+If you'd like to use the C++ interface (mostly used for development and benchmarking purposes), you should install from source, and follow the instructions below.

+

+

+

+

+#### Downloading models

+Before running FlexFlow Serve, you should manually download the LLM and SSM model(s) of interest using the [inference/utils/download_hf_model.py](https://github.com/flexflow/FlexFlow/blob/inference/inference/utils/download_hf_model.py) script (see example below). By default, the script downloads all of a model's assets (weights, configs, tokenizer files, etc.) into the cache folder `~/.cache/flexflow`. To use a different folder, pass it via the `--cache-folder` parameter.

+

+```bash

+python3 ./inference/utils/download_hf_model.py ...

+```

+

+#### Running the C++ examples

+A C++ example is available at [this folder](../inference/spec_infer/). After building FlexFlow Serve, the executable will be available at `/build_dir/inference/spec_infer/spec_infer`. You can use the following command-line arguments to run FlexFlow Serve:

+

+* `-ll:gpu`: number of GPU processors to use on each node for serving an LLM (default: 0)

+* `-ll:fsize`: size of device memory on each GPU in MB

+* `-ll:zsize`: size of zero-copy memory (pinned DRAM with direct GPU access) in MB. FlexFlow Serve keeps a replica of the LLM parameters on zero-copy memory, and therefore requires that the zero-copy memory is sufficient for storing the LLM parameters.

+* `-llm-model`: the LLM model ID from HuggingFace (e.g. "meta-llama/Llama-2-7b-hf")

+* `-ssm-model`: the SSM model ID from HuggingFace (e.g. "JackFram/llama-160m"). You can use multiple `-ssm-model`s in the command line to launch multiple SSMs.

+* `-cache-folder`: the folder

+* `-data-parallelism-degree`, `-tensor-parallelism-degree` and `-pipeline-parallelism-degree`: parallelization degrees in the data, tensor, and pipeline dimensions. Their product must equal the number of GPUs available on the machine. When any of the three parallelism degree arguments is omitted, a default value of 1 will be used.

+* `-prompt`: (optional) path to the prompt file. FlexFlow Serve expects a JSON-format file for prompts. In addition, users can also use the following API for registering requests:

+* `-output-file`: (optional) filepath to use to save the output of the model, together with the generation latency
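
The constraint on the three parallelism degrees listed above can be checked before launching a job. The following is a minimal sketch, assuming the rule exactly as stated (the product of the degrees must equal the number of GPUs, and omitted degrees default to 1); `check_parallelism` is a hypothetical helper, not a FlexFlow function.

```python
def check_parallelism(num_gpus, data=1, tensor=1, pipeline=1):
    """Return data * tensor * pipeline, or raise if it differs from num_gpus.

    Hypothetical helper: omitted degrees default to 1, mirroring the
    command-line behaviour described in the list above.
    """
    product = data * tensor * pipeline
    if product != num_gpus:
        raise ValueError(
            f"data ({data}) x tensor ({tensor}) x pipeline ({pipeline}) "
            f"= {product}, but {num_gpus} GPUs are available"
        )
    return product


# Matches `-ll:gpu 4 -tensor-parallelism-degree 4`: 1 x 4 x 1 == 4.
check_parallelism(4, tensor=4)
```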

+

+For example, you can use the following command line to serve a LLaMA-7B or LLaMA-13B model on 4 GPUs and use two collectively boost-tuned LLaMA-68M models for speculative inference.

+

+```bash

+./inference/spec_infer/spec_infer -ll:gpu 4 -ll:cpu 4 -ll:fsize 14000 -ll:zsize 30000 -llm-model meta-llama/Llama-2-7b-hf -ssm-model JackFram/llama-68m -prompt /path/to/prompt.json -tensor-parallelism-degree 4 --fusion

+```

+

+

+## Speculative Inference

+A key technique that enables FlexFlow Serve to accelerate LLM serving is speculative

+inference, which combines various collectively boost-tuned small speculative

+models (SSMs) to jointly predict the LLM’s outputs; the predictions are organized as a

+token tree, whose nodes each represent a candidate token sequence. The correctness

+of all candidate token sequences represented by a token tree is verified against the

+LLM’s output in parallel using a novel tree-based parallel decoding mechanism.

+FlexFlow Serve uses an LLM as a token tree verifier instead of an incremental decoder,

+which largely reduces the end-to-end inference latency and computational requirement

+for serving generative LLMs while provably preserving model quality.
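
To make the idea of token tree verification concrete, here is a toy sketch under strong simplifying assumptions: decoding is greedy, the "LLM" is an ordinary Python function, and the tree is walked sequentially rather than scored in one parallel pass as the paragraph above describes. The names `verify_token_tree` and `toy_llm` and the nested-dictionary tree layout are illustrative choices, not FlexFlow Serve's data structures.

```python
from typing import Callable, Dict, List

# token -> subtree of tokens speculated to follow it (illustrative layout)
TokenTree = Dict[int, "TokenTree"]


def verify_token_tree(prefix: List[int], tree: TokenTree,
                      llm_next_token: Callable[[List[int]], int]) -> List[int]:
    """Return the tokens the LLM accepts, plus its own next token.

    Walks the tree from the root. At each step the LLM's greedy choice is
    appended; if that choice was also speculated, the walk descends into
    the matching subtree, otherwise verification stops. The result is the
    same sequence that greedy incremental decoding would produce.
    """
    accepted: List[int] = []
    node = tree
    while True:
        target = llm_next_token(prefix + accepted)
        accepted.append(target)  # the LLM's own token is always kept
        if target not in node:
            return accepted
        node = node[target]


def toy_llm(tokens: List[int]) -> int:
    """Stand-in for a real model: always predicts (last token + 1) mod 10."""
    return (tokens[-1] + 1) % 10


# Speculated continuations of the prefix [1]: 2 -> 3 -> 9, 2 -> 5, and 7.
tree = {2: {3: {9: {}}, 5: {}}, 7: {}}
print(verify_token_tree([1], tree, toy_llm))  # [2, 3, 4]
```

In this example two speculated tokens (2 and 3) are accepted and the LLM contributes one more (4), so three tokens are produced in a single verification round.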

+

+

+

+

+

+### Supported LLMs and SSMs

+

+FlexFlow Serve currently supports all HuggingFace models with the following architectures:

+* `LlamaForCausalLM` / `LLaMAForCausalLM` (e.g. LLaMA/LLaMA-2, Guanaco, Vicuna, Alpaca, ...)

+* `OPTForCausalLM` (models from the OPT family)

+* `RWForCausalLM` (models from the Falcon family)

+* `GPTBigCodeForCausalLM` (models from the Starcoder family)

+

+Below is a list of models that we have explicitly tested and for which an SSM may be available:

+

+| Model | Model id on HuggingFace | Boost-tuned SSMs |

+| :---- | :---- | :---- |

+| LLaMA-7B | meta-llama/Llama-2-7b-hf | [LLaMA-68M](https://huggingface.co/JackFram/llama-68m) , [LLaMA-160M](https://huggingface.co/JackFram/llama-160m) |

+| LLaMA-13B | decapoda-research/llama-13b-hf | [LLaMA-68M](https://huggingface.co/JackFram/llama-68m) , [LLaMA-160M](https://huggingface.co/JackFram/llama-160m) |

+| LLaMA-30B | decapoda-research/llama-30b-hf | [LLaMA-68M](https://huggingface.co/JackFram/llama-68m) , [LLaMA-160M](https://huggingface.co/JackFram/llama-160m) |

+| LLaMA-65B | decapoda-research/llama-65b-hf | [LLaMA-68M](https://huggingface.co/JackFram/llama-68m) , [LLaMA-160M](https://huggingface.co/JackFram/llama-160m) |

+| LLaMA-2-7B | meta-llama/Llama-2-7b-hf | [LLaMA-68M](https://huggingface.co/JackFram/llama-68m) , [LLaMA-160M](https://huggingface.co/JackFram/llama-160m) |

+| LLaMA-2-13B | meta-llama/Llama-2-13b-hf | [LLaMA-68M](https://huggingface.co/JackFram/llama-68m) , [LLaMA-160M](https://huggingface.co/JackFram/llama-160m) |

+| LLaMA-2-70B | meta-llama/Llama-2-70b-hf | [LLaMA-68M](https://huggingface.co/JackFram/llama-68m) , [LLaMA-160M](https://huggingface.co/JackFram/llama-160m) |

+| OPT-6.7B | facebook/opt-6.7b | [OPT-125M](https://huggingface.co/facebook/opt-125m) |

+| OPT-13B | facebook/opt-13b | [OPT-125M](https://huggingface.co/facebook/opt-125m) |

+| OPT-30B | facebook/opt-30b | [OPT-125M](https://huggingface.co/facebook/opt-125m) |

+| OPT-66B | facebook/opt-66b | [OPT-125M](https://huggingface.co/facebook/opt-125m) |

+| Falcon-7B | tiiuae/falcon-7b | |

+| Falcon-40B | tiiuae/falcon-40b | |

+| StarCoder-7B | bigcode/starcoderbase-7b | |

+| StarCoder-15.5B | bigcode/starcoder | |

+

+### CPU Offloading

+FlexFlow Serve also offers offloading-based inference for running large models (e.g., LLaMA-7B) on a single GPU. With CPU offloading, tensors are kept in CPU memory and copied to the GPU only when they are needed for computation. Currently, we selectively offload only the largest weight tensors (the weight tensors of the Linear and Attention layers). Because the small model occupies considerably less space, it does not pose a bottleneck for GPU memory, and offloading it would add runtime memory and computational cost, so we offload only the large model. [TODO: update instructions] You can run the offloading example by enabling the `-offload` and `-offload-reserve-space-size` flags.

+

+### Quantization

+FlexFlow Serve supports int4 and int8 quantization. The compressed tensors are stored on the CPU side. Once copied to the GPU, these tensors are decompressed and converted back to their original precision. You can find the compressed weight files in our S3 bucket, or use [this script](../inference/utils/compress_llama_weights.py) from the [FlexGen](https://github.com/FMInference/FlexGen) project to perform the compression manually.
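
The compress-then-decompress round trip can be illustrated with a generic symmetric int8 scheme. This is only a sketch of the general technique: the per-tensor scale, the function names, and the pure-Python lists are assumptions made for illustration and do not describe the on-disk format used by FlexFlow Serve or FlexGen.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using one scale per tensor."""
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize_int8(quantized, scale):
    """Convert the integers back to floats (the decompression step)."""
    return [q * scale for q in quantized]


weights = [0.5, -1.0, 0.25, 0.0]
quantized, scale = quantize_int8(weights)   # compact form kept on the CPU side
restored = dequantize_int8(quantized, scale)  # what the GPU would compute with
# Each restored value differs from the original by at most half a step.
print(max(abs(r - w) for r, w in zip(restored, weights)) <= scale / 2 + 1e-9)
```

The restored values are close to, but generally not identical to, the original weights; the error per element is bounded by half of the quantization step.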

+

+### Prompt Datasets

+We provide five prompt datasets for evaluating FlexFlow Serve: [Chatbot instruction prompts](https://specinfer.s3.us-east-2.amazonaws.com/prompts/chatbot.json), [ChatGPT Prompts](https://specinfer.s3.us-east-2.amazonaws.com/prompts/chatgpt.json), [WebQA](https://specinfer.s3.us-east-2.amazonaws.com/prompts/webqa.json), [Alpaca](https://specinfer.s3.us-east-2.amazonaws.com/prompts/alpaca.json), and [PIQA](https://specinfer.s3.us-east-2.amazonaws.com/prompts/piqa.json).

+

+## TODOs

+

+FlexFlow Serve is under active development. We currently focus on the following tasks and strongly welcome all contributions from bug fixes to new features and extensions.

+

+* AMD benchmarking. We are actively working on benchmarking FlexFlow Serve on AMD GPUs and comparing it with the performance on NVIDIA GPUs.

+* Chatbot prompt templates and multi-round conversations

+* Support for FastAPI server

+* Integration with LangChain for document question answering

+

+## Acknowledgements

+This project was initiated by members from CMU, Stanford, and UCSD. We will continue to develop and support FlexFlow Serve. Please cite FlexFlow Serve as:

+

+```bibtex

+@misc{miao2023specinfer,

+ title={SpecInfer: Accelerating Generative Large Language Model Serving with Speculative Inference and Token Tree Verification},

+ author={Xupeng Miao and Gabriele Oliaro and Zhihao Zhang and Xinhao Cheng and Zeyu Wang and Rae Ying Yee Wong and Alan Zhu and Lijie Yang and Xiaoxiang Shi and Chunan Shi and Zhuoming Chen and Daiyaan Arfeen and Reyna Abhyankar and Zhihao Jia},

+ year={2023},

+ eprint={2305.09781},

+ archivePrefix={arXiv},

+ primaryClass={cs.CL}

+}

+```

+

+## License

+FlexFlow uses Apache License 2.0.

diff --git a/.github/workflows/build-skip.yml b/.github/workflows/build-skip.yml

index b3ab69e9c1..8635c0d137 100644

--- a/.github/workflows/build-skip.yml

+++ b/.github/workflows/build-skip.yml

@@ -3,6 +3,7 @@ on:

pull_request:

paths-ignore:

- "include/**"

+ - "inference/**"

- "cmake/**"

- "config/**"

- "deps/**"

diff --git a/.github/workflows/build.yml b/.github/workflows/build.yml

index ada29c5798..ef5961bc87 100644

--- a/.github/workflows/build.yml

+++ b/.github/workflows/build.yml

@@ -3,6 +3,7 @@ on:

pull_request:

paths:

- "include/**"

+ - "inference/**"

- "cmake/**"

- "config/**"

- "deps/**"

@@ -15,6 +16,7 @@ on:

- "master"

paths:

- "include/**"

+ - "inference/**"

- "cmake/**"

- "config/**"

- "deps/**"

@@ -38,6 +40,8 @@ jobs:

matrix:

gpu_backend: ["cuda", "hip_rocm"]

fail-fast: false

+ env:

+ FF_GPU_BACKEND: ${{ matrix.gpu_backend }}

steps:

- name: Checkout Git Repository

uses: actions/checkout@v3

@@ -48,21 +52,23 @@ jobs:

run: .github/workflows/helpers/free_space_on_runner.sh

- name: Install CUDA

- uses: Jimver/cuda-toolkit@v0.2.11

+ uses: Jimver/cuda-toolkit@v0.2.16

+ if: ${{ matrix.gpu_backend == 'cuda' }}

id: cuda-toolkit

with:

- cuda: "11.8.0"

+ cuda: "12.1.1"

# Disable caching of the CUDA binaries, since it does not give us any significant performance improvement

use-github-cache: "false"

+ log-file-suffix: 'cmake_${{matrix.gpu_backend}}.txt'

- name: Install system dependencies

- run: FF_GPU_BACKEND=${{ matrix.gpu_backend }} .github/workflows/helpers/install_dependencies.sh

+ run: .github/workflows/helpers/install_dependencies.sh

- name: Install conda and FlexFlow dependencies

uses: conda-incubator/setup-miniconda@v2

with:

activate-environment: flexflow

- environment-file: conda/environment.yml

+ environment-file: conda/flexflow.yml

auto-activate-base: false

- name: Build FlexFlow

@@ -70,17 +76,25 @@ jobs:

export CUDNN_DIR="$CUDA_PATH"

export CUDA_DIR="$CUDA_PATH"

export FF_HOME=$(pwd)

- export FF_GPU_BACKEND=${{ matrix.gpu_backend }}

export FF_CUDA_ARCH=70

+ export FF_HIP_ARCH=gfx1100,gfx1036

+ export hip_version=5.6

+ export FF_BUILD_ALL_INFERENCE_EXAMPLES=ON

+

+ if [[ "${FF_GPU_BACKEND}" == "cuda" ]]; then

+ export FF_BUILD_ALL_EXAMPLES=ON

+ export FF_BUILD_UNIT_TESTS=ON

+ else

+ export FF_BUILD_ALL_EXAMPLES=OFF

+ export FF_BUILD_UNIT_TESTS=OFF

+ fi

+

cores_available=$(nproc --all)

n_build_cores=$(( cores_available -1 ))

if (( $n_build_cores < 1 )) ; then n_build_cores=1 ; fi

mkdir build

cd build

- if [[ "${FF_GPU_BACKEND}" == "cuda" ]]; then

- export FF_BUILD_ALL_EXAMPLES=ON

- export FF_BUILD_UNIT_TESTS=ON

- fi

+

../config/config.linux

make -j $n_build_cores

@@ -89,25 +103,24 @@ jobs:

export CUDNN_DIR="$CUDA_PATH"

export CUDA_DIR="$CUDA_PATH"

export FF_HOME=$(pwd)

- export FF_GPU_BACKEND=${{ matrix.gpu_backend }}

export FF_CUDA_ARCH=70

- cd build

+ export FF_HIP_ARCH=gfx1100,gfx1036

+ export hip_version=5.6

+ export FF_BUILD_ALL_INFERENCE_EXAMPLES=ON

+

if [[ "${FF_GPU_BACKEND}" == "cuda" ]]; then

- export FF_BUILD_ALL_EXAMPLES=ON

+ export FF_BUILD_ALL_EXAMPLES=ON

export FF_BUILD_UNIT_TESTS=ON

+ else

+ export FF_BUILD_ALL_EXAMPLES=OFF

+ export FF_BUILD_UNIT_TESTS=OFF

fi

+

+ cd build

../config/config.linux

sudo make install

sudo ldconfig

- - name: Check availability of Python flexflow.core module

- if: ${{ matrix.gpu_backend == 'cuda' }}

- run: |

- export LD_LIBRARY_PATH="$CUDA_PATH/lib64/stubs:$LD_LIBRARY_PATH"

- sudo ln -s "$CUDA_PATH/lib64/stubs/libcuda.so" "$CUDA_PATH/lib64/stubs/libcuda.so.1"

- export CPU_ONLY_TEST=1

- python -c "import flexflow.core; exit()"

-

- name: Run C++ unit tests

if: ${{ matrix.gpu_backend == 'cuda' }}

run: |

@@ -115,9 +128,19 @@ jobs:

export CUDA_DIR="$CUDA_PATH"

export LD_LIBRARY_PATH="$CUDA_PATH/lib64/stubs:$LD_LIBRARY_PATH"

export FF_HOME=$(pwd)

+ sudo ln -s "$CUDA_PATH/lib64/stubs/libcuda.so" "$CUDA_PATH/lib64/stubs/libcuda.so.1"

cd build

./tests/unit/unit-test

+ - name: Check availability of flexflow modules in Python

+ run: |

+ if [[ "${FF_GPU_BACKEND}" == "cuda" ]]; then

+ export LD_LIBRARY_PATH="$CUDA_PATH/lib64/stubs:$LD_LIBRARY_PATH"

+ fi

+ # Remove build folder to check that the installed version can run independently of the build files

+ rm -rf build

+ python -c "import flexflow.core; import flexflow.serve as ff; exit()"

+

makefile-build:

name: Build FlexFlow with the Makefile

runs-on: ubuntu-20.04

@@ -134,11 +157,12 @@ jobs:

run: .github/workflows/helpers/free_space_on_runner.sh

- name: Install CUDA

- uses: Jimver/cuda-toolkit@v0.2.11

+ uses: Jimver/cuda-toolkit@v0.2.16

id: cuda-toolkit

with:

- cuda: "11.8.0"

+ cuda: "12.1.1"

use-github-cache: "false"

+ log-file-suffix: 'makefile_${{matrix.gpu_backend}}.txt'

- name: Install system dependencies

run: .github/workflows/helpers/install_dependencies.sh

@@ -147,7 +171,7 @@ jobs:

uses: conda-incubator/setup-miniconda@v2

with:

activate-environment: flexflow

- environment-file: conda/environment.yml

+ environment-file: conda/flexflow.yml

auto-activate-base: false

- name: Build FlexFlow

@@ -163,5 +187,4 @@ jobs:

cd python

make -j $n_build_cores

- export CPU_ONLY_TEST=1

python -c 'import flexflow.core'

diff --git a/.github/workflows/clang-format-check.yml b/.github/workflows/clang-format-check.yml

index 46c9bf3be2..fdf53e8254 100644

--- a/.github/workflows/clang-format-check.yml

+++ b/.github/workflows/clang-format-check.yml

@@ -10,7 +10,7 @@ jobs:

- check: "src"

exclude: '\.proto$'

- check: "include"

- - check: "nmt"

+ - check: "inference"

- check: "python"

- check: "scripts"

- check: "tests"

diff --git a/.github/workflows/docker-build-skip.yml b/.github/workflows/docker-build-skip.yml

index 59b584c6c4..e5d7de858f 100644

--- a/.github/workflows/docker-build-skip.yml

+++ b/.github/workflows/docker-build-skip.yml

@@ -13,27 +13,22 @@ concurrency:

cancel-in-progress: true

jobs:

- docker-build:

- name: Build and Install FlexFlow in a Docker Container

- runs-on: ubuntu-20.04

+ docker-build-rocm:

+ name: Build and Install FlexFlow in a Docker Container (ROCm backend)

+ runs-on: ubuntu-latest

strategy:

matrix:

- gpu_backend: ["cuda", "hip_rocm"]

- cuda_version: ["11.1", "11.2", "11.3", "11.5", "11.6", "11.7", "11.8"]

- # The CUDA version doesn't matter when building for hip_rocm, so we just pick one arbitrarily (11.8) to avoid building for hip_rocm once per number of CUDA version supported

- exclude:

- - gpu_backend: "hip_rocm"

- cuda_version: "11.1"

- - gpu_backend: "hip_rocm"

- cuda_version: "11.2"

- - gpu_backend: "hip_rocm"

- cuda_version: "11.3"

- - gpu_backend: "hip_rocm"

- cuda_version: "11.5"

- - gpu_backend: "hip_rocm"

- cuda_version: "11.6"

- - gpu_backend: "hip_rocm"

- cuda_version: "11.7"

+ hip_version: ["5.3", "5.4", "5.5", "5.6"]

+ fail-fast: false

+ steps:

+ - run: 'echo "No docker-build required"'

+

+ docker-build-cuda:

+ name: Build and Install FlexFlow in a Docker Container (CUDA backend)

+ runs-on: ubuntu-latest

+ strategy:

+ matrix:

+ cuda_version: ["11.1", "11.6", "11.7", "11.8", "12.0", "12.1", "12.2"]

fail-fast: false

steps:

- run: 'echo "No docker-build required"'

diff --git a/.github/workflows/docker-build.yml b/.github/workflows/docker-build.yml

index d059a0605f..eeaab0e0af 100644

--- a/.github/workflows/docker-build.yml

+++ b/.github/workflows/docker-build.yml

@@ -7,10 +7,11 @@ on:

- ".github/workflows/docker-build.yml"

push:

branches:

+ - "inference"

- "master"

schedule:

- # Run every week on Sunday at midnight PT (3am ET / 8am UTC) to keep the docker images updated

- - cron: "0 8 * * 0"

+ # At 00:00 on day-of-month 1, 14, and 28.

+ - cron: "0 0 1,14,28 * *"

workflow_dispatch:

# Cancel outdated workflows if they are still running

@@ -19,53 +20,121 @@ concurrency:

cancel-in-progress: true

jobs:

- docker-build:

- name: Build and Install FlexFlow in a Docker Container

+ rocm-builder-start:

+ name: Start an AWS instance to build the ROCM Docker images

+ runs-on: ubuntu-latest

+ if: ${{ ( github.event_name == 'push' || github.event_name == 'schedule' || github.event_name == 'workflow_dispatch' ) && github.ref_name == 'inference' }}

+ env:

+ ROCM_BUILDER_INSTANCE_ID: ${{ secrets.ROCM_BUILDER_INSTANCE_ID }}

+ steps:

+ - name: Configure AWS credentials

+ uses: aws-actions/configure-aws-credentials@v1

+ with:

+ aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}

+ aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}

+ aws-region: us-east-2

+

+ - name: Start EC2 instance

+ run: aws ec2 start-instances --instance-ids $ROCM_BUILDER_INSTANCE_ID

+

+ docker-build-rocm:

+ name: Build and Install FlexFlow in a Docker Container (ROCm backend)

runs-on: ubuntu-20.04

+ if: ${{ ( github.event_name != 'push' && github.event_name != 'schedule' && github.event_name != 'workflow_dispatch' ) || github.ref_name != 'inference' }}

+ env:

+ FF_GPU_BACKEND: "hip_rocm"

+ hip_version: 5.6

+ steps:

+ - name: Checkout Git Repository

+ uses: actions/checkout@v3

+ with:

+ submodules: recursive

+

+ - name: Free additional space on runner

+ run: .github/workflows/helpers/free_space_on_runner.sh

+

+ - name: Build Docker container

+ run: FF_HIP_ARCH="gfx1100,gfx1036" ./docker/build.sh flexflow

+

+ - name: Check availability of flexflow modules in Python

+ run: docker run --entrypoint /bin/bash flexflow-${FF_GPU_BACKEND}-${hip_version}:latest -c "python -c 'import flexflow.core; import flexflow.serve as ff; exit()'"

+

+ keep-runner-registered:

+ name: Keep runner alive

+ if: ${{ github.event_name == 'schedule' }}

+ runs-on: [self-hosted, rocm_builder]

+ defaults:

+ run:

+ shell: bash -l {0} # required to use an activated conda environment

+ env:

+ CONDA: "3"

+ needs: rocm-builder-start

+ steps:

+ - name: Keep alive

+ run: |

+ echo "Keep self-hosted runner registered with Github"

+ sleep 10m

+

+ docker-build-and-publish-rocm:

+ name: Build and Deploy FlexFlow Docker Containers (ROCm backend)

+ needs: rocm-builder-start

+ runs-on: [self-hosted, rocm_builder]

+ if: ${{ ( github.event_name == 'push' || github.event_name == 'workflow_dispatch' ) && github.ref_name == 'inference' }}

strategy:

matrix:

- gpu_backend: ["cuda", "hip_rocm"]

- cuda_version: ["11.1", "11.2", "11.3", "11.5", "11.6", "11.7", "11.8"]

- # The CUDA version doesn't matter when building for hip_rocm, so we just pick one arbitrarily (11.8) to avoid building for hip_rocm once per number of CUDA version supported

- exclude:

- - gpu_backend: "hip_rocm"

- cuda_version: "11.1"

- - gpu_backend: "hip_rocm"

- cuda_version: "11.2"

- - gpu_backend: "hip_rocm"

- cuda_version: "11.3"

- - gpu_backend: "hip_rocm"

- cuda_version: "11.5"

- - gpu_backend: "hip_rocm"

- cuda_version: "11.6"

- - gpu_backend: "hip_rocm"

- cuda_version: "11.7"

+ hip_version: ["5.3", "5.4", "5.5", "5.6"]

fail-fast: false

env:

- FF_GPU_BACKEND: ${{ matrix.gpu_backend }}

- cuda_version: ${{ matrix.cuda_version }}

- branch_name: ${{ github.head_ref || github.ref_name }}

+ FF_GPU_BACKEND: "hip_rocm"

+ hip_version: ${{ matrix.hip_version }}

steps:

- name: Checkout Git Repository

uses: actions/checkout@v3

with:

submodules: recursive

- - name: Free additional space on runner

+ - name: Build Docker container

+ # On push to inference, build for all compatible architectures, so that we can publish

+ # a pre-built general-purpose image. On all other cases, only build for one architecture

+ # to save time.

+ run: FF_HIP_ARCH=all ./docker/build.sh flexflow

+

+ - name: Check availability of flexflow modules in Python

+ run: docker run --entrypoint /bin/bash flexflow-${FF_GPU_BACKEND}-${hip_version}:latest -c "python -c 'import flexflow.core; import flexflow.serve as ff; exit()'"

+

+ - name: Publish Docker environment image (on push to inference)

env:

- deploy_needed: ${{ ( github.event_name == 'push' || github.event_name == 'schedule' ) && env.branch_name == 'inference' }}

- build_needed: ${{ matrix.gpu_backend == 'hip_rocm' || ( matrix.gpu_backend == 'cuda' && matrix.cuda_version == '11.8' ) }}

+ FLEXFLOW_CONTAINER_TOKEN: ${{ secrets.FLEXFLOW_CONTAINER_TOKEN }}

run: |

- if [[ $deploy_needed == "true" || $build_needed == "true" ]]; then

- .github/workflows/helpers/free_space_on_runner.sh

- else

- echo "Skipping this step to save time"

- fi

+ ./docker/publish.sh flexflow-environment

+ ./docker/publish.sh flexflow

+

+ docker-build-cuda:

+ name: Build and Install FlexFlow in a Docker Container (CUDA backend)

+ runs-on: ubuntu-20.04

+ strategy:

+ matrix:

+ cuda_version: ["11.1", "11.6", "11.7", "11.8", "12.0", "12.1", "12.2"]

+ fail-fast: false

+ env:

+ FF_GPU_BACKEND: "cuda"

+ cuda_version: ${{ matrix.cuda_version }}

+ steps:

+ - name: Checkout Git Repository

+ if: ${{ ( ( github.event_name == 'push' || github.event_name == 'workflow_dispatch' ) && github.ref_name == 'inference' ) || matrix.cuda_version == '12.0' }}

+ uses: actions/checkout@v3

+ with:

+ submodules: recursive

+

+ - name: Free additional space on runner

+ if: ${{ ( ( github.event_name == 'push' || github.event_name == 'workflow_dispatch' ) && github.ref_name == 'inference' ) || matrix.cuda_version == '12.0' }}

+ run: .github/workflows/helpers/free_space_on_runner.sh

- name: Build Docker container

+ if: ${{ ( ( github.event_name == 'push' || github.event_name == 'workflow_dispatch' ) && github.ref_name == 'inference' ) || matrix.cuda_version == '12.0' }}

env:

- deploy_needed: ${{ ( github.event_name == 'push' || github.event_name == 'schedule' ) && env.branch_name == 'inference' }}

- build_needed: ${{ matrix.gpu_backend == 'hip_rocm' || ( matrix.gpu_backend == 'cuda' && matrix.cuda_version == '11.8' ) }}

+ deploy_needed: ${{ ( github.event_name == 'push' || github.event_name == 'workflow_dispatch' ) && github.ref_name == 'inference' }}

+ build_needed: ${{ matrix.cuda_version == '12.0' }}

run: |

# On push to inference, build for all compatible architectures, so that we can publish

# a pre-built general-purpose image. On all other cases, only build for one architecture

@@ -74,42 +143,45 @@ jobs:

export FF_CUDA_ARCH=all

./docker/build.sh flexflow

elif [[ $build_needed == "true" ]]; then

- export FF_CUDA_ARCH=70

+ export FF_CUDA_ARCH=86

./docker/build.sh flexflow

- else

- echo "Skipping build to save time"

fi

- - name: Check availability of Python flexflow.core module

- if: ${{ matrix.gpu_backend == 'cuda' }}

- env:

- deploy_needed: ${{ ( github.event_name == 'push' || github.event_name == 'schedule' ) && env.branch_name == 'inference' }}

- build_needed: ${{ matrix.gpu_backend == 'hip_rocm' || ( matrix.gpu_backend == 'cuda' && matrix.cuda_version == '11.8' ) }}

- run: |

- if [[ $deploy_needed == "true" || $build_needed == "true" ]]; then

- docker run --env CPU_ONLY_TEST=1 --entrypoint /bin/bash flexflow-cuda-${cuda_version}:latest -c "export LD_LIBRARY_PATH=/usr/local/cuda/lib64/stubs:$LD_LIBRARY_PATH; sudo ln -s /usr/local/cuda/lib64/stubs/libcuda.so /usr/local/cuda/lib64/stubs/libcuda.so.1; python -c 'import flexflow.core; exit()'"

- else

- echo "Skipping test to save time"

- fi

+ - name: Check availability of flexflow modules in Python

+ if: ${{ ( ( github.event_name == 'push' || github.event_name == 'workflow_dispatch' ) && github.ref_name == 'inference' ) || matrix.cuda_version == '12.0' }}

+ run: docker run --entrypoint /bin/bash flexflow-${FF_GPU_BACKEND}-${cuda_version}:latest -c "export LD_LIBRARY_PATH=/usr/local/cuda/lib64/stubs:$LD_LIBRARY_PATH; sudo ln -s /usr/local/cuda/lib64/stubs/libcuda.so /usr/local/cuda/lib64/stubs/libcuda.so.1; python -c 'import flexflow.core; import flexflow.serve as ff; exit()'"

- name: Publish Docker environment image (on push to inference)

- if: github.repository_owner == 'flexflow'

+ if: ${{ github.repository_owner == 'flexflow' && ( github.event_name == 'push' || github.event_name == 'workflow_dispatch' ) && github.ref_name == 'inference' }}

env:

FLEXFLOW_CONTAINER_TOKEN: ${{ secrets.FLEXFLOW_CONTAINER_TOKEN }}

- deploy_needed: ${{ ( github.event_name == 'push' || github.event_name == 'schedule' ) && env.branch_name == 'inference' }}

run: |

- if [[ $deploy_needed == "true" ]]; then

- ./docker/publish.sh flexflow-environment

- ./docker/publish.sh flexflow

- else

- echo "No need to update Docker containers in ghrc.io registry at this time."

- fi

+ ./docker/publish.sh flexflow-environment

+ ./docker/publish.sh flexflow

+

+ rocm-builder-stop:

+ needs: [docker-build-and-publish-rocm, keep-runner-registered]

+ if: ${{ always() && ( github.event_name == 'push' || github.event_name == 'schedule' || github.event_name == 'workflow_dispatch' ) && github.ref_name == 'inference' }}

+ runs-on: ubuntu-latest

+ name: Stop the AWS instance we used to build the ROCM Docker images

+ env:

+ ROCM_BUILDER_INSTANCE_ID: ${{ secrets.ROCM_BUILDER_INSTANCE_ID }}

+ steps:

+ - name: Configure AWS credentials

+ uses: aws-actions/configure-aws-credentials@v1

+ with:

+ aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}

+ aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}

+ aws-region: us-east-2

+

+ - name: Stop EC2 instance

+ run: aws ec2 stop-instances --instance-ids $ROCM_BUILDER_INSTANCE_ID

notify-slack:

name: Notify Slack in case of failure

runs-on: ubuntu-20.04

- needs: docker-build

- if: ${{ failure() && github.event_name == 'schedule' && github.repository_owner == 'flexflow' }}

+ needs: [docker-build-cuda, docker-build-and-publish-rocm]

+ if: ${{ failure() && github.event_name == 'workflow_dispatch' && github.repository_owner == 'flexflow' }}

steps:

- name: Send Slack message

env:

diff --git a/.github/workflows/gpu-ci-daemon.yml b/.github/workflows/gpu-ci-daemon.yml

index 603b44c34e..b36e7b49e1 100644

--- a/.github/workflows/gpu-ci-daemon.yml

+++ b/.github/workflows/gpu-ci-daemon.yml

@@ -34,5 +34,6 @@ jobs:

run: |

pip3 install pip --upgrade

pip3 install pyopenssl --upgrade

+ pip3 install urllib3 --upgrade

pip3 install pygithub

python3 .github/workflows/helpers/gpu_ci_helper.py --daemon

diff --git a/.github/workflows/gpu-ci-skip.yml b/.github/workflows/gpu-ci-skip.yml

index 157f3c271a..f4cb950931 100644

--- a/.github/workflows/gpu-ci-skip.yml

+++ b/.github/workflows/gpu-ci-skip.yml

@@ -8,9 +8,15 @@ on:

- "python/**"

- "setup.py"

- "include/**"

+ - "inference/**"

- "src/**"

+ - "tests/inference/**"

+ - "conda/flexflow.yml"

- ".github/workflows/gpu-ci.yml"

- - "tests/multi_gpu_tests.sh"

+ - "tests/cpp_gpu_tests.sh"

+ - "tests/inference_tests.sh"

+ - "tests/training_tests.sh"

+ - "tests/python_interface_test.sh"

workflow_dispatch:

concurrency:

@@ -30,10 +36,18 @@ jobs:

needs: gpu-ci-concierge

steps:

- run: 'echo "No gpu-ci required"'

-

- gpu-ci-flexflow:

- name: Single Machine, Multiple GPUs Tests

+

+ inference-tests:

+ name: Inference Tests

runs-on: ubuntu-20.04

needs: gpu-ci-concierge

steps:

- run: 'echo "No gpu-ci required"'

+

+ training-tests:

+ name: Training Tests

+ runs-on: ubuntu-20.04

+ # if: ${{ github.event_name != 'pull_request' || github.base_ref != 'inference' }}

+ needs: inference-tests

+ steps:

+ - run: 'echo "No gpu-ci required"'

diff --git a/.github/workflows/gpu-ci.yml b/.github/workflows/gpu-ci.yml

index 3b679e9f20..00ca2df603 100644

--- a/.github/workflows/gpu-ci.yml

+++ b/.github/workflows/gpu-ci.yml

@@ -1,21 +1,10 @@

name: "gpu-ci"

on:

- pull_request:

- paths:

- - "cmake/**"

- - "config/**"

- - "deps/**"

- - "python/**"

- - "setup.py"

- - "include/**"

- - "src/**"

- - ".github/workflows/gpu-ci.yml"

- - "tests/cpp_gpu_tests.sh"

- - "tests/multi_gpu_tests.sh"

- - "tests/python_interface_test.sh"

+ schedule:

+ - cron: "0 0 1,14,28 * *" # At 00:00 on day-of-month 1, 14, and 28.

push:

branches:

- - "master"

+ - "inference"

paths:

- "cmake/**"

- "config/**"

@@ -23,10 +12,14 @@ on:

- "python/**"

- "setup.py"

- "include/**"

+ - "inference/**"

- "src/**"

+ - "tests/inference/**"

+ - "conda/flexflow.yml"

- ".github/workflows/gpu-ci.yml"

- "tests/cpp_gpu_tests.sh"

- - "tests/multi_gpu_tests.sh"

+ - "tests/inference_tests.sh"

+ - "tests/training_tests.sh"

- "tests/python_interface_test.sh"

workflow_dispatch:

@@ -48,12 +41,33 @@ jobs:

run: |

pip3 install pip --upgrade

pip3 install pyopenssl --upgrade

+ pip3 install urllib3 --upgrade

pip3 install pygithub

python3 .github/workflows/helpers/gpu_ci_helper.py

+ keep-runner-registered:

+ name: Keep runner alive

+ if: ${{ github.event_name == 'schedule' }}

+ runs-on: [self-hosted, gpu]

+ defaults:

+ run:

+ shell: bash -l {0} # required to use an activated conda environment

+ env:

+ CONDA: "3"

+ needs: gpu-ci-concierge

+ container:

+ image: ghcr.io/flexflow/flexflow-environment-cuda-11.8:latest

+ options: --gpus all --shm-size=8192m

+ steps:

+ - name: Keep alive

+ run: |

+ echo "Keep self-hosted runner registered with GitHub"

+ sleep 10m

+

python-interface-check:

name: Check Python Interface

- runs-on: self-hosted

+ if: ${{ github.event_name != 'schedule' }}

+ runs-on: [self-hosted, gpu]

defaults:

run:

shell: bash -l {0} # required to use an activated conda environment

@@ -77,7 +91,7 @@ jobs:

with:

miniconda-version: "latest"

activate-environment: flexflow

- environment-file: conda/flexflow-cpu.yml

+ environment-file: conda/flexflow.yml

auto-activate-base: false

auto-update-conda: false

@@ -89,7 +103,7 @@ jobs:

run: |

export PATH=$CONDA_PREFIX/bin:$PATH

export FF_HOME=$(pwd)

- export FF_USE_PREBUILT_LEGION=OFF

+ export FF_USE_PREBUILT_LEGION=OFF #remove this after fixing python path issue in Legion

mkdir build

cd build

../config/config.linux

@@ -106,6 +120,7 @@ jobs:

run: |

export PATH=$CONDA_PREFIX/bin:$PATH

export FF_HOME=$(pwd)

+ export FF_USE_PREBUILT_LEGION=OFF #remove this after fixing python path issue in Legion

cd build

../config/config.linux

make install

@@ -124,45 +139,150 @@ jobs:

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:$CONDA_PREFIX/lib

./tests/align/test_all_operators.sh

- gpu-ci-flexflow:

- name: Single Machine, Multiple GPUs Tests

- runs-on: self-hosted

- needs: python-interface-check

+ inference-tests:

+ name: Inference Tests

+ if: ${{ github.event_name != 'schedule' }}

+ runs-on: [self-hosted, gpu]

+ defaults:

+ run:

+ shell: bash -l {0} # required to use an activated conda environment

+ env:

+ CONDA: "3"

+ HUGGINGFACE_TOKEN: ${{ secrets.HUGGINGFACE_TOKEN }}

+ needs: gpu-ci-concierge

+ container:

+ image: ghcr.io/flexflow/flexflow-environment-cuda-11.8:latest

+ options: --gpus all --shm-size=8192m

+ steps:

+ - name: Install updated git version

+ run: sudo add-apt-repository ppa:git-core/ppa -y && sudo apt update -y && sudo apt install -y --no-install-recommends git

+

+ - name: Checkout Git Repository

+ uses: actions/checkout@v3

+ with:

+ submodules: recursive

+

+ - name: Install conda and FlexFlow dependencies

+ uses: conda-incubator/setup-miniconda@v2

+ with:

+ miniconda-version: "latest"

+ activate-environment: flexflow

+ environment-file: conda/flexflow.yml

+ auto-activate-base: false

+

+ - name: Build FlexFlow

+ run: |

+ export PATH=$CONDA_PREFIX/bin:$PATH

+ export FF_HOME=$(pwd)

+ export FF_USE_PREBUILT_LEGION=OFF #remove this after fixing python path issue in Legion

+ export FF_BUILD_ALL_INFERENCE_EXAMPLES=ON

+ mkdir build

+ cd build

+ ../config/config.linux

+ make -j

+

+ - name: Run PEFT tests

+ run: |

+ export PATH=$CONDA_PREFIX/bin:$PATH

+ export CUDNN_DIR=/usr/local/cuda

+ export CUDA_DIR=/usr/local/cuda

+ export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:$CONDA_PREFIX/lib

+

+ source ./build/set_python_envs.sh

+ ./tests/peft_test.sh

+

+ - name: Run inference tests

+ env:

+ CPP_INFERENCE_TESTS: ${{ vars.CPP_INFERENCE_TESTS }}

+ run: |

+ export PATH=$CONDA_PREFIX/bin:$PATH

+ export FF_HOME=$(pwd)

+ export CUDNN_DIR=/usr/local/cuda

+ export CUDA_DIR=/usr/local/cuda

+ export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:$CONDA_PREFIX/lib

+

+ # GPT tokenizer test

+ # ./tests/gpt_tokenizer_test.sh

+

+ # Inference tests

+ source ./build/set_python_envs.sh

+ ./tests/inference_tests.sh

+

+ - name: Save inference output as an artifact

+ if: always()

+ run: |

+ cd inference

+ tar -zcvf output.tar.gz ./output

+

+ - name: Upload artifact

+ uses: actions/upload-artifact@v3

+ if: always()

+ with:

+ name: output

+ path: inference/output.tar.gz

+

+ # GitHub persists the .cache folder across different runs/containers

+ - name: Clear cache

+ if: always()

+ run: sudo rm -rf ~/.cache

+

+ training-tests:

+ name: Training Tests

+ if: ${{ github.event_name != 'schedule' }}

+ runs-on: [self-hosted, gpu]

+ # skip this time-consuming test for PRs to the inference branch

+ # if: ${{ github.event_name != 'pull_request' || github.base_ref != 'inference' }}

+ defaults:

+ run:

+ shell: bash -l {0} # required to use an activated conda environment

+ env:

+ CONDA: "3"

+ needs: inference-tests

container:

image: ghcr.io/flexflow/flexflow-environment-cuda-11.8:latest

options: --gpus all --shm-size=8192m

steps:

- name: Install updated git version

run: sudo add-apt-repository ppa:git-core/ppa -y && sudo apt update -y && sudo apt install -y --no-install-recommends git

+

- name: Checkout Git Repository

uses: actions/checkout@v3

with:

submodules: recursive

+

+ - name: Install conda and FlexFlow dependencies

+ uses: conda-incubator/setup-miniconda@v2

+ with:

+ miniconda-version: "latest"

+ activate-environment: flexflow

+ environment-file: conda/flexflow.yml

+ auto-activate-base: false

- name: Build and Install FlexFlow

run: |

- export PATH=/opt/conda/bin:$PATH

+ export PATH=$CONDA_PREFIX/bin:$PATH

export FF_HOME=$(pwd)

export FF_BUILD_ALL_EXAMPLES=ON

- export FF_USE_PREBUILT_LEGION=OFF

+ export FF_BUILD_ALL_INFERENCE_EXAMPLES=ON

+ export FF_USE_PREBUILT_LEGION=OFF #remove this after fixing python path issue in Legion

pip install . --verbose

- name: Check FlexFlow Python interface (pip)

run: |

- export PATH=/opt/conda/bin:$PATH

+ export PATH=$CONDA_PREFIX/bin:$PATH

export FF_HOME=$(pwd)

- export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/opt/conda/lib

+ export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:$CONDA_PREFIX/lib

./tests/python_interface_test.sh after-installation

- name: Run multi-gpu tests

run: |

- export PATH=/opt/conda/bin:$PATH

+ export PATH=$CONDA_PREFIX/bin:$PATH

export CUDNN_DIR=/usr/local/cuda

export CUDA_DIR=/usr/local/cuda

export FF_HOME=$(pwd)

- export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/opt/conda/lib

+ export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:$CONDA_PREFIX/lib

# C++ tests

./tests/cpp_gpu_tests.sh 4

# Python tests

- ./tests/multi_gpu_tests.sh 4

+ ./tests/training_tests.sh 4

diff --git a/.github/workflows/helpers/install_cudnn.sh b/.github/workflows/helpers/install_cudnn.sh

index 318134e331..73b8e88418 100755

--- a/.github/workflows/helpers/install_cudnn.sh

+++ b/.github/workflows/helpers/install_cudnn.sh

@@ -5,8 +5,11 @@ set -x

# Cd into directory holding this script

cd "${BASH_SOURCE[0]%/*}"

+ubuntu_version=$(lsb_release -rs)

+ubuntu_version=${ubuntu_version//./}

+

# Install CUDNN

-cuda_version=${1:-11.8.0}

+cuda_version=${1:-12.1.1}

cuda_version=$(echo "${cuda_version}" | cut -f1,2 -d'.')

echo "Installing CUDNN for CUDA version: ${cuda_version} ..."

CUDNN_LINK=http://developer.download.nvidia.com/compute/redist/cudnn/v8.0.5/cudnn-11.1-linux-x64-v8.0.5.39.tgz

@@ -44,6 +47,12 @@ elif [[ "$cuda_version" == "11.7" ]]; then

elif [[ "$cuda_version" == "11.8" ]]; then

CUDNN_LINK=https://developer.download.nvidia.com/compute/redist/cudnn/v8.7.0/local_installers/11.8/cudnn-linux-x86_64-8.7.0.84_cuda11-archive.tar.xz

CUDNN_TARBALL_NAME=cudnn-linux-x86_64-8.7.0.84_cuda11-archive.tar.xz

+elif [[ "$cuda_version" == "12.0" || "$cuda_version" == "12.1" || "$cuda_version" == "12.2" || "$cuda_version" == "12.3" || "$cuda_version" == "12.4" || "$cuda_version" == "12.5" ]]; then

+ CUDNN_LINK=https://developer.download.nvidia.com/compute/redist/cudnn/v8.8.0/local_installers/12.0/cudnn-local-repo-ubuntu2004-8.8.0.121_1.0-1_amd64.deb

+ CUDNN_TARBALL_NAME=cudnn-local-repo-ubuntu2004-8.8.0.121_1.0-1_amd64.deb

+else

+ echo "CUDNN support for CUDA version above 12.5 not yet added"

+ exit 1

fi

wget -c -q $CUDNN_LINK

if [[ "$cuda_version" == "11.6" || "$cuda_version" == "11.7" || "$cuda_version" == "11.8" ]]; then

@@ -52,6 +61,17 @@ if [[ "$cuda_version" == "11.6" || "$cuda_version" == "11.7" || "$cuda_version"

sudo cp -r "$CUDNN_EXTRACTED_TARBALL_NAME"/include/* /usr/local/include

sudo cp -r "$CUDNN_EXTRACTED_TARBALL_NAME"/lib/* /usr/local/lib

rm -rf "$CUDNN_EXTRACTED_TARBALL_NAME"

+elif [[ "$CUDNN_TARBALL_NAME" == *.deb ]]; then

+ wget -c -q "https://developer.download.nvidia.com/compute/cuda/repos/ubuntu${ubuntu_version}/x86_64/cuda-keyring_1.1-1_all.deb"

+ sudo dpkg -i cuda-keyring_1.1-1_all.deb

+ sudo apt update -y

+ rm -f cuda-keyring_1.1-1_all.deb

+ sudo dpkg -i $CUDNN_TARBALL_NAME

+ sudo cp /var/cudnn-local-repo-ubuntu2004-8.8.0.121/cudnn-local-A9E17745-keyring.gpg /usr/share/keyrings/

+ sudo apt update -y

+ sudo apt install -y libcudnn8

+ sudo apt install -y libcudnn8-dev

+ sudo apt install -y libcudnn8-samples

else

sudo tar -xzf $CUDNN_TARBALL_NAME -C /usr/local

fi

diff --git a/.github/workflows/helpers/install_dependencies.sh b/.github/workflows/helpers/install_dependencies.sh

index 5ab211c962..6435a37eea 100755

--- a/.github/workflows/helpers/install_dependencies.sh

+++ b/.github/workflows/helpers/install_dependencies.sh

@@ -7,24 +7,61 @@ cd "${BASH_SOURCE[0]%/*}"

# General dependencies

echo "Installing apt dependencies..."

-sudo apt-get update && sudo apt-get install -y --no-install-recommends wget binutils git zlib1g-dev libhdf5-dev && \

+sudo apt-get update && sudo apt-get install -y --no-install-recommends wget binutils git zlib1g-dev libhdf5-dev jq && \

sudo rm -rf /var/lib/apt/lists/*

-# Install CUDNN

-./install_cudnn.sh

-

-# Install HIP dependencies if needed

FF_GPU_BACKEND=${FF_GPU_BACKEND:-"cuda"}

+hip_version=${hip_version:-"5.6"}

if [[ "${FF_GPU_BACKEND}" != @(cuda|hip_cuda|hip_rocm|intel) ]]; then

echo "Error, value of FF_GPU_BACKEND (${FF_GPU_BACKEND}) is invalid."

exit 1

-elif [[ "$FF_GPU_BACKEND" == "hip_cuda" || "$FF_GPU_BACKEND" = "hip_rocm" ]]; then

+fi

+# Install CUDNN if needed

+if [[ "$FF_GPU_BACKEND" == "cuda" || "$FF_GPU_BACKEND" = "hip_cuda" ]]; then

+ # Install CUDNN

+ ./install_cudnn.sh

+ # Install NCCL

+ ./install_nccl.sh

+fi

+# Install HIP dependencies if needed

+if [[ "$FF_GPU_BACKEND" == "hip_cuda" || "$FF_GPU_BACKEND" = "hip_rocm" ]]; then

echo "FF_GPU_BACKEND: ${FF_GPU_BACKEND}. Installing HIP dependencies"

- wget https://repo.radeon.com/amdgpu-install/22.20.5/ubuntu/focal/amdgpu-install_22.20.50205-1_all.deb

- sudo apt-get install -y ./amdgpu-install_22.20.50205-1_all.deb

- rm ./amdgpu-install_22.20.50205-1_all.deb

+ # Check that hip_version is one of 5.3,5.4,5.5,5.6

+ if [[ "$hip_version" != "5.3" && "$hip_version" != "5.4" && "$hip_version" != "5.5" && "$hip_version" != "5.6" ]]; then

+ echo "hip_version '${hip_version}' is not supported, please choose among {5.3, 5.4, 5.5, 5.6}"

+ exit 1

+ fi

+ # Compute script name and url given the version

+ AMD_GPU_SCRIPT_NAME=amdgpu-install_5.6.50600-1_all.deb

+ if [ "$hip_version" = "5.3" ]; then

+ AMD_GPU_SCRIPT_NAME=amdgpu-install_5.3.50300-1_all.deb

+ elif [ "$hip_version" = "5.4" ]; then

+ AMD_GPU_SCRIPT_NAME=amdgpu-install_5.4.50400-1_all.deb

+ elif [ "$hip_version" = "5.5" ]; then

+ AMD_GPU_SCRIPT_NAME=amdgpu-install_5.5.50500-1_all.deb

+ fi

+ AMD_GPU_SCRIPT_URL="https://repo.radeon.com/amdgpu-install/${hip_version}/ubuntu/focal/${AMD_GPU_SCRIPT_NAME}"

+ # Download and install AMD GPU software with ROCM and HIP support

+ wget "$AMD_GPU_SCRIPT_URL"

+ sudo apt-get install -y ./${AMD_GPU_SCRIPT_NAME}

+ sudo rm ./${AMD_GPU_SCRIPT_NAME}

sudo amdgpu-install -y --usecase=hip,rocm --no-dkms

- sudo apt-get install -y hip-dev hipblas miopen-hip rocm-hip-sdk

+ sudo apt-get install -y hip-dev hipblas miopen-hip rocm-hip-sdk rocm-device-libs

+

+ # Install protobuf v3.20.x manually

+ sudo apt-get update -y && sudo apt-get install -y pkg-config zip g++ zlib1g-dev unzip python autoconf automake libtool curl make

+ git clone -b 3.20.x https://github.com/protocolbuffers/protobuf.git

+ cd protobuf/

+ git submodule update --init --recursive

+ ./autogen.sh

+ ./configure

+ cores_available=$(nproc --all)

+ n_build_cores=$(( cores_available -1 ))

+ if (( n_build_cores < 1 )) ; then n_build_cores=1 ; fi

+ make -j $n_build_cores

+ sudo make install

+ sudo ldconfig

+ cd ..

else

echo "FF_GPU_BACKEND: ${FF_GPU_BACKEND}. Skipping installing HIP dependencies"

fi

diff --git a/.github/workflows/helpers/install_nccl.sh b/.github/workflows/helpers/install_nccl.sh

new file mode 100755

index 0000000000..ae6793ea2a

--- /dev/null

+++ b/.github/workflows/helpers/install_nccl.sh

@@ -0,0 +1,51 @@

+#!/bin/bash

+set -euo pipefail

+set -x

+

+# Cd into directory holding this script

+cd "${BASH_SOURCE[0]%/*}"

+

+# Add NCCL key ring

+ubuntu_version=$(lsb_release -rs)

+ubuntu_version=${ubuntu_version//./}

+wget "https://developer.download.nvidia.com/compute/cuda/repos/ubuntu${ubuntu_version}/x86_64/cuda-keyring_1.1-1_all.deb"

+sudo dpkg -i cuda-keyring_1.1-1_all.deb

+sudo apt update -y

+rm -f cuda-keyring_1.1-1_all.deb

+

+# Install NCCL

+cuda_version=${1:-12.1.1}

+cuda_version=$(echo "${cuda_version}" | cut -f1,2 -d'.')

+echo "Installing NCCL for CUDA version: ${cuda_version} ..."

+

+# We need to run a different install command based on the CUDA version, otherwise running `sudo apt install libnccl2 libnccl-dev`

+# will automatically upgrade CUDA to the latest version.

+

+if [[ "$cuda_version" == "11.0" ]]; then

+ sudo apt install libnccl2=2.15.5-1+cuda11.0 libnccl-dev=2.15.5-1+cuda11.0

+elif [[ "$cuda_version" == "11.1" ]]; then

+ sudo apt install libnccl2=2.8.4-1+cuda11.1 libnccl-dev=2.8.4-1+cuda11.1

+elif [[ "$cuda_version" == "11.2" ]]; then

+ sudo apt install libnccl2=2.8.4-1+cuda11.2 libnccl-dev=2.8.4-1+cuda11.2

+elif [[ "$cuda_version" == "11.3" ]]; then

+ sudo apt install libnccl2=2.9.9-1+cuda11.3 libnccl-dev=2.9.9-1+cuda11.3

+elif [[ "$cuda_version" == "11.4" ]]; then

+ sudo apt install libnccl2=2.11.4-1+cuda11.4 libnccl-dev=2.11.4-1+cuda11.4

+elif [[ "$cuda_version" == "11.5" ]]; then

+ sudo apt install libnccl2=2.11.4-1+cuda11.5 libnccl-dev=2.11.4-1+cuda11.5

+elif [[ "$cuda_version" == "11.6" ]]; then

+ sudo apt install libnccl2=2.12.12-1+cuda11.6 libnccl-dev=2.12.12-1+cuda11.6

+elif [[ "$cuda_version" == "11.7" ]]; then

+ sudo apt install libnccl2=2.14.3-1+cuda11.7 libnccl-dev=2.14.3-1+cuda11.7

+elif [[ "$cuda_version" == "11.8" ]]; then

+ sudo apt install libnccl2=2.16.5-1+cuda11.8 libnccl-dev=2.16.5-1+cuda11.8

+elif [[ "$cuda_version" == "12.0" ]]; then

+ sudo apt install libnccl2=2.18.3-1+cuda12.0 libnccl-dev=2.18.3-1+cuda12.0

+elif [[ "$cuda_version" == "12.1" ]]; then

+ sudo apt install libnccl2=2.18.3-1+cuda12.1 libnccl-dev=2.18.3-1+cuda12.1

+elif [[ "$cuda_version" == "12.2" ]]; then

+ sudo apt install libnccl2=2.18.3-1+cuda12.2 libnccl-dev=2.18.3-1+cuda12.2

+else

+ echo "Installing NCCL for CUDA version ${cuda_version} is not supported"

+ exit 1

+fi
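
Review note: the `if`/`elif` chain in `install_nccl.sh` amounts to a lookup from the CUDA major.minor version to a pinned NCCL release. The sketch below restates that mapping as a table, using the version pins from the script. The names `NCCL_PINS` and `nccl_packages` are hypothetical and not part of the PR; this is an illustration of the logic, not a proposed replacement.

```python
# Hypothetical restatement of the pins in install_nccl.sh (names are illustrative).
NCCL_PINS = {
    "11.0": "2.15.5", "11.1": "2.8.4", "11.2": "2.8.4", "11.3": "2.9.9",
    "11.4": "2.11.4", "11.5": "2.11.4", "11.6": "2.12.12", "11.7": "2.14.3",
    "11.8": "2.16.5", "12.0": "2.18.3", "12.1": "2.18.3", "12.2": "2.18.3",
}


def nccl_packages(cuda_version):
    """Return the pinned apt package specs for a CUDA version such as '12.1.1'."""
    # Keep only major.minor, like `cut -f1,2 -d'.'` in the script.
    major_minor = ".".join(cuda_version.split(".")[:2])
    if major_minor not in NCCL_PINS:
        raise ValueError(
            f"Installing NCCL for CUDA version {major_minor} is not supported"
        )
    suffix = f"{NCCL_PINS[major_minor]}-1+cuda{major_minor}"
    return [f"libnccl2={suffix}", f"libnccl-dev={suffix}"]


if __name__ == "__main__":
    print(" ".join(nccl_packages("12.1.1")))
```

Pinning both packages to the same `+cudaX.Y` build is what keeps `apt` from pulling in a newer CUDA, as the comment in the script explains.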

diff --git a/.github/workflows/helpers/oracle_con.py b/.github/workflows/helpers/oracle_con.py

new file mode 100644

index 0000000000..0891d66e99

--- /dev/null

+++ b/.github/workflows/helpers/oracle_con.py

@@ -0,0 +1,37 @@

+import oci

+import argparse

+import os

+

+parser = argparse.ArgumentParser(description="Program with optional flags")

+group = parser.add_mutually_exclusive_group()

+group.add_argument("--start", action="store_true", help="Start action")

+group.add_argument("--stop", action="store_true", help="Stop action")

+parser.add_argument("--instance_id", type=str, required=True, help="instance id required")

+args = parser.parse_args()

+

+oci_key_content = os.getenv("OCI_CLI_KEY_CONTENT")

+

+config = {

+ "user": os.getenv("OCI_CLI_USER"),

+ "key_content": os.getenv("OCI_CLI_KEY_CONTENT"),

+ "fingerprint": os.getenv("OCI_CLI_FINGERPRINT"),

+ "tenancy": os.getenv("OCI_CLI_TENANCY"),

+ "region": os.getenv("OCI_CLI_REGION")

+}

+

+# Initialize the OCI configuration

+oci.config.validate_config(config)

+

+# Initialize the ComputeClient to interact with VM instances

+compute = oci.core.ComputeClient(config)

+

+# Instance ID of the VM to act on, taken from the --instance_id argument

+instance_id = args.instance_id

+

+# Perform the action

+if args.start:

+ # Start the VM

+ compute.instance_action(instance_id, "START")

+else:

+ # Stop the VM

+ compute.instance_action(instance_id, "STOP")
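
Review note: in `oracle_con.py` the `--start`/`--stop` group is mutually exclusive but not required, so running the script with neither flag falls through to the `else` branch and stops the instance. A minimal sketch of one way to make the choice explicit, using only the standard `argparse` API, is shown below. The function name `parse_action` is hypothetical, and the OCI call is deliberately left out.

```python
import argparse


def parse_action(argv):
    """Parse CLI flags and return (action, instance_id); exits if no action flag is given."""
    parser = argparse.ArgumentParser(description="Start or stop a VM instance")
    # required=True makes argparse reject invocations with neither --start nor --stop.
    group = parser.add_mutually_exclusive_group(required=True)
    group.add_argument("--start", action="store_true", help="Start action")
    group.add_argument("--stop", action="store_true", help="Stop action")
    parser.add_argument("--instance_id", type=str, required=True, help="instance id required")
    args = parser.parse_args(argv)
    action = "START" if args.start else "STOP"
    return action, args.instance_id


if __name__ == "__main__":
    # "example-instance-id" is a placeholder, not a real OCID.
    print(parse_action(["--stop", "--instance_id", "example-instance-id"]))
```

The returned action string could then be passed to `compute.instance_action(instance_id, action)` as in the script.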

diff --git a/.github/workflows/helpers/prebuild_legion.sh b/.github/workflows/helpers/prebuild_legion.sh

new file mode 100755

index 0000000000..9f5cbe147a

--- /dev/null

+++ b/.github/workflows/helpers/prebuild_legion.sh

@@ -0,0 +1,75 @@

+#! /usr/bin/env bash

+set -euo pipefail

+

+# Parse input params

+python_version=${python_version:-"empty"}

+gpu_backend=${gpu_backend:-"empty"}

+gpu_backend_version=${gpu_backend_version:-"empty"}

+

+if [[ "${gpu_backend}" != @(cuda|hip_cuda|hip_rocm|intel) ]]; then

+ echo "Error, value of gpu_backend (${gpu_backend}) is invalid. Pick between 'cuda', 'hip_cuda', 'hip_rocm' or 'intel'."

+ exit 1

+else

+ echo "Pre-building Legion with GPU backend: ${gpu_backend}"

+fi

+

+if [[ "${gpu_backend}" == "cuda" || "${gpu_backend}" == "hip_cuda" ]]; then

+ # Check that CUDA version is supported. Versions above 12.0 are not supported because we don't publish Docker images for them yet.

+ if [[ "$gpu_backend_version" != @(11.1|11.2|11.3|11.4|11.5|11.6|11.7|11.8|12.0) ]]; then

+ echo "cuda_version is not supported, please choose among {11.1|11.2|11.3|11.4|11.5|11.6|11.7|11.8|12.0}"

+ exit 1

+ fi

+ export cuda_version="$gpu_backend_version"

+elif [[ "${gpu_backend}" == "hip_rocm" ]]; then

+ # Check that HIP version is supported

+ if [[ "$gpu_backend_version" != @(5.3|5.4|5.5|5.6) ]]; then

+ echo "hip_version is not supported, please choose among {5.3, 5.4, 5.5, 5.6}"

+ exit 1

+ fi

+ export hip_version="$gpu_backend_version"

+else

+ echo "gpu backend: ${gpu_backend} and gpu_backend_version: ${gpu_backend_version} not yet supported."

+ exit 1

+fi

+

+# Cd into directory holding this script

+cd "${BASH_SOURCE[0]%/*}"

+

+export FF_GPU_BACKEND="${gpu_backend}"

+export FF_CUDA_ARCH=all

+export FF_HIP_ARCH=all

+export BUILD_LEGION_ONLY=ON

+export INSTALL_DIR="/usr/legion"

+export python_version="${python_version}"

+

+# Build Docker Flexflow Container

+echo "building docker"

+../../../docker/build.sh flexflow

+

+# Cleanup any existing container with the same name

+docker rm prelegion || true

+

+# Create container to be able to copy data from the image

+docker create --name prelegion flexflow-"${gpu_backend}"-"${gpu_backend_version}":latest

+

+# Copy legion libraries to host

+echo "extract legion library assets"

+mkdir -p ../../../prebuilt_legion_assets

+rm -rf ../../../prebuilt_legion_assets/tmp || true

+docker cp prelegion:$INSTALL_DIR ../../../prebuilt_legion_assets/tmp

+

+

+# Create the tarball file

+cd ../../../prebuilt_legion_assets/tmp

+export LEGION_TARBALL="legion_ubuntu-20.04_${gpu_backend}-${gpu_backend_version}_py${python_version}.tar.gz"

+

+echo "Creating archive $LEGION_TARBALL"

+tar -zcvf "../$LEGION_TARBALL" ./

+cd ..

+echo "Checking the size of the Legion tarball..."

+du -h "$LEGION_TARBALL"

+

+

+# Cleanup

+rm -rf tmp/*

+docker rm prelegion
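
Review note: `prebuild_legion.sh` validates the backend/version pair and then derives the tarball name that the `prebuild-legion` workflow later uploads. The sketch below restates that validation and naming rule so the two files can be checked against each other. `SUPPORTED_VERSIONS` and `legion_tarball_name` are hypothetical names; the version lists and the name pattern are copied from the script.

```python
# Hypothetical restatement of the checks in prebuild_legion.sh.
CUDA_VERSIONS = {"11.1", "11.2", "11.3", "11.4", "11.5", "11.6", "11.7", "11.8", "12.0"}
SUPPORTED_VERSIONS = {
    "cuda": CUDA_VERSIONS,
    "hip_cuda": CUDA_VERSIONS,
    "hip_rocm": {"5.3", "5.4", "5.5", "5.6"},
}


def legion_tarball_name(gpu_backend, gpu_backend_version, python_version):
    """Return the archive name the script would produce, or raise on unsupported input."""
    if gpu_backend not in SUPPORTED_VERSIONS:
        raise ValueError(f"gpu backend {gpu_backend} not yet supported")
    if gpu_backend_version not in SUPPORTED_VERSIONS[gpu_backend]:
        raise ValueError(
            f"version {gpu_backend_version} is not supported for {gpu_backend}"
        )
    return (
        f"legion_ubuntu-20.04_{gpu_backend}-{gpu_backend_version}"
        f"_py{python_version}.tar.gz"
    )


if __name__ == "__main__":
    print(legion_tarball_name("cuda", "12.0", "3.11"))
```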

diff --git a/.github/workflows/multinode-test.yml b/.github/workflows/multinode-test.yml

index 37f81b615f..2fc527bf08 100644

--- a/.github/workflows/multinode-test.yml

+++ b/.github/workflows/multinode-test.yml

@@ -25,6 +25,7 @@ jobs:

run: |

pip3 install pip --upgrade

pip3 install pyopenssl --upgrade

+ pip3 install urllib3 --upgrade

pip3 install pygithub

python3 .github/workflows/helpers/gpu_ci_helper.py

@@ -37,7 +38,7 @@ jobs:

# 10h timeout, instead of default of 360min (6h)

timeout-minutes: 600

container:

- image: ghcr.io/flexflow/flexflow-environment-cuda-11.8:latest

+ image: ghcr.io/flexflow/flexflow-environment-cuda-12.0:latest

options: --gpus all --shm-size=8192m

steps:

- name: Install updated git version

@@ -77,7 +78,7 @@ jobs:

export OMPI_ALLOW_RUN_AS_ROOT=1

export OMPI_ALLOW_RUN_AS_ROOT_CONFIRM=1

export OMPI_MCA_btl_vader_single_copy_mechanism=none

- ./tests/multi_gpu_tests.sh 2 2

+ ./tests/training_tests.sh 2 2

multinode-gpu-test-ucx:

name: Multinode GPU Test with UCX

@@ -86,7 +87,7 @@ jobs:

runs-on: self-hosted

needs: gpu-ci-concierge

container:

- image: ghcr.io/flexflow/flexflow-environment-cuda-11.8:latest

+ image: ghcr.io/flexflow/flexflow-environment-cuda-12.0:latest

options: --gpus all --shm-size=8192m

# 10h timeout, instead of default of 360min (6h)

timeout-minutes: 600

@@ -128,7 +129,7 @@ jobs:

export OMPI_ALLOW_RUN_AS_ROOT=1

export OMPI_ALLOW_RUN_AS_ROOT_CONFIRM=1

export OMPI_MCA_btl_vader_single_copy_mechanism=none

- ./tests/multi_gpu_tests.sh 2 2

+ ./tests/training_tests.sh 2 2

multinode-gpu-test-native-ucx:

name: Multinode GPU Test with native UCX

@@ -137,7 +138,7 @@ jobs:

runs-on: self-hosted

needs: gpu-ci-concierge

container:

- image: ghcr.io/flexflow/flexflow-environment-cuda-11.8:latest

+ image: ghcr.io/flexflow/flexflow-environment-cuda-12.0:latest

options: --gpus all --shm-size=8192m

steps:

- name: Install updated git version

@@ -176,7 +177,7 @@ jobs:

export OMPI_ALLOW_RUN_AS_ROOT=1

export OMPI_ALLOW_RUN_AS_ROOT_CONFIRM=1

export OMPI_MCA_btl_vader_single_copy_mechanism=none

- ./tests/multi_gpu_tests.sh 2 2

+ ./tests/training_tests.sh 2 2

notify-slack:

name: Notify Slack in case of failure

diff --git a/.github/workflows/pip-install-skip.yml b/.github/workflows/pip-install-skip.yml

index f2606b94d8..92c3223e32 100644

--- a/.github/workflows/pip-install-skip.yml

+++ b/.github/workflows/pip-install-skip.yml

@@ -7,6 +7,7 @@ on:

- "deps/**"

- "python/**"

- "setup.py"

+ - "requirements.txt"

- ".github/workflows/helpers/install_dependencies.sh"

- ".github/workflows/pip-install.yml"

workflow_dispatch:

diff --git a/.github/workflows/pip-install.yml b/.github/workflows/pip-install.yml

index 7d60d3bf52..d5acbfc2e1 100644

--- a/.github/workflows/pip-install.yml

+++ b/.github/workflows/pip-install.yml

@@ -7,6 +7,7 @@ on:

- "deps/**"

- "python/**"

- "setup.py"

+ - "requirements.txt"

- ".github/workflows/helpers/install_dependencies.sh"

- ".github/workflows/pip-install.yml"

push:

@@ -18,6 +19,7 @@ on:

- "deps/**"

- "python/**"

- "setup.py"

+ - "requirements.txt"

- ".github/workflows/helpers/install_dependencies.sh"

- ".github/workflows/pip-install.yml"

workflow_dispatch:

@@ -42,10 +44,10 @@ jobs:

run: .github/workflows/helpers/free_space_on_runner.sh

- name: Install CUDA

- uses: Jimver/cuda-toolkit@v0.2.11

+ uses: Jimver/cuda-toolkit@v0.2.16

id: cuda-toolkit

with:

- cuda: "11.8.0"

+ cuda: "12.1.1"

# Disable caching of the CUDA binaries, since it does not give us any significant performance improvement

use-github-cache: "false"

@@ -64,10 +66,11 @@ jobs:

export FF_HOME=$(pwd)

export FF_CUDA_ARCH=70

pip install . --verbose

+ # Remove build folder to check that the installed version can run independently of the build files

+ rm -rf build

- - name: Check availability of Python flexflow.core module

+ - name: Check availability of flexflow modules in Python

run: |

export LD_LIBRARY_PATH="$CUDA_PATH/lib64/stubs:$LD_LIBRARY_PATH"

sudo ln -s "$CUDA_PATH/lib64/stubs/libcuda.so" "$CUDA_PATH/lib64/stubs/libcuda.so.1"

- export CPU_ONLY_TEST=1

- python -c "import flexflow.core; exit()"

+ python -c 'import flexflow.core; import flexflow.serve as ff; exit()'

diff --git a/.github/workflows/prebuild-legion.yml b/.github/workflows/prebuild-legion.yml

new file mode 100644

index 0000000000..633fb00eb8

--- /dev/null

+++ b/.github/workflows/prebuild-legion.yml

@@ -0,0 +1,84 @@

+name: "prebuild-legion"

+on:

+ push:

+ branches:

+ - "inference"

+ paths:

+ - "cmake/**"

+ - "config/**"

+ - "deps/legion/**"

+ - ".github/workflows/helpers/install_dependencies.sh"

+ workflow_dispatch:

+concurrency:

+ group: prebuild-legion-${{ github.head_ref || github.run_id }}

+ cancel-in-progress: true

+

+jobs:

+ prebuild-legion:

+ name: Prebuild Legion with CMake

+ runs-on: ubuntu-20.04

+ defaults:

+ run:

+ shell: bash -l {0} # required to use an activated conda environment

+ strategy:

+ matrix:

+ gpu_backend: ["cuda", "hip_rocm"]

+ gpu_backend_version: ["12.0", "5.6"]

+ python_version: ["3.11"]

+ exclude:

+ - gpu_backend: "cuda"

+ gpu_backend_version: "5.6"

+ - gpu_backend: "hip_rocm"

+ gpu_backend_version: "12.0"

+ fail-fast: false

+ steps:

+ - name: Checkout Git Repository

+ uses: actions/checkout@v3

+ with:

+ submodules: recursive

+

+ - name: Free additional space on runner

+ run: .github/workflows/helpers/free_space_on_runner.sh

+

+ - name: Build Legion

+ env:

+ gpu_backend: ${{ matrix.gpu_backend }}

+ gpu_backend_version: ${{ matrix.gpu_backend_version }}

+ python_version: ${{ matrix.python_version }}

+ run: .github/workflows/helpers/prebuild_legion.sh

+

+ - name: Archive compiled Legion library

+ uses: actions/upload-artifact@v3

+ with:

+ name: legion_ubuntu-20.04_${{ matrix.gpu_backend }}-${{ matrix.gpu_backend_version }}_py${{ matrix.python_version }}

+ path: prebuilt_legion_assets/legion_ubuntu-20.04_${{ matrix.gpu_backend }}-${{ matrix.gpu_backend_version }}_py${{ matrix.python_version }}.tar.gz

+

+ create-release:

+ name: Create new release

+ runs-on: ubuntu-20.04

+ needs: prebuild-legion

+ steps:

+ - name: Checkout Git Repository

+ uses: actions/checkout@v3

+ - name: Free additional space on runner

+ run: .github/workflows/helpers/free_space_on_runner.sh

+ - name: Create folder for artifacts

+ run: mkdir artifacts unwrapped_artifacts

+ - name: Download artifacts

+ uses: actions/download-artifact@v3

+ with:

+ path: ./artifacts

+ - name: Display structure of downloaded files

+ working-directory: ./artifacts

+ run: ls -R

+ - name: Unwrap all artifacts

+ working-directory: ./artifacts

+ run: find . -maxdepth 2 -mindepth 2 -type f -name "*.tar.gz" -exec mv {} ../unwrapped_artifacts/ \;

+ - name: Get datetime

+ run: echo "RELEASE_DATETIME=$(date '+%Y-%m-%dT%H-%M-%S')" >> $GITHUB_ENV

+ - name: Release

+ env:

+ NAME: ${{ env.RELEASE_DATETIME }}

+ TAG_NAME: ${{ env.RELEASE_DATETIME }}

+ GITHUB_TOKEN: ${{ secrets.FLEXFLOW_TOKEN }}

+ run: gh release create $TAG_NAME ./unwrapped_artifacts/*.tar.gz --repo flexflow/flexflow-third-party
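
Review note: the `strategy.matrix` in `prebuild-legion.yml` lists two backends and two versions, then uses `exclude` to drop the mismatched pairs. The sketch below works through that expansion to show which jobs remain. It models the documented behavior (cross product, then removal of any combination that matches every key of an `exclude` entry); the function `expand_matrix` is a hypothetical illustration, not GitHub's implementation.

```python
from itertools import product

matrix = {
    "gpu_backend": ["cuda", "hip_rocm"],
    "gpu_backend_version": ["12.0", "5.6"],
    "python_version": ["3.11"],
}
exclude = [
    {"gpu_backend": "cuda", "gpu_backend_version": "5.6"},
    {"gpu_backend": "hip_rocm", "gpu_backend_version": "12.0"},
]


def expand_matrix(matrix, exclude):
    """Return the job configurations left after applying the exclude rules."""
    keys = list(matrix)
    combos = [dict(zip(keys, values)) for values in product(*(matrix[k] for k in keys))]
    # A combination is dropped when it matches every key/value of some exclude entry.
    return [
        combo
        for combo in combos
        if not any(all(combo.get(k) == v for k, v in rule.items()) for rule in exclude)
    ]


if __name__ == "__main__":
    for job in expand_matrix(matrix, exclude):
        print(job)
```

Under these assumptions only two jobs run: `cuda` with `12.0` and `hip_rocm` with `5.6`, each on Python `3.11`.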

diff --git a/.gitignore b/.gitignore

index 20d3979b08..cc34c1a7b6 100644

--- a/.gitignore

+++ b/.gitignore

@@ -15,6 +15,11 @@ __pycache__/

# C extensions

*.so

+/inference/weights/*

+/inference/tokenizer/*

+/inference/prompt/*

+/inference/output/*

+

# Distribution / packaging

.Python

build/

@@ -83,10 +88,7 @@ docs/build/

# Doxygen documentation

docs/doxygen/output/

-

-# Exhale documentation

-docs/source/_doxygen/

-docs/source/c++_api/

+docs/doxygen/cpp_api/

# PyBuilder

.pybuilder/

@@ -179,6 +181,15 @@ train-labels-idx1-ubyte

# Logs

logs/

+gpt_tokenizer

# pip version

python/flexflow/version.txt

+

+inference_tensors

+hf_peft_tensors

+lora_training_logs

+

+Untitled-1.ipynb

+Untitled-2.ipynb

+tests/inference/python_test_configs/*.json

diff --git a/.gitmodules b/.gitmodules

index b8419fda94..c68582d4ac 100644

--- a/.gitmodules

+++ b/.gitmodules

@@ -19,3 +19,7 @@

[submodule "deps/json"]

path = deps/json

url = https://github.com/nlohmann/json.git

+[submodule "deps/tokenizers-cpp"]

+ path = deps/tokenizers-cpp

+ url = https://github.com/mlc-ai/tokenizers-cpp.git

+ fetchRecurseSubmodules = true

\ No newline at end of file

diff --git a/CMakeLists.txt b/CMakeLists.txt

index 8ad3b81f9c..f06969ae04 100644

--- a/CMakeLists.txt

+++ b/CMakeLists.txt

@@ -1,6 +1,7 @@

cmake_minimum_required(VERSION 3.10)

project(FlexFlow)

+

include(ExternalProject)

# Set policy CMP0074 to eliminate cmake warnings

@@ -12,7 +13,21 @@ if (CMAKE_VERSION VERSION_GREATER_EQUAL "3.24.0")

endif()

set(CMAKE_MODULE_PATH ${CMAKE_MODULE_PATH} ${CMAKE_CURRENT_LIST_DIR}/cmake)

set(FLEXFLOW_ROOT ${CMAKE_CURRENT_LIST_DIR})

-set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -UNDEBUG")

+set(CMAKE_CXX_FLAGS "-std=c++17 ${CMAKE_CXX_FLAGS} -fPIC -UNDEBUG")

+set(CMAKE_HIP_FLAGS "-std=c++17 ${CMAKE_HIP_FLAGS} -fPIC -UNDEBUG")

+

+# set std 17

+#set(CMAKE_CXX_STANDARD 17)

+#set(CMAKE_CUDA_STANDARD 17)

+

+option(INFERENCE_TESTS "Run inference tests" OFF)

+set(LIBTORCH_PATH "${CMAKE_CURRENT_SOURCE_DIR}/../libtorch" CACHE STRING "LibTorch Path")

+if (INFERENCE_TESTS)

+ find_package(Torch REQUIRED PATHS ${LIBTORCH_PATH} NO_DEFAULT_PATH)

+ set(CMAKE_CXX_FLAGS "-std=c++17 ${CMAKE_CXX_FLAGS} -fPIC ${TORCH_CXX_FLAGS}")

+ message(STATUS "LIBTORCH_PATH: ${LIBTORCH_PATH}")

+ message(STATUS "TORCH_LIBRARIES: ${TORCH_LIBRARIES}")

+endif()

# Set a default build type if none was specified

set(default_build_type "Debug")

@@ -22,8 +37,33 @@ if(NOT CMAKE_BUILD_TYPE AND NOT CMAKE_CONFIGURATION_TYPES)

STRING "Choose the type of build." FORCE)

endif()

+# option for using Python

+option(FF_USE_PYTHON "Enable Python" ON)

+if (FF_USE_PYTHON)

+ find_package(Python3 COMPONENTS Interpreter Development)

+endif()

+

+if(INSTALL_DIR)

+ message(STATUS "INSTALL_DIR: ${INSTALL_DIR}")

+ set(CMAKE_INSTALL_PREFIX ${INSTALL_DIR} CACHE PATH "Installation directory" FORCE)

+else()

+ # Install DIR not set. Use default, unless a conda environment is in use

+ if ((DEFINED ENV{CONDA_PREFIX} OR (Python3_EXECUTABLE AND Python3_EXECUTABLE MATCHES "conda")) AND NOT FF_BUILD_FROM_PYPI)

+ if (DEFINED ENV{CONDA_PREFIX})

+ set(CONDA_PREFIX $ENV{CONDA_PREFIX})

+ else()

+ get_filename_component(CONDA_PREFIX "${Python3_EXECUTABLE}" DIRECTORY)

+ get_filename_component(CONDA_PREFIX "${CONDA_PREFIX}" DIRECTORY)

+ endif()

+ # Set CMAKE_INSTALL_PREFIX to the Conda environment's installation path

+ set(CMAKE_INSTALL_PREFIX ${CONDA_PREFIX} CACHE PATH "Installation directory" FORCE)

+ message(STATUS "Active conda environment detected. Setting CMAKE_INSTALL_PREFIX: ${CMAKE_INSTALL_PREFIX}")

+ endif()

+endif()

+

# do not disable assertions even if in release mode

set(CMAKE_CXX_FLAGS_RELEASE "${CMAKE_CXX_FLAGS_RELEASE} -UNDEBUG")

+set(CMAKE_HIP_FLAGS_RELEASE "${CMAKE_HIP_FLAGS_RELEASE} -UNDEBUG")

if(${CMAKE_SYSTEM_NAME} MATCHES "Linux")

set(LIBEXT ".so")

@@ -35,114 +75,23 @@ option(FF_BUILD_FROM_PYPI "Build from pypi" OFF)

# build shared or static flexflow lib

option(BUILD_SHARED_LIBS "Build shared libraries instead of static ones" ON)

-# option for using Python

-option(FF_USE_PYTHON "Enable Python" ON)

+# option for building legion only

+option(BUILD_LEGION_ONLY "Build Legion only" OFF)

# option to download pre-compiled NCCL/Legion libraries

option(FF_USE_PREBUILT_NCCL "Enable use of NCCL pre-compiled library, if available" ON)

option(FF_USE_PREBUILT_LEGION "Enable use of Legion pre-compiled library, if available" ON)

option(FF_USE_ALL_PREBUILT_LIBRARIES "Enable use of all pre-compiled libraries, if available" OFF)

-# option for using Python

-set(FF_GASNET_CONDUITS aries udp mpi ibv ucx)

+# option for using network

+set(FF_GASNET_CONDUITS aries udp mpi ibv)

set(FF_GASNET_CONDUIT "mpi" CACHE STRING "Select GASNet conduit ${FF_GASNET_CONDUITS}")

set_property(CACHE FF_GASNET_CONDUIT PROPERTY STRINGS ${FF_GASNET_CONDUITS})

set(FF_LEGION_NETWORKS "" CACHE STRING "Network backend(s) to use")

-if ((FF_LEGION_NETWORKS STREQUAL "gasnet" AND FF_GASNET_CONDUIT STREQUAL "ucx") OR FF_LEGION_NETWORKS STREQUAL "ucx")

- if("${FF_UCX_URL}" STREQUAL "")

- set(UCX_URL "https://github.com/openucx/ucx/releases/download/v1.14.0-rc1/ucx-1.14.0.tar.gz")

- else()

- set(UCX_URL "${FF_UCX_URL}")

- endif()

-

- set(UCX_DIR ${CMAKE_CURRENT_BINARY_DIR}/ucx)

- get_filename_component(UCX_COMPRESSED_FILE_NAME "${UCX_URL}" NAME)

- # message(STATUS "UCX_URL: ${UCX_URL}")

- # message(STATUS "UCX_COMPRESSED_FILE_NAME: ${UCX_COMPRESSED_FILE_NAME}")

- set(UCX_COMPRESSED_FILE_PATH "${CMAKE_CURRENT_BINARY_DIR}/${UCX_COMPRESSED_FILE_NAME}")

- set(UCX_BUILD_NEEDED OFF)

- set(UCX_CONFIG_FILE ${UCX_DIR}/config.txt)

- set(UCX_BUILD_OUTPUT ${UCX_DIR}/build.log)

-

- if(EXISTS ${UCX_CONFIG_FILE})

- file(READ ${UCX_CONFIG_FILE} PREV_UCX_CONFIG)

- # message(STATUS "PREV_UCX_CONFIG: ${PREV_UCX_CONFIG}")

- if("${UCX_URL}" STREQUAL "${PREV_UCX_CONFIG}")

- # configs match - no build needed

- set(UCX_BUILD_NEEDED OFF)

- else()

- message(STATUS "UCX configuration has changed - rebuilding...")

- set(UCX_BUILD_NEEDED ON)

- endif()

- else()

- message(STATUS "Configuring and building UCX...")

- set(UCX_BUILD_NEEDED ON)

- endif()

-

- if(UCX_BUILD_NEEDED)

- if(NOT EXISTS "${UCX_COMPRESSED_FILE_PATH}")

- message(STATUS "Downloading openucx/ucx from: ${UCX_URL}")

- file(

- DOWNLOAD

- "${UCX_URL}" "${UCX_COMPRESSED_FILE_PATH}"

- SHOW_PROGRESS

- STATUS status

- LOG log

- )

-

- list(GET status 0 status_code)

- list(GET status 1 status_string)

-

- if(status_code EQUAL 0)

- message(STATUS "Downloading... done")

- else()

- message(FATAL_ERROR "error: downloading '${UCX_URL}' failed

- status_code: ${status_code}

- status_string: ${status_string}

- log:

- --- LOG BEGIN ---

- ${log}

- --- LOG END ---"

- )

- endif()

- else()

- message(STATUS "${UCX_COMPRESSED_FILE_NAME} already exists")

- endif()

-

- execute_process(COMMAND mkdir -p ${UCX_DIR})

- execute_process(COMMAND tar xzf ${UCX_COMPRESSED_FILE_PATH} -C ${UCX_DIR} --strip-components 1)

- message(STATUS "Building UCX...")

- execute_process(

- COMMAND sh -c "cd ${UCX_DIR} && ${UCX_DIR}/contrib/configure-release --prefix=${UCX_DIR}/install --enable-mt && make -j8 && make install"

- RESULT_VARIABLE UCX_BUILD_STATUS

- OUTPUT_FILE ${UCX_BUILD_OUTPUT}

- ERROR_FILE ${UCX_BUILD_OUTPUT}

- )

-