diff --git a/.goreleaser.yml b/.goreleaser.yml

index 29fff213..b5f710f1 100644

--- a/.goreleaser.yml

+++ b/.goreleaser.yml

@@ -31,13 +31,7 @@ builds:

goarch: arm

goarm: 5

archives:

- - replacements:

- darwin: macos

- linux: linux

- windows: windows

- 386: 386

- amd64: amd64

- format_overrides:

+ - format_overrides:

- goos: windows

format: zip

files:

diff --git a/README.md b/README.md

index a8844f55..415097dc 100644

--- a/README.md

+++ b/README.md

@@ -1,8 +1,9 @@

-

+

- Distributed Performance Testing Platform

-

+

+

+Ddosify: "Canva" of Observability

@@ -15,9 +16,8 @@

-## Ddosify Self-Hosted (Distributed, No-code UI): [More →](./selfhosted/README.md)

- +

+

### Quick Start

@@ -25,45 +25,72 @@

```bash

curl -sSL https://raw.githubusercontent.com/ddosify/ddosify/master/selfhosted/install.sh | bash

```

-

-## Ddosify Engine (Single node, usage on CLI): [More →](./engine_docs/README.md)

- +

+

-### Quick Start

+## What is Ddosify?

+Ddosify is a magic wand that instantly spots glitches and helps keep your infrastructure and applications performing smoothly while saving you time and money. The Ddosify Platform combines Performance Testing and Kubernetes Observability, and because the two are integrated, it can pinpoint performance issues effortlessly.

-```bash

-docker run -it --rm ddosify/ddosify ddosify -t https://app.servdown.com

-```

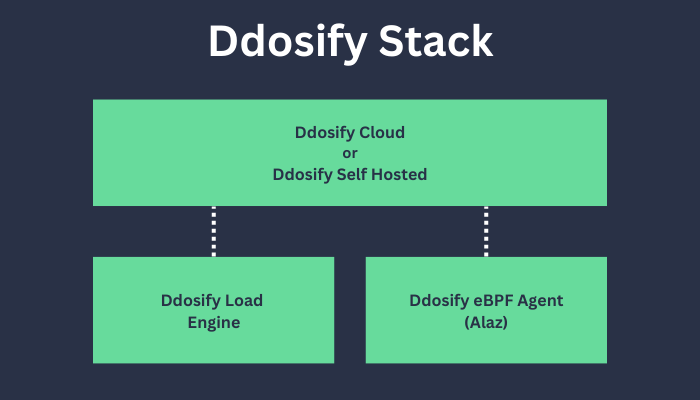

+The Ddosify Stack consists of four parts: **Ddosify Engine, Ddosify eBPF Agent (Alaz), Ddosify Self-Hosted, and Ddosify Cloud**.

-## What is Ddosify?

-Ddosify is a comprehensive performance testing platform, designed specifically to evaluate backend load and latency. It offers three distinct deployment options to cater to various needs: Ddosify Engine, Ddosify Self-Hosted, and Ddosify Cloud.

+

+ +

+

### :rocket: Ddosify Engine

-This is the load engine of Ddosify, written in Golang. It is fully open-source and can be used on the CLI. Ddosify Engine is available via Docker, Docker Extension, Homebrew Tap, and downloadable pre-compiled binaries from the releases page for macOS, Linux, and Windows.

+This is the load engine of Ddosify, written in Golang. Ddosify Self-Hosted and Ddosify Cloud use it for load generation. It is fully open-source and can be used on the CLI as a standalone tool. Ddosify Engine is available via [Docker](https://hub.docker.com/r/ddosify/ddosify), [Docker Extension](https://hub.docker.com/extensions/ddosify/ddosify-docker-extension), [Homebrew Tap](https://github.com/ddosify/ddosify#homebrew-tap-macos-and-linux), and downloadable pre-compiled binaries from the [releases page](https://github.com/ddosify/ddosify/releases/latest) for macOS, Linux, and Windows.

Check out the [Engine Docs](https://github.com/ddosify/ddosify/tree/master/engine_docs) page for more information and usage.

+### 🐝 Ddosify eBPF Agent (Alaz)

+[Alaz](https://github.com/ddosify/alaz) is an open-source Ddosify eBPF agent that can inspect and collect Kubernetes (K8s) service traffic without code instrumentation, sidecars, or service restarts. Alaz is deployed as a DaemonSet on your Kubernetes cluster. It collects metrics and sends them to Ddosify Cloud or Ddosify Self-Hosted. It also embeds the Prometheus node-exporter, so you get visibility into your cluster nodes as well.

+

+Check out the [Alaz](https://github.com/ddosify/alaz) repository for more information and usage.

+

### 🏠 Ddosify Self-Hosted

-In contrast to the Engine version, Ddosify Self-Hosted features a web-based user interface and distributed load generation capabilities. While it shares many of the same functionalities as Ddosify Cloud, the Self-Hosted version is designed to be deployed within your own infrastructure for enhanced control and customization. And it's completely Free!

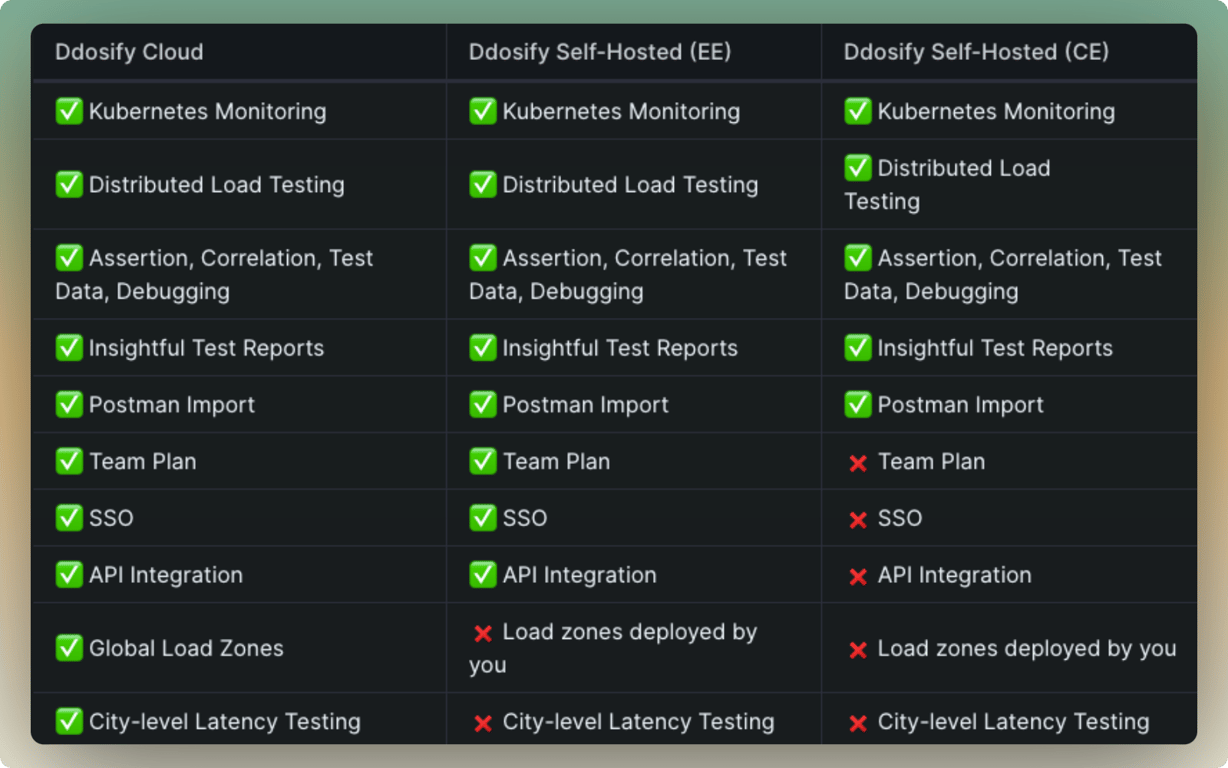

+Ddosify Self-Hosted features a web-based user interface, distributed load generation, and Kubernetes Monitoring capabilities. While it shares many of the same functionalities as Ddosify Cloud, the Self-Hosted version is designed to be deployed within your own infrastructure for enhanced control and customization. It comes in two editions, **Community Edition (CE)** and **Enterprise Edition (EE)**; the comparison table below shows the differences.

Check out the [Self-Hosted](https://github.com/ddosify/ddosify/tree/master/selfhosted) page for more information and usage.

### ☁️ Ddosify Cloud

-Ddosify Cloud enables users to assess backend endpoints' performance through load and latency testing, offering a user-friendly interface, comprehensive charts, extensive geographic targeting options, and additional features for an improved testing experience.

+With Ddosify Cloud, anyone can test the performance of backend endpoints, monitor Kubernetes clusters, and find bottlenecks in the system. It offers a no-code UI, insightful charts, service maps, and more.

-Check out [Ddosify Cloud](https://ddosify.com) to start effortless testing.

+Check out [Ddosify Cloud](https://app.ddosify.com/) to instantly find performance issues in your system.

-### ☁️ Ddosify Cloud vs 🏠 Ddosify Self-Hosted vs :rocket: Ddosify Engine

+### ☁️ Ddosify Cloud vs 🏠 Ddosify Self-Hosted EE vs 🏡 Ddosify Self-Hosted CE

- +

+ +

+*CE: Community Edition, EE: Enterprise Edition*

+

+## Observability Features

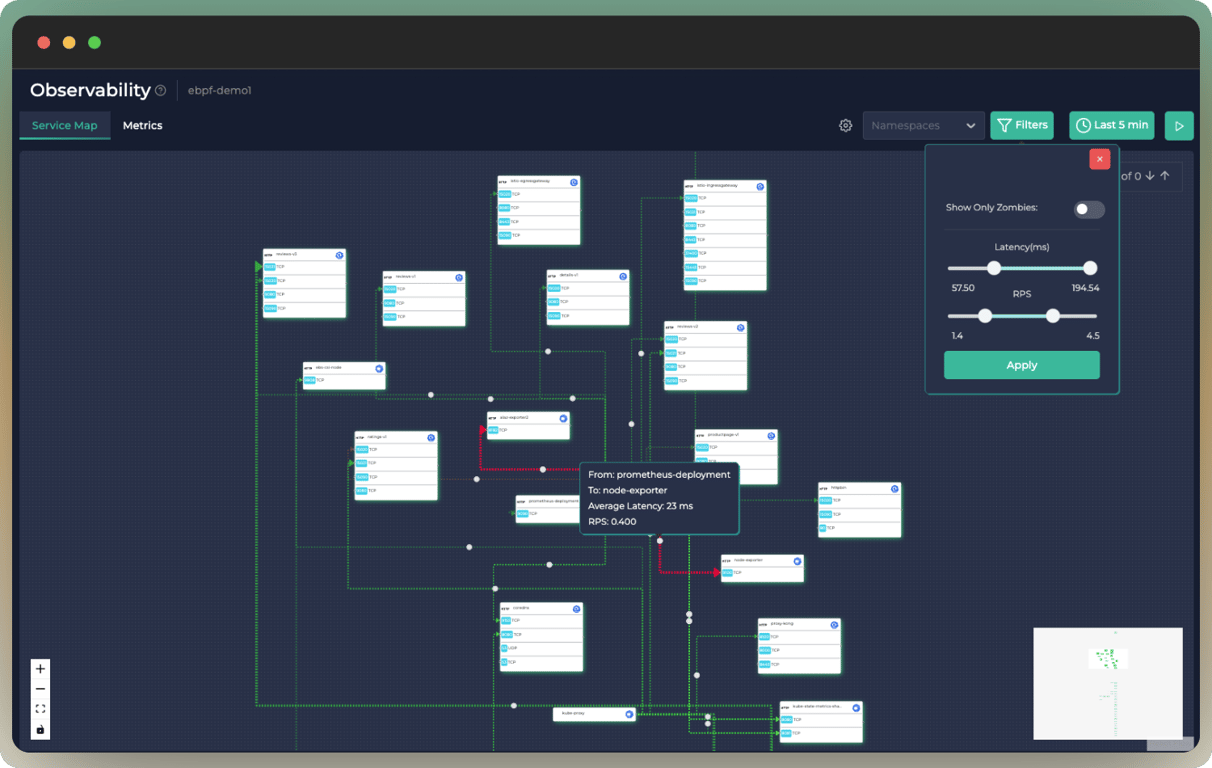

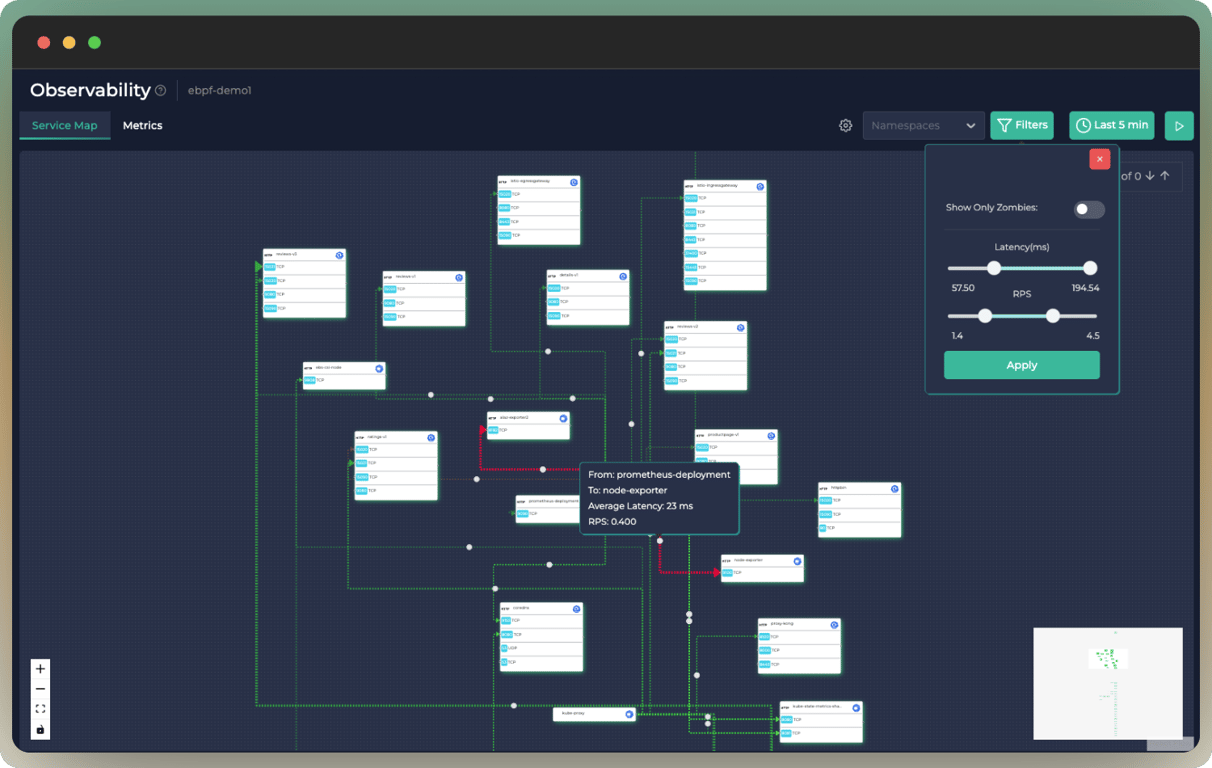

+#### ✅ Service Map

+Easily get insights about what is going on in your cluster. More →

+

+ +

+

+

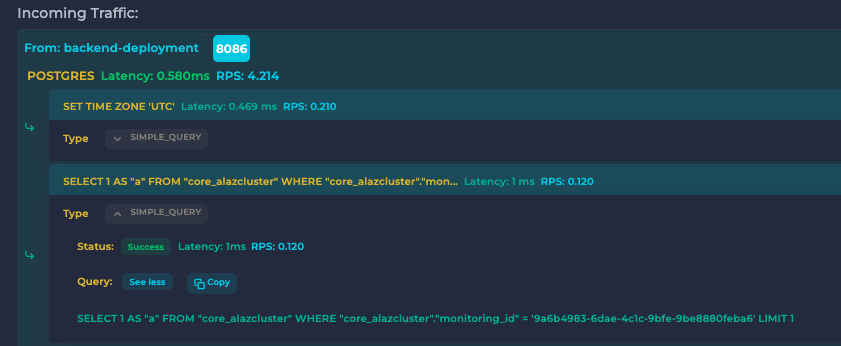

+#### ✅ Detailed Insights

+Inspect incoming and outgoing traffic, SQL queries, and more. More →

+

+ +

+

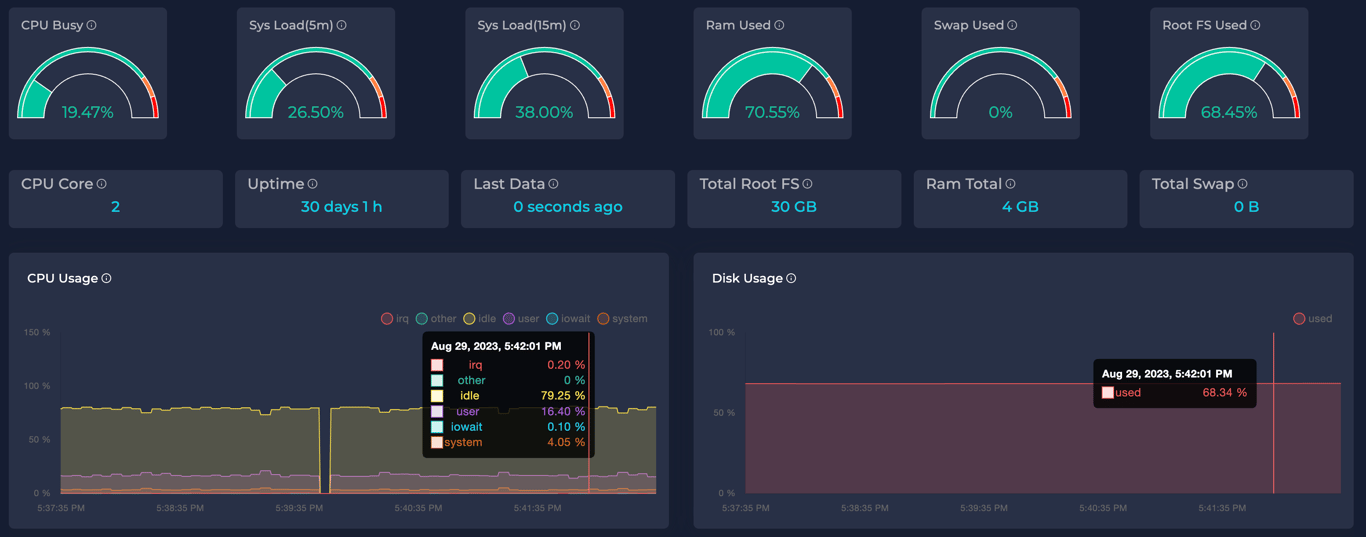

+#### ✅ Metrics Dashboard

+The Metrics Dashboard provides a straightforward way to observe node metrics. More →

+

+ +

+

-## Features

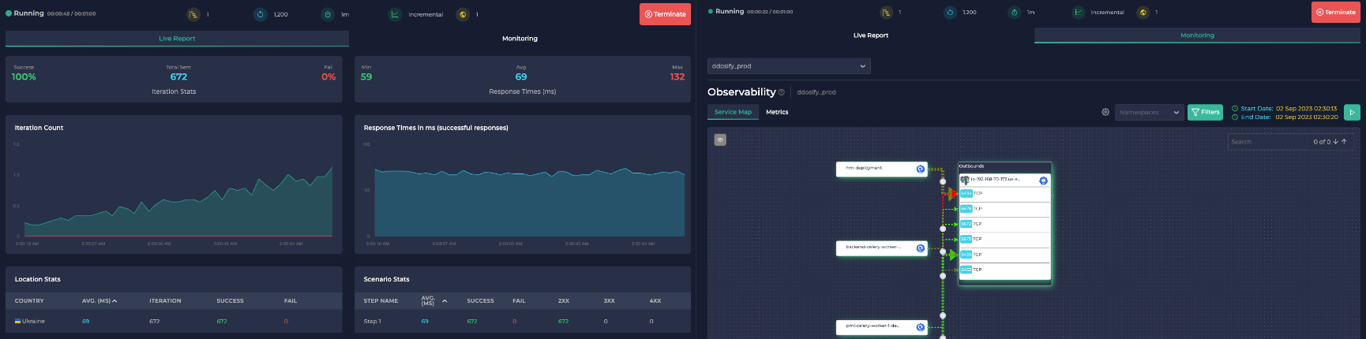

+#### ✅ Find Bottlenecks

+Start a load test and monitor your system all within the same UI.

+

+ +

+

+## Load Testing Features

#### ✅ Parametrization

Use built-in random data generators. More →

@@ -112,7 +139,7 @@ Import Postman collections with ease and transform them into load testing scenar

This repository includes the source code for the Ddosify Engine. You can access Docker Images for the Ddosify Engine and Self Hosted on Docker Hub.

-The [Engine Docs](https://github.com/ddosify/ddosify/tree/master/engine_docs) folder provides information on the installation, usage, and features of the Ddosify Engine. The [Self-Hosted](https://github.com/ddosify/ddosify/tree/master/selfhosted) folder contains installation instructions for the Self-Hosted version. To learn about the usage of both Self-Hosted and Cloud versions, please refer to the [this documentation](https://docs.ddosify.com/concepts/test-suite).

+The [Engine Docs](https://github.com/ddosify/ddosify/tree/master/engine_docs) folder provides information on the installation, usage, and features of the Ddosify Engine. The [Self-Hosted](https://github.com/ddosify/ddosify/tree/master/selfhosted) folder contains installation instructions for the Self-Hosted version. [Ddosify eBPF agent (Alaz)](https://github.com/ddosify/alaz) has its own repository. To learn how to use the Self-Hosted and Cloud versions, please refer to [this documentation](https://docs.ddosify.com/concepts/test-suite).

## Communication

diff --git a/assets/ddosify.profile b/assets/ddosify.profile

index 85b569a0..f23684b4 100644

--- a/assets/ddosify.profile

+++ b/assets/ddosify.profile

@@ -11,4 +11,4 @@ cat< 0 {

- fmt.Fprintf(w, "%s\n", blue(fmt.Sprintf("- Environment Variables")))

+ fmt.Fprintf(w, "%s\n", blue("- Environment Variables"))

for eKey, eVal := range verboseInfo.Envs {

switch eVal.(type) {

case map[string]interface{}:

@@ -228,7 +228,7 @@ func (s *stdout) printInDebugMode(input chan *types.ScenarioResult) {

fmt.Fprintf(w, "\n")

}

if len(verboseInfo.TestData) > 0 {

- fmt.Fprintf(w, "%s\n", blue(fmt.Sprintf("- Test Data")))

+ fmt.Fprintf(w, "%s\n", blue("- Test Data"))

for eKey, eVal := range verboseInfo.TestData {

switch eVal.(type) {

@@ -260,7 +260,7 @@ func (s *stdout) printInDebugMode(input chan *types.ScenarioResult) {

fmt.Fprint(out, b.String())

break

}

- fmt.Fprintf(w, "%s\n", blue(fmt.Sprintf("- Request")))

+ fmt.Fprintf(w, "%s\n", blue("- Request"))

fmt.Fprintf(w, "\tTarget: \t%s \n", verboseInfo.Request.Url)

fmt.Fprintf(w, "\tMethod: \t%s \n", verboseInfo.Request.Method)

@@ -276,7 +276,7 @@ func (s *stdout) printInDebugMode(input chan *types.ScenarioResult) {

if verboseInfo.Error == "" {

// response

- fmt.Fprintf(w, "\n%s\n", blue(fmt.Sprintf("- Response")))

+ fmt.Fprintf(w, "\n%s\n", blue("- Response"))

fmt.Fprintf(w, "\tStatusCode:\t%-5d \n", verboseInfo.Response.StatusCode)

fmt.Fprintf(w, "\tResponseTime:\t%-5d(ms) \n", verboseInfo.Response.ResponseTime)

fmt.Fprintf(w, "\t%s\n", "Headers: ")

@@ -291,14 +291,14 @@ func (s *stdout) printInDebugMode(input chan *types.ScenarioResult) {

}

if len(verboseInfo.FailedCaptures) > 0 {

- fmt.Fprintf(w, "%s\n", yellow(fmt.Sprintf("- Failed Captures")))

+ fmt.Fprintf(w, "%s\n", yellow("- Failed Captures"))

for wKey, wVal := range verboseInfo.FailedCaptures {

fmt.Fprintf(w, "\t\t%s: \t%s \n", wKey, wVal)

}

}

if len(verboseInfo.FailedAssertions) > 0 {

- fmt.Fprintf(w, "%s", yellow(fmt.Sprintf("- Failed Assertions")))

+ fmt.Fprintf(w, "%s", yellow("- Failed Assertions"))

for _, failAssertion := range verboseInfo.FailedAssertions {

fmt.Fprintf(w, "\n\t\tRule: %s\n", failAssertion.Rule)

prettyReceived, _ := json.MarshalIndent(failAssertion.Received, "\t\t", "\t")
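
The stdout.go hunks above drop `fmt.Sprintf` wrappers around constant strings. With no arguments and no `%` verbs in the literal, `fmt.Sprintf` returns the format string unchanged, so the wrapper only adds overhead. A minimal sketch of the before/after equivalence; `blue` here is a hypothetical stand-in for the color helper used in stdout.go, not its actual implementation:

```go
package main

import "fmt"

// blue is a hypothetical stand-in for the color helper in stdout.go;
// it simply wraps the text in ANSI escape codes for blue output.
func blue(s string) string {
	return "\x1b[34m" + s + "\x1b[0m"
}

// headerBefore mirrors the old call shape: Sprintf with a constant
// format and no arguments returns the format string unchanged.
func headerBefore() string {
	return blue(fmt.Sprintf("- Request"))
}

// headerAfter mirrors the new call shape: the literal is passed directly.
func headerAfter() string {
	return blue("- Request")
}
```

The equivalence only holds while the literal contains no `%`: a literal such as `"100%"` would come out of `Sprintf` as `100%!(NOVERB)` but is printed verbatim when passed directly.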

diff --git a/core/scenario/requester/http.go b/core/scenario/requester/http.go

index 3d8b403c..d9f1065f 100644

--- a/core/scenario/requester/http.go

+++ b/core/scenario/requester/http.go

@@ -437,7 +437,12 @@ func (h *HttpRequester) prepareReq(envs map[string]interface{}, trace *httptrace

if errURL != nil {

return nil, errURL

}

- httpReq.Host = httpReq.URL.Host

+

+ // If no Host header is given, set Host from the original URL.

+ // Note that initRequestInstance builds the request with a temporary URL.

+ if httpReq.Header.Get("Host") == "" {

+ httpReq.Host = httpReq.URL.Host

+ }

// header

if h.containsDynamicField["header"] {

@@ -619,10 +624,13 @@ func (h *HttpRequester) initRequestInstance() (err error) {

// Headers

header := make(http.Header)

for k, v := range h.packet.Headers {

+ header.Set(k, v)

+ // Since a temporary URL is used, request.Host must be overridden;

+ // otherwise it would be app.ddosify.com. Use the Host header if set.

+ // prepareReq later sets Host from the real URL only when no Host

+ // header is present.

if strings.EqualFold(k, "Host") {

h.request.Host = v

- } else {

- header.Set(k, v)

}

}
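
The http.go change keeps a user-supplied `Host` header instead of always overwriting `Request.Host` with the URL host. This matters because Go's HTTP client writes the Host line from `Request.Host` (falling back to `URL.Host`) and ignores a `Host` entry in `Request.Header`. Below is a simplified sketch of the two-phase flow, assuming a placeholder URL stands in for the engine's temporary URL; `buildRequest` and `placeholder.invalid` are illustrative names, not the engine's API:

```go
package main

import (
	"net/http"
	"net/url"
	"strings"
)

// buildRequest (hypothetical) mimics the engine's flow: create the request
// against a placeholder URL, copy user headers, then swap in the real URL.
func buildRequest(realURL string, headers map[string]string) (*http.Request, error) {
	// Phase 1: placeholder URL, like the temporary URL in initRequestInstance.
	req, err := http.NewRequest(http.MethodGet, "https://placeholder.invalid/", nil)
	if err != nil {
		return nil, err
	}
	for k, v := range headers {
		req.Header.Set(k, v)
		// A Host header must also be copied into req.Host, because the
		// client ignores Header["Host"] when writing the request.
		if strings.EqualFold(k, "Host") {
			req.Host = v
		}
	}

	// Phase 2: apply the real URL, as prepareReq does.
	u, err := url.Parse(realURL)
	if err != nil {
		return nil, err
	}
	req.URL = u
	// Fall back to the URL host only when no Host header was supplied;
	// otherwise the user's override would be lost.
	if req.Header.Get("Host") == "" {
		req.Host = u.Host
	}
	return req, nil
}
```

This matches the updated `TestInitRequest` expectation, where a `Host: test.com` header both stays in `Header` and ends up in `Host`.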

diff --git a/core/scenario/requester/http_test.go b/core/scenario/requester/http_test.go

index 1d62bb3f..bd30c891 100644

--- a/core/scenario/requester/http_test.go

+++ b/core/scenario/requester/http_test.go

@@ -266,6 +266,7 @@ func TestInitRequest(t *testing.T) {

expectedWithHeaders.Header.Set("Header1", "Value1")

expectedWithHeaders.Header.Set("Header2", "Value2")

expectedWithHeaders.Header.Set("User-Agent", "Firefox")

+ expectedWithHeaders.Header.Set("Host", "test.com")

expectedWithHeaders.Host = "test.com"

expectedWithHeaders.SetBasicAuth(sWithHeaders.Auth.Username, sWithHeaders.Auth.Password)

diff --git a/core/scenario/scripting/assertion/assert_test.go b/core/scenario/scripting/assertion/assert_test.go

index a2dba5c7..d8de47be 100644

--- a/core/scenario/scripting/assertion/assert_test.go

+++ b/core/scenario/scripting/assertion/assert_test.go

@@ -693,6 +693,13 @@ func TestAssert(t *testing.T) {

},

expected: true,

},

+ {

+ input: "p98(iteration_duration) == 99",

+ envs: &evaluator.AssertEnv{

+ TotalTime: []int64{34, 37, 39, 44, 45, 55, 66, 67, 72, 75, 77, 89, 92, 98, 99},

+ },

+ expected: true,

+ },

{

input: "p95(iteration_duration) == 99",

envs: &evaluator.AssertEnv{

@@ -810,6 +817,11 @@ func TestAssert(t *testing.T) {

expected: false,

expectedError: "ArgumentError",

},

+ {

+ input: "p98(23)", // arg must be array

+ expected: false,

+ expectedError: "ArgumentError",

+ },

{

input: "p95(23)", // arg must be array

expected: false,

diff --git a/core/scenario/scripting/assertion/evaluator/evaluator.go b/core/scenario/scripting/assertion/evaluator/evaluator.go

index 7db41909..aed42227 100644

--- a/core/scenario/scripting/assertion/evaluator/evaluator.go

+++ b/core/scenario/scripting/assertion/evaluator/evaluator.go

@@ -237,6 +237,15 @@ func Eval(node ast.Node, env *AssertEnv, receivedMap map[string]interface{}) (in

}

}

return percentile(arr, 99)

+ case P98:

+ arr, ok := args[0].([]int64)

+ if !ok {

+ return false, ArgumentError{

+ msg: "argument of percentile funcs must be an int64 array",

+ wrappedErr: nil,

+ }

+ }

+ return percentile(arr, 98)

case P95:

arr, ok := args[0].([]int64)

if !ok {

diff --git a/core/scenario/scripting/assertion/evaluator/function.go b/core/scenario/scripting/assertion/evaluator/function.go

index 8a32f7c9..17054be4 100644

--- a/core/scenario/scripting/assertion/evaluator/function.go

+++ b/core/scenario/scripting/assertion/evaluator/function.go

@@ -208,6 +208,7 @@ var assertionFuncMap = map[string]struct{}{

MAX: {},

AVG: {},

P99: {},

+ P98: {},

P95: {},

P90: {},

P80: {},

@@ -234,6 +235,7 @@ const (

MAX = "max"

AVG = "avg"

P99 = "p99"

+ P98 = "p98"

P95 = "p95"

P90 = "p90"

P80 = "p80"
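
The new `p98` case delegates to the same `percentile` helper as the other `pNN` functions, and that helper's algorithm is not part of this diff. As an assumption, a nearest-rank percentile (sort ascending, then take the value at rank ceil(p*n/100)) reproduces the new test case, where `p98` of the 15 listed durations is 99. `percentileNearestRank` is a hypothetical name, and the engine's helper may use a different method:

```go
package main

import (
	"errors"
	"sort"
)

// percentileNearestRank returns the p-th percentile of arr using the
// nearest-rank method. Assumed method for illustration only.
func percentileNearestRank(arr []int64, p int) (int64, error) {
	if len(arr) == 0 {
		return 0, errors.New("percentile of an empty array is undefined")
	}
	if p <= 0 || p > 100 {
		return 0, errors.New("percentile must be in the range (0, 100]")
	}
	// Sort a copy so the caller's slice is left untouched.
	sorted := make([]int64, len(arr))
	copy(sorted, arr)
	sort.Slice(sorted, func(i, j int) bool { return sorted[i] < sorted[j] })

	// Integer ceiling of p*n/100; always in [1, n] for valid p and n.
	rank := (p*len(sorted) + 99) / 100
	return sorted[rank-1], nil
}
```

Computing the rank with integer arithmetic avoids floating-point rounding near exact boundaries. For the 15-element test data, p98 gives rank 15 (value 99) and p50 gives rank 8 (value 67).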

diff --git a/core/types/scenario.go b/core/types/scenario.go

index d3ba7bc3..4f23398d 100644

--- a/core/types/scenario.go

+++ b/core/types/scenario.go

@@ -86,7 +86,7 @@ func (s *Scenario) validate() error {

// add global envs

for key := range s.Envs {

- if !envVarNameRegexp.Match([]byte(key)) { // not a valid env definition

+ if !envVarNameRegexp.MatchString(key) { // not a valid env definition

return fmt.Errorf("env key is not valid: %s", key)

}

definedEnvs[key] = struct{}{} // exist

@@ -98,7 +98,7 @@ func (s *Scenario) validate() error {

return fmt.Errorf("csv key can not have dot in it: %s", key)

}

for _, s := range splitted {

- if !envVarNameRegexp.Match([]byte(s)) { // not a valid env definition

+ if !envVarNameRegexp.MatchString(s) { // not a valid env definition

return fmt.Errorf("csv key is not valid: %s", key)

}

}

@@ -112,7 +112,7 @@ func (s *Scenario) validate() error {

// enrich Envs map with captured envs from each step

for _, ce := range st.EnvsToCapture {

- if !envVarNameRegexp.Match([]byte(ce.Name)) { // not a valid env definition

+ if !envVarNameRegexp.MatchString(ce.Name) { // not a valid env definition

return fmt.Errorf("captured env key is not valid: %s", ce.Name)

}

definedEnvs[ce.Name] = struct{}{}
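
In scenario.go, `Match([]byte(key))` becomes `MatchString(key)`. Both report the same result; `MatchString` simply avoids converting the string to a byte slice first. A small sketch, assuming a typical identifier pattern, since the real `envVarNameRegexp` definition is not shown in this diff:

```go
package main

import "regexp"

// envVarNameRegexp is a hypothetical pattern for illustration; the actual
// pattern used in scenario.go is not part of this diff.
var envVarNameRegexp = regexp.MustCompile(`^[A-Za-z_][A-Za-z0-9_]*$`)

// validEnvName checks a key with MatchString, which works on the string
// directly instead of requiring a []byte(key) conversion like Match.
func validEnvName(key string) bool {
	return envVarNameRegexp.MatchString(key)
}
```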

diff --git a/engine_docs/README.md b/engine_docs/README.md

index 9baf7adf..e797d9d1 100644

--- a/engine_docs/README.md

+++ b/engine_docs/README.md

@@ -1,8 +1,9 @@

-

+

- Ddosify - High-performance load testing tool

-

+

+

+Ddosify Engine: High-performance load testing tool

@@ -746,6 +747,7 @@ Unlike assertions focused on individual steps, which determine the success or fa

| Function | Parameters | Description |

| ---------- | ---------------------- | ------------------------------------------------- |

| `p99` | ( arr `int array` ) | 99th percentile, use as `p99(iteration_duration)` |

+| `p98` | ( arr `int array` ) | 98th percentile, use as `p98(iteration_duration)` |

| `p95` | ( arr `int array`) | 95th percentile, use as `p95(iteration_duration)` |

| `p90` | ( arr `int array`) | 90th percentile, use as `p90(iteration_duration)` |

| `p80` | ( arr `int array`) | 80th percentile, use as `p80(iteration_duration)` |

@@ -1055,6 +1057,32 @@ Following fields are available for cookie assertion:

- Select Open

- Close the opened terminal

+### OS Limit - Too Many Open Files

+

+If you run large load tests, you may encounter errors like the following:

+

+```

+Server Error Distribution (Count:Reason):

+ 199 :Get "https://example.ddosify.com": dial tcp 188.114.96.3:443: socket: too many open files

+ 159 :Get "https://example.ddosify.com": dial tcp 188.114.97.3:443: socket: too many open files

+```

+

+This happens because the OS limits how many files a process can have open. You can check the current limit with the `ulimit -n` command and raise it to 50000 on both Linux and macOS with the following command. Non-root users cannot go above the hard limit shown by `ulimit -Hn`.

+

+```bash

+ulimit -n 50000

+```

+

+This only changes the limit for the current shell session. To make it permanent, append the command to your shell configuration file: `~/.bashrc` if you use bash, or `~/.zshrc` if you use zsh.

+

+```bash

+# For .bashrc

+echo "ulimit -n 50000" >> ~/.bashrc

+

+# For .zshrc

+echo "ulimit -n 50000" >> ~/.zshrc

+```

+

## Communication

You can join our [Discord Server](https://discord.gg/9KdnrSUZQg) for issues, feature requests, feedbacks or anything else.
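
The new troubleshooting section raises the open-file limit from the shell. A Go program can read the same limits itself, which could be used to warn before a large test starts. A minimal Unix-only sketch (Linux/macOS, where `syscall.Rlimit` fields are `uint64`); `openFileLimits` is an illustrative helper, not part of the engine:

```go
package main

import "syscall"

// openFileLimits reports the soft (current) and hard (maximum) limits on
// open file descriptors for this process, the values behind `ulimit -n`
// and `ulimit -Hn`. Unix-only: Getrlimit is not available on Windows.
func openFileLimits() (soft, hard uint64, err error) {
	var rl syscall.Rlimit
	if err := syscall.Getrlimit(syscall.RLIMIT_NOFILE, &rl); err != nil {
		return 0, 0, err
	}
	return rl.Cur, rl.Max, nil
}
```

Since Go 1.19, Go programs raise their soft limit toward the hard limit at startup, so the soft value seen inside a Go process may be higher than what `ulimit -n` prints in the shell; the hard limit is the ceiling in both cases.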

diff --git a/scripts/install.sh b/scripts/install.sh

index 1f8dc90a..a58f2741 100755

--- a/scripts/install.sh

+++ b/scripts/install.sh

@@ -12,7 +12,7 @@ uname_arch() {

armv*) arch="armv6" ;;

armv*) arch="armv6" ;;

esac

- if [ "$(uname_os)" == "macos" ]; then

+ if [ "$(uname_os)" == "darwin" ]; then

arch="all"

fi

echo ${arch}

@@ -20,9 +20,6 @@ uname_arch() {

uname_os() {

os=$(uname -s | tr '[:upper:]' '[:lower:]')

- case $os in

- darwin) os="macos" ;;

- esac

echo "$os"

}

@@ -58,8 +55,8 @@ if [ ! -f $tmpfolder/$GITHUB_REPO ]; then

fi

binary=$tmpfolder/$GITHUB_REPO

-echo "Installing $GITHUB_REPO to $INSTALL_DIR"

+echo "Installing $GITHUB_REPO to $INSTALL_DIR (sudo access required to write to $INSTALL_DIR)"

sudo install "$binary" $INSTALL_DIR

echo "Installed $GITHUB_REPO to $INSTALL_DIR"

-

+echo "Simple usage: ddosify -t https://testserver.ddosify.com"

rm -rf "${tmpdir}"
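
Together with the removal of the `replacements` block in `.goreleaser.yml`, release archives are named with Go's own OS identifiers, so `uname_os` no longer rewrites `darwin` to `macos`. The detection logic can be sketched in Go as below; `unameOS` and `archFor` are hypothetical helpers that mirror the shell functions, and the `all` architecture is assumed to denote a single macOS archive covering both CPU architectures:

```go
package main

import "strings"

// unameOS mirrors uname_os: lowercase the output of `uname -s`,
// so "Darwin" becomes "darwin", the same spelling as runtime.GOOS.
func unameOS(unameS string) string {
	return strings.ToLower(strings.TrimSpace(unameS))
}

// archFor mirrors the macOS special case in uname_arch: on darwin the
// installer requests the "all" archive regardless of CPU architecture.
func archFor(goos, arch string) string {
	if goos == "darwin" {
		return "all"
	}
	return arch
}
```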

diff --git a/selfhosted/README.md b/selfhosted/README.md

index edd52b29..60e95173 100644

--- a/selfhosted/README.md

+++ b/selfhosted/README.md

@@ -1,15 +1,18 @@

-

+

- Distributed, No-code Performance Testing within Your Own Infrastructure

-

+

+

+Ddosify Self Hosted: "Canva" of Observability

- +

+

This README provides instructions for installing and an overview of the system requirements for Ddosify Self-Hosted. For further information on its features, please refer to the ["What is Ddosify"](https://github.com/ddosify/ddosify/#what-is-ddosify) section in the main README, or consult the complete [documentation](https://docs.ddosify.com/concepts/test-suite).

+ +

## Effortless Installation

✅ **Arm64 and Amd64 Support**: Broad architecture compatibility ensures the tool works seamlessly across different systems on both Linux and MacOS.

@@ -20,6 +23,9 @@ This README provides instructions for installing and an overview of the system r

✅ **Easy to Deploy**: Automated setup processes using Docker Compose and Helm Charts.

+✅ **AWS Marketplace**: [AWS Marketplace](https://aws.amazon.com/marketplace/pp/prodview-mwvnujtgjedjy) listing for easy deployment on AWS (Amazon Web Services).

+

+✅ **Kubernetes Monitoring**: With the [Ddosify eBPF Agent (Alaz)](https://github.com/ddosify/alaz), you can monitor your Kubernetes cluster, build a service map, and collect metrics from your Kubernetes nodes.

## 🛠 Prerequisites

@@ -109,7 +115,7 @@ NAME=ddosify_hammer_1

docker run --name $NAME -dit \

--network selfhosted_ddosify \

--restart always \

- ddosify/selfhosted_hammer:1.0.0

+ ddosify/selfhosted_hammer:1.4.2

```

### **Example 2**: Adding the engine to a different server

@@ -126,7 +132,7 @@ docker run --name $NAME -dit \

--env DDOSIFY_SERVICE_ADDRESS=$DDOSIFY_SERVICE_ADDRESS \

--env IP_ADDRESS=$IP_ADDRESS \

--restart always \

- ddosify/selfhosted_hammer:0.1.0

+ ddosify/selfhosted_hammer:1.4.2

```

You should see `mq_waiting_new_job` log in the engine container logs. This means that the engine is waiting for a job from the service server. After the engine is added, you can see it in the Engines page in the dashboard.

@@ -167,6 +173,9 @@ failed to remove network selfhosted_ddosify: Error response from...

If you want to start the project again, run the script in the [Quick Start](#%EF%B8%8F-quick-start-recommended) section again.

+## Enable Kubernetes Monitoring

+

+To monitor your Kubernetes Cluster, you need to run the [Ddosify eBPF Agent (Alaz)](https://github.com/ddosify/alaz) as a DaemonSet. Refer to the [Alaz](https://github.com/ddosify/alaz) repository for more information.

## 🧩 Services Overview

@@ -180,6 +189,7 @@ If you want to start the project again, run the script in the [Quick Start](#%EF

| `RabbitMQ` | Message broker enabling communication between Hammer Manager and Hammers. |

| `SeaweedFS Object Storage` | Object storage for multipart files and test data (CSV) used in load tests. |

| `Nginx` | Reverse proxy for backend and frontend services. |

+| `Prometheus` | Collects the Kubernetes Monitoring metrics from the Backend service. |

## 📝 License

diff --git a/selfhosted/docker-compose.yml b/selfhosted/docker-compose.yml

index e08cb269..f26751f0 100644

--- a/selfhosted/docker-compose.yml

+++ b/selfhosted/docker-compose.yml

@@ -15,7 +15,7 @@ services:

- ddosify

frontend:

- image: ddosify/selfhosted_frontend:1.1.2

+ image: ddosify/selfhosted_frontend:2.4.1

depends_on:

- backend

restart: always

@@ -24,7 +24,7 @@ services:

- ddosify

backend:

- image: ddosify/selfhosted_backend:1.3.0

+ image: ddosify/selfhosted_backend:2.3.1

depends_on:

- postgres

- influxdb

@@ -38,10 +38,44 @@ services:

pull_policy: always

command: /workspace/start_scripts/start_app_onprem.sh

+ backend-celery-worker:

+ image: ddosify/selfhosted_backend:2.3.1

+ depends_on:

+ - postgres

+ - influxdb

+ - redis

+ - seaweedfs

+ - backend

+ - rabbitmq-celery-backend

+ env_file:

+ - .env

+ networks:

+ - ddosify

+ restart: always

+ pull_policy: always

+ command: /workspace/start_scripts/start_celery_worker.sh

+

+ backend-celery-beat:

+ image: ddosify/selfhosted_backend:2.3.1

+ depends_on:

+ - postgres

+ - influxdb

+ - redis

+ - seaweedfs

+ - backend

+ - rabbitmq-celery-backend

+ env_file:

+ - .env

+ networks:

+ - ddosify

+ restart: always

+ pull_policy: always

+ command: /workspace/start_scripts/start_celery_beat.sh

+

hammermanager:

ports:

- "9901:8001"

- image: ddosify/selfhosted_hammermanager:1.2.0

+ image: ddosify/selfhosted_hammermanager:1.2.2

depends_on:

- postgres

- rabbitmq-job

@@ -55,7 +89,7 @@ services:

command: /workspace/start_scripts/start_app.sh

hammermanager-celery-worker:

- image: ddosify/selfhosted_hammermanager:1.2.0

+ image: ddosify/selfhosted_hammermanager:1.2.2

depends_on:

- postgres

- rabbitmq-job

@@ -70,7 +104,7 @@ services:

command: /workspace/start_scripts/start_celery_worker.sh

hammermanager-celery-beat:

- image: ddosify/selfhosted_hammermanager:1.2.0

+ image: ddosify/selfhosted_hammermanager:1.2.2

depends_on:

- postgres

- rabbitmq-job

@@ -85,7 +119,7 @@ services:

command: /workspace/start_scripts/start_celery_beat.sh

hammer:

- image: ddosify/selfhosted_hammer:1.3.0

+ image: ddosify/selfhosted_hammer:1.4.2

volumes:

- hammer_id:/root/uuid

depends_on:

@@ -101,7 +135,7 @@ services:

pull_policy: always

hammerdebug:

- image: ddosify/selfhosted_hammer:1.3.0

+ image: ddosify/selfhosted_hammer:1.4.2

volumes:

- hammerdebug_id:/root/uuid

depends_on:

@@ -134,6 +168,12 @@ services:

- ddosify

restart: always

+ rabbitmq-celery-backend:

+ image: "rabbitmq:3.9.4"

+ networks:

+ - ddosify

+ restart: always

+

rabbitmq-job:

ports:

- "6672:5672"

@@ -168,7 +208,7 @@ services:

restart: always

seaweedfs:

- image: chrislusf/seaweedfs:3.51

+ image: chrislusf/seaweedfs:3.56

ports:

- "8333:8333"

command: 'server -s3 -dir="/data"'

@@ -178,6 +218,18 @@ services:

volumes:

- seaweedfs_data:/data

+ prometheus:

+ image: prom/prometheus:v2.37.9

+ ports:

+ - "9090:9090"

+ command: --config.file=/prometheus/prometheus.yml --storage.tsdb.path=/prometheus --web.console.libraries=/usr/share/prometheus/console_libraries --web.console.templates=/usr/share/prometheus/consoles --storage.tsdb.retention.time=10d

+ volumes:

+ - ./init_scripts/prometheus/prometheus.yml:/prometheus/prometheus.yml

+ - prometheus_data:/prometheus

+ networks:

+ - ddosify

+ restart: always

+

volumes:

postgres_data:

influxdb_data:

@@ -185,6 +237,7 @@ volumes:

seaweedfs_data:

hammer_id:

hammerdebug_id:

+ prometheus_data:

networks:

ddosify:

diff --git a/selfhosted/init_scripts/prometheus/prometheus.yml b/selfhosted/init_scripts/prometheus/prometheus.yml

new file mode 100644

index 00000000..a26769b5

--- /dev/null

+++ b/selfhosted/init_scripts/prometheus/prometheus.yml

@@ -0,0 +1,16 @@

+global:

+ scrape_interval: 10s

+ evaluation_interval: 10s

+

+alerting:

+ alertmanagers:

+ - static_configs:

+ - targets:

+

+rule_files:

+

+scrape_configs:

+ - job_name: "backend"

+ metrics_path: '/alaz/metrics/scrape'

+ static_configs:

+ - targets: ["backend:8008"]
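
The new `prometheus.yml` scrapes the backend on a non-default path. Prometheus builds each scrape URL from the job's `scheme` (default `http`), the target address, and `metrics_path` (default `/metrics`). A sketch of that composition in Go; `scrapeURL` is illustrative only, and resolving the `backend` host assumes both containers share the `ddosify` Compose network:

```go
package main

import "net/url"

// scrapeURL composes a scrape URL the way a Prometheus static target
// combines with its job settings, applying Prometheus' defaults for an
// empty scheme ("http") or metrics path ("/metrics").
func scrapeURL(scheme, target, metricsPath string) string {
	if scheme == "" {
		scheme = "http"
	}
	if metricsPath == "" {
		metricsPath = "/metrics"
	}
	u := url.URL{Scheme: scheme, Host: target, Path: metricsPath}
	return u.String()
}
```

For the `backend` job this yields `http://backend:8008/alaz/metrics/scrape`, which Prometheus requests every 10 seconds per `scrape_interval`.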

diff --git a/selfhosted/install.sh b/selfhosted/install.sh

index 14a3ee9f..b7b38b2b 100755

--- a/selfhosted/install.sh

+++ b/selfhosted/install.sh

@@ -40,9 +40,11 @@ if ! command -v docker >/dev/null 2>&1; then

fi

# Check if Docker Compose is installed

-if ! command -v docker-compose >/dev/null 2>&1 && ! command -v docker >/dev/null 2>&1 || ! docker compose version >/dev/null 2>&1; then

- echo "❌ Docker Compose not found. Please install Docker Compose and try again."

- exit 1

+if ! command -v docker-compose >/dev/null 2>&1; then

+ if ! docker compose version >/dev/null 2>&1; then

+ echo "❌ Docker Compose not found. Please install Docker Compose and try again."

+ exit 1

+ fi

fi

# Check if Docker is running

@@ -59,12 +61,9 @@ REPO_DIR="$HOME/.ddosify"

if [ -d "$REPO_DIR" ]; then

echo "🔄 Repository already exists at $REPO_DIR - Attempting to update..."

cd "$REPO_DIR"

- git checkout master >/dev/null 2>&1

+ git checkout master

cd "$REPO_DIR/selfhosted"

- git pull >/dev/null 2>&1

-

- # Check for errors during pull

- if [ $? -ne 0 ]; then

+ git pull 2>&1 || {

read -p "⚠️ Error updating repository. Clean and update? [Y/n]: " answer

answer=${answer:-Y}

if [[ $answer =~ ^[Yy]$ ]]; then

@@ -72,7 +71,7 @@ if [ -d "$REPO_DIR" ]; then

git clean -fd >/dev/null 2>&1

git pull >/dev/null 2>&1

fi

- fi

+ }

else

# Clone the repository

echo "📦 Cloning repository to $REPO_DIR directory..."

+

## Effortless Installation

✅ **Arm64 and Amd64 Support**: Broad architecture compatibility ensures the tool works seamlessly across different systems on both Linux and MacOS.

@@ -20,6 +23,9 @@ This README provides instructions for installing and an overview of the system r

✅ **Easy to Deploy**: Automated setup processes using Docker Compose and Helm Charts.
+✅ **AWS Marketplace**: [AWS Marketplace](https://aws.amazon.com/marketplace/pp/prodview-mwvnujtgjedjy) listing for easy deployment on AWS (Amazon Web Services).
+
+✅ **Kubernetes Monitoring**: With the help of [Ddosify eBPF Agent (Alaz)](https://github.com/ddosify/alaz), you can monitor your Kubernetes cluster, create a Service Map, and collect metrics from your Kubernetes nodes.
## 🛠 Prerequisites
@@ -109,7 +115,7 @@ NAME=ddosify_hammer_1
docker run --name $NAME -dit \
--network selfhosted_ddosify \
--restart always \
- ddosify/selfhosted_hammer:1.0.0
+ ddosify/selfhosted_hammer:1.4.2
```
### **Example 2**: Adding the engine to a different server
@@ -126,7 +132,7 @@ docker run --name $NAME -dit \
--env DDOSIFY_SERVICE_ADDRESS=$DDOSIFY_SERVICE_ADDRESS \
--env IP_ADDRESS=$IP_ADDRESS \
--restart always \
- ddosify/selfhosted_hammer:0.1.0
+ ddosify/selfhosted_hammer:1.4.2
```
You should see the `mq_waiting_new_job` log in the engine container logs. This means that the engine is waiting for a job from the service server. After the engine is added, you can see it on the Engines page in the dashboard.
@@ -167,6 +173,9 @@ failed to remove network selfhosted_ddosify: Error response from...
If you want to start the project again, run the script in the [Quick Start](#%EF%B8%8F-quick-start-recommended) section again.
+## Enable Kubernetes Monitoring
+
+To monitor your Kubernetes cluster, you need to run the [Ddosify eBPF Agent (Alaz)](https://github.com/ddosify/alaz) as a DaemonSet. Refer to the [Alaz](https://github.com/ddosify/alaz) repository for more information.
## 🧩 Services Overview
@@ -180,6 +189,7 @@ If you want to start the project again, run the script in the [Quick Start](#%EF
| `RabbitMQ` | Message broker enabling communication between Hammer Manager and Hammers. |
| `SeaweedFS Object Storage` | Object storage for multipart files and test data (CSV) used in load tests. |
| `Nginx` | Reverse proxy for backend and frontend services. |
+| `Prometheus` | Collects the Kubernetes Monitoring metrics from the Backend service. |
## 📝 License

diff --git a/selfhosted/docker-compose.yml b/selfhosted/docker-compose.yml
index e08cb269..f26751f0 100644
--- a/selfhosted/docker-compose.yml
+++ b/selfhosted/docker-compose.yml
@@ -15,7 +15,7 @@ services:
       - ddosify
   frontend:
-    image: ddosify/selfhosted_frontend:1.1.2
+    image: ddosify/selfhosted_frontend:2.4.1
     depends_on:
       - backend
     restart: always
@@ -24,7 +24,7 @@ services:
       - ddosify
   backend:
-    image: ddosify/selfhosted_backend:1.3.0
+    image: ddosify/selfhosted_backend:2.3.1
     depends_on:
       - postgres
       - influxdb
@@ -38,10 +38,44 @@ services:
     pull_policy: always
     command: /workspace/start_scripts/start_app_onprem.sh
+  backend-celery-worker:
+    image: ddosify/selfhosted_backend:2.3.1
+    depends_on:
+      - postgres
+      - influxdb
+      - redis
+      - seaweedfs
+      - backend
+      - rabbitmq-celery-backend
+    env_file:
+      - .env
+    networks:
+      - ddosify
+    restart: always
+    pull_policy: always
+    command: /workspace/start_scripts/start_celery_worker.sh
+
+  backend-celery-beat:
+    image: ddosify/selfhosted_backend:2.3.1
+    depends_on:
+      - postgres
+      - influxdb
+      - redis
+      - seaweedfs
+      - backend
+      - rabbitmq-celery-backend
+    env_file:
+      - .env
+    networks:
+      - ddosify
+    restart: always
+    pull_policy: always
+    command: /workspace/start_scripts/start_celery_beat.sh
+
   hammermanager:
     ports:
       - "9901:8001"
-    image: ddosify/selfhosted_hammermanager:1.2.0
+    image: ddosify/selfhosted_hammermanager:1.2.2
     depends_on:
       - postgres
       - rabbitmq-job
@@ -55,7 +89,7 @@ services:
     command: /workspace/start_scripts/start_app.sh
   hammermanager-celery-worker:
-    image: ddosify/selfhosted_hammermanager:1.2.0
+    image: ddosify/selfhosted_hammermanager:1.2.2
     depends_on:
       - postgres
       - rabbitmq-job
@@ -70,7 +104,7 @@ services:
     command: /workspace/start_scripts/start_celery_worker.sh
   hammermanager-celery-beat:
-    image: ddosify/selfhosted_hammermanager:1.2.0
+    image: ddosify/selfhosted_hammermanager:1.2.2
     depends_on:
       - postgres
       - rabbitmq-job
@@ -85,7 +119,7 @@ services:
     command: /workspace/start_scripts/start_celery_beat.sh
   hammer:
-    image: ddosify/selfhosted_hammer:1.3.0
+    image: ddosify/selfhosted_hammer:1.4.2
     volumes:
       - hammer_id:/root/uuid
     depends_on:
@@ -101,7 +135,7 @@ services:
     pull_policy: always
   hammerdebug:
-    image: ddosify/selfhosted_hammer:1.3.0
+    image: ddosify/selfhosted_hammer:1.4.2
     volumes:
       - hammerdebug_id:/root/uuid
     depends_on:
@@ -134,6 +168,12 @@ services:
       - ddosify
     restart: always
+  rabbitmq-celery-backend:
+    image: "rabbitmq:3.9.4"
+    networks:
+      - ddosify
+    restart: always
+
   rabbitmq-job:
     ports:
       - "6672:5672"
@@ -168,7 +208,7 @@ services:
     restart: always
   seaweedfs:
-    image: chrislusf/seaweedfs:3.51
+    image: chrislusf/seaweedfs:3.56
     ports:
       - "8333:8333"
     command: 'server -s3 -dir="/data"'
@@ -178,6 +218,18 @@ services:
     volumes:
       - seaweedfs_data:/data
+  prometheus:
+    image: prom/prometheus:v2.37.9
+    ports:
+      - "9090:9090"
+    command: --config.file=/prometheus/prometheus.yml --storage.tsdb.path=/prometheus --web.console.libraries=/usr/share/prometheus/console_libraries --web.console.templates=/usr/share/prometheus/consoles --storage.tsdb.retention=10d
+    volumes:
+      - ./init_scripts/prometheus/prometheus.yml:/prometheus/prometheus.yml
+      - prometheus_data:/prometheus
+    networks:
+      - ddosify
+    restart: always
+
 volumes:
   postgres_data:
   influxdb_data:
@@ -185,6 +237,7 @@ volumes:
   seaweedfs_data:
   hammer_id:
   hammerdebug_id:
+  prometheus_data:
 networks:
   ddosify:
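
The two new Celery services reuse the `selfhosted_backend:2.3.1` image and list the new `rabbitmq-celery-backend` broker under `depends_on`. As a rough illustration that is not part of this change, the sketch below feeds the `depends_on` lists visible in these hunks into Python's `graphlib.TopologicalSorter` (Python 3.9+) to produce one start order that respects them. The `backend` entry is cut off in the hunk above, so its list here is partial, and `start_order` and `violations` are hypothetical helper names. Note that the short `depends_on` form only orders container startup; it does not wait for Postgres or RabbitMQ to be ready to accept connections.

```python
from graphlib import TopologicalSorter

# depends_on lists copied from the hunks above. "backend" is truncated in
# this diff, so its list is partial (assumption: only what is visible here).
DEPENDS_ON: dict[str, list[str]] = {
    "backend": ["postgres", "influxdb"],
    "backend-celery-worker": [
        "postgres", "influxdb", "redis", "seaweedfs",
        "backend", "rabbitmq-celery-backend",
    ],
    "backend-celery-beat": [
        "postgres", "influxdb", "redis", "seaweedfs",
        "backend", "rabbitmq-celery-backend",
    ],
}


def start_order(depends_on: dict[str, list[str]]) -> list[str]:
    """Return one valid start order: every service comes after its dependencies.

    TopologicalSorter takes a mapping of node -> predecessors and raises
    graphlib.CycleError if the dependencies form a cycle.
    """
    return list(TopologicalSorter(depends_on).static_order())


def violations(order: list[str], depends_on: dict[str, list[str]]) -> list[tuple[str, str]]:
    """List (service, dependency) pairs where the dependency would start later."""
    position = {name: i for i, name in enumerate(order)}
    return [
        (service, dep)
        for service, deps in depends_on.items()
        for dep in deps
        if position[dep] > position[service]
    ]
```

Any order returned by `start_order(DEPENDS_ON)` places `rabbitmq-celery-backend` and `backend` before both Celery services, and `violations` on that order is an empty list.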

diff --git a/selfhosted/init_scripts/prometheus/prometheus.yml b/selfhosted/init_scripts/prometheus/prometheus.yml
new file mode 100644
index 00000000..a26769b5
--- /dev/null
+++ b/selfhosted/init_scripts/prometheus/prometheus.yml
@@ -0,0 +1,16 @@
+global:
+  scrape_interval: 10s
+  evaluation_interval: 10s
+
+alerting:
+  alertmanagers:
+    - static_configs:
+        - targets:
+
+rule_files:
+
+scrape_configs:
+  - job_name: "backend"
+    metrics_path: '/alaz/metrics/scrape'
+    static_configs:
+      - targets: ["backend:8008"]
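
The new `prometheus.yml` registers one scrape job, `backend`, pointing at `backend:8008` with a custom `metrics_path`. Prometheus scrapes `<scheme>://<target><metrics_path>` (the scheme defaults to `http`) and reports per-target state through its HTTP API at `/api/v1/targets`. The sketch below, which is not part of this diff, checks the `backend` job in such a response. It assumes the documented response shape (`data.activeTargets[]` entries carrying `labels`, `scrapeUrl`, and `health`) and the `9090:9090` port mapping from `docker-compose.yml`. The sample payload is made up for illustration, and `fetch_targets` only works while the stack is running.

```python
import json
import urllib.request

# Assumption: Prometheus is reachable through the "9090:9090" mapping in docker-compose.yml.
PROMETHEUS_URL = "http://localhost:9090"


def expected_scrape_url(target: str, metrics_path: str, scheme: str = "http") -> str:
    """Build the URL Prometheus scrapes for a static target (no extra params)."""
    return f"{scheme}://{target}{metrics_path}"


def job_health(targets_response: dict, job: str) -> dict[str, str]:
    """Map scrapeUrl -> health ("up", "down" or "unknown") for one scrape job."""
    active = targets_response.get("data", {}).get("activeTargets", [])
    return {
        t["scrapeUrl"]: t["health"]
        for t in active
        if t.get("labels", {}).get("job") == job
    }


def fetch_targets(base_url: str = PROMETHEUS_URL) -> dict:
    """Query the Prometheus targets API (requires a running Prometheus)."""
    with urllib.request.urlopen(f"{base_url}/api/v1/targets", timeout=5) as resp:
        return json.load(resp)


if __name__ == "__main__":
    # Hypothetical sample shaped like an /api/v1/targets response.
    sample = {
        "status": "success",
        "data": {
            "activeTargets": [
                {
                    "labels": {"job": "backend", "instance": "backend:8008"},
                    "scrapeUrl": "http://backend:8008/alaz/metrics/scrape",
                    "health": "up",
                }
            ],
            "droppedTargets": [],
        },
    }
    url = expected_scrape_url("backend:8008", "/alaz/metrics/scrape")
    print(job_health(sample, "backend").get(url))  # prints: up
```

Against a live stack, `job_health(fetch_targets(), "backend")` should list the backend scrape URL; a `down` value points at the backend not serving `/alaz/metrics/scrape` on port 8008.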

diff --git a/selfhosted/install.sh b/selfhosted/install.sh
index 14a3ee9f..b7b38b2b 100755
--- a/selfhosted/install.sh
+++ b/selfhosted/install.sh
@@ -40,9 +40,11 @@ if ! command -v docker >/dev/null 2>&1; then
fi
# Check if Docker Compose is installed
-if ! command -v docker-compose >/dev/null 2>&1 && ! command -v docker >/dev/null 2>&1 || ! docker compose version >/dev/null 2>&1; then
- echo "❌ Docker Compose not found. Please install Docker Compose and try again."
- exit 1
+if ! command -v docker-compose >/dev/null 2>&1; then
+ if ! docker compose version >/dev/null 2>&1; then
+ echo "❌ Docker Compose not found. Please install Docker Compose and try again."
+ exit 1
+ fi
fi
# Check if Docker is running
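
The rewritten Compose check fixes an operator-precedence problem. In Bash, `&&` and `||` have equal precedence and associate left to right, so the removed line evaluated as `(no docker-compose && no docker) || no "docker compose" plugin` and aborted on hosts that had only the standalone `docker-compose` binary. The nested `if` accepts either CLI. The Python sketch below mirrors both versions so the difference can be checked without Docker installed. The probe functions can be swapped out for tests, and `compose_command` returning the command to use is a hypothetical extension, since `install.sh` itself only checks and exits.

```python
import shutil
import subprocess
from typing import Callable, Optional


def standalone_available() -> bool:
    """True if a standalone `docker-compose` binary is on PATH."""
    return shutil.which("docker-compose") is not None


def plugin_available() -> bool:
    """True if `docker compose version` exits with status 0 (Compose v2 plugin)."""
    if shutil.which("docker") is None:
        return False  # no docker binary, so no plugin either
    result = subprocess.run(
        ["docker", "compose", "version"],
        stdout=subprocess.DEVNULL,
        stderr=subprocess.DEVNULL,
        check=False,
    )
    return result.returncode == 0


def compose_command(
    has_standalone: Callable[[], bool] = standalone_available,
    has_plugin: Callable[[], bool] = plugin_available,
) -> Optional[list[str]]:
    """New install.sh logic: accept docker-compose first, then the plugin."""
    if has_standalone():
        return ["docker-compose"]
    if has_plugin():
        return ["docker", "compose"]
    return None  # install.sh prints "Docker Compose not found" and exits 1


def old_check_fails(standalone: bool, docker: bool, plugin: bool) -> bool:
    """Removed one-liner: Bash groups `A && B || C` as `(A && B) || C`."""
    return (not standalone and not docker) or not plugin
```

For example, `old_check_fails(standalone=True, docker=True, plugin=False)` returns `True` (the spurious failure the nested `if` removes), while `compose_command(lambda: True, lambda: False)` returns `["docker-compose"]`.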

@@ -59,12 +61,9 @@ REPO_DIR="$HOME/.ddosify"
if [ -d "$REPO_DIR" ]; then
echo "🔄 Repository already exists at $REPO_DIR - Attempting to update..."
cd "$REPO_DIR"
- git checkout master >/dev/null 2>&1
+ git checkout master
cd "$REPO_DIR/selfhosted"
- git pull >/dev/null 2>&1
-
- # Check for errors during pull
- if [ $? -ne 0 ]; then
+ git pull 2>&1 || {
read -p "⚠️ Error updating repository. Clean and update? [Y/n]: " answer
answer=${answer:-Y}
if [[ $answer =~ ^[Yy]$ ]]; then
@@ -72,7 +71,7 @@ if [ -d "$REPO_DIR" ]; then
git clean -fd >/dev/null 2>&1
git pull >/dev/null 2>&1
fi
- fi
+ }
else
# Clone the repository
echo "📦 Cloning repository to $REPO_DIR directory..."
+
+
+
+
+*CE: Community Edition, EE: Enterprise Edition*
+
+
+
+
+