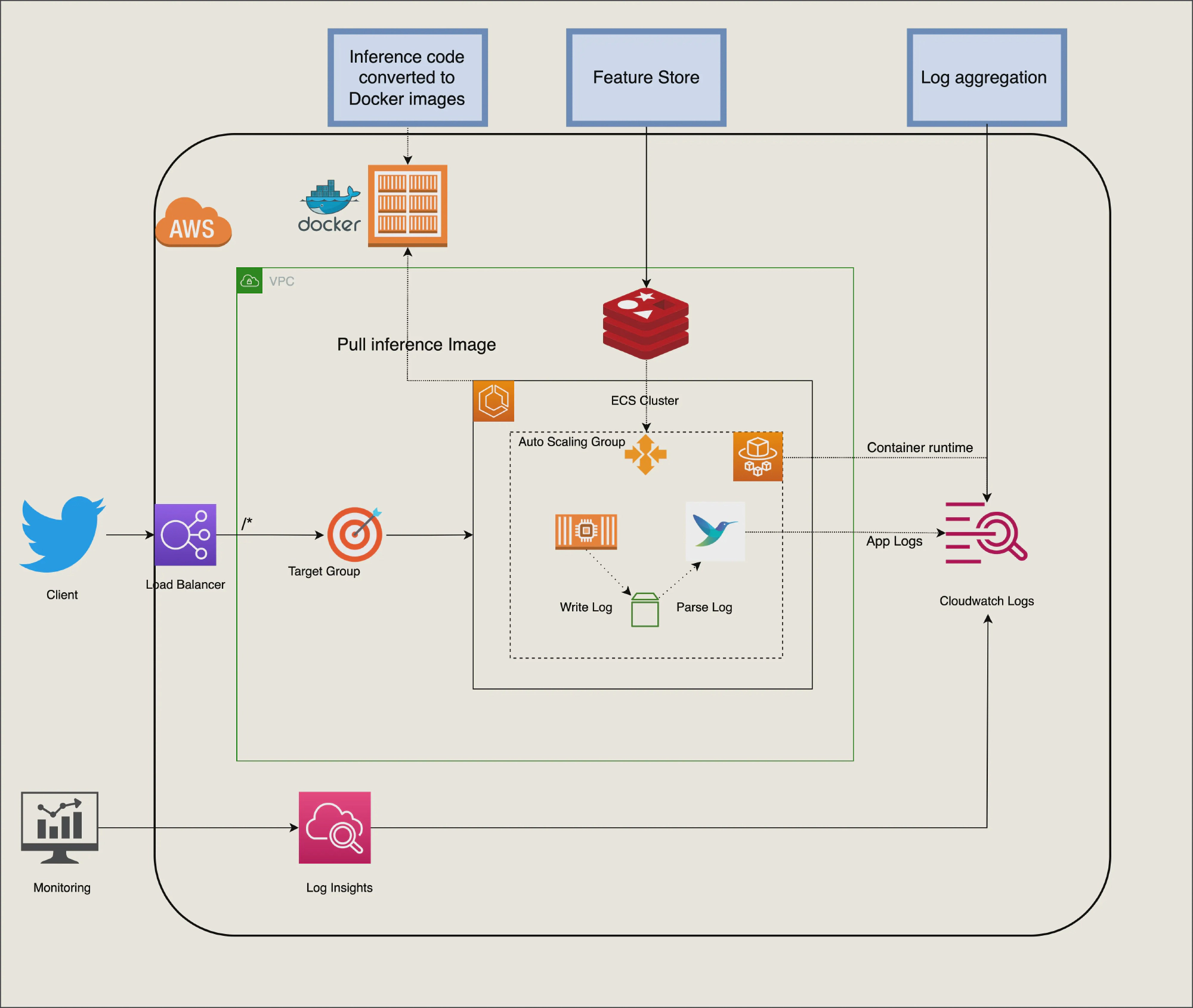

For the hands-on AI project, we will use Twitter sentiment analysis to demonstrate full-stack machine learning inference.

The idea of the project is to fetch metadata from a Twitter URL and run sentiment analysis on the content. After that, we aggregate the sentiment results per user to get a fuller picture of each user's overall sentiment.
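The per-user aggregation described above can be sketched in a few lines of Python. This is a minimal illustration, not the project's actual code: the function names `parse_tweet_url` and `aggregate_by_user` are hypothetical, the URL shape `https://twitter.com/<user>/status/<id>` is an assumption, and the labels are assumed to come from a sentiment model that has already been run.

```python
from collections import Counter, defaultdict
from urllib.parse import urlparse


def parse_tweet_url(url):
    """Return (username, tweet_id) from a URL shaped like
    https://twitter.com/<user>/status/<id> (assumed format)."""
    parts = [p for p in urlparse(url).path.split("/") if p]
    if len(parts) < 3 or parts[1] != "status":
        raise ValueError(f"not a tweet URL: {url}")
    return parts[0], parts[2]


def aggregate_by_user(records):
    """Count sentiment labels per user.

    `records` is an iterable of (tweet_url, label) pairs, where the label
    was produced earlier by the sentiment model.
    """
    totals = defaultdict(Counter)
    for url, label in records:
        user, _tweet_id = parse_tweet_url(url)
        totals[user][label] += 1
    # Convert to plain dicts so the result is easy to serialize as JSON.
    return {user: dict(counts) for user, counts in totals.items()}
```

Counting labels is only one possible aggregate; averaging the model's scores per user would work the same way with a different accumulator.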

First, copy the following file and edit its contents:

cp .env_template .env

vim .env

Download the transformer model to local data:

pip install transformers

python generate_torch_script.py

cp -r twitter-roberta-base-sentiment/* data/

rm -rf twitter-roberta-base-sentiment # Remove the unused model copy for a faster build

Set the redis_host environment variable to redis.
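Setting `redis_host` to `redis` presumably works because, under Docker Compose, a container can reach another service by its service name. As a rough sketch of how an application might read this setting, the snippet below looks up `redis_host` from the environment. The function name `redis_settings`, the `redis_port` variable, and both default values are assumptions made for illustration, not taken from the project.

```python
import os


def redis_settings(env=None):
    """Return (host, port) for the Redis connection.

    `redis_host` is the variable named in this guide; `redis_port` and the
    fallback values are assumptions made for this sketch.
    """
    env = os.environ if env is None else env
    host = env.get("redis_host", "localhost")
    port = int(env.get("redis_port", "6379"))
    return host, port
```

Passing a plain dict instead of `os.environ` makes the lookup easy to check without touching the real environment.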

Once you have the .env set up, run the following command to initialize the docker-compose services:

make build-local # Use sudo if your Docker setup requires sudo access

When done, you should see the following:

Service is on http://localhost:2000/

Access the Swagger docs at http://localhost:2000/docs

Variables:

| Variable | Description |
|---|---|
| PROJECT_NAME | Any arbitrary project name. Use 'echo' if you don't have any preference. |
| AWS_DEFAULT_REGION | Your preferred AWS region |
| AWS_ACCOUNT_ID | Your account ID as you see here |
| KEY_PAIR | Name of the key pair you'd like to use to set up the infrastructure. Find it here |
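Before building the infrastructure, it can help to confirm that all four variables listed above are filled in. The following is a small sketch under the assumption that `.env` uses plain `KEY=value` lines; the helper names `parse_env` and `missing_variables` are hypothetical and not part of the project.

```python
REQUIRED = ("PROJECT_NAME", "AWS_DEFAULT_REGION", "AWS_ACCOUNT_ID", "KEY_PAIR")


def parse_env(text):
    """Parse simple KEY=value lines, skipping blanks and # comments."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop optional surrounding quotes around the value.
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_variables(text, required=REQUIRED):
    """Return the required names that are absent or empty in the .env text."""
    values = parse_env(text)
    return [name for name in required if not values.get(name)]
```

To use it, read the file contents (for example with `open(".env").read()`) and pass them to `missing_variables`; an empty list means every required variable has a value.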

Once you have the .env set up, run the following command to initialize the VPC, ECR/ECS, and App Service:

make build-infra

When done, you should see the following:

Bastion endpoint:

54.65.206.60

Public endpoint:

http://clust-LoadB-4RPWCBUJAH83-1023823123.ap-southeast-1.elb.amazonaws.com

Access the above load balancer and make sure that you get output like this:

$ curl -i "http://clust-LoadB-4RPWCBUJAH83-1023823123.ap-southeast-1.elb.amazonaws.com"

HTTP/1.1 200 OK

Date: Mon, 18 Jul 2022 03:29:51 GMT

Content-Type: application/json

Content-Length: 49

Connection: keep-alive

server: uvicorn

{"statusCode":200,"body":"{\"message\": \"OK\"}"}

Congrats! You have successfully created the AI service!
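Note that the `body` field in the response shown above is itself a JSON-encoded string, so a client has to decode it twice. Here is a minimal sketch of that decoding; the function name `decode_health_response` is hypothetical, and the sample payload is copied from the curl output.

```python
import json


def decode_health_response(raw):
    """Decode the outer JSON, then the JSON string nested in `body`."""
    outer = json.loads(raw)
    inner = json.loads(outer["body"])
    return outer["statusCode"], inner


# Raw response text as returned by the load balancer in the example above.
raw = r'{"statusCode":200,"body":"{\"message\": \"OK\"}"}'
status, body = decode_health_response(raw)
print(status, body["message"])  # prints: 200 OK
```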

For more details about the service, see my blog.