Releases: jhu-lcsr/costar_plan

CoSTAR Plan Initial Public Release

CoSTAR Hyper

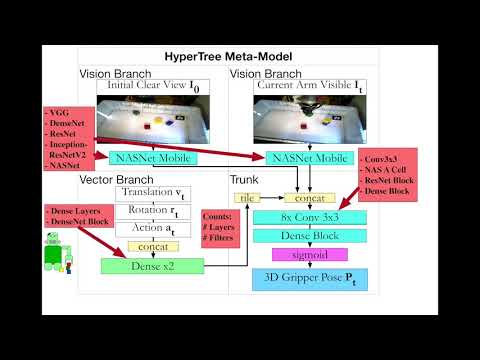

Code for the paper Training Frankenstein's Creature To Stack: HyperTree Architecture Search.

Dataset collection code and integration with the CoSTAR Block Stacking Dataset.

HyperTree Architecture Search Code and Plotting Utilities

HyperTree Pretrained weights are available for download.

The attached binary files are helpful for viewing the Google grasping dataset with V-REP, as detailed in CoSTAR Hyper.

CoSTAR Task Planning (CTP)

There have been some updates so that the CoSTAR Block Stacking Dataset can be loaded.

CoSTAR data support

Adds support for training the new conditional image models on CoSTAR data, including new tools for saving data. Some refactoring has been done, and we have deleted more dead code.

The simdata.tar.gz file contains the entire dataset used to train our conditional image models on the simulated block stacking task with obstacle avoidance. Now anyone should be able to reproduce results from our papers.

Script changes, GAN fixes, and more

Adds a number of fixes, including more testing options and an improved planning script.

Updates to visual task planning models

Changes:

- batchnorm now replaced with instance normalization

- added extra features and layers

- added permanent dropout - not currently used but can help GANs
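As a rough illustration of the two techniques named in the list above, here is a minimal pure-Python sketch. It assumes "permanent dropout" means dropout that stays active at inference time as well as during training; the function names are hypothetical and are not taken from the CTP code base.

```python
import math
import random


def instance_norm(channel, eps=1e-5):
    """Normalize one channel of one sample using its own mean and variance.

    Batch normalization would pool these statistics over the whole batch;
    instance normalization computes them per sample and per channel.
    """
    mean = sum(channel) / len(channel)
    var = sum((v - mean) ** 2 for v in channel) / len(channel)
    return [(v - mean) / math.sqrt(var + eps) for v in channel]


def permanent_dropout(values, rate, rng=random):
    """Inverted dropout with no train/test switch (assumed meaning of
    'permanent'): units are dropped on every call, including inference."""
    keep = 1.0 - rate
    return [v / keep if rng.random() < keep else 0.0 for v in values]


# Two samples with very different scales normalize to nearly the same values.
print(instance_norm([1.0, 2.0, 3.0, 4.0]))
print(instance_norm([10.0, 20.0, 30.0, 40.0]))
print(permanent_dropout([1.0, 2.0, 3.0, 4.0], rate=0.5, rng=random.Random(0)))
```

Because each sample is normalized with its own statistics, the result does not depend on what else is in the batch, which is the practical difference from batch normalization.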

MHP support; beautiful pictures for the simulation task

This version works well for our simulated tasks, but not as well for surgical robots, so we're adding multiple hypothesis support.

GAN implementation for CTP goal prediction

We added support for the conditional GANs described in the paper Image-to-Image Translation with Conditional Adversarial Networks. We can use this for learning a representation space for our predictive models or for the predictions themselves.
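For readers unfamiliar with that paper, the generator there is trained on an adversarial term plus a weighted L1 reconstruction term. The sketch below computes that combined objective for a single generated image in plain Python. The function name is hypothetical, the default weight of 100 is the value reported in the paper, and whether CTP uses the same weighting is not stated here.

```python
import math


def generator_objective(d_fake, predicted, target, l1_weight=100.0):
    """Adversarial term plus weighted L1 term for one generated image.

    d_fake    -- discriminator's probability that the generated image is real
    predicted -- flattened generated pixels
    target    -- flattened ground-truth pixels
    """
    # Non-saturating form: the generator maximizes log D(x, G(x, z)).
    adversarial = -math.log(d_fake)
    # Mean absolute error keeps the output close to the ground truth.
    l1 = sum(abs(p - t) for p, t in zip(predicted, target)) / len(target)
    return adversarial + l1_weight * l1


print(generator_objective(0.5, [0.2, 0.4], [0.0, 0.5]))
```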

Task Parser

This is the first release with a functioning version of the CTP task parser. Given a set of labeled data, this builds probabilistic skill models (including dynamic movement primitives) and uses them to construct a whole graph of various actions.

Important notes:

- ctp_tom includes code and scenarios for the TOM robot at ICS

- ctp_tom contains the parse.py tool, which lets you try out the parser on multiple ROS bags
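To give a feel for the graph-building step of the task parser, the following sketch estimates transition probabilities between actions from labeled sequences. It is only an illustration under simplifying assumptions: the real parser also fits probabilistic skill models such as dynamic movement primitives, which are not shown, and the action labels and function name here are hypothetical.

```python
from collections import Counter, defaultdict


def build_action_graph(sequences):
    """Estimate P(next action | current action) from labeled sequences."""
    counts = defaultdict(Counter)
    for sequence in sequences:
        # Count every adjacent (current, following) pair of labels.
        for current, following in zip(sequence, sequence[1:]):
            counts[current][following] += 1
    graph = {}
    for current, followers in counts.items():
        total = sum(followers.values())
        graph[current] = {a: n / total for a, n in followers.items()}
    return graph


# Hypothetical labels, not taken from any CTP dataset.
demos = [
    ["align", "grasp", "lift", "place"],
    ["align", "grasp", "lift", "place"],
    ["align", "grasp", "release"],
]
graph = build_action_graph(demos)
print(graph["grasp"])
```

Actions that only ever end a sequence have no outgoing edges and so do not appear as keys in the graph.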

Predictor Net Updates

This release contains a preliminary version of our proposed predictor network architecture for predictive modeling of task structure. You can train such a model end to end via a command such as:

rosrun costar_models ctp_model_tool \
--data_file rpy.npz \
--model predictor \
-e 1000 \
--features multi \
--batch_size 24 \
--optimizer adam \
--lr 0.001 \
--upsampling conv_transpose \
--use_noise true \
--noise_dim 32 \
--steps_per_epoch 300 \
--dropout_rate 0.2 \
--skip_connections 1

This constructs an end-to-end trainable version of the network that can predict 4 hypotheses.
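A common way to train a network that outputs several hypotheses is a winner-takes-all loss, where only the hypothesis closest to the ground truth is penalized. These notes do not say which loss the predictor uses, so the sketch below is an assumption for illustration, and the function name is hypothetical.

```python
def winner_takes_all_loss(hypotheses, target):
    """Return (loss, index) of the hypothesis closest to the target.

    Each hypothesis and the target are equal-length lists of floats.
    """
    def mse(prediction):
        return sum((p - t) ** 2 for p, t in zip(prediction, target)) / len(target)

    losses = [mse(h) for h in hypotheses]
    # Only the best hypothesis contributes, so the others stay free to
    # cover different possible outcomes.
    best = min(range(len(losses)), key=losses.__getitem__)
    return losses[best], best


# Four hypotheses, matching the count mentioned above; values are made up.
four_hypotheses = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0], [3.0, 3.0]]
print(winner_takes_all_loss(four_hypotheses, [1.0, 1.0]))
```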

Predictor Nets

Predictor net performance has improved dramatically: the nets now achieve fairly low prediction error and give good results when predicting future scenes and grasp poses, thanks to richer dense representations.

Predictor neural nets for planning

This is the "first pass" version of the predictor neural nets, with nice, reliable data collection for the stacking task.

Data collection example:

rosrun costar_bullet start --robot ur5 --task stack1 --agent task -i 50 --features multi --verbose --seed 0 --success_only --cpu --save --data_file small.npz

Learning example:

rosrun costar_models ctp_model_tool --data_file small.npz --model predictor -e 1000 --features multi --batch_size 32 --load_model --optimizer adam
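The two commands above share the same data file, so it can be convenient to drive them from one script. The sketch below is one possible wrapper, not part of the release: it copies the flags verbatim from the examples, while the function names and the `rosrun` availability check are assumptions added for illustration.

```python
import shlex
import shutil
import subprocess

DATA_FILE = "small.npz"

# Command templates copied from the data collection and learning examples.
COLLECT = (
    "rosrun costar_bullet start --robot ur5 --task stack1 --agent task -i 50 "
    "--features multi --verbose --seed 0 --success_only --cpu --save "
    "--data_file {data_file}"
)
TRAIN = (
    "rosrun costar_models ctp_model_tool --data_file {data_file} "
    "--model predictor -e 1000 --features multi --batch_size 32 "
    "--load_model --optimizer adam"
)


def build_pipeline(data_file=DATA_FILE):
    """Return the collection and training commands as argument lists."""
    return [shlex.split(t.format(data_file=data_file)) for t in (COLLECT, TRAIN)]


def run_pipeline(data_file=DATA_FILE):
    """Run both steps in order, stopping at the first failure."""
    if shutil.which("rosrun") is None:
        raise RuntimeError("rosrun not found; source your ROS workspace first")
    for argv in build_pipeline(data_file):
        subprocess.run(argv, check=True)
```

Calling `run_pipeline()` collects data and then trains on the same file; `build_pipeline()` alone can be used to inspect the commands without executing anything.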