The AI runner is a containerized Python application responsible for processes AI inference requests on the Livepeer AI subnet. It loads models into GPU memory and exposes a REST API other programs like the AI worker can use to request AI inference requests.

A high level sketch of how the runner is used:

The AI runner, found in the app directory, consists of:

- Routes: FastAPI routes in app/routes that handle requests and delegate them to the appropriate pipeline.

- Pipelines: Modules in app/pipelines that manage model loading, request processing, and response generation for specific AI tasks.

It also includes utility scripts:

- bench.py: Benchmarks the runner's performance.

- gen_openapi.py: Generates the OpenAPI specification for the runner's API endpoints.

- dl_checkpoints.sh: Downloads model checkpoints from Hugging Face.

- modal_app.py: Deploys the runner on Modal, a serverless GPU platform.

Ensure Docker and the nvidia-container-toolkit are installed, with Docker correctly configured. Then, either pull the pre-built image from DockerHub or build the image locally in this directory.

docker pull livepeer/ai-runner:latestdocker build -t livepeer/ai-runner:latest .The AI Runner container's runner app uses HuggingFace model IDs to reference models. It expects model checkpoints in a /models directory, which can be mounted to a local models directory. To download model checkpoints to the local models directory, use the dl-checkpoints.sh script:

-

Install Hugging Face CLI: Install the Hugging Face CLI by running the following command:

pip install huggingface_hub[cli,hf_transfer]

-

Set up Hugging Face Access Token: Generate a Hugging Face access token as per the official guide and assign it to the

HG_TOKENenvironment variable. This token enables downloading of private models from the Hugging Face model hub. Alternatively, use the Hugging Face CLI's login command to install the token.[!IMPORTANT] The

ld_checkpoints.shscript includes the SVD1.1 model. To use this model on the AI Subnet, visit its page, log in, and accept the terms. -

Download AI Models: Use the ld_checkpoints.sh script to download models from

aiModels.jsonto~/.lpData/models. Run the following command in the.lpDatadirectory:curl -s https://raw.githubusercontent.com/livepeer/ai-worker/main/runner/dl_checkpoints.sh | bash -s -- --beta[!NOTE] The

--betaflag downloads only models supported by the Livepeer.inc Gateway node on the AI Subnet. Remove this flag to download all models.

Tip

If you want to download individual models you can checkout the dl-checkpoints.sh script to see how to download model checkpoints to the local models directory.

Run container:

docker run --name text-to-image -e PIPELINE=text-to-image -e MODEL_ID=<MODEL_ID> --gpus <GPU_IDS> -p 8000:8000 -v ./models:/models livepeer/ai-runner:latest

Query API:

curl -X POST -H "Content-Type: application/json" localhost:8000/text-to-image -d '{"prompt":"a mountain lion"}'Retrieve the image from the response:

curl -O 0.0.0.0:8937/stream/<STREAM_URL>.pngRun container:

docker run --name image-to-image -e PIPELINE=image-to-image -e MODEL_ID=<MODEL_ID> --gpus <GPU_IDS> -p 8000:8000 -v ./models:/models livepeer/ai-runner:latestQuery API:

curl -X POST localhost:8000/image-to-image -F prompt="a mountain lion" -F image=@<IMAGE_FILE>Retrieve the image from the response:

curl -O 0.0.0.0:8937/stream/<STREAM_URL>.pngRun container

docker run --name image-to-video -e PIPELINE=image-to-video -e MODEL_ID=<MODEL_ID> --gpus <GPU_IDS> -p 8000:8000 -v ./models:/models livepeer/ai-runner:latest

Query API:

curl -X POST localhost:8000/image-to-video -F image=@<IMAGE_FILE>Retrieve the image from the response:

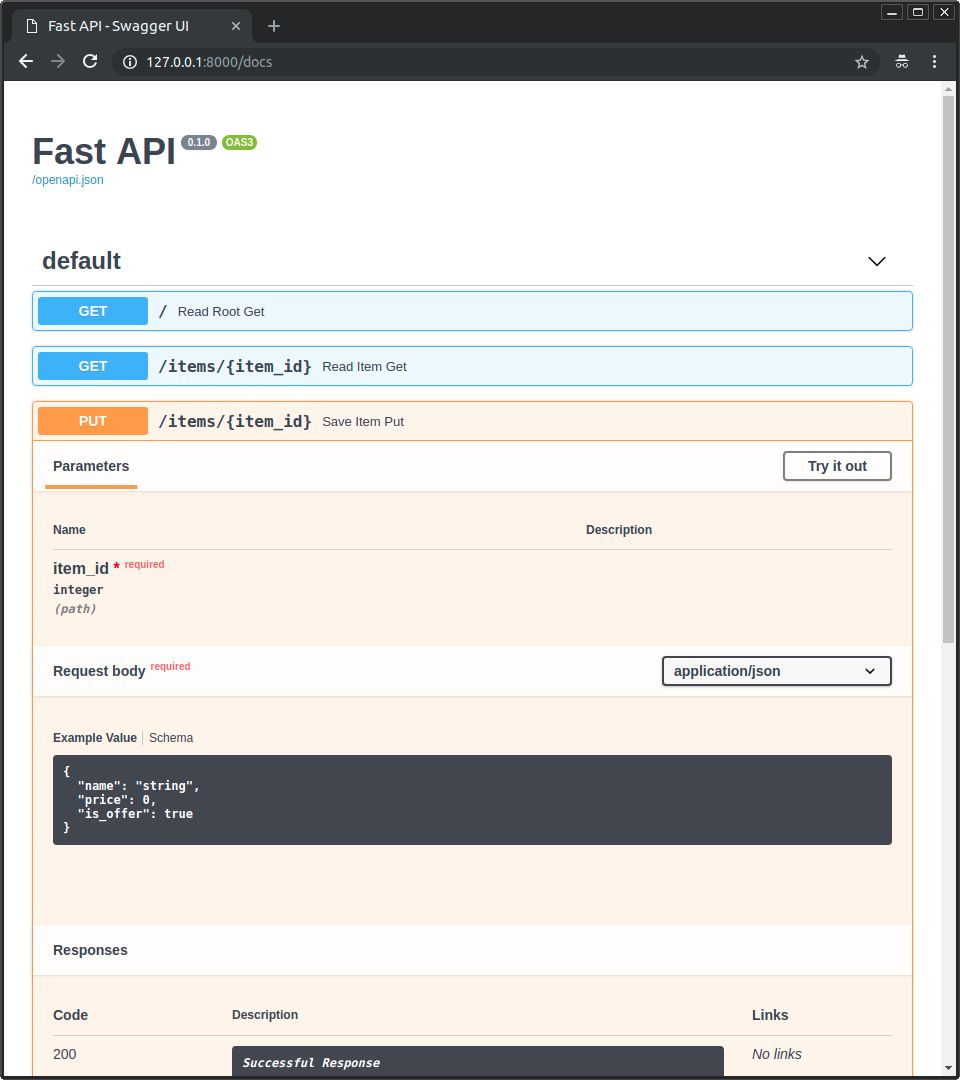

curl -O 0.0.0.0:8937/stream/<STREAM_URL>.mp4The runner comes with a built-in Swagger UI for testing API endpoints. Visit http://localhost:8000/docs to access it.

The runner contains several optimizations to speed up inference or reduce memory usage:

SFAST: Enables the stable-fast optimization, enhancing inference performance.

docker run --gpus <GPU_IDs> -v ./models:/models livepeer/ai-runner:latest python bench.py --pipeline <PIPELINE> --model_id <MODEL_ID> --runs <RUNS> --batch_size <BATCH_SIZE>Example command:

# Benchmark the text-to-image pipeline with the stabilityai/sd-turbo model over 3 runs using GPU 0

docker run --gpus 0 -v ./models:/models livepeer/ai-runner:latest python bench.py --pipeline text-to-image --model_id stabilityai/sd-turbo --runs 3Example output:

----AGGREGATE METRICS----

pipeline load time: 1.473s

pipeline load max GPU memory allocated: 2.421GiB

pipeline load max GPU memory reserved: 2.488GiB

avg inference time: 0.482s

avg inference time per output: 0.482s

avg inference max GPU memory allocated: 3.024s

avg inference max GPU memory reserved: 3.623sFor benchmarking script usage information:

docker run livepeer/ai-runner:latest python bench.py -hTo deploy the runner on Modal, a serverless GPU platform, follow these steps:

-

Prepare for Deployment: Use the

modal_app.pyfile for deployment. Before you start, perform a dry-run in a dev environment to ensure everything is set up correctly. -

Create Secrets: In the Modal dashboard, create an

api-auth-tokensecret and assign theAUTH_TOKENvariable to it. This secret is necessary for authentication. If you're using gated HuggingFace models, create ahuggingfacesecret and set theHF_TOKENvariable to your HuggingFace access token. -

Create a Volume: Run

modal volume create modelsto create a network volume. This volume will store the weights of your models. -

Download Models: For each model ID referenced in

modal_app.py, runmodal run modal_app.py::download_model --model-id <MODEL_ID>to download the model. -

Deploy the Apps: Finally, deploy the apps with

modal deploy modal_app.py. After deployment, the web endpoints of your apps will be visible in your Modal dashboard.

Regenerate the OpenAPI specification for the AI runner's API endpoints with:

python gen_openapi.pyThis creates openapi.json. For a YAML version, use:

python gen_openapi.py --type yamlFor more information on developing and debugging the AI runner, see the development documentation.

Based off of this repo.