This repository contains the official implementation of GenRC, an automated, training-free pipeline that completes a room-scale 3D mesh from sparse RGBD observations. The source code will be released soon.

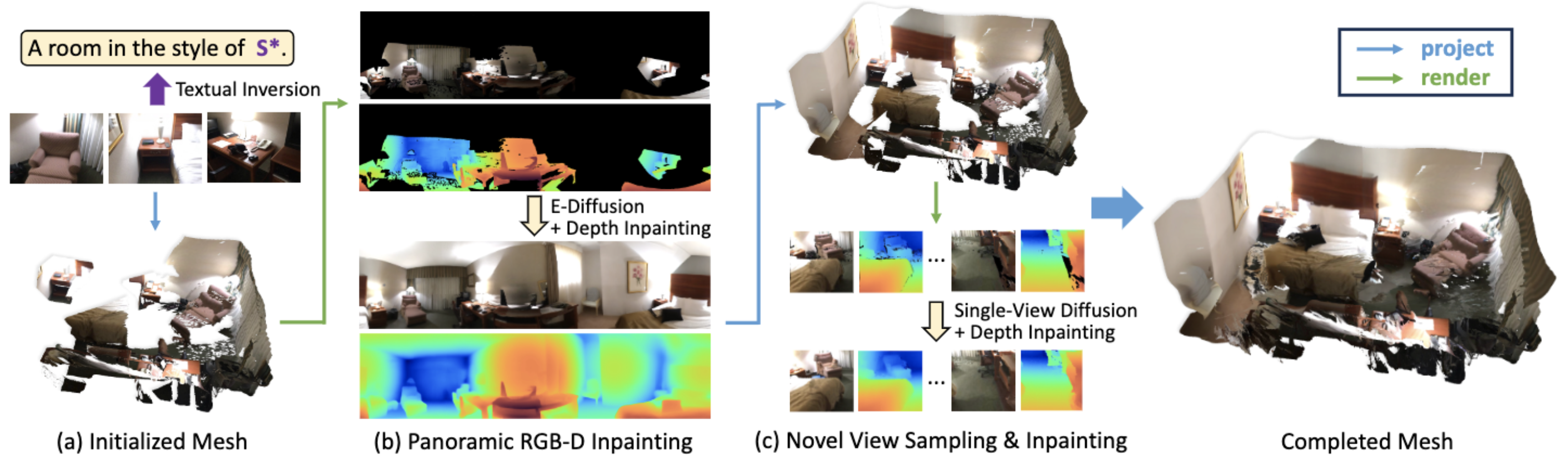

Pipeline of GenRC: (a) First, we use textual inversion to extract text embeddings as a token that represents the style of the provided RGBD images. Next, we project these images to a 3D mesh. (b) We then render a panorama from a plausible room center and use equirectangular projection to render various viewpoints of the scene from the panoramic image. We propose E-Diffusion, which satisfies equirectangular geometry, to concurrently denoise these images and determine their depth via monocular depth estimation, resulting in a cross-view consistent panoramic RGBD image. (c) Lastly, we sample novel views from the mesh to fill in holes, resulting in a complete mesh.
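To make the equirectangular geometry in step (b) concrete, here is a minimal sketch of the mapping between panorama pixels and view directions. It is not taken from the GenRC code: the function names and the axis convention (y up, +z at the panorama center, longitude increasing to the right) are assumptions chosen for illustration, and a real pipeline would likely vectorize this with NumPy or PyTorch.

```python
import math


def pixel_to_direction(u, v, width, height):
    """Map an equirectangular pixel (u, v) to a unit view direction.

    Assumed convention (hypothetical, not from the GenRC code):
    longitude runs from -pi at the left edge to +pi at the right edge,
    latitude runs from +pi/2 at the top to -pi/2 at the bottom,
    y points up, and +z is the center of the panorama.
    """
    lon = (u / width - 0.5) * 2.0 * math.pi
    lat = (0.5 - v / height) * math.pi
    x = math.cos(lat) * math.sin(lon)
    y = math.sin(lat)
    z = math.cos(lat) * math.cos(lon)
    return (x, y, z)


def direction_to_pixel(direction, width, height):
    """Inverse mapping: project a 3D direction back onto the panorama."""
    x, y, z = direction
    norm = math.sqrt(x * x + y * y + z * z)
    lon = math.atan2(x, z)
    # Clamp to guard against rounding slightly outside [-1, 1].
    lat = math.asin(max(-1.0, min(1.0, y / norm)))
    u = (lon / (2.0 * math.pi) + 0.5) * width
    v = (0.5 - lat / math.pi) * height
    return (u, v)
```

Rendering a perspective view from the panorama then amounts to computing a ray direction for each perspective pixel and looking up the panorama color at `direction_to_pixel(...)`.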

Create a conda environment:

conda create -n genrc python=3.9 -y

conda activate genrc

pip install -r requirements.txt

Then install PyTorch3D by following the official instructions. For example, to install PyTorch3D on Linux (tested with PyTorch 1.13.1, CUDA 11.7, PyTorch3D 0.7.2):

conda install -c fvcore -c iopath -c conda-forge fvcore iopath -y

pip install "git+https://github.com/facebookresearch/pytorch3d.git@stable"

Download the pretrained model weights for the fixed depth inpainting model that we use:

- Refer to the official IronDepth implementation to download the files `normal_scannet.pt` and `irondepth_scannet.pt`.
- Place the files under `genrc/checkpoints`.
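Before running the pipeline, it can help to confirm that both IronDepth files are in place. The following is a small sketch, not part of the repository: the helper name `missing_checkpoints` is hypothetical, and the default path assumes the script is run from the root of the `genrc` checkout.

```python
from pathlib import Path

# File names as listed above.
REQUIRED_CHECKPOINTS = ("normal_scannet.pt", "irondepth_scannet.pt")


def missing_checkpoints(checkpoint_dir="checkpoints"):
    """Return the required checkpoint files that are not in checkpoint_dir.

    The default directory is an assumption: it presumes the current
    working directory is the root of the genrc repository.
    """
    root = Path(checkpoint_dir)
    return [name for name in REQUIRED_CHECKPOINTS if not (root / name).is_file()]


if __name__ == "__main__":
    missing = missing_checkpoints()
    if missing:
        print("Missing checkpoint files:", ", ".join(missing))
    else:
        print("All IronDepth checkpoint files found.")
```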

(Optional) Download the pretrained model weights for the text-to-image model:

git clone https://huggingface.co/stabilityai/stable-diffusion-2-inpainting

git clone https://huggingface.co/stabilityai/stable-diffusion-2-1

ln -s <path/to/stable-diffusion-2-inpainting> checkpoints

ln -s <path/to/stable-diffusion-2-1> checkpoints

(Optional) Download the repository and install packages for textual inversion:

git clone https://github.com/huggingface/diffusers

cd diffusers

pip install .

cd examples/textual_inversion

pip install -r requirements.txt

Download the preprocessed ScanNetV2 dataset. Extract via:

mkdir input && unzip scans_keyframe.zip -d input && mv input/scans_keyframe input/ScanNetV2

(Optional) Prepare test frames for textual inversion and prepare a script file for running it:

python prepare_textual_inversion_data_RGBD2.py

python prepare_textual_inversion_script_RGBD2.py

# run textual inversion

source textual_inversion.sh

To run and evaluate on the ScanNet dataset:

python generate_scene_scannet_rgbd2_test_all.py --exp_name ScanNetV2_exp

To run the baseline method, T2R+RGBD (please refer to our paper for details):

python generate_scene_scannet_rgbd2_test_all.py --exp_name ScanNetV2_exp --method "T2R+RGBD"

(Optional) Generate ground-truth mesh and calculate one-directional Chamfer distance:

python calculate_chamfer_completeness.py --exp_name ScanNetV2_exp
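For readers unfamiliar with the metric, here is a brute-force sketch of a one-directional Chamfer distance between two point sets. It is not the implementation in `calculate_chamfer_completeness.py`: the choice of unsquared Euclidean distance and of averaging over the source points are assumptions, and the script name only suggests that the direction of interest is from ground truth to the generated mesh (completeness).

```python
import math


def one_directional_chamfer(source, target):
    """Mean distance from each source point to its nearest target point.

    The measure is asymmetric: swapping source and target generally
    gives a different value. This O(N*M) loop is for illustration only;
    large point clouds call for a KD-tree or a GPU implementation.
    """
    if not source or not target:
        raise ValueError("both point sets must be non-empty")
    total = 0.0
    for p in source:
        # Nearest-neighbor distance from p to the target set.
        total += min(math.dist(p, q) for q in target)
    return total / len(source)


if __name__ == "__main__":
    ground_truth = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
    prediction = [(0.0, 0.0, 0.0)]
    # The ground-truth point at x=1 is not covered by the prediction.
    print(one_directional_chamfer(ground_truth, prediction))
```

Under the assumed completeness direction, a missing region in the prediction raises the distance, while extra predicted geometry does not.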

Merge all calculated metrics into one csv file:

python merge_test_metrics.py --exp_name ScanNetV2_exp

@inproceedings{minfenli2024GenRC,

title={GenRC: 3D Indoor Scene Generation from Sparse Image Collections},

author={Ming-Feng Li and Yueh-Feng Ku and Hong-Xuan Yen and Chi Liu and Yu-Lun Liu and Albert Y. C. Chen and Cheng-Hao Kuo and Min Sun},

booktitle={ECCV},

year={2024}

}

Thanks to the following projects for providing their open-source codebases and models.