This is a web UI wrapper for alpaca.cpp

Thanks to:

- github.com/AidanGuarniere/chatGPT-UI-template

- github.com/antimatter15/alpaca.cpp and github.com/ggerganov/llama.cpp

- github.com/nomic-ai/gpt4all

- Suggestion for parameters

- Save chat history to disk

- Implement context memory

- Conversation history

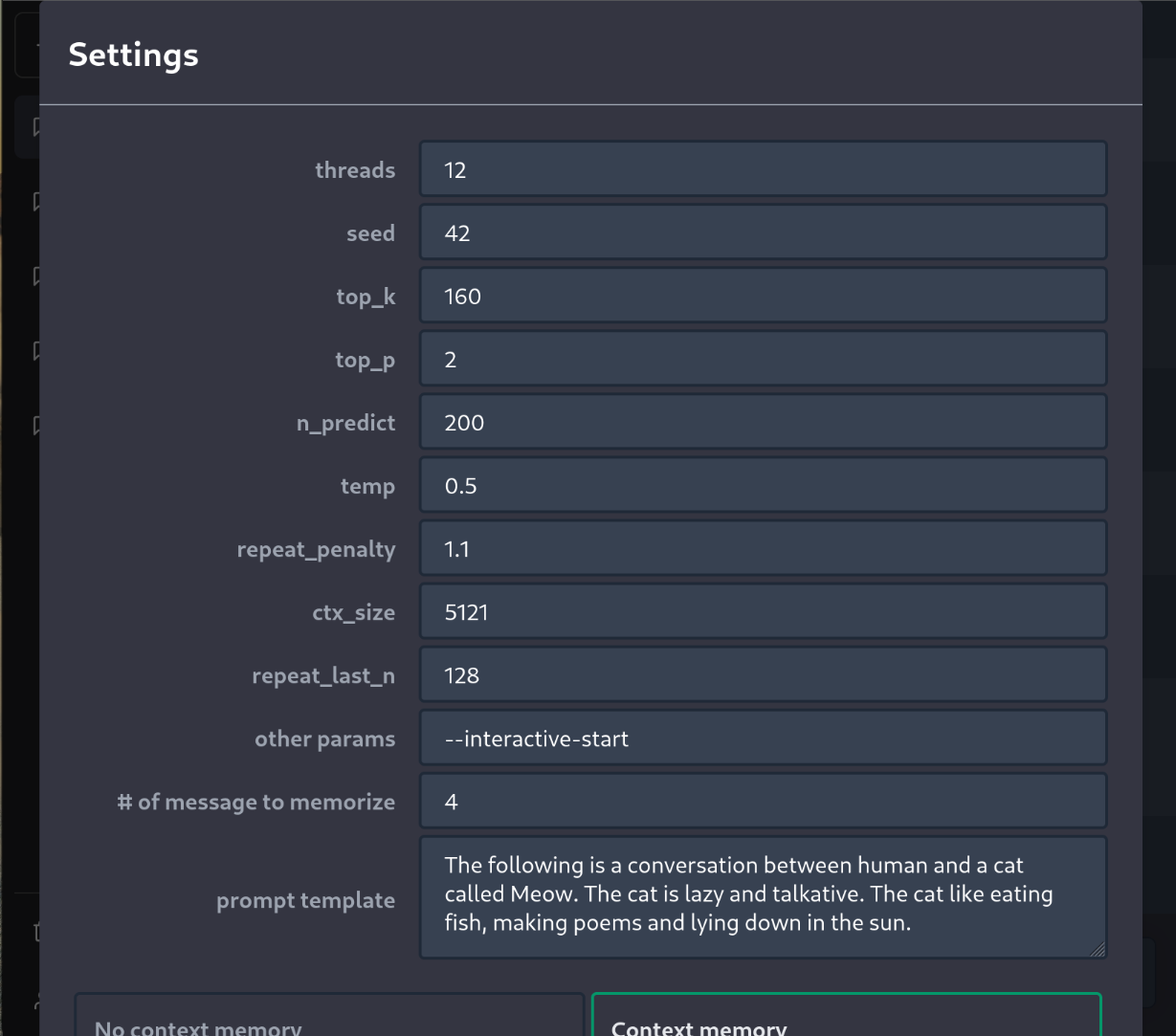

- Interface for tweaking parameters

- Better guide / documentation

- Ability to stop / regenerate response

- Detect code response / use monospace font

- Responsive UI

- Configuration presets

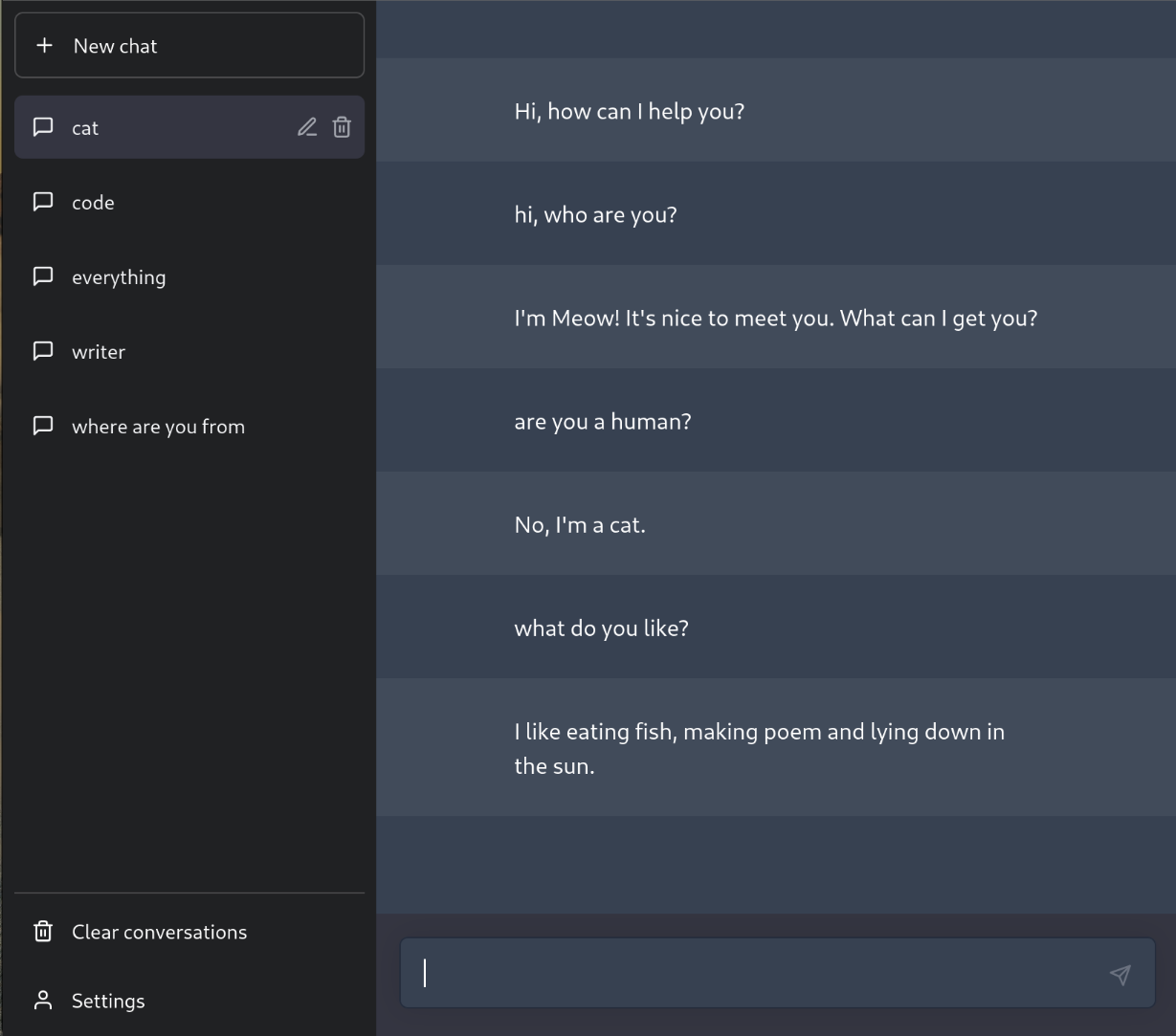

Screenshot:

Pre-requirements:

- You have nodejs v18+ installed on your machine (or if you have Docker, you don't need to install nodejs)

- You are using Linux (Windows should also work, but I have not tested it yet)

For Windows users, there is a detailed guide here: doc/windows.md
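If you are not sure whether your Node.js is recent enough, a small check like the one below may help. This is only a sketch: `meetsNodeRequirement` is a hypothetical helper and is not part of this repository.

```javascript
// Hypothetical helper (not part of this repo): checks whether a Node.js
// version string satisfies the v18+ requirement listed above.
function meetsNodeRequirement(version, minMajor = 18) {
  // Accepts "18.16.0" as well as "v18.16.0"; only the major number is compared.
  const major = Number.parseInt(String(version).replace(/^v/, "").split(".")[0], 10);
  return Number.isInteger(major) && major >= minMajor;
}

// process.versions.node is the version of the Node.js running this script.
const current = process.versions.node;
console.log(
  meetsNodeRequirement(current)
    ? `Node.js ${current} is recent enough`
    : `Node.js ${current} is too old, v18+ is required`
);
```

Save it to any file and run it with node. If you use Docker instead, this check is not needed.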

🔶 Step 1: Clone this repository to your local machine

🔶 Step 2: Download the model and binary file to run the model. You have some options:

- 👉 (Recommended) Alpaca.cpp and Alpaca-native-4bit-ggml model => This combination gives me very convincing responses most of the time

  - Download the chat binary file and place it under the bin folder: https://github.com/antimatter15/alpaca.cpp/releases

  - Download ggml-alpaca-7b-q4.bin and place it under the bin folder: https://huggingface.co/Sosaka/Alpaca-native-4bit-ggml/blob/main/ggml-alpaca-7b-q4.bin

- 👉 Alternatively, you can use gpt4all: Download gpt4all-lora-quantized.bin and gpt4all-lora-quantized-*-x86 from github.com/nomic-ai/gpt4all, and put them into the bin folder

🔶 Step 3: Edit bin/config.js so that the executable name and the model file name are correct

(If you are using chat and ggml-alpaca-7b-q4.bin, you don't need to modify anything)
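Before moving on to Step 4, it can be useful to confirm that the files from Step 2 really ended up in the bin folder. The sketch below assumes the recommended option (chat and ggml-alpaca-7b-q4.bin) and that it is run from the repository root. `findMissingFiles` is a hypothetical helper, and the script does not read bin/config.js.

```javascript
const fs = require("node:fs");
const path = require("node:path");

// Hypothetical helper (not part of this repo): returns the names from
// expectedFiles that do not exist inside binDir.
function findMissingFiles(binDir, expectedFiles) {
  return expectedFiles.filter((name) => !fs.existsSync(path.join(binDir, name)));
}

// File names taken from the recommended option in Step 2.
// Assumption: if you use gpt4all or a different executable name, change this list.
const missing = findMissingFiles("bin", ["chat", "ggml-alpaca-7b-q4.bin"]);
console.log(
  missing.length === 0
    ? "All expected files were found in bin/"
    : `Missing from bin/: ${missing.join(", ")}`
);
```

If something is reported as missing, compare the file names in the bin folder with the ones set in bin/config.js.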

🔶 Step 4: Run these commands

npm i

npm start

Alternatively, you can just use docker compose up if you have Docker installed.

Then, open http://localhost:13000/ in your browser
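If the page does not load, you can check from a second terminal whether anything is answering on that address. The sketch below relies on the global fetch that ships with nodejs v18+. `isReachable` is a hypothetical helper, and the only thing it tests is whether the URL returns a successful HTTP status.

```javascript
// Hypothetical helper (not part of this repo): resolves to true when the URL
// answers with a 2xx status, and to false on any error or non-2xx status.
// fetchImpl defaults to the global fetch available in Node.js v18+.
async function isReachable(url, fetchImpl = globalThis.fetch) {
  try {
    const res = await fetchImpl(url);
    return res.ok;
  } catch {
    // Connection refused, DNS failure, missing fetch, etc.
    return false;
  }
}

// URL taken from the step above.
isReachable("http://localhost:13000/").then((up) => {
  console.log(up ? "Web UI is up" : "Web UI is not reachable yet");
});
```

A "not reachable" result right after npm start may only mean the server is still starting, so it is worth retrying after a few seconds.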

- Test on Windows

- Proxy ws via nextjs

- Add Dockerfile / docker-compose

- UI: add avatar