
Commit


Wav2Lip: Accurately Lip-syncing Videos (#2417)

CVS-152780


1 parent 8bc5fa4, commit f74ddef

Showing 8 changed files with 1,168 additions and 2 deletions.


| Original file line number | Diff line number | Diff line change |

|---|---|---|

|

|

@@ -427,6 +427,7 @@ LibriSpeech | |

| librispeech | ||

| Lim | ||

| LinearCameraEmbedder | ||

| Lippipeline | ||

| Liu | ||

| LLama | ||

| LLaMa | ||

|

|

||


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,27 @@ | ||

| # Wav2Lip: Accurately Lip-syncing Videos and OpenVINO | ||

|

|

||

| Lip sync technologies are widely used for digital human use cases, which enhance the user experience in dialog scenarios. | ||

|

|

||

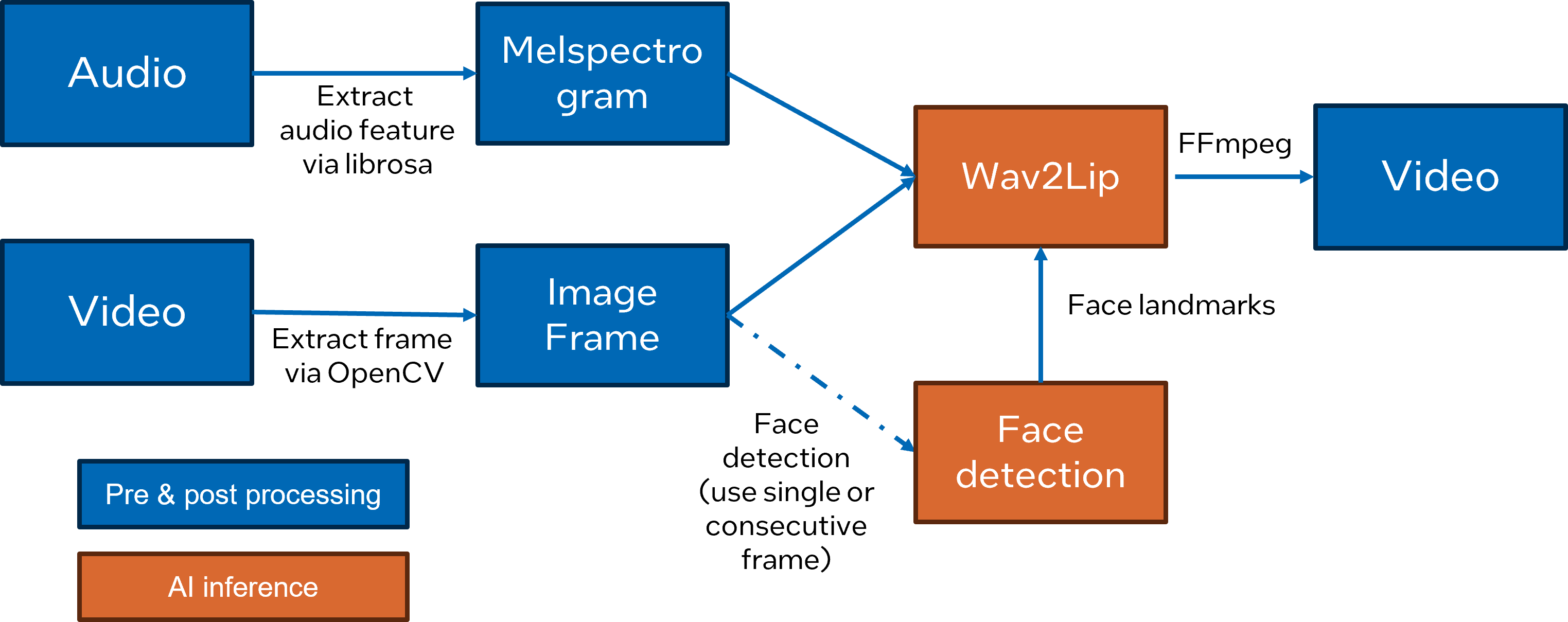

| [Wav2Lip](https://github.com/Rudrabha/Wav2Lip) is a novel approach to generate accurate 2D lip-synced videos in the wild with only one video and an audio clip. Wav2Lip leverages an accurate lip-sync “expert” model and consecutive face frames for accurate, natural lip motion generation. | ||

|

|

||

|  | ||

|

|

||

| In this notebook, we show how to enable and optimize the Wav2Lip pipeline with OpenVINO. This is an adaptation of the blog article [Enable 2D Lip Sync Wav2Lip Pipeline with OpenVINO Runtime](https://blog.openvino.ai/blog-posts/enable-2d-lip-sync-wav2lip-pipeline-with-openvino-runtime). | ||

|

|

||

| Here is an overview of the Wav2Lip pipeline: | ||

|

|

||

|  | ||

|

|

||

| ## Notebook contents | ||

| The tutorial consists of the following steps: | ||

|

|

||

| - Prerequisites | ||

| - Convert the original model to OpenVINO Intermediate Representation (IR) format | ||

| - Compile models and prepare the pipeline | ||

| - Interactive inference | ||

|

|

||

| ## Installation instructions | ||

| This is a self-contained example that relies solely on its own code.<br> | ||

| We recommend running the notebook in a virtual environment. You only need a Jupyter server to start. | ||

| For details, please refer to [Installation Guide](../../README.md). | ||

| <img referrerpolicy="no-referrer-when-downgrade" src="https://static.scarf.sh/a.png?x-pxid=5b5a4db0-7875-4bfb-bdbd-01698b5b1a77&file=notebooks/wav2lip/README.md" /> |
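The README steps "Convert the original model to OpenVINO Intermediate Representation (IR) format" and "Compile models and prepare the pipeline" could look roughly like the sketch below. It assumes the original models are PyTorch modules and that OpenVINO is installed; the helper names (`ir_xml_path`, `convert_to_ir`, `compile_ir`) and the default device are illustrative and are not taken from the notebook.

```python
from pathlib import Path


def ir_xml_path(model_dir, name):
    """Return the .xml path of an IR model (hypothetical naming scheme)."""
    return Path(model_dir) / f"{name}.xml"


def convert_to_ir(torch_model, example_input, xml_path):
    """Convert a PyTorch module to OpenVINO IR and save it to disk.

    Assumption: `torch_model` is a torch.nn.Module and `example_input`
    is a tensor (or tuple of tensors) that the model accepts.
    """
    # Imported lazily so the helpers can be defined without OpenVINO installed.
    import openvino as ov

    xml_path = Path(xml_path)
    xml_path.parent.mkdir(parents=True, exist_ok=True)
    ov_model = ov.convert_model(torch_model, example_input=example_input)
    # Writes the .xml file plus a .bin weights file next to it.
    ov.save_model(ov_model, str(xml_path))
    return xml_path


def compile_ir(xml_path, device="CPU"):
    """Load a saved IR model and compile it for the given device."""
    import openvino as ov

    core = ov.Core()
    return core.compile_model(str(xml_path), device)
```

Under these assumptions, a model would be converted once with `convert_to_ir(model, example_input, ir_xml_path("models", "wav2lip"))`, and later runs would call `compile_ir` on the saved file instead of repeating the conversion.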


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,25 @@ | ||

| from typing import Callable | ||

| import gradio as gr | ||

| import numpy as np | ||

|

|

||

|

|

||

| examples = [ | ||

|     [ | ||

|         "data_video_sun_5s.mp4", | ||

|         "data_audio_sun_5s.wav", | ||

|     ], | ||

| ] | ||

|

|

||

|

|

||

| def make_demo(fn: Callable): | ||

|     demo = gr.Interface( | ||

|         fn=fn, | ||

|         inputs=[ | ||

|             gr.Video(label="Face video"), | ||

|             gr.Audio(label="Audio", type="filepath"), | ||

|         ], | ||

|         outputs="video", | ||

|         examples=examples, | ||

|         allow_flagging="never", | ||

|     ) | ||

|     return demo |
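`make_demo` expects a callable that receives the face video and the audio clip and returns something Gradio can show as a video. The sketch below is a stand-in for wiring up the UI before the real pipeline exists. It assumes both inputs arrive as file paths; `passthrough_lipsync` is a hypothetical name, and it only copies the input video rather than performing any lip sync.

```python
import shutil
import tempfile
from pathlib import Path


def passthrough_lipsync(face_video: str, audio: str) -> str:
    """Hypothetical placeholder for the inference callback.

    Validates both input paths and returns a copy of the input video.
    The real callback would run the Wav2Lip pipeline here instead.
    """
    for path in (face_video, audio):
        if not Path(path).is_file():
            raise FileNotFoundError(f"Input file not found: {path}")

    # Write the result to a fresh temporary directory so inputs stay untouched.
    out_dir = Path(tempfile.mkdtemp(prefix="wav2lip_demo_"))
    out_path = out_dir / "result.mp4"
    shutil.copyfile(face_video, out_path)
    return str(out_path)
```

With Gradio installed, `make_demo(fn=passthrough_lipsync).launch()` would start the interface using this placeholder. Swapping in the real inference function should require no change to `make_demo`.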
