
Wav2Lip: Accurately Lip-syncing Videos #2417

Merged


24 commits


- bcb6089 Wav2lip initial commit (aleksandr-mokrov)
- f137047 Spellchecking (aleksandr-mokrov)
- b31d9dd tags (aleksandr-mokrov)
- 706285b Extra tags (aleksandr-mokrov)
- ae3a46b Add folder creation (aleksandr-mokrov)
- c7df4d1 Fix pathes (aleksandr-mokrov)
- f196227 Skip macos (aleksandr-mokrov)
- e53ff6e Add gradio and fixes (aleksandr-mokrov)
- ed11046 pip install (aleksandr-mokrov)
- fb4e51d pip install (aleksandr-mokrov)
- 2c7af90 Windows execution (aleksandr-mokrov)
- e190df4 Check macos (aleksandr-mokrov)
- f4bfce8 Exclude macos (aleksandr-mokrov)
- 32dbec8 some improvements (aleksandr-mokrov)
- adb8502 some improvements (aleksandr-mokrov)
- 9a24668 some improvements (aleksandr-mokrov)
- 12f5b68 Output + file merging (aleksandr-mokrov)
- 9b04753 Update output (aleksandr-mokrov)
- 196fd52 Check macos (aleksandr-mokrov)
- 69a2a75 Merge branch 'latest' into wav2lip (aleksandr-mokrov)
- 1ab65fb Remove shell=True for pip install to support < (aleksandr-mokrov)
- aa11474 Merge branch 'wav2lip' of https://github.com/aleksandr-mokrov/openvin… (aleksandr-mokrov)
- 64a260d Remove shell=True for pip install to support < (aleksandr-mokrov)
- fca60a0 Remove shell=True for pip install to support < (aleksandr-mokrov)


    @@ -427,6 +427,7 @@ LibriSpeech
    librispeech
    Lim
    LinearCameraEmbedder
    Lippipeline
    Liu
    LLama
    LLaMa


@@ -0,0 +1,27 @@

# Wav2Lip: Accurately Lip-syncing Videos and OpenVINO

Lip sync technologies are widely used in digital human use cases, where they enhance the user experience in dialog scenarios.

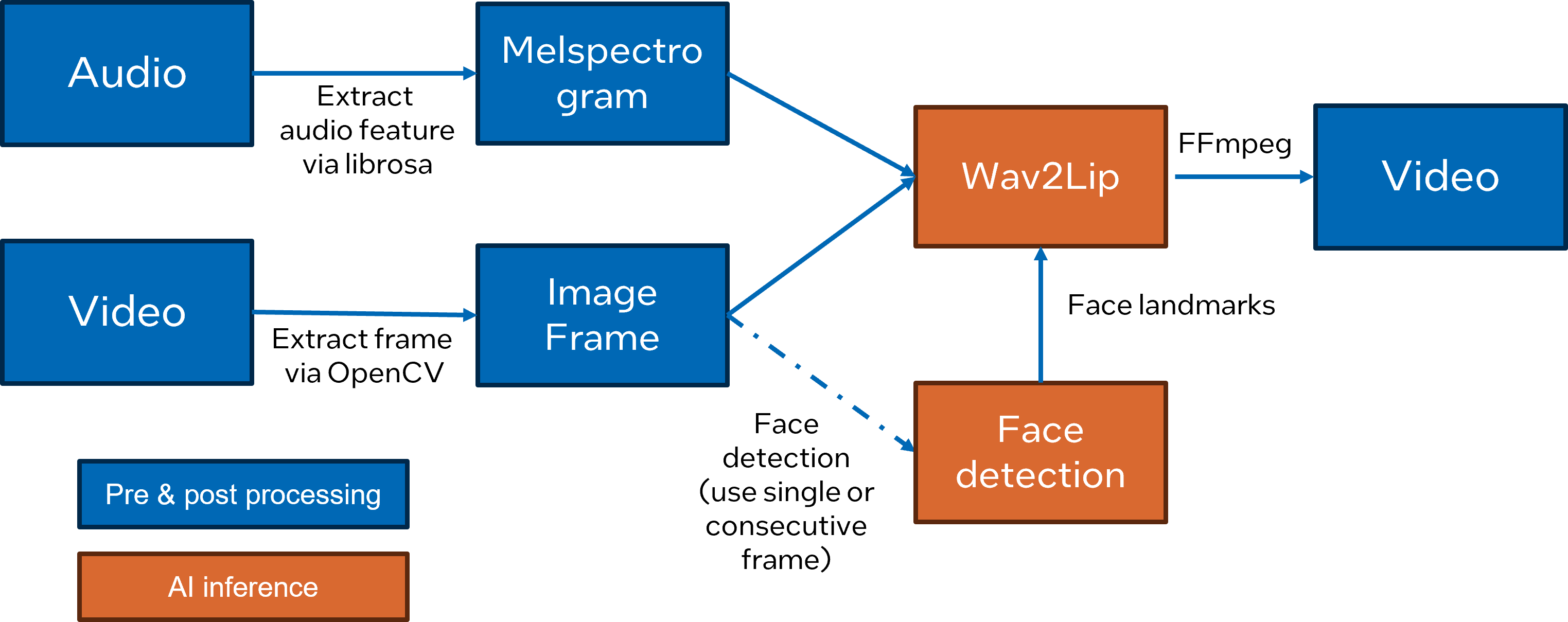

[Wav2Lip](https://github.com/Rudrabha/Wav2Lip) is a novel approach to generating accurate 2D lip-synced videos in the wild from only one video and an audio clip. Wav2Lip leverages an accurate lip-sync "expert" model and consecutive face frames to generate accurate, natural lip motion.

In this notebook, we show how to enable and optimize the Wav2Lip pipeline with OpenVINO. This is an adaptation of the blog article [Enable 2D Lip Sync Wav2Lip Pipeline with OpenVINO Runtime](https://blog.openvino.ai/blog-posts/enable-2d-lip-sync-wav2lip-pipeline-with-openvino-runtime).

Here is an overview of the Wav2Lip pipeline:

## Notebook contents
The tutorial consists of the following steps:

- Prerequisites
- Convert the original model to OpenVINO Intermediate Representation (IR) format
- Compile models and prepare the pipeline
- Interactive inference
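The conversion and compilation steps listed above can be sketched roughly as follows. This is a sketch under stated assumptions, not the notebook's actual code: the helper name `convert_and_compile`, its `ir_path` argument, and the default `"CPU"` device are illustrative, and it assumes the original model is a PyTorch module and that the `openvino` package is installed.

```python
from pathlib import Path


def convert_and_compile(torch_model, example_input, ir_path, device="CPU"):
    """Convert a PyTorch module to OpenVINO IR (only once) and compile it.

    Hypothetical helper: the name and arguments are illustrative only.
    """
    # Imported lazily so that this sketch can be loaded without OpenVINO.
    import openvino as ov

    ir_path = Path(ir_path)
    if not ir_path.exists():
        # Trace the model with an example input, then serialize it to IR
        # (an .xml file plus a .bin file with the weights).
        ov_model = ov.convert_model(torch_model, example_input=example_input)
        ir_path.parent.mkdir(parents=True, exist_ok=True)
        ov.save_model(ov_model, ir_path)

    # Read the IR from disk and compile it for the target device.
    core = ov.Core()
    return core.compile_model(ir_path, device)
```

Caching the IR on disk means the conversion cost is paid on the first run only; later runs go straight to compilation.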

## Installation instructions
This is a self-contained example that relies solely on its own code.<br>
We recommend running the notebook in a virtual environment. You only need a Jupyter server to start.
For details, please refer to the [Installation Guide](../../README.md).
<img referrerpolicy="no-referrer-when-downgrade" src="https://static.scarf.sh/a.png?x-pxid=5b5a4db0-7875-4bfb-bdbd-01698b5b1a77&file=notebooks/wav2lip/README.md" />


@@ -0,0 +1,25 @@

    from typing import Callable
    import gradio as gr
    import numpy as np


    examples = [
        [
            "data_video_sun_5s.mp4",
            "data_audio_sun_5s.wav",
        ],
    ]


    def make_demo(fn: Callable):
        demo = gr.Interface(
            fn=fn,
            inputs=[
                gr.Video(label="Face video"),
                gr.Audio(label="Audio", type="filepath"),
            ],
            outputs="video",
            examples=examples,
            allow_flagging="never",
        )
        return demo
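As a rough illustration of the callback that `make_demo` expects, here is a minimal sketch. It assumes the two input components hand the function file paths (face video first, then audio) and that the `"video"` output accepts a path to a video file. `lip_sync_stub` and the output file name are hypothetical; a real callback would run the Wav2Lip pipeline where this one simply copies the input.

```python
import shutil
import tempfile
from pathlib import Path


def lip_sync_stub(face_video_path: str, audio_path: str) -> str:
    """Hypothetical stand-in for the real inference function.

    Validates both inputs, copies the input video to a new file, and
    returns the path of that copy as the "result" video.
    """
    video = Path(face_video_path)
    audio = Path(audio_path)
    if not video.is_file():
        raise FileNotFoundError(f"Face video not found: {video}")
    if not audio.is_file():
        raise FileNotFoundError(f"Audio not found: {audio}")

    out_dir = Path(tempfile.mkdtemp(prefix="wav2lip_demo_"))
    out_path = out_dir / f"{video.stem}_synced{video.suffix}"
    # Placeholder for the actual lip-sync inference step.
    shutil.copyfile(video, out_path)
    return str(out_path)


# With Gradio installed and make_demo in scope, the stub could be wired up as:
#   demo = make_demo(fn=lip_sync_stub)
#   demo.launch()
```

Keeping the callback as a plain function of two paths makes it easy to exercise without starting the web UI.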


Can you please add some images/animations showing the inference results?


This animation was added. In the notebook properties, the previous one was replaced by this one.