Shubham Tulsiani, Alexei A. Efros, Jitendra Malik.

First, you'll need a working implementation of Torch. The subsequent installation steps are:

##### Install 3D spatial transformer ######

cd external/stn3d

luarocks make stn3d-scm-1.rockspec

##### Additional Dependencies (json and matio) #####

sudo apt-get install libmatio2

luarocks install matio

luarocks install json
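
After running the steps above, a quick check along the following lines can confirm that the tools and rocks are visible. This is only a sketch under stated assumptions: that the Torch launcher is named `th`, and that the rock built from `stn3d-scm-1.rockspec` is registered as `stn3d`. The helper names `check_tool` and `check_rock` are hypothetical and not part of this repository.

```shell
#!/bin/sh
# Sketch: report which tools and rocks from the install steps are available.
# check_tool and check_rock are illustrative helpers, not part of this repo.

check_tool() {
  # $1: executable name to look up on PATH
  if command -v "$1" >/dev/null 2>&1; then
    echo "found: $1"
  else
    echo "missing: $1"
  fi
}

check_rock() {
  # $1: rock name; `luarocks show` exits non-zero when the rock is not installed
  if luarocks show "$1" >/dev/null 2>&1; then
    echo "rock installed: $1"
  else
    echo "rock not installed: $1"
  fi
}

check_tool th        # Torch launcher (assumed name)
check_tool luarocks  # Lua package manager

# Rock names assumed from the install commands above.
for rock in stn3d matio json; do
  check_rock "$rock"
done
```

Any line reporting `missing` or `not installed` points at the install step that should be repeated.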

To train or evaluate the (trained/downloaded) models, you first need to download the ShapeNet dataset (v1) and preprocess it to compute renderings and voxelizations. See the detailed README files for Training or Evaluation of models for subsequent instructions.

Please check out the interactive notebook, which shows reconstructions using the learned models. To run it, you'll need to:

- Install a working implementation of Torch and iTorch.

- Download the pre-trained models (1.5GB) and extract them to 'cachedir/snapshots/shapenet/'

- Edit the absolute paths to the blender executable and the provided '.blend' file in the rendering utility script.
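
The preparation steps listed above could be checked with a small script like the one below, run from the repository root. It is a sketch only: it creates the snapshot directory named above, reports whether anything has been extracted into it, and prints the absolute path of `blender` when it is on `PATH`, which is the value the rendering utility script needs. The function names are hypothetical, and the script neither downloads the models nor edits any file.

```shell
#!/bin/sh
# Sketch: prepare for the demo notebook. Run from the repository root.
# prepare_snapshot_dir and locate_blender are illustrative helpers.

# Directory named in the list above.
SNAPSHOT_DIR="cachedir/snapshots/shapenet"

prepare_snapshot_dir() {
  # $1: directory the pre-trained models should be extracted into
  mkdir -p "$1"
  if [ -n "$(ls -A "$1")" ]; then
    echo "snapshots present in $1"
  else
    echo "no snapshots in $1 yet"
  fi
}

locate_blender() {
  # Prints the absolute path of blender if it is on PATH
  command -v blender || echo "blender not found on PATH"
}

prepare_snapshot_dir "$SNAPSHOT_DIR"
locate_blender
```

If `blender` is not on `PATH`, its absolute path has to be found manually before editing the rendering utility script.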

If you use this code for your research, please consider citing:

@inProceedings{mvcTulsiani18,

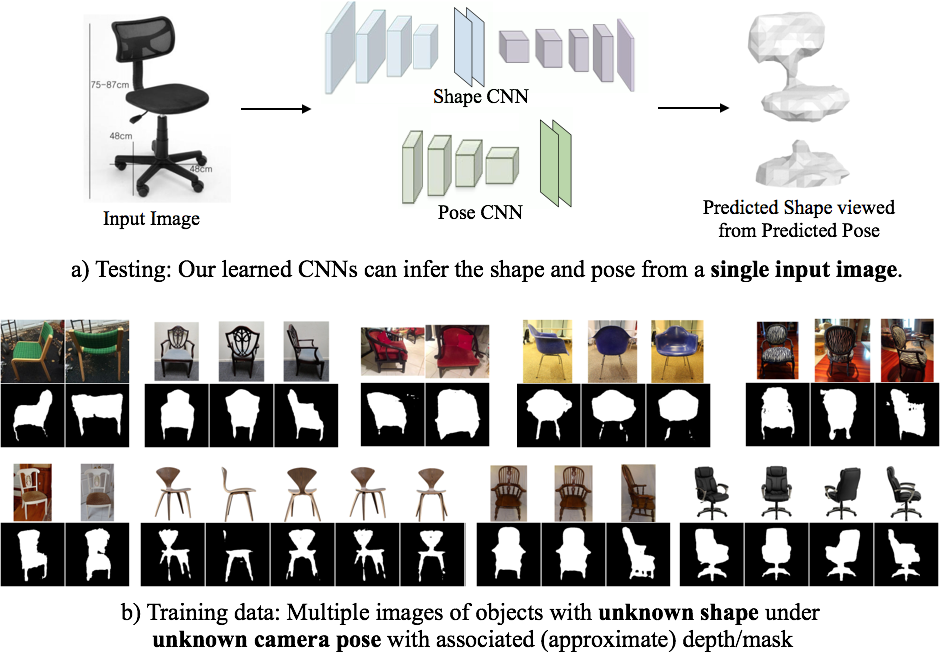

title={Multi-view Consistency as Supervisory Signal

for Learning Shape and Pose Prediction},

author = {Shubham Tulsiani

and Alexei A. Efros

and Jitendra Malik},

booktitle={Computer Vision and Pattern Recognition (CVPR)},

year={2018}

}