
[HELP] duckplyr Benchmarking Comparison #61


Thanks for the benchmark! This looks like a case where we don't support particular semantics but fall back to R instead. What happens if you change the code to

options(conflicts.policy = list(warn = FALSE))

library(duckplyr)

options(duckdb.materialize_message = TRUE)

Sys.setenv(DUCKPLYR_FALLBACK_INFO = TRUE)

data.frame(a = 1:3, b = as.integer(c(1, 1, 2))) |>

as_duckplyr_df() |>

summarize(sum(a, na.rm = TRUE), .by = b)

#> Error processing with relational.

#> Caused by error:

#> ! Binder Error: No function matches the given name and argument types 'sum(INTEGER, BOOLEAN)'. You might need to add explicit type casts.

#> Candidate functions:

#> sum(DECIMAL) -> DECIMAL

#> sum(SMALLINT) -> HUGEINT

#> sum(INTEGER) -> HUGEINT

#> sum(BIGINT) -> HUGEINT

#> sum(HUGEINT) -> HUGEINT

#> sum(DOUBLE) -> DOUBLE

#> b sum(a, na.rm = TRUE)

#> 1 1 3

#> 2 2 3

data.frame(a = 1:3, b = as.integer(c(1, 1, 2))) |>

as_duckplyr_df() |>

summarize(sum(a), .by = b)

#> materializing:

#> ---------------------

#> --- Relation Tree ---

#> ---------------------

#> Aggregate [b, sum(a)]

#> r_dataframe_scan(0x10daa72c8)

#>

#> ---------------------

#> -- Result Columns --

#> ---------------------

#> - b (INTEGER)

#> - sum(a) (HUGEINT)

#>

#> b sum(a)

#> 1 2 3

#> 2 1 3

Created on 2023-10-15 with reprex v2.0.2
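For anyone who needs NA-tolerant sums while `sum(a, na.rm = TRUE)` triggers the fallback shown above, one possible workaround is to drop the NAs upstream and then call plain `sum(a)`. The following is only a sketch in plain dplyr: the toy data and the column name `total` are made up for illustration, and whether the `filter()` step itself stays on DuckDB when run through `as_duckplyr_df()` is an assumption that has not been checked here.

```r
library(dplyr)

# Hypothetical toy data: one NA in column `a`, grouped by `b`
df <- data.frame(a = c(1L, NA, 3L), b = c(1L, 1L, 2L))

# Reference result: sum() with na.rm = TRUE (the form that fell back to R above)
with_na_rm <- df |>
  summarize(total = sum(a, na.rm = TRUE), .by = b)

# Workaround sketch: remove NAs upstream, then use plain sum()
filtered <- df |>
  filter(!is.na(a)) |>
  summarize(total = sum(a), .by = b)

# Both agree here because every group keeps at least one non-NA value
stopifnot(isTRUE(all.equal(with_na_rm, filtered)))
```

Note that the two forms are not equivalent in general: a group whose values are all NA gets a sum of 0 with `na.rm = TRUE`, but disappears entirely after the upstream `filter()`.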

|

@krlmlr This was definitely the fix and you can see below the significantly improved performance with duckplyr, thank you! 🚀 Below is the revised

There are (often) times where I want to keep NAs in my data without removing them in some upstream operation... In the future, if duckplyr could support

Benchmark plots: 1 million rows, 10 million rows, 100 million rows, 500 million rows

|

And a quick follow-up... Typically you wouldn't care about NAs if you're

|

Thanks for the follow-up! Supporting

I was at Posit Conf when I learned about duckplyr and this package is awesome! I'm looking forward to developing with it and thanks to the DuckDB Labs team for spearheading this initiative.

I ran some benchmarks to compare speed across a few ETL approaches using dplyr, arrow, duckdb,

arrow::to_duckdb(), and duckplyr. I assume the reason for the slower ETL speeds using duckplyr boils down to user error 🙋♂️ but having said that, I'd appreciate any insight if my duckplyr setup and/or application is flawed in some way.Any pointers would help, thank you! Below is the code that I used to benchmark performance including the plots generated by

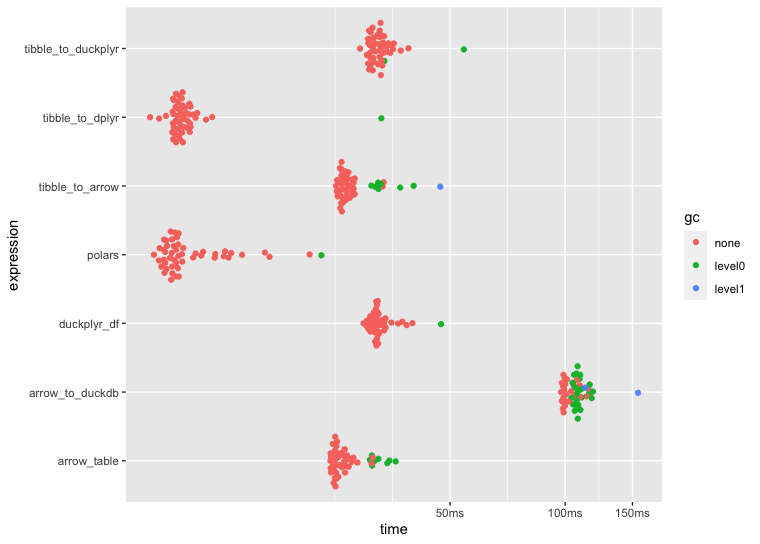

autoplot(bnch):1 million rows

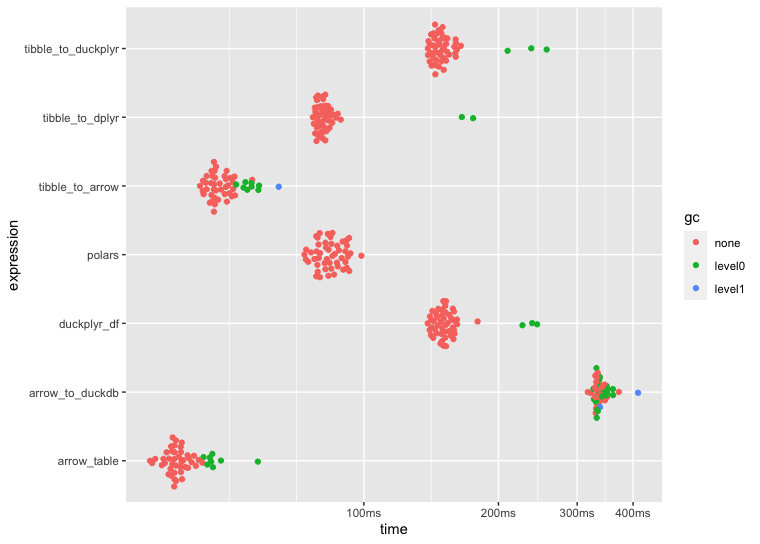

10 million rows

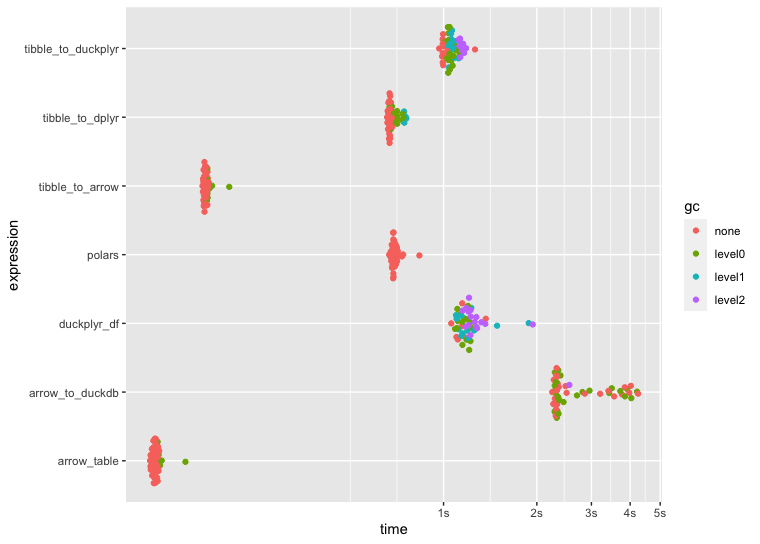

100 million rows

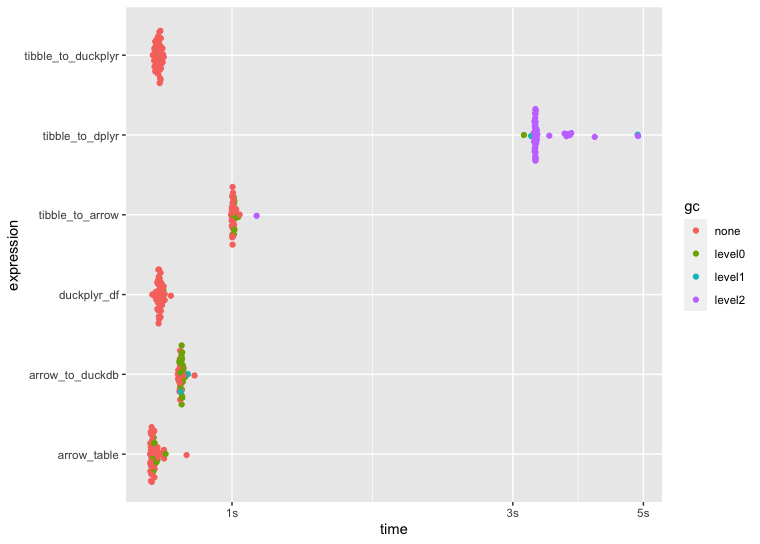

500 million rows
