-

Notifications

You must be signed in to change notification settings - Fork 77

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

fix minikube start failure #2771

base: main

Are you sure you want to change the base?

Conversation

|

Warning There were issues while running some tools. Please review the errors and either fix the tool’s configuration or disable the tool if it’s a critical failure. 🔧 golangci-lint (1.62.2)level=warning msg="[config_reader] The configuration option 📝 Walkthrough<details>

<summary>📝 Walkthrough</summary>

## Walkthrough

This pull request introduces significant updates to the development and testing infrastructure for a Kubernetes-based project. The modifications enhance cluster management, particularly for Kind (Kubernetes in Docker) environments. Key changes include the installation of the Docker CLI, configuration for volume snapshot support, updates to GitHub Actions workflows, and preparations for read replica deployments. The changes aim to streamline the setup and testing processes across various configuration files and build scripts.

## Changes

| File | Change Summary |

|------|----------------|

| `Makefile.d/e2e.mk` | Updated readiness checks for metrics-server and agent, replaced `minikube/restart` with `kind/vs/restart`. |

| `Makefile.d/minikube.mk` | Added Docker binary prerequisite, updated Minikube start command with Docker driver and logging options, enabled metrics-server addon. |

| `Makefile.d/tools.mk` | Added new `docker-cli/install` phony target for Docker CLI installation. |

| `dockers/ci/base/Dockerfile` | Added `sudo` package and Docker CLI installation step. |

| `dockers/dev/Dockerfile` | Integrated Docker CLI installation step. |

| `Makefile.d/kind.mk` | Added volume snapshot support, new targets for Kind cluster management, Docker binary dependency. |

| `.github/actions/setup-e2e/action.yaml` | Added `require_kind` input parameter and Kind environment setup step. |

| `.github/workflows/e2e.yaml` | Updated E2E job to use Kind, added read replica deployment step. |

| `.gitignore` | Refined Rust-related ignored files and added new ignore rules. |

| `k8s/debug/kind/e2e.yaml` | New Kubernetes Kind cluster configuration file. |

| `Makefile.d/k8s.mk` | Increased sleep duration before checking read replica pod readiness. |

| `.github/helm/values/values-readreplica.yaml` | Added read replica specific name and volume configuration. |

| `.github/actions/e2e-deploy-vald-readreplica/action.yaml` | Commented out readiness checks for pod deployment. |

| `.github/actions/e2e-deploy-vald/action.yaml` | Updated conditions for waiting on pod readiness. |

## Possibly related PRs

- **#2498**: This PR introduces a new workflow for updating actions, which may relate to the overall management of GitHub Actions in the main PR.

- **#2595**: This PR focuses on automatically generating workflows, which could be relevant to the refactoring of GitHub Actions in the main PR.

- **#2596**: This PR adds a check for context cancellation, which may indirectly relate to the robustness of workflows in the main PR.

- **#2639**: This PR includes various enhancements and refactorings, which may connect with the overall improvements in the main PR.

- **#2670**: This PR refactors the Makefile to use absolute paths, which could relate to the changes in the main PR regarding path management.

- **#2673**: This PR backports changes related to the Makefile, which may connect with the refactoring efforts in the main PR.

- **#2696**: This PR introduces a string sorted topological sort, which may relate to the overall improvements in the main PR.

- **#2699**: This PR focuses on preparing for the release, which may connect with the overall enhancements in the main PR.

- **#2743**: This PR refactors Dockerfiles and updates `.gitattributes`, which may relate to the changes in the main PR.

- **#2745**: This PR backports the changes from PR #2743, which may connect with the refactoring efforts in the main PR.

- **#2773**: This PR refactors GitHub Actions, which is directly relevant to the main PR.

- **#2779**: This PR backports changes from PR #2773, making it directly related to the main PR.

## Suggested labels

`area/internal`

## Suggested reviewers

- hlts2

- vankichi

</details>Tip CodeRabbit's docstrings feature is now available as part of our Early Access Program! Simply use the command Thank you for using CodeRabbit. We offer it for free to the OSS community and would appreciate your support in helping us grow. If you find it useful, would you consider giving us a shout-out on your favorite social media? 🪧 TipsChatThere are 3 ways to chat with CodeRabbit:

Note: Be mindful of the bot's finite context window. It's strongly recommended to break down tasks such as reading entire modules into smaller chunks. For a focused discussion, use review comments to chat about specific files and their changes, instead of using the PR comments. CodeRabbit Commands (Invoked using PR comments)

Other keywords and placeholders

CodeRabbit Configuration File (

|

|

[CHATOPS:HELP] ChatOps commands.

|

Deploying vald with

|

| Latest commit: |

75d1062

|

| Status: | ✅ Deploy successful! |

| Preview URL: | https://de39cedf.vald.pages.dev |

| Branch Preview URL: | https://bugfix-e2e-readreplica-minik.vald.pages.dev |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Actionable comments posted: 2

📜 Review details

Configuration used: CodeRabbit UI

Review profile: CHILL

⛔ Files ignored due to path filters (1)

hack/docker/gen/main.gois excluded by!**/gen/**

📒 Files selected for processing (6)

.github/workflows/e2e.yaml(1 hunks)Makefile.d/e2e.mk(1 hunks)Makefile.d/minikube.mk(2 hunks)Makefile.d/tools.mk(1 hunks)dockers/ci/base/Dockerfile(2 hunks)dockers/dev/Dockerfile(1 hunks)

🔇 Additional comments (7)

Makefile.d/minikube.mk (2)

28-30: LGTM: Explicit Docker driver configuration

The changes properly ensure Docker CLI availability and explicitly specify the Docker driver, which helps prevent driver selection issues during Minikube startup.

35-35: Verify metrics-server requirement

The addition of metrics-server addon might be related to the readreplica e2e test requirements.

✅ Verification successful

Metrics-server requirement is verified for readreplica e2e tests

Based on the analysis of the e2e test files, specifically in Makefile.d/e2e.mk, the metrics-server is indeed required for the readreplica e2e tests. The e2e/actions/run/readreplica target explicitly waits for the metrics-server to be available and ready before proceeding with the readreplica tests:

kubectl wait -n kube-system --for=condition=Available deployment/metrics-server --timeout=$(E2E_WAIT_FOR_START_TIMEOUT)

kubectl wait -n kube-system --for=condition=Ready pod -l k8s-app=metrics-server --timeout=$(E2E_WAIT_FOR_START_TIMEOUT)

kubectl wait -n kube-system --for=condition=ContainersReady pod -l k8s-app=metrics-server --timeout=$(E2E_WAIT_FOR_START_TIMEOUT)Additionally, the readreplica configuration in k8s/readreplica/configmap.yaml shows metrics-related configurations, indicating that metrics monitoring is an integral part of the readreplica functionality.

🏁 Scripts executed

The following scripts were executed for the analysis:

Script:

#!/bin/bash

# Description: Check if readreplica tests depend on metrics-server

# Search for metrics-server usage in e2e tests

rg -l "metrics-server|metrics\.k8s\.io" --type yaml --type go | grep -i "e2e\|test"

# Check if readreplica manifests have resource requirements

rg -l "readreplica.*resources:" --type yaml

Length of output: 131

Script:

#!/bin/bash

# Let's try a broader search to understand the context better

# Search for metrics-server references in all files

rg -l "metrics-server"

# Search for any metrics-related configurations in test files

rg -l "metrics" test/ e2e/

# Look for resource requirements or monitoring in test files

rg -A 5 "resources:|monitoring" test/ e2e/

# Check if there are any readreplica test files

fd readreplica

Length of output: 732

Script:

#!/bin/bash

# Let's check the content of relevant files to understand the metrics-server requirement

# Check Makefile.d/e2e.mk for metrics-server usage context

cat Makefile.d/e2e.mk

# Check readreplica job manifests for resource requirements

rg -A 5 "resources:" charts/vald/templates/index/job/readreplica/

rg -A 5 "resources:" charts/vald-readreplica/

# Check if metrics-server is mentioned in readreplica related files

rg "metrics" k8s/readreplica/

rg "metrics" pkg/index/job/readreplica/

Length of output: 10509

dockers/ci/base/Dockerfile (2)

126-126: LGTM: Docker CLI installation

Adding docker-cli installation aligns with the Minikube prerequisites and supports the fix for Minikube start failure.

75-75: Verify sudo requirement

The addition of sudo package might be related to Docker operations. However, since we're running as root (USER root:root), verify if sudo is actually needed.

dockers/dev/Dockerfile (1)

140-140: LGTM: Consistent Docker CLI installation

Adding docker-cli installation in the dev environment maintains consistency with the CI environment and ensures Minikube works in both contexts.

Makefile.d/tools.mk (1)

264-272: LGTM: Docker CLI installation implementation

The implementation is well-structured and follows the established pattern for tool installations:

- Uses official Docker repository

- Handles architecture detection

- Includes proper cleanup

- Follows the same pattern as other tool installations

.github/workflows/e2e.yaml (1)

Line range hint 312-316: Verify the necessity of disabling k3d

The change to use Minikube exclusively (by disabling k3d) for this test might indicate an underlying issue that should be investigated.

Let's check if there are any k3d-specific configurations that might be causing issues:

✅ Verification successful

Let me gather more information about the usage of k3d and minikube in the e2e workflow.

Let me check the actual setup-k3d and setup-minikube actions to understand their configurations and potential differences.

Let me check the e2e workflow file to understand the specific test that's being modified and its requirements.

Let me check the specific test configuration and Makefile to understand the minikube setup.

The switch to Minikube for read replica test is justified and necessary

The read replica test specifically requires CSI Driver and Volume Snapshots support for testing the read replica functionality, as evidenced by the configuration in values-readreplica.yaml which uses:

storageClass: csi-hostpath-scsnapshot_classname: "csi-hostpath-snapclass"readreplica.enabled: true

Minikube is explicitly configured in minikube.mk to support these features with the commands:

minikube addons enable volumesnapshots

minikube addons enable csi-hostpath-driver

The comment in minikube.mk also confirms this: "Only use this for development related to Volume Snapshots. Usually k3d is faster."

🏁 Scripts executed

The following scripts were executed for the analysis:

Script:

#!/bin/bash

# Check for k3d-specific configurations

rg -A 5 "k3d" .github/helm/values/

rg -A 5 "k3d" .github/actions/setup-e2e/

Length of output: 1407

Script:

#!/bin/bash

# Check for k3d and minikube usage in e2e workflow

rg -A 5 "setup-e2e" .github/workflows/e2e.yaml

# Check for any k3d or minikube related issues or discussions

rg -i "k3d|minikube" .github/ISSUE_TEMPLATE/

rg -i "k3d|minikube" .github/pull_request_template.md

Length of output: 2255

Script:

#!/bin/bash

# Check k3d and minikube setup actions

cat .github/actions/setup-k3d/action.yaml

cat .github/actions/setup-minikube/action.yaml

# Check for any related test failures or issues

rg -i "failed.*k3d|k3d.*failed" .github/workflows/

rg -i "failed.*minikube|minikube.*failed" .github/workflows/

Length of output: 4113

Script:

#!/bin/bash

# Check the specific e2e test configuration and its context

rg -B 10 -A 10 'require_k3d: "false"' .github/workflows/e2e.yaml

# Check if there are any minikube-specific configurations in the repository

rg -l "minikube" .

Length of output: 1049

Script:

#!/bin/bash

# Check the read replica test configuration

cat .github/helm/values/values-readreplica.yaml

# Check minikube configuration in Makefile

cat Makefile.d/minikube.mk

# Check if this is the only test using minikube

rg "require_minikube: \"true\"" .github/workflows/

Length of output: 3809

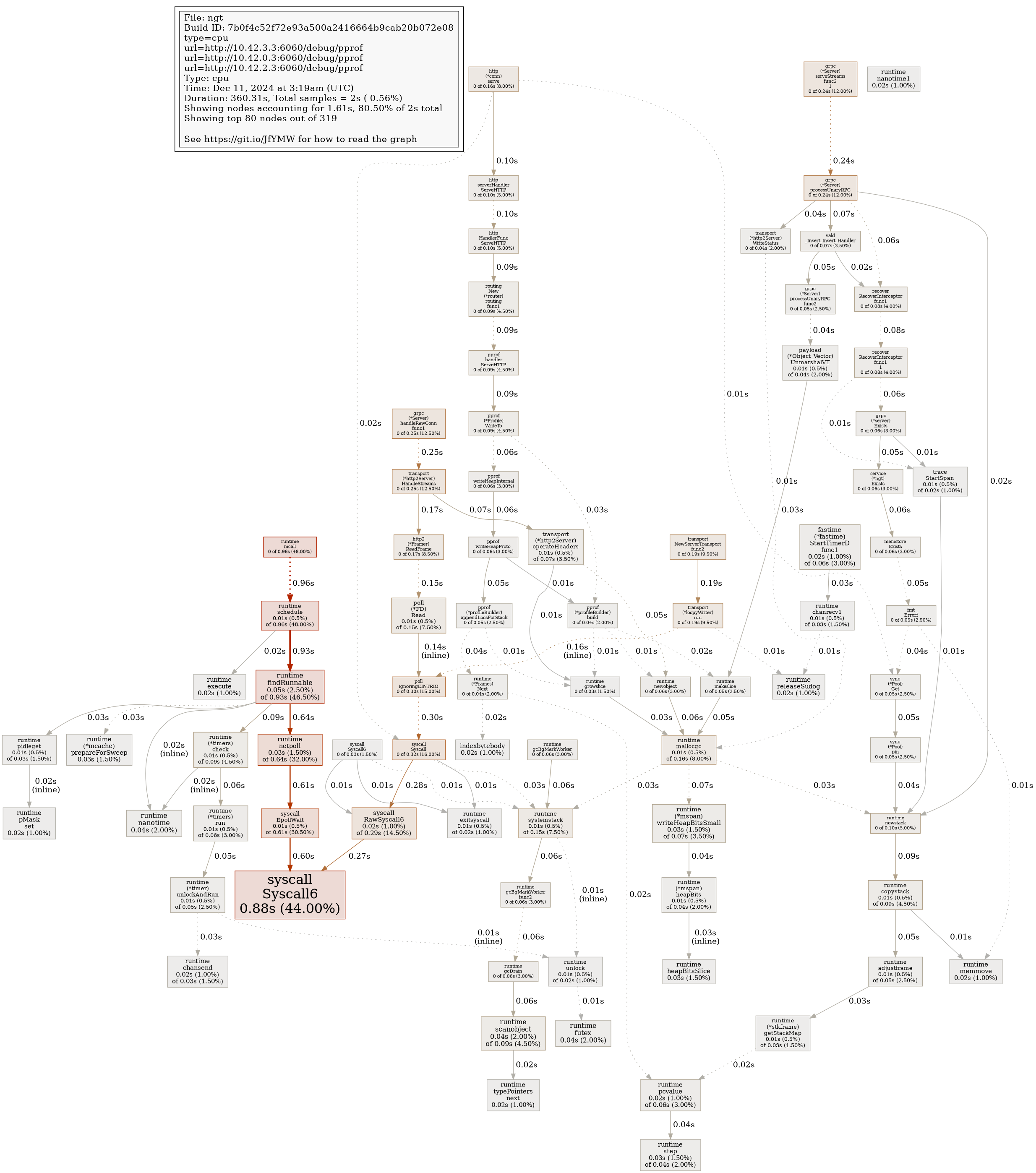

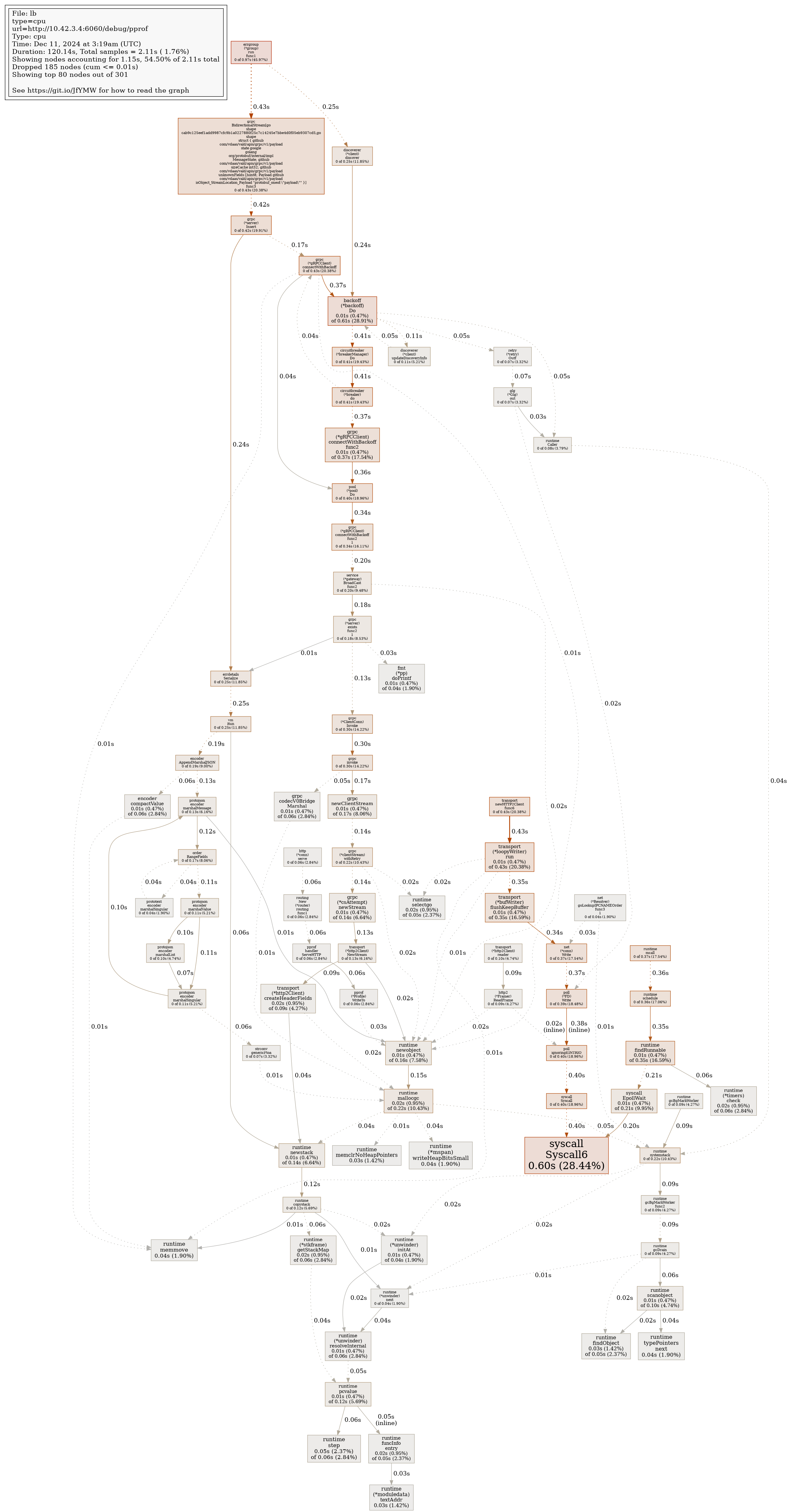

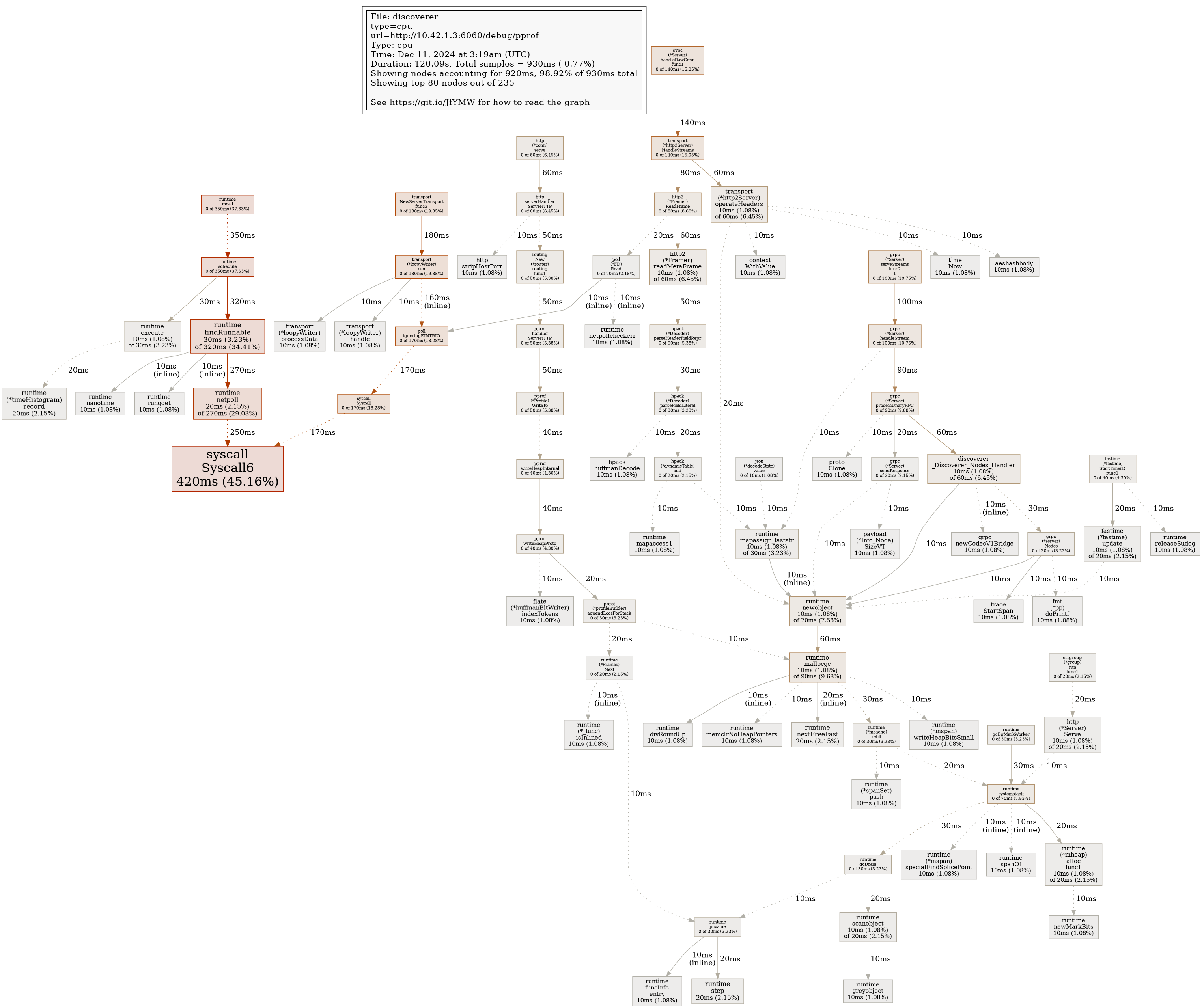

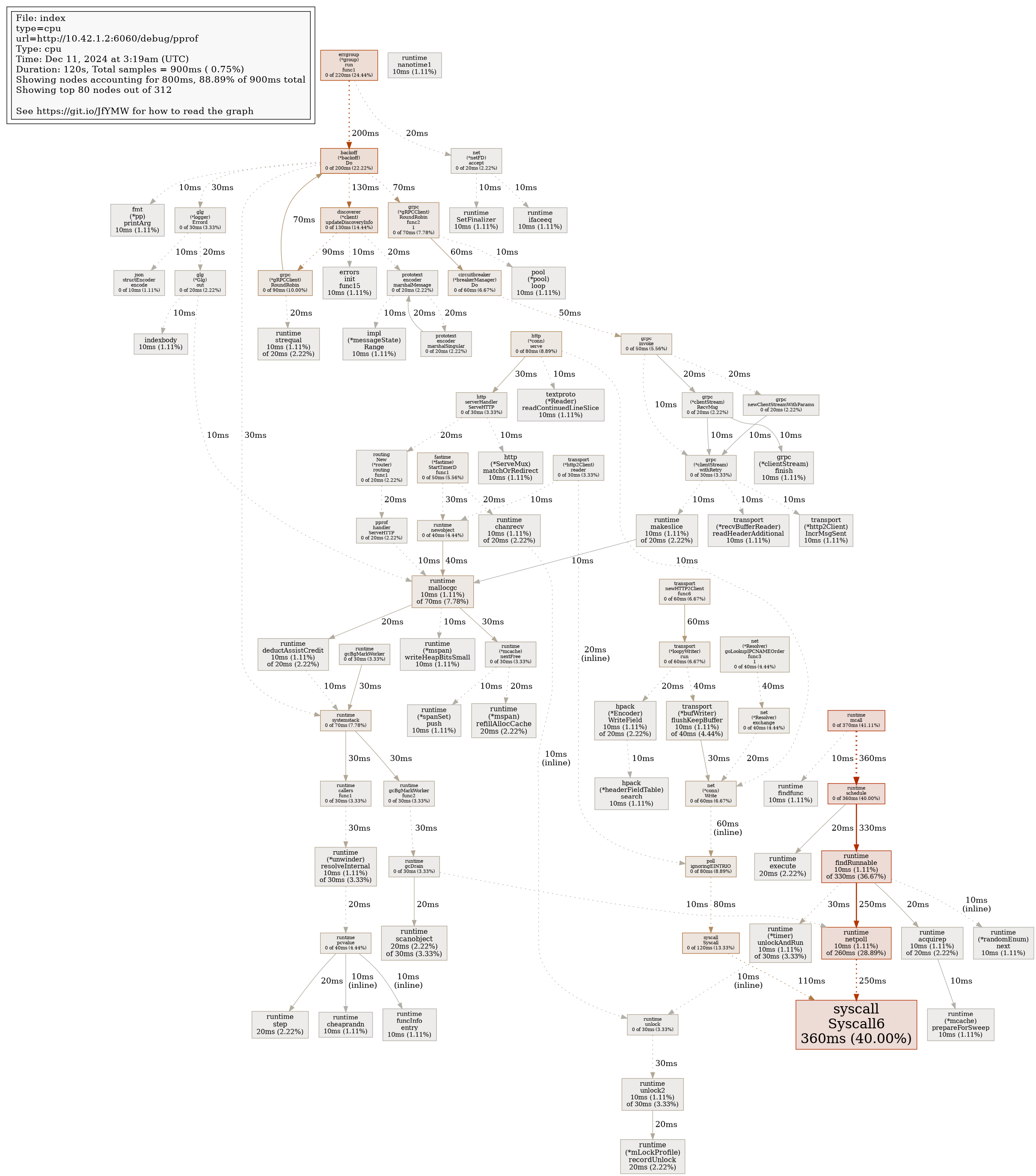

Profile Report

|

|

[FOSSA] The scan result will be available at https://app.fossa.com/projects/custom%2B21465%2Fvald/refs/branch/bugfix%2Fe2e%2Freadreplica-minikube-start-failure/c60fb5dfdba098b78236afdf742ba98017e9a68b |

Profile Report

|

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Actionable comments posted: 0

🧹 Nitpick comments (3)

.github/helm/values/values-readreplica.yaml (3)

19-19: Consider reverting debug logging after troubleshootingWhile debug logging is helpful for troubleshooting the minikube start failure, it can impact performance and generate excessive logs. Consider adding a TODO to revert this to

infolevel after the issues are resolved.

42-60: Optimize init containers configurationThe init containers configuration could be optimized:

- Consider using

IfNotPresentinstead ofAlwaysfor the pull policy of init containers- The configuration is repetitive and could be templated

Consider refactoring to reduce duplication, for example:

initContainers: defaults: &initContainerDefaults type: wait-for image: busybox:stable imagePullPolicy: IfNotPresent sleepDuration: 2 containers: - <<: *initContainerDefaults name: wait-for-discoverer target: discoverer - <<: *initContainerDefaults name: wait-for-agent target: agent - <<: *initContainerDefaults name: wait-for-agent-readreplica target: agent-readreplica

Line range hint

19-90: Overall changes look well-structuredThe modifications form a cohesive solution for the minikube start failure by:

- Enabling detailed logging for troubleshooting

- Adding appropriate health checks

- Ensuring proper startup order through init containers

- Configuring read replica volumes correctly

Consider documenting these startup dependencies and timing requirements in the project's operational documentation to help with future troubleshooting and configuration tuning.

📜 Review details

Configuration used: CodeRabbit UI

Review profile: CHILL

Plan: Pro

📒 Files selected for processing (1)

.github/helm/values/values-readreplica.yaml(3 hunks)

🔇 Additional comments (2)

.github/helm/values/values-readreplica.yaml (2)

89-90: Verify volume configuration consistency

The read replica volume configuration looks correct, but let's ensure:

- The PVC name matches any existing claims

- The configuration aligns with the snapshot class configuration

✅ Verification successful

Volume configuration is consistent with project standards

The volume configuration is correctly structured and aligns with the project's patterns:

- The volume name

vald-agent-readreplica-pvcfollows the established naming convention, similar to other volume configurations likevald-agent-ngt-readreplica-pvc - The configuration is properly integrated with the snapshot system, as evidenced by the snapshot templates in

charts/vald-readreplica/templates/ - PVC and volume snapshot configurations are consistently defined across the Helm charts

🏁 Scripts executed

The following scripts were executed for the analysis:

Script:

#!/bin/bash

# Description: Check volume and snapshot configurations

# Search for related PVC configurations

rg -g '*.yaml' -g '*.yml' 'vald-agent-readreplica-pvc'

# Check for snapshot class references

rg -g '*.yaml' -g '*.yml' 'csi-hostpath-snapclass'

Length of output: 105

Script:

#!/bin/bash

# Let's broaden the search to find any volume-related configurations

# Search for PVC patterns in yaml files

rg -g '*.yaml' -g '*.yml' 'persistentVolumeClaim|volumeClaimTemplates'

# Search for volume name patterns

rg -g '*.yaml' -g '*.yml' 'volume_name:|volumeName:'

# Search for snapshot-related configurations

rg -g '*.yaml' -g '*.yml' 'snapshot|VolumeSnapshot'

Length of output: 2659

22-29: Verify if 120s initial delay is necessary

The 120-second initial delay for both liveness and readiness probes seems quite long. This could significantly impact:

- Pod startup time

- Recovery time during failures

- Overall deployment time

Let's analyze the startup times to determine if this delay is justified:

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Actionable comments posted: 1

📜 Review details

Configuration used: CodeRabbit UI

Review profile: CHILL

Plan: Pro

📒 Files selected for processing (5)

.github/helm/values/values-readreplica.yaml(3 hunks).github/workflows/e2e.yaml(2 hunks)Makefile.d/e2e.mk(2 hunks)Makefile.d/k8s.mk(5 hunks)Makefile.d/minikube.mk(2 hunks)

🚧 Files skipped from review as they are similar to previous changes (4)

- Makefile.d/minikube.mk

- Makefile.d/e2e.mk

- Makefile.d/k8s.mk

- .github/helm/values/values-readreplica.yaml

🧰 Additional context used

🪛 actionlint (1.7.4)

.github/workflows/e2e.yaml

343-343: property "deploy_vald" is not defined in object type {deploy_vald_readreplica: {conclusion: string; outcome: string; outputs: {pod_name: string}}; setup_e2e: {conclusion: string; outcome: string; outputs: {helm_extra_options: string; image_tags: string}}}

(expression)

🔇 Additional comments (1)

.github/workflows/e2e.yaml (1)

318-318: LGTM: Infrastructure change from Minikube to Kind

The switch to Kind (Kubernetes in Docker) is a good solution to address the Minikube start failure mentioned in the PR objectives.

.github/workflows/e2e.yaml

Outdated

| @@ -347,7 +340,7 @@ jobs: | |||

| E2E_TARGET_NAMESPACE=default \ | |||

| e2e/readreplica | |||

| env: | |||

| POD_NAME: ${{ steps.deploy_vald_readreplica.outputs.POD_NAME }} | |||

| POD_NAME: ${{ steps.deploy_vald.outputs.POD_NAME }} | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Critical: Invalid step reference in POD_NAME environment variable

The POD_NAME environment variable references steps.deploy_vald.outputs.POD_NAME, but there is no deploy_vald step in this job. This will cause the workflow to fail.

Apply this diff to fix the reference:

- POD_NAME: ${{ steps.deploy_vald.outputs.POD_NAME }}

+ POD_NAME: ${{ steps.deploy_vald_readreplica.outputs.POD_NAME }}📝 Committable suggestion

‼️ IMPORTANT

Carefully review the code before committing. Ensure that it accurately replaces the highlighted code, contains no missing lines, and has no issues with indentation. Thoroughly test & benchmark the code to ensure it meets the requirements.

| POD_NAME: ${{ steps.deploy_vald.outputs.POD_NAME }} | |

| POD_NAME: ${{ steps.deploy_vald_readreplica.outputs.POD_NAME }} |

🧰 Tools

🪛 actionlint (1.7.4)

343-343: property "deploy_vald" is not defined in object type {deploy_vald_readreplica: {conclusion: string; outcome: string; outputs: {pod_name: string}}; setup_e2e: {conclusion: string; outcome: string; outputs: {helm_extra_options: string; image_tags: string}}}

(expression)

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Actionable comments posted: 0

♻️ Duplicate comments (1)

.github/workflows/e2e.yaml (1)

343-343:⚠️ Potential issueCritical: Invalid step reference in POD_NAME environment variable

The

POD_NAMEenvironment variable referencessteps.deploy_vald.outputs.POD_NAME, but there is nodeploy_valdstep in this job. This will cause the workflow to fail.Apply this diff to fix the reference:

- POD_NAME: ${{ steps.deploy_vald.outputs.POD_NAME }} + POD_NAME: ${{ steps.deploy_vald_readreplica.outputs.POD_NAME }}🧰 Tools

🪛 actionlint (1.7.4)

343-343: property "deploy_vald" is not defined in object type {deploy_vald_readreplica: {conclusion: string; outcome: string; outputs: {pod_name: string}}; setup_e2e: {conclusion: string; outcome: string; outputs: {helm_extra_options: string; image_tags: string}}}

(expression)

🧹 Nitpick comments (3)

.github/workflows/e2e.yaml (1)

344-347: Consider enhancing failure logging.The failure logging step could be improved by:

- Including more context (e.g., pod status, events)

- Setting a retention period for the logs

Apply this diff to enhance the logging:

- name: output logs on failure if: failure() run: | - cat ./gateway.log + echo "=== Pod Status ===" + kubectl get pods -o wide + echo "=== Recent Events ===" + kubectl get events --sort-by='.lastTimestamp' + echo "=== Gateway Logs ===" + cat ./gateway.logMakefile.d/k8s.mk (2)

Line range hint

135-155: Consider adding validation before deployment.The deployment target could benefit from pre-deployment validation to ensure manifest correctness.

Add this before the kubectl apply commands:

k8s/vald/deploy: k8s/vald/manifests + @echo "Validating manifests..." + @find $(TEMP_DIR)/vald/templates -type f -name "*.yaml" -exec kubectl apply --dry-run=client -f {} \; @echo "Permitting error because there's some cases nothing to apply"

Line range hint

239-270: Enhance pod readiness checks.The read replica deployment includes good pod readiness checks but could be improved with timeout handling and status reporting.

Apply this diff to enhance the readiness checks:

kubectl wait --for=condition=ready pod -l app=vald-agent --timeout=600s kubectl wait --for=condition=ready pod -l app.kubernetes.io/name=vald-readreplica --timeout=600s + @echo "=== Deployment Status ===" + @kubectl get pods -l app=vald-agent -o wide + @kubectl get pods -l app.kubernetes.io/name=vald-readreplica -o wide + @if [ $$? -ne 0 ]; then \ + echo "Deployment failed. Check pod events:"; \ + kubectl get events --sort-by='.lastTimestamp' | grep -E "vald-agent|vald-readreplica"; \ + exit 1; \ + fi

📜 Review details

Configuration used: CodeRabbit UI

Review profile: CHILL

Plan: Pro

📒 Files selected for processing (3)

.github/actions/e2e-deploy-vald-readreplica/action.yaml(1 hunks).github/workflows/e2e.yaml(2 hunks)Makefile.d/k8s.mk(5 hunks)

🚧 Files skipped from review as they are similar to previous changes (1)

- .github/actions/e2e-deploy-vald-readreplica/action.yaml

🧰 Additional context used

🪛 actionlint (1.7.4)

.github/workflows/e2e.yaml

343-343: property "deploy_vald" is not defined in object type {deploy_vald_readreplica: {conclusion: string; outcome: string; outputs: {pod_name: string}}; setup_e2e: {conclusion: string; outcome: string; outputs: {helm_extra_options: string; image_tags: string}}}

(expression)

🔇 Additional comments (2)

.github/workflows/e2e.yaml (1)

318-318: Verify Kind cluster configuration for read replica tests.

The change from require_minikube to require_kind needs proper Kind cluster configuration to support the read replica functionality.

✅ Verification successful

Based on the review of the setup-e2e action and helm values configuration, I can now provide a final response:

Kind cluster configuration is properly set up for read replica functionality

The change from require_minikube to require_kind is valid as:

- The setup-e2e action includes proper Kind cluster setup via

make kind/vs/start - The values-readreplica.yaml configures necessary components:

- CSI hostpath storage class for persistent volumes

- Snapshot support via

csi-hostpath-snapclass - Read replica configuration with proper volume management

🏁 Scripts executed

The following scripts were executed for the analysis:

Script:

#!/bin/bash

# Description: Verify Kind cluster configuration for read replica support.

# Check if Kind cluster configuration exists

fd -t f "kind.*\.yaml" -x cat {} \; | grep -A 5 "extraMounts\|extraPortMappings"

# Check if Kind cluster has the necessary features enabled

kubectl get nodes -o json | jq -r '.items[].spec.podCIDR'

Length of output: 243

Script:

#!/bin/bash

# Let's check the workflow configuration and related files to understand the Kind setup

# Check the workflow file for Kind setup

cat .github/workflows/e2e.yaml

# Look for any Kind configuration files in the repository

fd -t f "kind|k8s|cluster" -x cat {} \;

# Search for any documentation about Kind setup

fd -t f "README|CONTRIBUTING" -x cat {} \;

Length of output: 115445

Script:

#!/bin/bash

# Let's check the setup-e2e action and helm values to understand the Kind configuration

# Check setup-e2e action

cat .github/actions/setup-e2e/action.yaml

# Check helm values for read replica setup

cat .github/helm/values/values-readreplica.yaml

Length of output: 6583

Makefile.d/k8s.mk (1)

Line range hint 114-134: LGTM: Well-structured manifest generation.

The manifest generation target is well-organized and includes all necessary image repository configurations.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Actionable comments posted: 0

🧹 Nitpick comments (1)

pkg/agent/core/ngt/service/ngt.go (1)

890-892: Return a closed channel instead of nil

Returning nil can be risky if any upstream code waits to receive from the channel. Consider returning a closed channel to avoid blocking or upstream panics.Example refactor:

890 func (n *ngt) Start(ctx context.Context) <-chan error { 891 if n.isReadReplica { - return nil + c := make(chan error) + close(c) // signals no operations needed + return c } ...

Codecov ReportAttention: Patch coverage is

Additional details and impacted files@@ Coverage Diff @@

## main #2771 +/- ##

=======================================

Coverage 23.92% 23.93%

=======================================

Files 546 546

Lines 54544 54547 +3

=======================================

+ Hits 13052 13054 +2

- Misses 40705 40707 +2

+ Partials 787 786 -1 ☔ View full report in Codecov by Sentry. |

3358169 to

a3fd605

Compare

Co-authored-by: coderabbitai[bot] <136622811+coderabbitai[bot]@users.noreply.github.com> Signed-off-by: Kosuke Morimoto <kou.morimoto@gmail.com>

a3fd605 to

75d1062

Compare

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Actionable comments posted: 0

♻️ Duplicate comments (3)

Makefile.d/kind.mk (3)

78-78: 🛠️ Refactor suggestionImprove reliability of e2e.yaml modification

The current sed command might fail silently if:

- host.docker.internal is not in /etc/hosts

- The file pattern isn't found in e2e.yaml

-sed -e 's/apiServerAddress: "127.0.0.1"/apiServerAddress: "$(shell grep host.docker.internal /etc/hosts | cut -f1)"/' $(ROOTDIR)/k8s/debug/kind/e2e.yaml | kind create cluster --name $(NAME)-vs --config - +HOST_IP := $(shell grep host.docker.internal /etc/hosts | cut -f1) +ifeq ($(HOST_IP),) + $(error host.docker.internal not found in /etc/hosts) +endif +sed -e 's/apiServerAddress: "127.0.0.1"/apiServerAddress: "$(HOST_IP)"/' $(ROOTDIR)/k8s/debug/kind/e2e.yaml > /tmp/e2e_modified.yaml +test -s /tmp/e2e_modified.yaml || (echo "Failed to modify e2e.yaml" && exit 1) +kind create cluster --name $(NAME)-vs --config /tmp/e2e_modified.yaml

81-85: 🛠️ Refactor suggestionAdd verification for CRD and controller installation

The installation of CRDs and controller should be verified to ensure successful deployment.

+define verify_resource + @echo "Verifying $$1..." + @kubectl wait --for=condition=established --timeout=60s crd/$$1 || (echo "Failed to install $$1" && exit 1) +endef kubectl apply -f https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/$(SNAPSHOTTER_VERSION)/client/config/crd/snapshot.storage.k8s.io_volumesnapshotclasses.yaml +$(call verify_resource,volumesnapshotclasses.snapshot.storage.k8s.io) kubectl apply -f https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/$(SNAPSHOTTER_VERSION)/client/config/crd/snapshot.storage.k8s.io_volumesnapshotcontents.yaml +$(call verify_resource,volumesnapshotcontents.snapshot.storage.k8s.io) kubectl apply -f https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/$(SNAPSHOTTER_VERSION)/client/config/crd/snapshot.storage.k8s.io_volumesnapshots.yaml +$(call verify_resource,volumesnapshots.snapshot.storage.k8s.io) kubectl apply -f https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/$(SNAPSHOTTER_VERSION)/deploy/kubernetes/snapshot-controller/rbac-snapshot-controller.yaml kubectl apply -f https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/$(SNAPSHOTTER_VERSION)/deploy/kubernetes/snapshot-controller/setup-snapshot-controller.yaml +kubectl -n kube-system wait --for=condition=ready pod -l app=snapshot-controller --timeout=60s || (echo "Snapshot controller not ready" && exit 1)

95-97: 🛠️ Refactor suggestionAdd readiness check for metrics-server

Replace the sleep command with a proper readiness check.

@make k8s/metrics/metrics-server/deploy helm upgrade --install --set args={--kubelet-insecure-tls} metrics-server metrics-server/metrics-server -n kube-system -sleep $(K8S_SLEEP_DURATION_FOR_WAIT_COMMAND) +kubectl wait --for=condition=available deployment/metrics-server -n kube-system --timeout=60s || (echo "metrics-server not ready" && exit 1)

🧹 Nitpick comments (3)

Makefile.d/k8s.mk (2)

Line range hint

135-156: Improved deployment process with manifest dependency.The

k8s/vald/deploytarget now properly depends on manifest generation and includes comprehensive resource deployment with error handling.Consider adding a comment explaining why errors are permitted:

- @echo "Permitting error because there's some cases nothing to apply" + @echo "Note: Errors are permitted as some resources might already exist or be unavailable in certain configurations"

Line range hint

239-270: Enhanced readreplica deployment with proper sequencing.The

k8s/vald-readreplica/deploytarget includes several improvements:

- Proper dependency on base deployment

- Gateway cleanup and redeployment

- Pod status verification and readiness checks

However, there's a potential race condition in the pod deletion wait.

Consider adding a verification step before proceeding with gateway deletion:

kubectl delete -f $(TEMP_DIR)/vald/templates/gateway || true kubectl delete -f $(TEMP_DIR)/vald/templates/gateway/lb || true + # Verify no gateway pods are in terminating state before proceeding + while kubectl get pods -l app=vald-lb-gateway 2>/dev/null | grep Terminating; do + echo "Waiting for gateway pods to terminate..." + sleep 5 + done kubectl wait --for=delete pod -l app=vald-lb-gateway --timeout=600spkg/agent/core/ngt/service/ngt.go (1)

890-892: Add test coverage for read replica functionality.The read replica handling code is not covered by tests. Consider adding test cases to verify the behavior.

Would you like me to help generate test cases for:

- Verifying that write operations are blocked on read replicas

- Ensuring read operations work correctly on read replicas

- Testing the early return in the Start method

🧰 Tools

🪛 GitHub Check: codecov/patch

[warning] 891-892: pkg/agent/core/ngt/service/ngt.go#L891-L892

Added lines #L891 - L892 were not covered by tests

📜 Review details

Configuration used: CodeRabbit UI

Review profile: CHILL

Plan: Pro

⛔ Files ignored due to path filters (1)

hack/docker/gen/main.gois excluded by!**/gen/**

📒 Files selected for processing (17)

.github/actions/e2e-deploy-vald-readreplica/action.yaml(1 hunks).github/actions/e2e-deploy-vald/action.yaml(2 hunks).github/actions/setup-e2e/action.yaml(2 hunks).github/helm/values/values-readreplica.yaml(2 hunks).github/workflows/_detect-ci-container.yaml(1 hunks).github/workflows/e2e.yaml(2 hunks).gitignore(1 hunks)Makefile.d/e2e.mk(2 hunks)Makefile.d/k8s.mk(5 hunks)Makefile.d/kind.mk(2 hunks)Makefile.d/minikube.mk(2 hunks)Makefile.d/tools.mk(1 hunks)charts/vald/templates/_helpers.tpl(1 hunks)dockers/ci/base/Dockerfile(2 hunks)dockers/dev/Dockerfile(1 hunks)k8s/debug/kind/e2e.yaml(1 hunks)pkg/agent/core/ngt/service/ngt.go(1 hunks)

🚧 Files skipped from review as they are similar to previous changes (12)

- dockers/ci/base/Dockerfile

- Makefile.d/minikube.mk

- k8s/debug/kind/e2e.yaml

- .github/actions/e2e-deploy-vald/action.yaml

- Makefile.d/tools.mk

- Makefile.d/e2e.mk

- dockers/dev/Dockerfile

- .github/actions/setup-e2e/action.yaml

- .github/actions/e2e-deploy-vald-readreplica/action.yaml

- charts/vald/templates/_helpers.tpl

- .github/helm/values/values-readreplica.yaml

- .gitignore

🧰 Additional context used

🪛 GitHub Check: codecov/patch

pkg/agent/core/ngt/service/ngt.go

[warning] 891-892: pkg/agent/core/ngt/service/ngt.go#L891-L892

Added lines #L891 - L892 were not covered by tests

🔇 Additional comments (8)

.github/workflows/_detect-ci-container.yaml (1)

44-44: LGTM: Improved tag extraction by removing double quotes.

The addition of sed -e 's/"//g' ensures clean Docker image tag values by removing any double quotes, which is a good practice for tag handling.

.github/workflows/e2e.yaml (2)

338-338: Extended index creation wait duration for better reliability.

The wait duration for index creation has been increased from 3 minutes to 10 minutes. This change provides better reliability for the test by allowing more time for the index creation process to complete.

318-318: Infrastructure change: Switched from minikube to kind.

The change from require_minikube to require_kind aligns with the PR objective to fix the minikube start failure. Using Kind (Kubernetes in Docker) is a good choice as it's more lightweight and faster than minikube.

Run the following script to verify the infrastructure change is consistent across the codebase:

✅ Verification successful

The switch from minikube to kind is consistent across the codebase

The verification shows that there are no remaining references to minikube in any YAML files, while kind is properly configured in the codebase, particularly in:

k8s/debug/kind/e2e.yaml- Contains the kind cluster configuration for e2e testsk8s/debug/kind/config.yaml- Contains the kind cluster configuration

🏁 Scripts executed

The following scripts were executed for the analysis:

Script:

#!/bin/bash

# Description: Verify the switch from minikube to kind is consistent.

# Test 1: Check for any remaining minikube references

echo "Checking for remaining minikube references..."

rg -i "minikube" --type yaml

# Test 2: Verify kind configuration

echo "Verifying kind configuration..."

rg -i "kind" --type yaml

Length of output: 67507

Makefile.d/k8s.mk (2)

Line range hint 114-134: LGTM: Well-structured manifest generation target.

The new k8s/vald/manifests target properly generates Kubernetes manifests using Helm template with appropriate image configurations and CRDs.

273-273: LGTM: Proper dependency for readreplica deletion.

The k8s/vald-readreplica/delete target correctly depends on k8s/vald/delete ensuring proper cleanup order.

pkg/agent/core/ngt/service/ngt.go (1)

890-892: LGTM! Early return for read replicas prevents write operations.

The early return for read replicas is a good addition that prevents unnecessary index creation operations on read-only instances.

Run the following script to verify the read replica handling is consistent across the codebase:

✅ Verification successful

Early return for read replicas is consistently implemented across write operations

The early return for read replicas in the Start method is part of a consistent pattern of preventing write operations on read replicas. The codebase shows that:

- The

RegenerateIndexesmethod also has the same read replica check and returnsErrWriteOperationToReadReplica - Other write operations like

Insert,Update,Delete, etc. are protected by theIsFlushing()check which prevents concurrent modifications - The read replica check is appropriately placed at the start of methods that modify the index

🏁 Scripts executed

The following scripts were executed for the analysis:

Script:

#!/bin/bash

# Description: Verify read replica handling across write operations

# Test: Search for write operations in read replica context

# Check for write operation methods that should handle read replicas

rg -A 5 'func.*\b(Insert|Update|Delete|Create|Save|Regenerate).*\('

# Check for isReadReplica checks

rg 'isReadReplica'

Length of output: 84523

🧰 Tools

🪛 GitHub Check: codecov/patch

[warning] 891-892: pkg/agent/core/ngt/service/ngt.go#L891-L892

Added lines #L891 - L892 were not covered by tests

Makefile.d/kind.mk (2)

87-93: 🛠️ Refactor suggestion

Improve CSI driver installation robustness

The CSI driver installation process needs several improvements:

- Version should be a variable

- Error handling is missing

- Cleanup might fail silently

+CSI_DRIVER_VERSION ?= v1.15.0

+CSI_TEMP_DIR := $(shell mktemp -d)

+

+.PHONY: csi/cleanup

+csi/cleanup:

+ @if [ -d "$(CSI_TEMP_DIR)" ]; then \

+ rm -rf "$(CSI_TEMP_DIR)" || (echo "Failed to cleanup CSI temp directory" >&2; exit 1); \

+ fi

-mkdir -p $(TEMP_DIR)/csi-driver-hostpath \

- && curl -fsSL https://github.com/kubernetes-csi/csi-driver-host-path/archive/refs/tags/v1.15.0.tar.gz | tar zxf - -C $(TEMP_DIR)/csi-driver-hostpath --strip-components 1 \

- && cd $(TEMP_DIR)/csi-driver-hostpath \

- && deploy/kubernetes-latest/deploy.sh \

- && kubectl apply -f examples/csi-storageclass.yaml \

- && kubectl apply -f examples/csi-pvc.yaml \

- && rm -rf $(TEMP_DIR)/csi-driver-hostpath

+mkdir -p "$(CSI_TEMP_DIR)" || (echo "Failed to create temp directory" >&2; exit 1)

+curl -fsSL "https://github.com/kubernetes-csi/csi-driver-host-path/archive/refs/tags/$(CSI_DRIVER_VERSION).tar.gz" | \

+ tar zxf - -C "$(CSI_TEMP_DIR)" --strip-components 1 || (echo "Failed to download/extract CSI driver" >&2; $(MAKE) csi/cleanup; exit 1)

+cd "$(CSI_TEMP_DIR)" && \

+ ./deploy/kubernetes-latest/deploy.sh || (echo "Failed to deploy CSI driver" >&2; $(MAKE) csi/cleanup; exit 1)

+kubectl apply -f "$(CSI_TEMP_DIR)/examples/csi-storageclass.yaml" || (echo "Failed to apply storage class" >&2; $(MAKE) csi/cleanup; exit 1)

+kubectl apply -f "$(CSI_TEMP_DIR)/examples/csi-pvc.yaml" || (echo "Failed to apply PVC" >&2; $(MAKE) csi/cleanup; exit 1)

+$(MAKE) csi/cleanupLikely invalid or redundant comment.

17-17: Verify the snapshotter version compatibility

The external-snapshotter version is pinned to v8.2.0. Let's verify this version's compatibility with the cluster version.

✅ Verification successful

External-snapshotter version v8.2.0 is appropriate for the setup

The external-snapshotter version v8.2.0 is the latest stable version and is appropriate for use with the current setup. The implementation includes:

- Installation of all necessary CRDs and RBAC configurations

- Deployment of the snapshot controller

- Integration with CSI driver host path v1.15.0

- Proper configuration of storage classes and PVCs

🏁 Scripts executed

The following scripts were executed for the analysis:

Script:

#!/bin/bash

# Description: Check external-snapshotter version compatibility

# Get the latest stable version and compare with current version

LATEST=$(curl -s https://api.github.com/repos/kubernetes-csi/external-snapshotter/releases/latest | jq -r '.tag_name')

echo "Current version: v8.2.0"

echo "Latest version: $LATEST"

# Check compatibility matrix in README

curl -s https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/master/README.md | grep -A 5 "Compatibility Matrix"

Length of output: 370

Script:

#!/bin/bash

# Let's check the Kubernetes version used in the project and related configurations

# First, check if Kind version or K8s version is defined in the Makefile

grep -r "KIND_VERSION\|KUBERNETES_VERSION" .

# Also check for any Kind configuration files that might specify K8s version

fd -e yaml -e yml | xargs grep -l "kind:\|kubernetes:"

# Let's also check the README for any version information

cat README.md

Length of output: 33060

Script:

#!/bin/bash

# Let's check the Kind configuration and Kubernetes version used

fd -e yaml -e yml kind | xargs cat

# Also check if there are any version constraints or compatibility info in kind.mk

cat Makefile.d/kind.mk

Length of output: 4769

Script:

#!/bin/bash

# Let's check the e2e.yaml file to see the Kubernetes version used

cat k8s/debug/kind/e2e.yaml

# Also check the csi-driver-host-path version compatibility

curl -s https://raw.githubusercontent.com/kubernetes-csi/csi-driver-host-path/v1.15.0/README.md | grep -A 5 "Compatibility"

Length of output: 881

Description

Fix minikube start failure at readreplica e2e test.

Related Issue

Versions

Checklist

Special notes for your reviewer

Summary by CodeRabbit

Release Notes

New Features

Improvements

.gitignorefor Rust-related files.Bug Fixes